Attachment B: Study Design and References

Understanding States’ SNAP Customer Service Strategies (NEW)

OMB: 0584-0688

This document presents the updated study plan for Understanding States’ Supplemental Nutrition Assistance Program (SNAP) Customer Service Strategies. The objectives of the study are to describe:

How each study State defines and measures good and/or bad customer service for SNAP applicants and participants, particularly definitions and measures that go beyond the minimum requirements set by FNS.

How the State SNAP agency in each study State implements and refines its customer service approach.

The final report will present short case studies of each participating study State and will include a summary of State practices derived from a cross-site analysis of the data collected from the study States. It will also describe lessons learned and best practices, remaining knowledge gaps, and recommendations for future efforts to strengthen customer service practices in SNAP.

This study plan begins with a discussion of the study background. It then describes our research approach and provides a detailed workplan for achieving the study’s objectives. The timeline for accomplishing these tasks is also included.

Background

Providing high-quality customer service is an important but understudied factor in the success of SNAP, the largest Federal nutrition assistance program. Administering SNAP requires repeated contacts between SNAP agency staff (State and local) and customers, from initial application to case closure. The numerous interactions along this journey affect applicants’ and participants’ experiences and perceptions of the program; the application and certification processes are just the beginning of a relationship that can continue for many months or years. Poor customer service at any point could affect an applicant’s or participant’s access to the program. Examples of disrupted access have been profiled in media reports and have prompted lawsuits (Marimow 2017, Valdivia 2022). Providing effective customer service in SNAP is therefore paramount to supporting FNS’s mission to increase food security and reduce hunger.

Numerous studies have found that chief among the barriers to SNAP participation are its often-high administrative burdens, that is, factors that create significant inconveniences for participants or prospective participants. Research has documented, for example, that prospective participants often perceive application requirements as time-consuming and difficult to understand, required documentation of income and assets as burdensome and an invasion of privacy, and interactions with SNAP personnel as often unpleasant (AbuSabha et al., 2011; Bartlett & Burstein, 2004; Cody & Ohls, 2005; Gabor et al., 2002).

In the last few decades, FNS has allowed States to implement a variety of policy options, such as demonstration projects and administrative waivers, that aim to improve customer service by addressing one or more of the barriers to participation described above (Ganong & Liebman, 2018; Heflin & Mueser, 2010; Klerman & Danielson, 2009; Mabli et al., 2009; Ratcliffe, McKernan, & Finegold, 2007; Rutledge & Wu, 2014). States have adopted various modernization initiatives, such as streamlined eligibility determination processes through staff specialization, centralized call centers, and new technologies such as online client portals. Some States have moved eligibility determination functions out of local offices, limiting customers’ in-person access to eligibility staff.

Although the Food and Nutrition Act of 2008 and Federal regulations (7 CFR 272.4(a)) require that State agency staff conduct eligibility and benefit determinations, FNS has in recent years given States the flexibility to use contracted private vendor staff to perform a wider range of other eligibility functions, including some customer-facing tasks like eligibility screening and application assistance. While most of the policies mentioned above are intended to increase customer access to benefits, they often have other goals as well, such as reducing agency costs or administrative errors. The pursuit of these other goals may have unintended consequences for customer experiences (for example, through the loss of frontline workers) or for the ability of staff to provide high-quality customer service.

Despite its importance, little current, systematic information is available about how State agencies support customer service in SNAP or how they monitor it. FNS collects key metrics related to customer service, including application processing timeliness and the accuracy of eligibility and benefit determination. These metrics capture essential aspects of administering SNAP, but they do not directly measure important aspects of customer service, such as the quantity or quality of client interactions or customer or staff satisfaction. To address this information gap, FNS has engaged SPR and its partner Mathematica to conduct a study to increase knowledge of existing SNAP customer service strategies and approaches to monitoring them. By reviewing current literature on customer service, profiling varied approaches used in case study States, and highlighting lessons learned and best practices, this study will provide FNS with a greater understanding of how States approach customer service and how such efforts could be strengthened further.

Research Objectives and Study Questions

FNS has defined three major objectives for the study and posed research questions for each objective that will frame the study. Below we present the three objectives and the related research questions:

Objective 1: Describe how each study State defines and measures good and/or bad customer service for SNAP applicants and participants, particularly definitions and measures that go beyond the minimum requirements set by FNS.

1.1 How does the State SNAP agency define good and/or bad customer service? (Includes standards and expectations)

1.1a Are there specific definitions or metrics for certain subpopulations? (e.g., elderly people, people with disabilities, people with limited English proficiency)

1.2 What data/measures are collected to monitor customer service, particularly those that go beyond metrics required by FNS?

1.3 What data/measures does the State find most useful in evaluating and improving customer service, and why?

1.4 What are the sources of data to monitor customer service? (e.g., system-generated reports, complaints and feedback from SNAP applicants and participants, front-line workers, community partners, etc.)

1.5 Does the State use any frameworks or models to inform its customer service approach?

Objective 2: For each study State, describe how the State SNAP agency implements and refines its customer service approach.

2.1 Who is responsible for monitoring and implementing the State’s customer service strategy?

2.2 How/by whom are measures, expectations, or standards for customer service developed?

2.3 What are the “touchpoints” where customer service is measured or standards are implemented (e.g., call center interactions, State website or online application, eligibility interviews, office visits)?

2.4 Does the State agency have the means to monitor equity in customer service outcomes? In other words, does the State track customer service metrics for subpopulations, like the elderly, people with disabilities, and people with limited English proficiency?

2.5 How are front-line staff trained in customer service standards and expectations?

2.6 How do customer service measures, standards, and expectations factor into employee performance assessments?

2.7 How have customer service metrics or standards been used to improve customer service?

2.8 With whom are results from monitoring customer service shared?

2.9 What are the facilitators of and barriers to collecting customer service data?

2.10 What are the facilitators of and barriers to implementing customer service standards or expectations?

2.11 How and in what circumstances does the State refine customer service metrics (for example, based on feedback or in response to process improvements and modernization efforts)?

Objective 3: Describe the current research and documentation available about customer service standards and measurement broadly, with a particular focus on government programs and safety net programs.

3.1 What customer service standards are being used in government programs, particularly safety net programs?

3.1a How could these standards be applied to SNAP?

3.2 How are customer service standards measured in government programs, particularly safety net programs?

3.2a How could these measures be applied to SNAP?

3.3 What customer service best practices and frameworks used in private industry could be applicable to SNAP? This may include a review of third-party apps that provide information about SNAP or facilitate SNAP applications.

3.4 What impact does customer service have on government program performance?

3.5 What customer service best practices for government programs, particularly safety net programs, are identified in the literature?

To explore these research questions and achieve our objectives, we will employ four main research methods: a comprehensive literature review; a review of State SNAP agency documents and data systems; interviews with SNAP staff and stakeholders; and observations of staff interactions with customer service systems.

The literature review will be conducted early in the study. It will help frame customer service components and practices and will highlight research findings on program outcomes related to those components. The State document and data systems review will build on these findings and allow the team to develop a State selection index that will include States’ SNAP structure, approaches to customer service, indicators of program performance, and FNS Region. The team will use data from the review and the index to select case study States. The review will entail examining extant information from State agency and other websites and any publicly available documents on customer service approaches and monitoring strategies.

Interviews with SNAP and key partner staff (e.g., local anti-hunger organizations or other outreach partners) in case study States will elicit important information on how States approach customer service in SNAP, including across the multiple dimensions of the study’s framework, such as strategy, operations, and funding. The research team will interview SNAP staff at the State level as well as at the regional and local levels (e.g., county-level staff). The research team will also conduct interviews with key partner staff, such as staff from food banks and other food security organizations, who interface with frontline SNAP staff and participants. Finally, recognizing the importance of a customer-centered approach, site visits will include observations of SNAP staff interacting with their client management software and discussions with them about ease of use and pain points that affect efficient customer service.

Overview of Technical Approach

Emerging research suggests that, while a focus on customer service is beneficial, this approach should be expanded to place the participants engaging in public services at the center of program operations. A customer-centered approach is a step forward because it views public service clients as active participants and co-designers of public services, rather than simply recipients of services (Grönroos, 2019). In addition, without placing the participant at the center of their operations and examining customer experience overall, government agencies cannot achieve their missions (Osborne & Strokosch, 2021). Since FNS’ mission is that “No American should have to go hungry”, a customer-centered approach to service delivery should focus on how participants use SNAP to achieve that goal, and what the program can change to empower participants to achieve food security.

This customer-centered approach has become an important component of policy. In late 2021, the Biden-Harris administration issued an Executive Order titled “Transforming Federal Customer Experience and Service Delivery to Rebuild Trust in Government”, which directed “Federal agencies to put people at the center of everything the Government does.” Specifically, the Order includes 36 customer experience (CX) improvement commitments across 17 Federal agencies, all of which aim to improve people’s lives and the delivery of Government services (White House, 2021). Moreover, FNS is listed as one of 35 “high-impact service providers.”

To support a systematic exploration of customer service in SNAP, we propose a draft conceptual framework to guide the study. This framework, which was created by the U.S. Department of Veterans Affairs and the U.S. Digital Service, accounts for a wide range of factors potentially influencing customer experience initiatives (Veteran Affairs and U.S. Digital Service, 2020). This framework will anchor our entire study, beginning with our approach for the literature review, continuing with the case study selection, and ending with case study data analysis and reporting. We will update this framework with information gathered during the study and adapt it for FNS. The result will be a conceptual model that FNS and State agencies can use to analyze, monitor, and improve SNAP customer service initiatives and processes. The research team will use the framework to organize lessons learned and best practices, indicate remaining knowledge gaps, and help inform future efforts to strengthen customer service practices in SNAP.

Consistent with a customer-centered approach, our framework (Exhibit 1) puts customers (meaning SNAP applicants and participants) at the center of efforts to improve customer service. SNAP customers are not a monolithic group, however; previous research on SNAP access has shown that particular groups experience more barriers than others. Thus, it will be important to assess how customer experience improvement efforts may affect different groups and whether they might affect the equity of benefit access. The model also centers frontline workers, who are responsible for carrying out many customer service improvement efforts. Our experience in evaluating SNAP policies designed to increase access for vulnerable populations (Levin et al., 2020) tells us that modernization policies aimed at creating a better user experience can end up burdening already-overloaded frontline workers, which then works against the very goal of the policy. Therefore, it will be important to assess the extent to which customer experience-focused efforts result in a better experience for frontline staff.

Exhibit 1. U.S. Veterans Affairs and U.S. Digital Service Framework

Other important features of the framework are:

Strategy: What are the agency’s customer service initiatives? Do they have buy-in from multiple levels of SNAP operations staff, such as State and local administrators, local supervisors and frontline staff?

Operations: Are customer experience indicators built into agencywide performance metrics?

Funding: Are customer experience improvement efforts properly funded, and how?

Organization: Are there customer experience-focused staff positions at various levels within the organization, such as at the State and county levels?

Culture: Is customer experience included as an agency core value? How is this reinforced? Are local supervisors and frontline staff involved in designing customer experience solutions?

Incentives: What recognition (including increased compensation or release time) is there for employees who promote a customer experience agenda?

Partnerships: Is there collaboration and sharing of best practices from experts inside and outside of government, including people with lived experience applying for and using SNAP?

Capabilities: Do agency staff have the time, information, and tools to provide positive customer service? Does the agency have the right tools (e.g., customer experience surveys, access to data analytics and artificial intelligence) to monitor customer service performance?

The framework presented above will inform the entire research study. Its categories will also guide the creation of coding systems for the literature review (Task 2) and of interview protocols and document review protocols (Task 4). This, however, is a draft framework, and the team expects to refine it periodically based on ongoing data analysis and our evolving understanding of the issues. For example, the literature review may suggest additional categories to consider or ways in which existing categories could be combined.

Detailed Work Plan

To meet the study’s objectives, we have developed a work plan for the remaining tasks. Our approach to carrying out these tasks and the deliverable(s) associated with each are described below.

Task 2: Conduct Research Review

To conduct the research review, we will use a multi-step process, relying on the Internet as the primary vehicle for our search. We will create a preliminary list of relevant studies, reports, and data by examining a variety of sources to ensure that all pertinent literature is included in the review and searching by topical areas. Our review will include three main sources of data: (1) materials focused on government safety net programs, such as SNAP and TANF, (2) broader industry research as it relates to client relations and safety net programs, and (3) innovations and promising practices related to customer service.

Initially, we will take a broad approach to our research review, noting all studies related to customer service across government programs. We will use citation management software, so that we can quickly and easily add articles to a list for consideration. From there, we will review summaries of the studies to refine the literature and derive a final list for inclusion in our analysis. This list will likely contain around 50 studies that examine customer service components and practices in detail. Importantly, SPR team members will document the findings for impact and outcomes studies in which customer service components are associated with increased enrollment, retention, and participant outcomes.

The selected studies will be added to our database, and we will begin to develop detailed categorization and coding to document the service components and participants included in the study, the research methods, and the study findings. Our citation management software will ensure consistency in our citations and provide the foundational list of sources. Categories we will use to describe the studies themselves include industry or program(s) represented, target population(s) addressed, research questions addressed, geographic location(s), and type of publication.

The coding process will be iterative, and our team’s researchers may discover new themes in the process of conducting the work. As such, we will start with a set of pre-established themes and add new ones as we code. We will schedule short check-ins with FNS as we work through the coding process to discuss preliminary findings and any challenges. Ultimately, we will provide FNS with an Excel-based annotated bibliography of all sources reviewed.

We will prepare Deliverable 2.1 Draft Review List and submit it to FNS on December 5, 2022. We will incorporate FNS comments and submit Deliverable 2.2 Final Review List on January 17, 2023. We will submit Deliverable 2.3 Draft Annotated Bibliography on February 3, 2023, and Deliverable 2.4 Final Annotated Bibliography on March 6, 2023. The annotated bibliography will include each study’s title, author, major findings, publication date, and publication source, as well as a brief abstract. We will also append classifications from our coding, such as the component type, research method, and population. A final version of the annotated bibliography will be included as an attachment to the Final Report.
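As an illustration only, the record layout described above could be sketched as follows. The field names mirror the items listed in the text, but the class name, the function name, and the choice of CSV (a format Excel can open) are assumptions made for this sketch, not the study team's actual tooling. The single entry shown is a placeholder, not a real source.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields


@dataclass
class BibliographyEntry:
    """One row of the annotated bibliography (hypothetical layout)."""
    title: str
    author: str
    major_findings: str
    publication_date: str
    publication_source: str
    abstract: str
    # Classifications appended from the coding process
    component_type: str
    research_method: str
    population: str


def to_csv(entries):
    """Serialize entries to CSV text with one header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=[f.name for f in fields(BibliographyEntry)]
    )
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))
    return buffer.getvalue()


# Placeholder entry for illustration; not a real study.
example = BibliographyEntry(
    title="Placeholder title",
    author="Placeholder author",
    major_findings="n/a",
    publication_date="n/a",
    publication_source="n/a",
    abstract="n/a",
    component_type="n/a",
    research_method="n/a",
    population="n/a",
)
csv_text = to_csv([example])
```

Keeping the coding classifications in the same row as the citation fields would let reviewers filter the spreadsheet by component type, research method, or population.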

Task 3: Selection of Case Study States

To produce results applicable to the broadest range of States, case studies will include States with diverse approaches to supporting and monitoring customer service in SNAP. Because client experience is affected greatly by how States administer the program, we will also include States with diverse approaches to operating the program overall (e.g., States where the program is run at the county level and States where it is run at the State level). To select case study States that include this needed diversity, we will begin with a review of customer service strategies, then develop a State Selection Index that will include States’ SNAP structure, approaches to customer service, indicators of program performance, and FNS Region. We will use data from the review and the index to select up to 9 States. Below we elaborate on each of these steps.

Review of Customer Service Strategies

We will use information from the research review in Task 2 on how States approach customer service as a starting point for mapping the range of approaches to customer service. We will augment this with a review of extant information from State agency and other websites and any publicly available documents on customer service approaches and monitoring strategies. This information will include, for example, any public State standards or measures on customer wait times in local offices, call center metrics (such as hold times and percentage of calls abandoned), the presence of staff or customer satisfaction surveys, processes for receiving and handling customer complaints, and any available documentation of staff training resources or requirements related to customer service. We will then hold discussions with staff from FNS headquarters and Regional Offices to gather staff knowledge on how States approach and monitor customer service as well as any indications of State interest in participation. We will also conduct discussions with one or more key stakeholders, such as Code for America, that work to enhance online access to SNAP for applicants and participants. We will synthesize this information in a clear, well-organized memo describing the known range of SNAP customer service approaches and submit it as Deliverable 3.1 Memorandum on States’ Customer Service Strategies on February 10, 2023.

Development of State Selection Index

We will augment information on States’ customer service approaches with information on SNAP administration more broadly, as well as indicators of SNAP customer service performance, to support a careful selection of case study States. We propose creating an index that catalogs important features of State SNAP administration, focusing on aspects likely to influence customers’ experience, such as (1) State versus county administration, (2) use of call centers and telephone interviews, (3) availability of online client portals, (4) the extent of coverage of local offices open to the public, (5) availability of same-day service and self-service via kiosks in local offices, (6) extended local office hours, (7) transaction- or process-based versus case-based eligibility processing, (8) use of electronic case records, (9) administering eligibility for other programs along with SNAP via shared application and/or eligibility staff, and (10) use of contracted private vendor staff in customer-facing roles. The index will also include any known features of States’ approaches to customer service, indicators of program performance (including application processing timeliness, error rates, and local office wait times or call center hold times, if known), and the FNS Region.

This index will support a preliminary plan for selecting up to 9 case study States, by stratifying States across multiple implementation features of customer service design, which we will present in a second memo to FNS, Deliverable 3.2 Draft Case Study State Selection Memo, on March 3, 2023. This memo will include recommendations for States to select as well as a list of alternates and will describe how the recommended States would represent geographic diversity and variation in approaches to administering SNAP and in promoting and monitoring customer service.
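The stratification step can be pictured with a minimal sketch. Everything here is hypothetical: the function name, the row layout, the feature values, and the round-robin rule for drawing across strata are assumptions made for illustration, and the "State A" through "State E" rows are placeholders rather than real index data. The actual selection will rest on the team's and FNS's judgment, not on a mechanical rule.

```python
from collections import defaultdict
from itertools import zip_longest


def select_states(index, strata_keys, max_states=9):
    """Group index rows into strata, then draw across strata in turn.

    Returns (recommended, alternates) as two lists of State names.
    """
    strata = defaultdict(list)
    for row in index:
        stratum = tuple(row[key] for key in strata_keys)
        strata[stratum].append(row["state"])
    # Round-robin: first State from each stratum, then the second, and so on,
    # so that no single stratum fills the recommended list.
    ordered = [
        state
        for round_ in zip_longest(*strata.values())
        for state in round_
        if state is not None
    ]
    return ordered[:max_states], ordered[max_states:]


# Placeholder index rows; feature names and values are illustrative only.
index = [
    {"state": "State A", "administration": "state", "fns_region": "Region X"},
    {"state": "State B", "administration": "county", "fns_region": "Region X"},
    {"state": "State C", "administration": "state", "fns_region": "Region Y"},
    {"state": "State D", "administration": "county", "fns_region": "Region Y"},
    {"state": "State E", "administration": "state", "fns_region": "Region X"},
]
selected, alternates = select_states(index, ["administration"], max_states=3)
```

With these placeholder rows, the draw alternates between State-administered and county-administered programs, and the States left over after the cap become the alternates list.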

State Selection and Recruitment

We will develop recruitment materials to promote State participation, including a study description that explains the study’s purpose and methods. The description will highlight the importance of identifying best practices in SNAP customer service and will underscore the benefits to participating States. These include gaining a deeper understanding of how customer service works in practice in their own systems and receiving lessons learned and best practices informed by their operations. We will also prepare a frequently asked questions document to provide additional context for prospective interview participants as well as email and call scripts to support a professional, consistent recruiting effort. These materials will be prepared for inclusion in the OMB package and will be submitted initially as Deliverable 3.3 Draft Recruitment Materials on March 3, 2023.

After OMB approval of materials and final selection of States by FNS, recruitment of States will begin with a letter, sent by email, from FNS to its Regional Offices identifying the States selected for the study and describing the recruitment process. FNS will then email the selected States, after which the research team will reach out to the States directly. To promote efficiency and streamline communication, SPR staff will recruit all States. Shortly after the FNS email is sent to a State, SPR staff will send an introductory email to the State SNAP director that contains a basic introduction to the study, a formal project description, a letter of support from FNS, and a request to schedule a phone call to discuss the study further.

SPR staff will then schedule a virtual meeting with the State contact to provide an overview of the study, respond to any questions the State contact may have, and discuss the State’s potential participation. If the State agrees to participate, it will be assigned a liaison who will set up a meeting to schedule the site visit with appropriate staff. If the State declines to participate, the Project Director will communicate with FNS staff and move on to the next alternate State on the list.

Through each step of the recruitment process, mitigation strategies will be incorporated to minimize the risk of State refusal to participate. First, in anticipation that some States may decline participation for various reasons, we will identify with FNS several alternate States so that delays do not occur in the recruitment process. Further, recruitment materials and study processes will be developed to minimize staff burden and communicate the importance of the study. Throughout the State recruitment process, the Project Director will provide concise weekly reports to FNS on task progress and will adjust course as we receive guidance from FNS (Deliverable 3.4 Weekly Case Study State Selection Updates).

Task 4: Develop Data Collection Instruments

The team will craft data collection procedures and instruments for collecting in-depth qualitative data from State, county, and local SNAP staff on standards and measures of SNAP customer service across States. We will develop a primary interview guide based on the research questions and informed by the study’s conceptual framework, which will be refined based on the information gathered in Tasks 2 and 3. For example, we will include questions about the customer service strategies found most promising in the research review and will also address any specific challenges uncovered by that task. We will also incorporate and emphasize questions about the topics FNS identified as of interest during the orientation meeting, such as different modes of interaction between participants and staff, the employee experience, and alternative measures of customer service beyond application timeliness and accurate processing of eligibility determinations.

The primary interview guide will be comprehensive, including questions for use in all interviews. Site visitors will adapt the primary guide to tailor questions for specific respondents in each State. As part of the equity lens we bring to our work, the interview guide will reflect a thorough review of language to ensure sensitivity around how items are worded and how questions are posed to respondents. Instruments will also include a guide for deskside observations of SNAP staff as they work cases and provide customer service. The Draft Instruments and Procedures (Deliverable 4.1) will be submitted to FNS for review on January 27, 2023. After receiving review comments from FNS, we will submit Final Instruments and Procedures (Deliverable 4.2) on March 3, 2023.

We will pre-test the interview guide during the weeks of February 27 and March 6, 2023, with three interviews, each with a different respondent type. Conducting three separate interviews will help us confirm that respondents understand the phrasing and content of the questions and determine whether questions need to be added or removed. We will work with FNS to identify one State in which we can test the guide in one State-level SNAP administrator interview, one local SNAP office interview, and one stakeholder interview. Once the State is selected, we will reach out to the State SNAP director to arrange the pre-test and identify respondents. Each interview will include one or two respondents of a similar type (typically the interview will be conducted with one respondent, but we can accommodate up to two individuals if the agency or organization prefers, for example if someone is newer to the position). We will conduct the pre-tests over the phone, with one researcher asking the questions and another listening and taking notes. At the end of each interview, we will debrief with the respondent(s) using a set of open-ended questions and probes to gather feedback on the interview guide and questions. We plan for each of the three interviews to last 75 minutes, including the debrief discussion.

After completion of the pre-tests, we will revise the interview guide and write the Pre-test Memorandum (Deliverable 4.3), which will summarize the revisions made in response to the pre-test and include the revised instruments. We will submit the memorandum by March 24, 2023. We will make any needed final revisions to the instruments in response to FNS review comments. We will work with FNS to expedite this process, to the extent possible, so that OMB-Ready Instruments (Deliverable 4.4) can be delivered to FNS by April 7, 2023.

Task 5: Develop OMB ICR Package

The Draft Federal Register 60-day Notice (Deliverable 5.1) will be prepared by the team and submitted to FNS for review on March 10, 2023. After receiving and responding to feedback from FNS, we will submit the Final Federal Register 60-day Notice (Deliverable 5.2) to FNS on April 7, 2023. At the same time, we will submit the Draft OMB ICR package to FNS (Deliverable 5.3). The initial submission will include a supporting statement, the OMB-ready data collection instruments, revised drafts of recruitment letters and scripts, informed consent procedures, and all other required elements and appendices. It will also include specific procedures for virtual interviews, in case public health conditions at the time of the planned site visits prevent in-person interviews.

At the end of the 60-day period, we will summarize all public comments received in response to the Federal Register Notice and will draft responses to each comment to be sent by FNS as needed, including a summary of the actions taken to address the comments. A summary of the public comments will be included in the Supporting Statement and the comments will be provided as an appendix to the OMB package.

Throughout the 52-week OMB review period, our team will respond to each level of review within FNS and after submission to OMB. We will participate in any conference calls requested to present the study to OMB or respond to OMB comments. The OMB Package will be considered final (Deliverable 5.4) upon approval by OMB. If we receive OMB approval sooner or later than anticipated, we will work closely with FNS to discuss strategies for proceeding with data collection and revising timelines.

Task 6: Train Data Collectors

Our team will develop a training plan and materials and train site visit data collectors to ensure that they collect high quality and consistent data. Shortly before the OMB package is expected to be released, we will schedule the training, with a minimum of three weeks advance notice to FNS, should FNS staff want to participate in the trainings as observers. The study team will ensure flexibility in scheduling so that the training can be held earlier if OMB approval comes more quickly than anticipated. We will submit Deliverable 6.1 Draft Data Collector Training Plan at that time and submit Deliverable 6.2 Final Data Collector Training Plan, including changes addressing FNS’ comments on the draft plan, in advance of the training.

Trainings will be virtual (using Zoom or similar technology) and will last four hours. We will provide all data collectors with background information and an overview of the study, the study objectives and research questions, and the data collection activities. To ground their understanding of the work, data collectors will hear a summary of customer service models, best practices, and challenges as identified in Task 2, the research review. Specific research resources found to be especially relevant will also be shared. Trainings will cover procedures for identifying respondents and scheduling visits, interviewing techniques, taking notes, addressing potential challenges, and post-visit activities. Training will also cover equity issues in interviewing, such as awareness of power dynamics, the importance of the language used, and openness to other ways of thinking and to following new paths of discovery. We will model exemplary interviewing techniques for data collectors using site visit protocols, and site visitors will practice asking interview questions through role plays to demonstrate mastery of the protocols and interviewing techniques.

Within two weeks of the conclusion of data collector training, we will submit Deliverable 6.3, Data Collector Training Memorandum, describing the training, the issues encountered and how they were addressed, and the results of the quality control processes used to ensure data collectors are ready to begin collecting data for the study.

Task 7: Collect and Analyze Case Study Data

The research team will collect case study data during two-day in-person site visits to each State that will include interviews with State, regional and local SNAP staff and key stakeholders, review of relevant documents and reports, and observations of staff interactions with customer service systems. If the public health status at the time prevents in-person interviews, the site visit will be moved to a virtual platform (e.g., Zoom). Findings from these site visits will address the research questions in objectives 1 and 2 and will inform the case studies. Below we describe the three main sources of case study data as well as our plans for analyzing each source: (1) interviews, (2) document review, and (3) user experience observations.

Interviews with SNAP State, regional and local staff, and stakeholders

This study focuses on State customer service efforts in SNAP, including how States use data to measure key customer service metrics and ensure continuous improvement, with the goal of improving customer experiences and increasing SNAP enrollment. Therefore, we plan to conduct up to 10 interviews of State and local SNAP administrative and frontline eligibility staff in each State, using the approved final data collection instruments. In addition, site visitors will seek to conduct up to two interviews with State Ombudspersons, SNAP outreach providers, or advocates who work with SNAP applicants and participants to enhance program access. We anticipate conducting up to 12 interviews with respondents for each State, with a maximum of 108 interviews across nine States. With participant consent, interviews will be audio-recorded; recordings will be transcribed verbatim.

Document review

Before and during the site visit, we will collect available State data documenting the implementation and measurement of SNAP customer service standards. We will inquire about gaining access to any aggregate reports and data summaries from the following sources: State performance reporting systems, including call center metrics where available; SNAP Quality Control and Error rates systems; and application timeliness data. Other documents may include State training materials for customer service, customer service bill of rights, frameworks for customer service, and others as available. The review and analysis of these documents will offer key insights about how each State developed and carried out its customer experience improvement projects.

User experience observations

While on-site, the research team will observe SNAP staff interacting with their client management software and ask them about ease of use, pain points that hinder efficient customer service, and any workarounds they have developed to address them. We will synthesize findings from these observations with qualitative data from staff interviews on how staff perceive the performance of their State’s client management software and customer-facing online tools. Examples of interview questions on this topic will include, “What are some of the clear system-related issues that participants and applicants identify? Which specific step in the process is related to these issues? Do they stop them from completing their tasks?”

Site visit data analysis

After completing the site visits, we will analyze the data collected to (1) explore how each case study State defines and measures good and/or bad customer service for SNAP applicants and participants; and (2) describe how the State SNAP agency implements and refines its customer service approach. We will organize the evidence from each data source, including interviews, observations, and documents, to ensure that findings depend on mutually confirming lines of evidence. The site visit teams will upload their finalized site visit notes into an NVivo database, organized by discussion guide question, which the study team will then use for analysis. If we identify any gaps in the data during this process, the site visit team will follow up with respondents by telephone or email to ensure data accuracy and completeness.

Data will be analyzed using content analysis based on the study framework, where themes will be identified, coded, and linked to capture the diverse views of study participants. A code book will be created to guide the coding of data to ensure uniformity among multiple coders. The basic structure of the code book will mirror the study’s conceptual scheme (strategy, operations, funding, etc.), but new codes will be created as data are sorted and categorized. Data will be analyzed across States as well as by other defining characteristics (e.g., geographies). This analysis will inform our update to the study framework and identification of best practices in SNAP customer service.

During the data collection phase, we will provide weekly data collection status updates to FNS (Deliverable 7.3). Upon completion of the site visits, we will send a memorandum describing the data collection for FNS review (Deliverable 7.1). We will submit a final case study memorandum (Deliverable 7.2), with revisions to address any comments from FNS.

Task 8: Final Report

Our team will prepare and submit a report to FNS, in draft, revised draft, and final versions (Deliverables 8.1, 8.2 and 8.3). This final report will address the research objectives as described in the final study plan and will present our finalized conceptual model that FNS and State agencies can use to analyze, monitor, and improve SNAP customer service initiatives and processes. In addition to short case studies of each of the nine States, the report will also include a summary of State practices derived from a cross-site analysis of the data collected from the nine States that will include lessons learned and best practices, remaining knowledge gaps, and recommendations for future efforts to strengthen customer service practices in SNAP.

Final Report Elements

A brief executive summary

A full technical report that includes:

An introduction and background to the project

The study objectives

A discussion of study methodology

A brief description of the States studied

Detailed findings from the case studies and research review

A detailed discussion of policy implications, lessons learned, best practices, and any limitations

A summary, including overall study findings, general lessons learned, best practices, and recommendations

Technical appendices to fully document all technical specifications and analytic procedures

The final report will be written for a broad, non-technical audience and will be visually engaging and easy to read using iconography and graphics. The first draft will be provided to FNS for comment and review in electronic form using Microsoft Word. Upon receiving FNS comments, we will revise the draft final report addressing all comments by reviewers and submit in electronic form a revised draft final report. The final report will incorporate any additional comments or revisions, including those raised at the study briefing.

Our team will ensure that the report is accessible for all users by using a report format that is consistent with the requirements of the most recent version of the Government Printing Office Style Manual as well as the USDA Visual Standards Guide. Furthermore, along with five bound hard copies, the electronic version will be submitted in Microsoft Word and PDF formats that meet Section 508 accessibility standards.

Task 9: Prepare and Submit Data Files

At the time of submission of the draft final report, we will provide FNS with copies of interview transcripts (with personally identifiable information removed) used to analyze the qualitative data, including a crosswalk of the codes/nodes that were used to inform responses to the research questions (Deliverable 9.1 Draft Data Files and Documentation). All data files will be provided as Excel or PDF documents. We will also submit to FNS final versions of all data files and documentation that incorporate changes necessitated by FNS comments on the draft documents or revisions to the analyses included in the final report (Deliverable 9.2 Final Data Files and Documentation).

Task 10: Final Briefing

Our team will conduct a briefing/presentation for FNS managers and staff at the end of the contract. This briefing will be conducted either virtually or in-person at a location identified by FNS. The presentation will include slides that provide an overview of the study objectives, methodology, major findings and limitations, and conclusions. The presentation will be designed with an eye to including the appropriate level of detail to convey the key findings without overwhelming the audience. The briefing will include ample time for questions and discussion by audience members. Visually engaging materials (light on text and high on graphics) in PowerPoint will be prepared and submitted to FNS for review two weeks prior to the presentation (Deliverable 10.1). An electronic copy of the final presentation, incorporating FNS feedback, will be emailed to the COR at least two days before the briefing (Deliverable 10.2). Feedback from the briefing will be incorporated into the final version of the report.

Task 11: Submit Monthly Progress Reports

We will produce monthly progress reports that include the task order title, number, award date, period of performance covered by the report, and number in the sequence of monthly reports. The report will describe activities (by subtask) that were carried out during the reporting period, descriptions of and potential solutions to technical or contractual issues (and any delays that have been encountered), descriptions of planned activities (by subtask) and next steps for the next reporting period, an updated timeline and schedule, including a table displaying all project deliverables (by subtask) and their due dates, and the dates the deliverables were accepted by the COR. The Project Director will notify the COR by email or phone of any project-related delays and will also be available for monthly phone calls to discuss research progress and answer questions from FNS staff. All monthly progress reports will be submitted in electronic format directly to the FNS COR by the fifteenth day of the month.

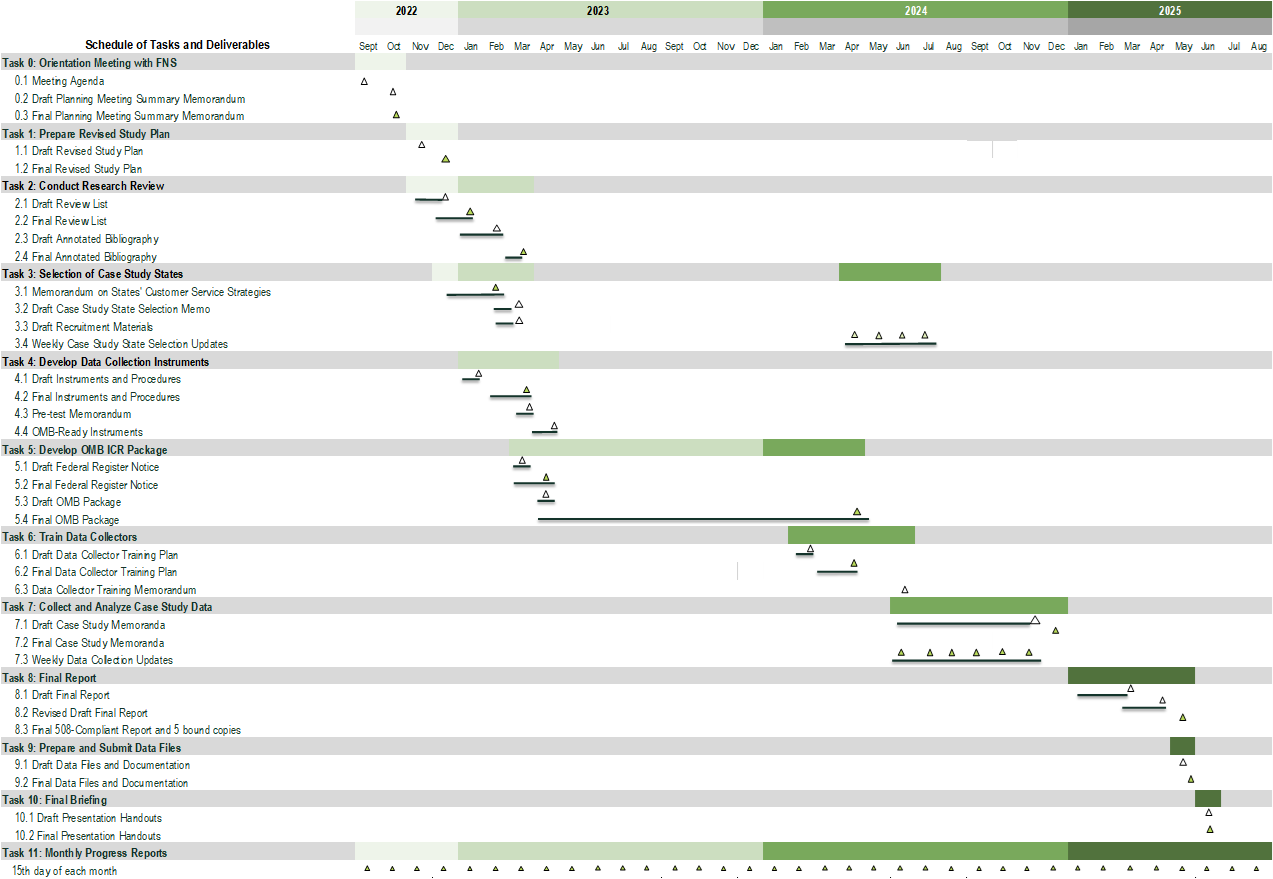

Study Schedule of Tasks and Deliverables

| Task | Deliverable | Due Date |
|---|---|---|
| 0 | Orientation Meeting with FNS | |
| | 0.1 Meeting Agenda | Completed September 23, 2022 |
| | 0.2 Draft Planning Meeting Summary Memorandum | Completed October 7, 2022 |
| | 0.3 Final Planning Meeting Summary Memorandum | Completed October 13, 2022 |
| 1 | Prepare Revised Study Plan | |
| | 1.1 Draft Revised Study Plan | Completed November 15, 2022 |
| | 1.2 Final Revised Study Plan | December 30, 2022 |
| 2 | Conduct Research Review | |
| | 2.1 Draft Review List | Completed December 5, 2022 |
| | 2.2 Final Review List | January 10, 2023 |
| | 2.3 Draft Annotated Bibliography | January 27, 2023 |
| | 2.4 Final Annotated Bibliography | February 27, 2023 |
| 3 | Selection of Case Study States | |
| | 3.1 Memorandum on States’ Customer Service Strategies | February 10, 2023 |
| | 3.2 Draft Case Study State Selection Memo | March 3, 2023 |
| | 3.3 Draft Recruitment Materials | March 3, 2023 |
| | 3.4 Weekly Case Study State Selection Updates | Weekly throughout State selection expected to begin in May 2024 |
| 4 | Develop Data Collection Instruments | January – March 2023 |
| | 4.1 Draft Instruments and Procedures | January 27, 2023 |
| | 4.2 Final Instruments and Procedures | March 3, 2023 |
| | 4.3 Pre-test Memorandum | March 24, 2023 |
| | 4.4 OMB-Ready Instruments | April 7, 2023 |
| 5 | Develop OMB ICR Package | |
| | 5.1 Draft Federal Register Notice | March 10, 2023 |
| | 5.2 Final Federal Register Notice | April 7, 2023 |
| | 5.3 Draft OMB Package | April 7, 2023 |
| | 5.4 Final OMB Package | Out of OMB April 2024 |
| 6 | Train Data Collectors | |
| | 6.1 Draft Data Collector Training Plan | February 2024 |
| | 6.2 Final Data Collector Training Plan | April 2024 |
| | 6.3 Data Collector Training Memorandum | June 2024 |
| 7 | Collect and Analyze Case Study Data | |
| | 7.1 Draft Case Study Memoranda | November 2024 |
| | 7.2 Final Case Study Memoranda | December 2024 |
| | 7.3 Weekly Data Collection Updates | Weekly throughout data collection expected to begin in June 2024 |
| 8 | Final Report | |
| | 8.1 Draft Final Report | March 2025 |
| | 8.2 Revised Draft Final Report | April 2025 |
| | 8.3 Final 508-Compliant Report and 5 bound copies | May 2025 |
| 9 | Prepare and Submit Data Files | |
| | 9.1 Draft Data Files and Documentation | May 2025 |
| | 9.2 Final Data Files and Documentation | May 2025 |
| 10 | Final Briefing | |
| | 10.1 Draft Presentation Handouts | June 2025 |
| | 10.2 Final Presentation and Handouts | June 2025 |
| 11 | Monthly Progress Reports | |

Study Schedule Gantt Chart

References

AbuSabha, R., Shackman, G., Bonk, B., & Samuels, S. (2011). Food security and senior participation in the Commodity Supplemental Food Program. Journal of Hunger & Environmental Nutrition, 6(1), 1–9. Retrieved from https://doi.org/10.1080/19320248.2011.549358

Bartlett, S., & Burstein, N. (2004). Food Stamp Program access study: Eligible nonparticipants. Abt Associates Inc. Retrieved from http://abtassociates.com/reports/efan03013-2.pdf

Cody, S., & Ohls, J. (2005). Evaluation of the USDA Elderly Nutrition Demonstrations: Volume I, evaluation findings. Washington, D.C.: Mathematica Policy Research. Retrieved from http://www.mathematica-mpr.com/~/media/publications/PDFs/reachingoutI.pdf

Gabor, V., Williams, S. S., Bellamy, H., & Hardison, B. L. (2002). Seniors’ views of the Food Stamp Program and ways to improve participation–focus group findings in Washington state. U.S. Department of Agriculture, Economic Research Service. Retrieved from http://162.79.45.195/media/1772673/efan02012.pdf

Ganong, P., & Liebman, J. B. (2018). The decline, rebound, and further rise in SNAP enrollment: Disentangling business cycle fluctuations and policy changes. American Economic Journal: Economic Policy, 10(4), 153-76.

Grönroos, C. (2019). Reforming public services: does service logic have anything to offer?. Public Management Review, 21(5), 775-788.

Heflin, C., & Mueser, P. (2010). Assessing the impact of a modernized application process on Florida’s Food Stamp caseload. University of Kentucky Center for Poverty Research Discussion Paper Series. 52. Retrieved from http://ukcpr.org/research/snap/assessing-impact-modernized-application-process-floridas-food-stamp-caseload

Klerman, J. A., & Danielson, C. (2009). Determinants of the Food Stamp Program caseload. U.S. Department of Agriculture, Economic Research Service and RAND Corporation. Retrieved from https://naldc.nal.usda.gov/download/32849/PDF

Levin, M., Negoita, M., Goger, A., Paprocki, A., Gutierrez, I., Sarver, M., & Kauff, J. (2020). Evaluation of Alternatives to Improve Elderly Access to SNAP. Washington, DC: United States Department of Agriculture, Food and Nutrition Service.

Mabli, J., Martin, E. S., & Castner, L. (2009). Effects of economic conditions and program policy on state Food Stamp Program caseloads 2000 To 2006. Washington, D.C.: Mathematica Policy Research. Retrieved from https://www.mathematica-mpr.com/our-publications-and-findings/publications/effects-of-economic-conditions-and-program-policy-on-state-food-stamp-program-caseloads-2000-to-2006

Marimow, Ann E. “Lawsuit alleges widespread problems in District-run food stamp program.” Washington Post, August 28, 2017. https://www.washingtonpost.com/local/public-safety/widespread-problems-in-district-run-food-stamp-program-alleged-in-new-lawsuit/2017/08/28/d4cb6c9c-8bf5-11e7-84c0-02cc069f2c37_story.html?utm_term=.a1a296867850

Osborne, S. P., & Strokosch, K. (2022). Participation: Add‐on or core component of public service delivery? Australian Journal of Public Administration, 81(1), 181-200.

Ratcliffe, C., McKernan, S. M., & Finegold, K. (2007). The Effect of state Food Stamp and TANF policies on Food Stamp Program participation. Washington, D.C.: Urban Institute. Retrieved from http://www.urban.org/sites/default/files/alfresco/publication-pdfs/411438-The-Effect-of-State-Food-Stamp-and-TANF-Policies-on-Food-Stamp-Program-Participation.PDF

Rutledge, M.S. & Wu, A.Y. (2014). Why Do SSI and SNAP Enrollments Rise in Good Economic Times and Bad? Center for Retirement Research, Boston College Working Paper http://crr.bc.edu/wp-content/uploads/2014/06/wp_2014-10.pdf

Valdivia, Sebastián Martínez. “Advocates sue Missouri Department of Social Services over call center dysfunction.” KBIA, February 22, 2022 https://www.kbia.org/health-wealth/2022-02-22/advocates-sue-missouri-department-of-social-services-over-call-center-dysfunction

Veterans Affairs and U.S. Digital Service, 2020, Customer Experience Cookbook https://www.va.gov/ve/docs/cx/customer-experience-cookbook.pdf

White House, 2021. Fact Sheet: Putting the Public First: Improving Customer Experience and Service Delivery for the American People. https://www.whitehouse.gov/briefingroom/statements-releases/2021/12/13/fact-sheet-putting-the-public-first-improvingcustomer-experience-and-service-delivery-for-the-american-people/

1 In county-administered States, we will research both the State and county levels and include relevant information from both. Approaches in specific counties may be the focus in county-administered States, rather than the State as a whole. This will be reflected in the State Selection Index.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Caleb van Docto |

| File Modified | 0000-00-00 |

| File Created | 2023-11-01 |
