APEC IV OMB Part A (Revised 2-7-22)_PRAO clean


Fourth Access, Participation, Eligibility, and Certification Study Series (APEC IV)

OMB: 0584-0530

Supporting Statement – Part A

for

OMB Control Number 0584-0530

Fourth Access, Participation, Eligibility, and Certification Study (APEC IV)

February 2022

Project Officer: Amy Rosenthal

Office of Policy Support

Food and Nutrition Service

United States Department of Agriculture

1320 Braddock Place

Alexandria, VA 22314

Email: amy.rosenthal@usda.gov

Table of Contents

Part A. Justification

A.1 Circumstances that Make the Collection of Information Necessary

A.2 Purpose and Use of the Information

Information to Measure Certification Error

Information to Measure Aggregation Error

Information to Measure Meal Claiming Error

Online Application Sub-Study

A.3 Use of Information Technology and Burden Reduction

A.4 Efforts to Identify Duplication

A.5 Impacts on Small Businesses or Other Small Entities

A.6 Consequences of Collecting Information Less Frequently

A.7 Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

A.8 Comments to the Federal Register Notice and Efforts for Consultation

Consultations Outside of the Agency

A.9 Explanation of Payments and Gifts to Respondents

A.10 Assurance of Confidentiality

A.11 Justification for Questions of a Sensitive Nature

A.12 Estimates of the Hour Burden

A.13 Estimates of Other Annual Cost Burden

A.14 Estimates of Annualized Cost to the Federal Government

A.15 Explanation of Program Changes or Adjustments

A.16 Plans for Tabulation and Publication

A.17 Displaying the OMB Approval Expiration Date

A.18 Exceptions to Certification for Paperwork Reduction Act Submissions


Appendices

A Applicable Statutes and Regulations

A1 Richard B. Russell National School Lunch Act of 1946

A2 Payment Integrity Information Act of 2019 (PIIA)

A3 M-15-02 – Appendix C to Circular No. A-123, Requirements for Effective Estimation and Remediation of Improper Payments

B Data Collection Instruments

B1 SFA Request for E-Records Prior School Year (Non-CEP Schools)

B2 SFA Reminder for E-Records Prior School Year (Non-CEP Schools)

B3 SFA Request for E-Records Current School Year (Non-CEP Schools)

B4 SFA Reminder for E-Records Current School Year (Non-CEP Schools)

B5a Household Survey – English

B5b Household Survey – Spanish

B6a Household Survey Income Verification Worksheet – English

B6b Household Survey Income Verification Worksheet – Spanish

B7a Instructions to De-Identify and Submit Income Documentation – English

B7b Instructions to De-Identify and Submit Income Documentation – Spanish

B8 Application Data Abstraction Form

B9 Request for E-Records (CEP Schools for ISP Data Abstraction)

B10 Reminder for E-Records (CEP Schools for ISP Data Abstraction)

B11 SFA Meal Participation Data Request

B12 SFA Director Survey

B13 SFA Pre-Visit Questionnaire

B14 School Pre-Visit Questionnaire

B15 School Meal Count Verification Form

B16 SFA Meal Claim Reimbursement Verification Form – All Schools

B17 State Meal Claims Abstraction

B18 Meal Observation – Paper Booklet


B19 Meal Observation Pilot – Dual Camera and Paper Booklet Observations Protocol

C Recruitment Materials

C1 Official Study Notification from FNS Regional Liaisons to State CN Director

C2 SFA Study Notification Template

C3 SFA Study Notification and Data Request

C4 School Data Verification Reference Guide

C5 APEC IV FAQs (for States, SFAs, and Schools)

C6 SFA Follow-Up Discussion Guide (Study Notification and School Data Verification)

C7 Automated Email to Confirm Receipt of School Data

C8 SFA Confirmation and Next Steps Email

C9 SFA School Sample Notification Email

C10 School Notification Email

C11 SFA Follow-Up Discussion Guide (School Sample Notification)

C12 School Study Notification Letter

C13 School Follow-Up Discussion Guide

C14 School Confirmation Email

C15 School Notification of Household Data Collection

C16a Household Survey Brochure – English

C16b Household Survey Brochure – Spanish

C17 SFA Initial Visit Contact Email

C18 SFA Data Collection Visit Confirmation Email

C19 SFA Data Collection Reminder Email

C20 School Data Collection Visit Confirmation Email

C21a School Data Collection Visit Reminder Email, Including Menu Request—1 month

C21b School Data Collection Visit Reminder Email, Including Menu Request—1 week

C22a Household Survey Recruitment Letter – English

C22b Household Survey Recruitment Letter – Spanish

C23a Household Survey Recruitment Guide – Virtual Survey – English

C23b Household Survey Recruitment Guide – Virtual Survey – Spanish

C24a Household Confirmation and Reminder of Virtual Survey – English

C24b Household Confirmation and Reminder of Virtual Survey – Spanish


C25a Household Consent Form – Virtual Survey – English

C25b Household Consent Form – Virtual Survey – Spanish

C26a Household Survey Recruitment Guide – In-Person Survey – English

C26b Household Survey Recruitment Guide – In-Person Survey – Spanish

C27a Household Fact Sheet Regarding In-Person Survey – English

C27b Household Fact Sheet Regarding In-Person Survey – Spanish

C28a Household Confirmation and Reminder of In-Person Survey – English

C28b Household Confirmation and Reminder of In-Person Survey – Spanish

C29 Crosswalk and Summary of the Study Website

D Public Comments

D1 Public Comment 1

D2 Public Comment 2

E Response to Public Comments

E1 Response to Public Comment 1

E2 Response to Public Comment 2

F National Agricultural Statistics Service (NASS) Comments and Westat Responses

G Westat Confidentiality Code of Conduct and Pledge

H Westat IRB Approval Letter

I Westat Information Technology and Systems Security Policy and Best Practices

J APEC IV Burden Table

Tables

A2-1 APEC IV data collection instruments

A2-2 Comparison of APEC IV instruments to APEC III instruments

A9-1 Parent/guardian incentive amounts

A9-2 Parent/guardian incentive amounts for mode effect sub-study

Part A. Justification

A.1 Circumstances that Make the Collection of Information Necessary

Explain the circumstances that make the collection of information necessary. Identify any legal or administrative requirements that necessitate the collection. Attach a copy of the appropriate section of each statute and regulation mandating or authorizing the collection of information.

This information collection request is a reinstatement with change of a previously approved collection (Third Access, Participation, Eligibility, and Certification Study Series (APEC III); OMB Number 0584-0530, Discontinued: 10/31/2020).

The National School Lunch Program (NSLP) and the School Breakfast Program (SBP), administered by the Food and Nutrition Service (FNS) of the U.S. Department of Agriculture (USDA), are authorized under the Richard B. Russell National School Lunch Act of 1946 (42 U.S.C. 1751 et seq.) (Appendix A1). The NSLP is the second largest of the nutrition assistance programs administered by FNS. Any public school, private non-profit school, or residential childcare institution (RCCI) is eligible to participate in the NSLP or SBP and to receive federal reimbursement for meals that are served to children and meet specified nutrition standards. In fiscal year (FY) 2020, the NSLP provided nutritionally balanced meals daily to over 22 million children across nearly 100,000 public and non-profit private schools and RCCIs nationwide.1 The SBP served over 12 million children each day.2

Children attending schools that participate in the NSLP and SBP can be certified for school meals at the free, reduced-price, or paid rate, based on their household income or their household's participation in means-tested programs such as the Supplemental Nutrition Assistance Program (SNAP) or Temporary Assistance for Needy Families (TANF). Children from households with incomes below 130 percent of the federal poverty level (FPL) are certified at the free rate, children from households with incomes between 130 and 185 percent FPL are certified at the reduced-price rate, and children from households with incomes above 185 percent FPL are certified at the paid rate. In school year (SY) 2019-2020, most meals were served at the free or reduced-price rate: 76 percent of NSLP meals and 87 percent of SBP meals.3
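The tier rules in the preceding paragraph can be expressed as a small classification function. This is a minimal sketch, not FNS's certification logic. The function name and parameters are hypothetical, the caller must supply the poverty guideline (actual guidelines vary by household size and are updated annually), and treating a household exactly at 130 or 185 percent FPL as falling in the lower tier is an assumption of this sketch. Participation in a program such as SNAP or TANF is treated as conferring the free rate.

```python
def certification_tier(
    annual_income: float,
    poverty_guideline: float,
    categorically_eligible: bool = False,
) -> str:
    """Return 'free', 'reduced-price', or 'paid' for a household.

    Hypothetical helper for illustration only. `poverty_guideline` is the
    federal poverty guideline for the household's size, supplied by the caller.
    Assumption: a household exactly at 130 or 185 percent FPL falls in the
    lower (more generous) tier.
    """
    if categorically_eligible:
        # Certified through a means-tested program such as SNAP or TANF.
        return "free"
    if poverty_guideline <= 0:
        raise ValueError("poverty_guideline must be positive")
    # Compare 100 * income with percent * guideline to avoid dividing.
    if 100 * annual_income <= 130 * poverty_guideline:
        return "free"
    if 100 * annual_income <= 185 * poverty_guideline:
        return "reduced-price"
    return "paid"


# Example with a made-up guideline of $30,000 (not an official figure).
print(certification_tier(36_000, 30_000))  # 120% FPL -> free
print(certification_tier(50_000, 30_000))  # about 167% FPL -> reduced-price
print(certification_tier(60_000, 30_000, categorically_eligible=True))  # -> free
```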

The Payment Integrity Information Act of 2019 (PIIA) (P.L. 116-117)4 requires that FNS identify and reduce improper payments in the NSLP and SBP, including both underpayments and overpayments (Appendix A2). FNS relies upon the Access, Participation, Eligibility, and Certification (APEC) Study series to provide reliable, national estimates of improper payments made to school districts participating in the NSLP and SBP. Program errors fall into three broad categories:

Certification Errors: Incorrectly determining the eligibility of a student for a given level of reimbursement (free, reduced-price, or paid meals);

Meal Claiming Errors: Mistakenly approving as reimbursable a meal that does not meet the federal meal pattern requirements; and

Aggregation Errors: Incorrectly totaling the meal counts by reimbursement category.

Certification and aggregation errors contribute to improper payments, whereas meal claiming error is considered an operational error that does not result in an improper payment. Most improper payments are due to certification errors. Based on APEC III, improper payments due to certification errors amounted to 6.52 percent of NSLP payments and 6.29 percent of SBP payments, while improper payments due to aggregation errors were relatively small (less than 0.5 percent). These errors also affect households. For example, a student certified at the reduced-price level rather than the free level pays more for a meal than the law requires. Although the APEC II and III findings show substantial improvement in certain types of error since APEC I, levels of program error remain significant. The fourth study in the APEC series will continue to support FNS’s mission to reduce improper payments by estimating error rates in SY 2023-24.
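To make the link between certification error and improper payments concrete, the sketch below computes, for each student, the difference between what was reimbursed at the certified tier and what would have been reimbursed at the tier supported by verified household information. It then totals overpayments and underpayments separately. The per-meal rates are hypothetical placeholders in cents, not actual FNS reimbursement rates, and the record layout is an assumption. The actual APEC estimation procedure is more involved; for example, it must weight sampled students to represent the nation.

```python
from dataclasses import dataclass

# Hypothetical per-meal reimbursement rates in cents (placeholders, not FNS rates).
HYPOTHETICAL_RATES_CENTS = {"free": 400, "reduced-price": 360, "paid": 40}


@dataclass
class StudentRecord:
    certified_tier: str  # tier the student was certified at
    verified_tier: str   # tier supported by verified household size and income
    meals_claimed: int   # reimbursable meals claimed for the student


def improper_payment_cents(rec: StudentRecord, rates: dict[str, int]) -> int:
    """Positive = overpayment, negative = underpayment, zero = correct."""
    return rec.meals_claimed * (rates[rec.certified_tier] - rates[rec.verified_tier])


def summarize(
    records: list[StudentRecord], rates: dict[str, int] = HYPOTHETICAL_RATES_CENTS
) -> dict[str, int]:
    over = under = 0
    for rec in records:
        diff = improper_payment_cents(rec, rates)
        if diff > 0:
            over += diff
        else:
            under -= diff  # diff <= 0, so this adds its magnitude
    # Both directions count toward the gross improper payment amount.
    return {"overpayment": over, "underpayment": under, "gross": over + under}


records = [
    StudentRecord("free", "reduced-price", 100),  # overcertified
    StudentRecord("paid", "free", 50),            # undercertified
    StudentRecord("free", "free", 80),            # correct
]
print(summarize(records))  # {'overpayment': 4000, 'underpayment': 18000, 'gross': 22000}
```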

A.2 Purpose and Use of the Information

Indicate how, by whom, and for what purpose the information is to be used. Except for a new collection, indicate how the agency has actually used the information received from the current collection.

To comply with PIIA requirements, FNS needs updated, reliable measures to estimate national improper payments on a regular basis. In addition, FNS needs reliable measures to estimate program errors, both for the programs as a whole and among key subgroups, to develop technical assistance aimed at reducing error.

Results from previous APEC studies have informed strategies to reduce errors in the school meal programs, including (a) approaches to reduce certification error; (b) the implementation of training programs and professional certifications; (c) funding investments in State technology improvement; and (d) the creation of the Office of Program Integrity for Child Nutrition Programs. To continue developing strategies for the reduction of improper payments and program error, FNS needs up-to-date, accurate, and precise estimates of the error rates and their sources.

APEC IV will provide national estimates of improper payments in the NSLP and SBP during SY 2023-24. The findings can help FNS respond to Federal mandates, improve processes, and increase program integrity through the development of strategies to reduce errors in the future. The specific study objectives of APEC IV are:

Generate a national estimate of the annual amount of improper payments for SY 2023-2024 by replicating and refining the methodology used in prior APEC studies.

Provide a robust examination of the relationship of student (household), school, and school food authority (SFA) characteristics to error rates.

Conduct two sub-studies that will test the effect of data collection methods on the responses.

Online Application Sub-Study: Evaluate whether USDA’s online application prototype generates a more accurate and complete accounting of household size and income compared with other application types.

Household Survey Mode Effect Sub-Study: Assess the mode effect of conducting the household survey in-person versus virtually.

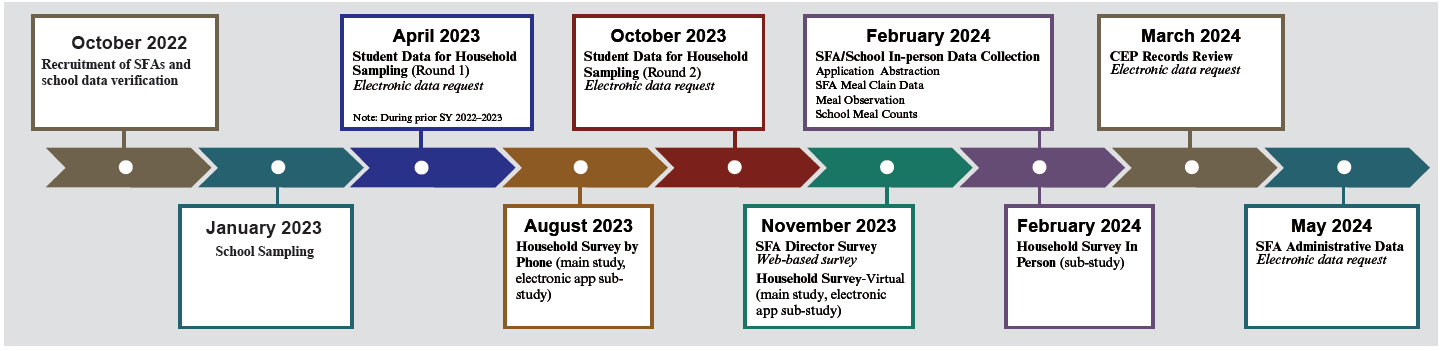

The findings from APEC IV will be shared through publicly available reports, but the findings will be presented in the aggregate so as not to identify any individual entity. An overview of the data collection timeline is provided in Figure A2-1.

Figure A2-1. Recruitment and data collection timeline |

|

The specific information to be collected in APEC IV is organized below by error type: certification error, aggregation error, and meal claiming error (see Table A2-1). For each error type, the sections below describe the respondents from whom the information will be collected, how and how often it will be collected, and how it informs error rate calculations. All information collection is voluntary.

Table A2-1. APEC IV data collection instruments

| Instrument | Source | Key data elements | Method | Frequency of collection |
|---|---|---|---|---|
| SFA Director Survey (Appendix B12) | SFA Director | SFA characteristics; training procedures; technology used | Web survey | Once |
| To Measure Certification Errors | | | | |
| Request and Reminder for E-Records in non-CEP schools (Appendices B1-B4) | SFA records | | Remote submission | Twice |
| SFA Meal Participation Data Request (Appendix B11) | SFA | | Remote submission | Once |
| Income-Eligibility Application Abstraction Form (Appendix B8) | SFA records | | In-person OR remote submission | Once |
| CEP Request and Reminder for E-Records (Appendices B9 & B10) | SFA records | | Remote submission | Once |
| Household Survey (Appendices B5a and B5b) | Parents/guardians | | Internet video call | Once |
| To Measure Aggregation Errors | | | | |
| School Meal Count Verification Form (Appendix B15) | School records | | In-person abstraction OR remote submission | Once |
| SFA Meal Claim Reimbursement Verification Form – All Schools (Appendix B16) | SFA records | | In-person abstraction OR remote submission | Once |
| State Meal Claims Abstraction Form (Appendix B17) | State records | | Remote submission | Once |
| To Measure Meal Claiming Errors | | | | |
| Meal Observation (Appendix B18) | School meal trays as they pass the cashier | | In-person | Once |

The information collection in APEC IV is largely consistent with the methodology used in previous APEC studies. One change in the data collection approach from APEC III is that Westat will conduct the household survey virtually (via an internet video call) rather than in person. A second change is that, where feasible, Westat will increase the use of remote data collection from SFAs by developing a secure online portal through which SFAs can submit information to the study team. Both of these changes are expected to reduce burden for households and SFA staff. Table A2-2 provides an overview of the changes made to the APEC IV instruments compared to those approved in APEC III (OMB Number 0584-0530, Discontinued: 10/31/2020).

Table A2-2. Comparison of APEC IV instruments to APEC III instruments

| Instrument | Changes compared to APEC III |
|---|---|
| SFA Director Survey (Appendix B12) | |
| SFA Pre-Visit Questionnaire (Appendix B13) | |
| School Pre-Visit Questionnaire (Appendix B14) | |
| To Measure Certification Errors | |
| Request and Reminder for E-Records in non-CEP schools (Appendices B1-B4) | |
| SFA Meal Participation Data Request (Appendix B11) | |
| Income-Eligibility Application Abstraction Form (Appendix B8) | |
| CEP Request and Reminder for E-Records (Appendices B9 & B10) | |
| Household Survey (Appendices B5a/B5b) | |
| Household Income Verification Worksheet (Appendices B6a/B6b) | |
| Instructions to De-Identify and Submit Income Documentation (Appendices B7a/B7b) | |
| To Measure Aggregation Errors | |
| School Meal Count Verification Form (Appendix B15) | |
| SFA Meal Claim Reimbursement Verification Form – All Schools (Appendix B16) | |
| State Meal Claims Abstraction Form (Appendix B17) | |
| To Measure Meal Claiming Errors | |
| Meal Observation – Camera Pilot Protocol (Appendix B19) | Added the camera data collection pilot protocol. |

Information to Measure Certification Error

All SFAs will be asked to complete the SFA Director Survey (Appendix B12) beginning in November 2023, which will provide contextual information on the SFA and its processes. The survey will include questions on SFA policies, procedures, and characteristics that may be related to error rates or the understanding of errors. The survey will be offered via the web; however, a hardcopy version will be available upon request.

Certification error occurs when students are certified for a level of benefits other than the one for which they are eligible. For schools that do not participate in the Community Eligibility Provision (CEP), program eligibility and the associated reimbursement rate are determined separately for each student. In CEP schools, eligibility is determined jointly for the CEP group, resulting in one reimbursement rate for all the schools in the group. Therefore, the data collection processes for measuring certification errors differ by CEP status, as outlined below.

Non-CEP Schools

We will collect the following data from SFAs, schools, and households to measure certification error in non-CEP schools:

SFAs:

Student data on eligibility for free and reduced-price meals in SY 2022-23 and SY 2023-24

Administrative data on school meal participation in SY 2023-24

SFAs and/or schools: school meal applications and direct certification data for SY 2023-24

Parents or guardians: Information on household size, income, and experience with school meal applications

Data collection for measuring certification error in APEC IV will begin with two rounds of requests for student lists (Appendices B1-B4), which will be used to sample households for application abstraction and household surveys. The list of applicants and directly certified students from sampled schools will be requested at the end of SY 2022-23 and again after the start of SY 2023-24. The data request will include students’ application date, direct certification data, eligibility status, and parent contact information.

Requesting the data twice addresses a challenge encountered in APEC III, in which delays in receiving the data from SFAs delayed both the creation of the household survey sample and the household survey itself. It is critical to conduct the household surveys as soon as possible after the school meal application is submitted (targeted for within 6 weeks of certification in the fall). However, it is burdensome for SFAs to provide the records of student eligibility for free and reduced-price meals, which are used to create the household survey sample, during the busy first few weeks of school. Therefore, we will first request the list of students who received free or reduced-price meals in SY 2022-23. This gives the study team a head start on creating the sampling frame and reduces the burden on SFAs to provide the data quickly in the fall, which is their busiest time of year. (Students with no application who are not directly certified are not eligible for sampling.) We will finalize the sample using the SY 2023-24 lists provided by SFAs.
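The two-round approach can be sketched as follows: build a draft sampling frame from the prior-year list, excluding students with no application who are not directly certified; draw a draft sample; and then keep only the sampled students who remain eligible on the current-year list. This is a simplified illustration under stated assumptions. Simple random sampling with a fixed seed stands in for the actual sample design (see Supporting Statement Part B), the record fields are hypothetical, and the real finalization step may also need to add newly eligible students, which this sketch does not do.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class StudentListEntry:
    student_id: str           # hypothetical identifier from the SFA list
    applied: bool             # submitted a school meal application
    directly_certified: bool  # directly certified (e.g., via SNAP or TANF data)


def eligible_for_sampling(entry: StudentListEntry) -> bool:
    # Students with no application who are not directly certified are excluded.
    return entry.applied or entry.directly_certified


def draft_sample(prior_year: list[StudentListEntry], n: int, seed: int = 2023) -> list[str]:
    """Draw a draft sample from the prior-year list (simple random sampling assumed)."""
    frame = sorted(e.student_id for e in prior_year if eligible_for_sampling(e))
    rng = random.Random(seed)  # fixed seed makes the draft reproducible
    return rng.sample(frame, min(n, len(frame)))


def finalize_sample(draft_ids: list[str], current_year: list[StudentListEntry]) -> list[str]:
    """Keep draft-sample students who are still eligible on the current-year list."""
    still_eligible = {e.student_id for e in current_year if eligible_for_sampling(e)}
    return [sid for sid in draft_ids if sid in still_eligible]
```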

Toward the end of SY 2023-24, we will collect administrative data from SFAs on student meal participation (SFA Meal Participation Data Request, Appendix B11). Meal participation data will provide information on how many meals the sampled students received during the school year. Data will be submitted using a secure file transfer protocol (SFTP) website, and reviewed by study staff and analysts upon receipt.
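Once participation files arrive, meal counts for sampled students can be tallied by program. The sketch below assumes, hypothetically, that an SFA file has one row per meal with a student identifier and a program label. In practice, SFA files vary in layout (some may report daily or monthly totals instead), so the parsing step would differ.

```python
from collections import Counter
from typing import Iterable


def meals_per_student(
    rows: Iterable[tuple[str, str]], sampled_ids: set[str]
) -> Counter:
    """Count meals per (student_id, program) for sampled students only.

    Assumed row layout (hypothetical): (student_id, program), one row per meal,
    where program is "NSLP" (lunch) or "SBP" (breakfast).
    """
    counts: Counter = Counter()
    for student_id, program in rows:
        if student_id in sampled_ids:  # ignore students outside the sample
            counts[(student_id, program)] += 1
    return counts
```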

Unlike prior APEC studies, the household survey will be administered by internet video call in APEC IV (Appendices B5-B7). Household surveys will be conducted in both English and Spanish from August 2023 through February 2024. In addition to completing the household survey, respondents will be asked to submit their income documentation to verify their school meal eligibility status. Respondents will be given two options for submitting their income documentation: (1) showing the interviewer the documentation through the video call, or (2) submitting pictures of the documentation via text or email after redacting their personally identifiable information (e.g., name, address, SSN) from the documents. Instructions for redacting and submitting income documentation will be provided to all respondents who are either unable or unwilling to show their documentation via video call (Appendices B7a/B7b).

School meal application and direct certification data will be collected primarily in person during SFA/school data collection visits (Appendix B8), but SFAs and schools will have the option to submit these data via the SFTP site. Applications will be abstracted only for households that respond to the household survey. For each responding household, the on-site data collector will abstract key data elements from either the hard copy application or the electronic application record and enter the data directly into a web-based data entry form using a laptop. Application and direct certification abstraction will take place from February 2024 through June 2024 (or the end of the school year). The data will be used to determine certification error.
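Once an abstracted application can be paired with the tier supported by the household survey and income documentation, each sampled case can be classified and an error rate computed. The function names, the benefit ranking, and the per-case weights shown here are illustrative assumptions. The study's actual weights come from its sample design.

```python
# Higher rank = more generous benefit level.
BENEFIT_RANK = {"paid": 0, "reduced-price": 1, "free": 2}


def classify_certification(certified: str, verified: str) -> str:
    """Compare the certified tier with the tier supported by verified information."""
    if certified not in BENEFIT_RANK or verified not in BENEFIT_RANK:
        raise ValueError(f"unknown tier: {certified!r} or {verified!r}")
    gap = BENEFIT_RANK[certified] - BENEFIT_RANK[verified]
    if gap > 0:
        return "overcertified"   # more generous than verified information supports
    if gap < 0:
        return "undercertified"  # less generous than verified information supports
    return "correct"


def weighted_error_rate(cases: list[tuple[str, str, float]]) -> float:
    """cases: (certified_tier, verified_tier, weight); weights are hypothetical."""
    total = sum(w for _, _, w in cases)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    in_error = sum(w for c, v, w in cases if classify_certification(c, v) != "correct")
    return in_error / total
```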

CEP Schools

CEP records review will take place remotely from March 2024 through June 2024. We will collect the following data from SFAs to measure certification error in CEP schools:

Data on students enrolled at each school

Student first name and last name

Student date of birth

Whether identified as eligible for free meals

For identified students, their direct certification source (e.g., SNAP, TANF)

Administrative data on student meal participation

Beginning in March 2024, we will request electronic records from each SFA that contain information on student eligibility and certification (Appendices B9-B10). Toward the end of SY 2023-24, we will collect administrative data from SFAs on student meal participation (Appendix B11). Meal participation data will provide information on how many meals the sampled students received during the school year. Data will be submitted using the SFTP site and reviewed by study staff and analysts upon receipt.
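Under CEP, reimbursement depends on the identified student percentage (ISP). The ISP is the share of enrolled students identified as eligible for free meals without an application (for example, through direct certification). The free claiming percentage is the ISP multiplied by 1.6, capped at 100 percent, and the remaining meals are reimbursed at the paid rate. One way to picture the CEP records review is to recompute the ISP from the student-level records listed above and compare the resulting claiming percentages with those used for reimbursement. The sketch below does this under stated assumptions: students are deduplicated by normalized name and date of birth (a hypothetical matching rule), and the function names are illustrative.

```python
from datetime import date

CEP_MULTIPLIER = 1.6  # applied to the ISP to obtain the free claiming percentage


def identified_student_percentage(records: list[tuple[str, str, date, bool]]) -> float:
    """records: (first_name, last_name, date_of_birth, identified_for_free_meals).

    Duplicate enrollments are collapsed using a normalized name + DOB key
    (a hypothetical matching rule); a student counts as identified if any
    of their records is identified.
    """
    students: dict[tuple[str, str, date], bool] = {}
    for first, last, dob, identified in records:
        key = (first.strip().lower(), last.strip().lower(), dob)
        students[key] = students.get(key, False) or identified
    if not students:
        raise ValueError("no enrolled students")
    return sum(students.values()) / len(students)


def claiming_percentages(isp: float) -> tuple[float, float]:
    """Return (free, paid) claiming percentages as fractions of meals."""
    free = min(1.0, isp * CEP_MULTIPLIER)
    return free, 1.0 - free
```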

Information to Measure Aggregation Error

Aggregation errors occur during the process of tallying the number of meals served each month (by claiming category) and then reporting the tally from school to SFA, from SFA to State, and from State to FNS for reimbursement. To measure aggregation error for meal claiming, data collectors will abstract records using the same approach as in APEC III:

From schools (Appendix B15). Monthly meal count data for each eligibility tier (free, reduced-price, paid) for the target month. On-site data collectors will abstract the meal counts recorded by cashier and/or for the school as a whole, as well as the counts the school submitted to the SFA.

From SFAs (Appendix B16). Meal claims reported to the State by the SFA for the target month for each eligibility tier.

From States (Appendix B17). Meal claims reported to FNS for SFAs/schools for the target month for each eligibility tier. States will also be asked two questions about their processes for obtaining, processing, and submitting meal counts to FNS.
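Conceptually, aggregation error is found by comparing the same target-month counts, tier by tier, at successive reporting levels. The sketch below is a hypothetical illustration. It assumes each level's counts have already been extracted for the same unit (for example, one school's counts as recorded at the school and as reflected in the SFA's claim) and the same month, and it reports the direction and size of every mismatch.

```python
TIERS = ("free", "reduced-price", "paid")


def aggregation_discrepancies(
    levels: list[tuple[str, dict[str, int]]]
) -> list[tuple[str, str, str, int]]:
    """Compare meal counts between consecutive reporting levels.

    levels: ordered (level_name, {tier: meal_count}) pairs, e.g. school -> SFA -> State.
    Returns (upstream, downstream, tier, downstream_minus_upstream) for each mismatch.
    """
    mismatches = []
    for (up_name, up_counts), (down_name, down_counts) in zip(levels, levels[1:]):
        for tier in TIERS:
            diff = down_counts.get(tier, 0) - up_counts.get(tier, 0)
            if diff != 0:
                mismatches.append((up_name, down_name, tier, diff))
    return mismatches


levels = [
    ("school", {"free": 1200, "reduced-price": 300, "paid": 500}),
    ("SFA", {"free": 1210, "reduced-price": 300, "paid": 500}),
    ("State", {"free": 1210, "reduced-price": 300, "paid": 490}),
]
print(aggregation_discrepancies(levels))
# [('school', 'SFA', 'free', 10), ('SFA', 'State', 'paid', -10)]
```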

SFA and school data will be collected during SFA/school data collection visits beginning in February 2024. SFAs and schools will also be allowed to submit the records via a secure website, which the data collector will assist them in accessing during the site visit. States will submit the records electronically.

One new, additional set of data will be collected from FNS to measure aggregation error: disaggregated FNS-10 data. Each month, States report to FNS, via the FNS-10 form, the total number of meals served across all of their SFAs. These data determine the meal reimbursement amounts that FNS pays to the States and have historically been reported only in aggregate. The disaggregated FNS-10 meal claim data, collected for the first time as part of the School Meal Operations Study information collection (OMB # 0584-0607), will contain meal counts from every SFA nationally along with the amount that FNS reimbursed to the States. These data will be compared to FNS disbursement amounts to States to develop an estimate of improper payments based on aggregation error. This estimate will then be compared to the estimate derived using the APEC III method described above. If the two methods yield comparable results, future iterations of APEC will no longer need to collect monthly meal count data from SFAs and States to measure aggregation error.
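The comparison of disaggregated FNS-10 data with FNS disbursements can be sketched as a State-by-State reconciliation: multiply each SFA's claimed meals by a per-meal rate, sum to the State, and subtract the result from the amount disbursed. The rates are hypothetical placeholders in cents, the record layout is assumed, and a real reconciliation would need to apply the actual rates for each program and SFA.

```python
from collections import defaultdict

# Hypothetical per-meal rates in cents (placeholders, not FNS rates).
HYPOTHETICAL_RATES_CENTS = {"free": 400, "reduced-price": 360, "paid": 40}


def reconcile_by_state(
    sfa_claims: list[tuple[str, str, str, int]],
    disbursed_cents: dict[str, int],
    rates: dict[str, int] = HYPOTHETICAL_RATES_CENTS,
) -> dict[str, int]:
    """sfa_claims: (state, sfa_id, tier, meals) rows from SFA-level FNS-10 data.

    Returns disbursed minus expected for each State (positive = FNS paid more
    than the SFA-level claims support; negative = FNS paid less).
    """
    expected: dict[str, int] = defaultdict(int)
    for state, _sfa_id, tier, meals in sfa_claims:
        expected[state] += meals * rates[tier]
    states = sorted(set(expected) | set(disbursed_cents))
    return {s: disbursed_cents.get(s, 0) - expected[s] for s in states}
```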

Information to Measure Meal Claiming Error

Meal observations will be conducted using the meal observation booklets (Appendix B18) as part of the in-person data collection visits that commence in February 2024. Meal observation data will be used to determine meal claiming errors by identifying meals incorrectly claimed as “reimbursable” based on meal components and/or the meal recipient. The following information will be recorded for each meal tray observed: (1) the items on each tray and the number of servings of each item; (2) whether the meal was served to a student or a non-student/adult; and (3) whether the cashier recorded the tray as a reimbursable meal. In APEC II and III, data collectors recorded meal observations in a hardcopy booklet and then transferred the data electronically to a laptop after each day of observation.

During the main data collection in SY 2023-24, we will also pilot test, in nine schools, a method of meal observation that uses cameras rather than a data collector to record the items on the trays (see Supporting Statement B and Appendix B19 for more detail). Results will be used to inform the use of the camera method in future FNS studies.

Analysts will use the meal observation data to determine whether meals were reimbursable (based on USDA meal requirements) and compare this determination with how the meals were claimed. Meal claiming errors are estimated to examine the extent to which schools meet the meal pattern requirements; they are not included in estimates of improper payments.
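The comparison analysts make can be sketched as two steps: decide whether each observed tray is reimbursable, then count the trays where that decision disagrees with the cashier's. The reimbursability rule below is a deliberately simplified stand-in for the USDA meal pattern requirements, assumed here for illustration. A lunch tray counts only if it goes to a student, includes at least three of the five lunch components, and includes at least 1/2 cup of fruits and/or vegetables (an offer versus serve style rule). It ignores portion minimums for other components, breakfast rules, and grade-group differences.

```python
from dataclasses import dataclass

# The five lunch meal components (simplified labels).
LUNCH_COMPONENTS = {"fruit", "vegetable", "grain", "meat_or_alternate", "milk"}


@dataclass
class ObservedTray:
    servings: dict[str, float]          # component -> amount (fruit/vegetable in cups)
    served_to_student: bool             # False for non-students/adults
    cashier_claimed_reimbursable: bool  # how the cashier recorded the tray


def is_reimbursable(tray: ObservedTray) -> bool:
    """Simplified, assumed lunch rule (not the full USDA meal pattern)."""
    if not tray.served_to_student:
        return False
    components = {c for c, amt in tray.servings.items() if c in LUNCH_COMPONENTS and amt > 0}
    fruit_veg_cups = tray.servings.get("fruit", 0.0) + tray.servings.get("vegetable", 0.0)
    return len(components) >= 3 and fruit_veg_cups >= 0.5


def meal_claiming_error_rate(trays: list[ObservedTray]) -> float:
    """Share of observed trays whose claimed status differs from the determination."""
    if not trays:
        raise ValueError("no trays observed")
    errors = sum(is_reimbursable(t) != t.cashier_claimed_reimbursable for t in trays)
    return errors / len(trays)
```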

Online Application Sub-Study

The Online Application Sub-Study will assess whether the USDA’s integrity-focused online application prototype, which leads applicants through the application form using a question-by-question approach, generates a more accurate accounting of income and household size than other application modes. To answer this question, APEC IV will compare the household income reporting error rate by the following three types of applications that households use to apply for school meals:

Online application that incorporates characteristics of USDA’s integrity-focused online application prototype;

Other online application; and

Paper application.

As noted in Supporting Statement Part B2, we expect the household sample to be large enough to support this sub-study without drawing a new sample. The analyses will compare reporting error rates at the household level across the three application types (see also Section A.16 for more detail on the planned analyses).
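A household-level comparison of reporting error across the three application types might look like the sketch below. The definition of a "reporting error" used here is an assumption for illustration: household size on the application differs from the verified size, or reported income differs from verified income by more than a relative tolerance. The 10 percent default is a hypothetical parameter, not a study specification, the application-type labels are hypothetical, and the rates are unweighted.

```python
from collections import defaultdict


def has_reporting_error(
    reported_income: float,
    verified_income: float,
    reported_size: int,
    verified_size: int,
    income_tolerance: float = 0.10,  # hypothetical tolerance, not a study parameter
) -> bool:
    if reported_size != verified_size:
        return True
    if verified_income == 0:
        return reported_income != 0
    return abs(reported_income - verified_income) / verified_income > income_tolerance


def reporting_error_rates(
    households: list[tuple[str, float, float, int, int]]
) -> dict[str, float]:
    """households: (application_type, reported_income, verified_income,
    reported_size, verified_size). Returns the unweighted error rate by type."""
    totals: dict[str, int] = defaultdict(int)
    errors: dict[str, int] = defaultdict(int)
    for app_type, rep_inc, ver_inc, rep_size, ver_size in households:
        totals[app_type] += 1
        errors[app_type] += has_reporting_error(rep_inc, ver_inc, rep_size, ver_size)
    return {t: errors[t] / totals[t] for t in totals}
```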

Household Survey Mode Effect Sub-Study

APEC IV will be the first study in the APEC series to conduct the household survey primarily virtually. APEC III included some successful telephone surveys but did not compare them with in-person surveys. Therefore, it is unknown whether, or to what extent, household responses differ when the survey is conducted virtually rather than in person (a mode effect). The Household Survey Mode Effect Sub-Study will assess the potential impact of conducting the household survey virtually versus in person. For example, households may provide more income documentation during an in-person survey because doing so is less burdensome in that setting. However, whether that additional documentation changes certification status is unclear and will be tested in this sub-study.

All households will complete the household survey via internet video call (with or without the video turned on, or by using the dial-in option). A subsample will be asked to complete the survey a second time, in person (see Supporting Statement Part B2 for more details on the sampling for the Household Survey Mode Effect Sub-Study). We will compare the consistency of reporting across the two modes, with a focus on income, household size, and certification status. We will test for both statistically significant differences and meaningful differences that change meal eligibility status.
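For households interviewed in both modes, the comparison could start from paired differences. The sketch below computes the mean within-household difference in reported income (in-person minus virtual), a paired t statistic (to be referred to a t distribution with n - 1 degrees of freedom), and the share of households whose eligibility tier differs between modes. This is an illustrative, unweighted sketch with assumed inputs, not the study's specified analysis.

```python
import math
from statistics import mean, stdev


def mode_comparison(pairs: list[tuple[float, float, str, str]]) -> dict[str, float]:
    """pairs: (virtual_income, in_person_income, virtual_tier, in_person_tier)
    for households surveyed in both modes."""
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two paired households")
    diffs = [in_person - virtual for virtual, in_person, _, _ in pairs]
    mean_diff = float(mean(diffs))
    std_err = stdev(diffs) / math.sqrt(n)
    # Paired t statistic; compare with a t distribution with n - 1 degrees of freedom.
    t_stat = mean_diff / std_err if std_err > 0 else float("nan")
    changed = sum(v_tier != p_tier for _, _, v_tier, p_tier in pairs)
    return {
        "mean_income_diff": mean_diff,
        "t_stat": t_stat,
        "share_tier_changed": changed / n,
    }
```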

Recruitment

Successful recruitment of SFAs, schools, and student households will help maximize response rates, ensure the analytic integrity of the study, and generate unbiased, reliable estimates. After SFA sampling is complete (see Supporting Statement Part B for more detail), the study’s FNS Regional Liaisons will email State Child Nutrition Directors (Appendix C1) to notify them of the SFAs in their State that have been sampled for the study. The email will include the APEC IV FAQs (Appendix C5) and will request contact information for the sampled SFA directors. The email will also provide an optional template letter/email (Appendix C2) that States can use to contact the sampled SFAs, inform them that they have been selected into the APEC IV sample, explain why their participation in the study is important, and encourage their participation.

Once Westat receives SFA contact information from the FNS Regional Liaisons, Westat will contact the SFA director to notify them of their selection for the study and confirm their participation. Beginning in October of 2022, Westat will send a recruitment packet by Federal Express and email to the SFA director. The recruitment packet will include: an introductory letter explaining the purpose and components of the study (SFA Study Notification and Data Request, Appendix C3); the SFA School Data Verification Reference Guide (Appendix C4); and the APEC IV FAQs (Appendix C5). The SFA Study Notification and Data Request (Appendix C3) will include a link for SFAs to access a list of their schools sampled for the study, obtained from the National Center for Education Statistics’ Common Core of Data (CCD). SFAs will be asked to provide updates and/or corrections to the list via the APEC IV secure web-based portal. Westat recruitment staff will conduct a follow-up phone call one week after sending the recruitment packet to ensure receipt of the request, answer any questions about the study, and provide assistance as needed (Appendix C6).

Upon SFAs’ confirmation of participation and verification of school data (Appendix C7), Westat will send a “confirmation of study participation letter” via email to the SFA (Appendix C8), which will include a summary of the data collection timeline, assurances of data privacy, and a link to the study website (see Crosswalk and Summary of Study Website, Appendix C29). Next, we will send participating SFAs a separate email (Appendix C9) that includes the list of schools sampled from the SFA, a request for the principal or other point of contact’s information for each of the sampled schools, and Westat’s schedule for contacting the schools. The email will also request that the SFAs notify their sampled schools about the study, using a template letter (Appendix C10). Approximately 1 week after this mailing, Westat will conduct a follow-up telephone call to the SFA (Appendix C11). During this call, the SFA will have an opportunity to ask any additional questions about the study and review the school contact information, providing updates as necessary. In addition, they will be asked to identify schools that may require special recruitment efforts and provide suggestions for increasing the likelihood of success.

School recruitment will begin once Westat has received from the SFAs the contact information for the school principal or other point of contact. Westat will send a recruitment packet to the sampled schools via email and by Federal Express. The materials (Appendices C5 and C12) will introduce the study, emphasize the mandate for the study, inform schools of their selection, and explain what their participation would entail. We will conduct follow-up calls to schools approximately 1 week after the mailing of the recruitment packet (Appendix C13). During these calls, recruiters will confirm receipt of the recruitment packet, answer questions about the study, and confirm participation. Upon confirmation of participation, we will send a school-specific confirmation of participation email that delineates the study components, tasks for data collection, and the study timeline (Appendix C14).

Westat will also work to ensure that school administrative staff can confirm the legitimacy of the study if households recruited for the household survey contact the school for verification. We will notify schools about household survey recruitment (Appendix C15) and provide the household survey brochure (Appendices C16a/C16b) so that it can be shared with school staff and/or parents to inform them about the study and direct interested individuals to the study website.

Schools and SFAs will be notified of the in-person data collection visits via email (Appendices C17-C21). SFAs will be asked to complete the pre-visit SFA and school questionnaires, which ask about school and SFA operations relevant to on-site data collection (Appendices B13 and B14).

As discussed above under “Information to Measure Certification Error,” in non-CEP schools the household sample will be drawn from each SFA’s SY 2022-23 list of students who applied for or were directly certified for free or reduced-price meals. Students who did not submit an application and were not directly certified (i.e., paid students with no application) are not eligible for sampling. Household eligibility will be verified by checking the final SY 2023-24 list received from the SFA, updating the sampling frame as needed, and confirming eligibility again at the outset of the survey.
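The frame-construction rule above can be sketched as a simple filter. This is a minimal illustration, not the study’s sampling procedure: the `StudentRecord` layout, the `certification_basis` codes, and the assumption that “updating the sampling frame” means dropping students who are absent from the final SY 2023-24 list are all hypothetical. Actual sampling is described in Supporting Statement Part B2.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class StudentRecord:
    # Hypothetical record layout; field names are illustrative only.
    student_id: str
    # "application", "direct", or None for a paid student with no application
    certification_basis: Optional[str]


ELIGIBLE_BASES = {"application", "direct"}


def build_sampling_frame(sy2022_23_list: Iterable[StudentRecord],
                         sy2023_24_final_ids: Iterable[str]) -> List[StudentRecord]:
    """Keep students who applied or were directly certified, then (assumed
    update rule) drop students missing from the final SY 2023-24 list."""
    final_ids = set(sy2023_24_final_ids)
    return [
        s for s in sy2022_23_list
        if s.certification_basis in ELIGIBLE_BASES and s.student_id in final_ids
    ]


# Illustrative records: C is paid with no application; D is not on the final list.
records = [
    StudentRecord("A", "application"),
    StudentRecord("B", "direct"),
    StudentRecord("C", None),
    StudentRecord("D", "application"),
]
frame = build_sampling_frame(records, ["A", "B", "C"])
print([s.student_id for s in frame])  # ['A', 'B']
```

Filtering on both conditions in one pass keeps the sketch short; in practice the eligibility check and the frame update happen at different times, as the paragraph above describes.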

Westat will mail a recruitment packet to sampled households. The recruitment packet will introduce the household survey component of the study, referred to as the National School Meals Study, and will encourage participation in the household survey. The recruitment packet will include an introductory letter (Appendices C22a/C22b) and a study brochure tailored to households (Appendices C16a/C16b) that provide the following information: (1) a study summary; (2) an explanation of the benefits of participation; (3) information about the types of questions that will be asked in the study, including questions about income; (4) the study website; (5) information about incentives and privacy; and (6) notification that they will be contacted in the next few days to schedule a time to take the survey.

Trained interviewers will contact sampled households to recruit them within 1 week of Westat’s mailing of the recruitment packets (Appendices C23-C25). We expect that conducting the household survey via internet video call will improve response rates, in part because completing the survey virtually is more convenient than the in-person interviews used in APEC III.

At the time of the initial survey, respondents will not know whether they have been selected for the Household Survey Mode Effect Sub-Study (described above). Only households from 20 purposively selected SFAs (from an average of three schools per SFA) will be asked at the end of the virtual survey whether they are willing to participate in the sub-study (Appendices C26-C28). Those who agree to participate will form the sampling frame for the sub-study. We are targeting 300 households that complete the survey in both modes of administration. To the extent feasible, based on respondent availability, data collectors will administer the in-person household surveys within 2 to 4 weeks of the virtual interview to minimize errors introduced by the time lag between the virtual and in-person surveys. Sampling for the full study and the sub-studies is described in more detail in Supporting Statement Part B2.

A.3 Use of Information Technology and Burden Reduction

Describe whether, and to what extent, the collection of information involves the use of automated, electronic, mechanical, or other technological collection techniques or other forms of information technology, e.g., permitting electronic submission of responses, and the basis for the decision for adopting this means of collection. Also describe any consideration of using information technology to reduce burden.

The use of technology has been incorporated into the data collection to reduce respondent burden in the following ways:

Household Survey (Appendices B5a/B5b and B6a/B6b). The household survey will be conducted via secure internet video call using a computer-assisted telephone interview (CATI) system; respondents may choose whether to turn on their video or to use the dial-in option instead. Thus, all 4,103 household survey responses will be collected electronically. The CATI system automates skip patterns, customizes question wording, performs response code validity checks, and applies consistent editing checks. Before the survey begins, data collectors will administer informed consent and provide the respondent with an electronic copy of the consent form, which includes the assurance of confidentiality. As part of the household survey, participants will be asked to use their camera to show the interviewer their income verification documentation. Participants will also be given the option to submit their de-identified income verification documentation (with all personally identifiable information redacted) through email or text using a unique ID (Appendices B7a/B7b).

SFA Director Survey (Appendix B12). The SFA director survey will be a web-based survey, and all 286 responses will be collected electronically.

Records Submitted by States, SFAs, and Schools (Appendices B1-B4, B8-B11, and B13-B17). States, SFAs, and schools will have the option of electronically submitting all records discussed in Question A2 (e.g., student data for household sampling, income eligibility and direct certification data, school meal participation data, CEP records for ISP, and meal claims). We estimate that 40 percent of these records will be submitted electronically.

A.4 Efforts to Identify Duplication

Describe efforts to identify duplication. Show specifically why any similar information already available cannot be used or modified for use for the purposes described in Question 2.

There is no similar information collection. Every effort has been made to avoid duplication of data collection efforts.

As described in A2, the current APEC study will compare the data SFAs and States submit on the FNS-10 form with the meal count data collected as part of this study. If these data are comparable, future APEC iterations will be able to estimate aggregation error in a manner that is less burdensome for SFAs and States. In other words, APEC IV intentionally duplicates the meal count data collection in an effort to reduce burden on the public in the future.

FNS has reviewed USDA reporting requirements, State administrative agency reporting requirements, and special studies by other government and private agencies. FNS solely administers the school meal programs.

A.5 Impacts on Small Businesses or Other Small Entities

If the collection of information impacts small businesses or other small entities (Item 5 of OMB Form 83-I), describe any methods used to minimize burden.

Excluding the largest SFAs (described further in Supporting Statement Part B), approximately 236 SFAs in the sample, or 83 percent of the SFAs participating in the study, fall below the threshold to be considered a small entity. Although there are small SFAs involved in this data collection effort, they deliver the same program benefits and perform the same functions as any other SFA. Thus, they maintain the same kinds of information on file. The information being requested is the minimum required for the intended use.

A.6 Consequences of Collecting Information Less Frequently

Describe the consequence to Federal program or policy activities if the collection is not conducted, or is conducted less frequently, as well as any technical or legal obstacles to reducing burden.

If this data collection were not performed, FNS would be unable to meet its Federal reporting requirements under PIIA to annually measure erroneous payments in the NSLP and SBP and identify the sources of erroneous payments as outlined in M-15-02 – “Appendix C to Circular No. A‑123, Requirements for Effective Estimation and Remediation of Improper Payments” (Appendix A3).

A.7 Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

Explain any special circumstances that would cause an information collection to be conducted in a manner:

Requiring respondents to report information to the agency more often than quarterly;

Requiring respondents to prepare a written response to a collection of information in fewer than 30 days after receipt of it;

Requiring respondents to submit more than an original and two copies of any document;

Requiring respondents to retain records, other than health, medical, government contract, grant-in-aid, or tax records for more than 3 years;

In connection with a statistical survey, that is not designed to produce valid and reliable results that can be generalized to the universe of study;

Requiring the use of a statistical data classification that has not been reviewed and approved by OMB;

That includes a pledge of confidentiality that is not supported by authority established in statute or regulation, that is not supported by disclosure and data security policies that are consistent with the pledge, or which unnecessarily impedes sharing of data with other agencies for compatible confidential use; or

Requiring respondents to submit proprietary trade secret, or other confidential information unless the agency can demonstrate that it has instituted procedures to protect the information’s confidentiality to the extent permitted by law.

There are no special circumstances. The collection of information is conducted in a manner consistent with the guidelines in 5 CFR 1320.5.

A.8 Comments to the Federal Register Notice and Efforts for Consultation

If applicable, provide a copy and identify the date and page number of publication in the Federal Register of the agency’s notice, required by 5 CFR 1320.8 (d), soliciting comments on the information collection prior to submission to OMB. Summarize public comments received in response to that notice and describe actions taken by the agency in response to these comments. Specifically address comments received on cost and hour burden.

Describe efforts to consult with persons outside the agency to obtain their views on the availability of data, frequency of collection, the clarity of instructions and recordkeeping, disclosure, or reporting format (if any), and on the data elements to be recorded, disclosed, or reported. Consultation with representatives of those from whom information is to be obtained or those who must compile records should occur at least once every 3 years, even if the collection of information activity is the same as in prior years. There may be circumstances that may preclude consultation in a specific situation. These circumstances should be explained.

A notice was published in the Federal Register on August 4, 2021 (Volume 86, Number 147, pages 41938-41943). The public comment period ended on October 4, 2021. FNS received two comments (Appendices D1-D2), one of which was germane to APEC IV and is summarized below. Appendices E1 and E2 include FNS’s responses to these comments. Neither comment resulted in changes to the study.

One commenter, writing on behalf of a professional organization, expressed concern about the study burden and suggested using data available through State agencies to minimize duplication of data collection and the impact on school food authorities. FNS shares the concern over the study burden and has significantly streamlined study instruments by removing unnecessary questions and eliminating some data collection requests altogether. In addition, APEC IV will explore the feasibility of using meal claim data submitted by States as part of another annual survey. If successful, future APEC studies will no longer request these data from SFAs, thereby minimizing SFA burden.

Consultations Outside of the Agency

The information collection request has also been reviewed by Jeffrey Hunt of the USDA National Agricultural Statistics Service (NASS) with respect to the statistical procedures. His comments are in Appendix F and have been incorporated as appropriate throughout the OMB supporting statement.

Consultations about the research design, sample design, data collection instruments, and data sources occurred during the study’s design phase and will continue throughout the study. The following individuals/organizations have been or will be consulted about burden estimates and other characteristics associated with this data collection:

Name | Title | Organization | Phone number
Corby Martin, PhD | Director; Ingestive Behavior, Weight Management & Health Promotion Lab | Pennington Biomedical Research Center | (225) 763-2585
Karen Kempf | Food Service Director | Fairview School District | (773) 963-8346
Sharon Foley | Director of School Nutrition | Whitley County School District | (606) 549-6349

Ms. Kempf and Ms. Foley both provided feedback on the data collection instruments and recruitment materials that will be used with SFA directors participating in the main study, including feedback on the expected burden of each request. Dr. Martin provides ongoing input on all aspects of the meal observation pilot study, from its design and data collection strategy to the analytic approach.

A.9 Explanation of Payments and Gifts to Respondents

Explain any decision to provide any payment or gift to respondents, other than remuneration of contractors or grantees.

FNS is requesting incentives for parents/guardians participating in the household survey and household income verification process. The use of incentives is part of a multidimensional approach to promoting study participation and minimizing nonresponse bias among households. Other approaches include conducting the household survey virtually rather than in person (described in more detail in A2), communicating the importance of the study to households, developing a study-specific website, and attempting to reach nonrespondents multiple times (see Supporting Statement B3 for strategies to increase response rates).5,6 The proposed incentives are designed to increase sample representativeness, and thereby reduce nonresponse bias, by encouraging participation among those who may have less interest in a study about school meals. The parents/guardians to be recruited for APEC IV are from low-income households, and research has shown that providing incentives, particularly monetary incentives, increases cooperation rates and minimizes nonresponse bias among low-income populations.7,8,9 In addition, improved cooperation rates reduce the need for callbacks, which decreases survey costs. The proposed incentives are listed in Table A9-1.

Table A9-1. Parent/guardian incentive amounts

Instrument or activity | Appendix | Incentive amount | Time per response
Household Survey (video internet call) | B5-B8 | $40 gift card | 45 minutes
Household Income Verification, including gathering the income documents (video call, text, or email) | | $20 gift card | 20 minutes
Total | | $60 gift card | 65 minutes

The incentives requested in APEC IV are similar to those approved in APEC III (0584-0530, Discontinued: 10/31/2020). APEC III incentives were based on experience with household recruitment and participation in APEC II. Specifically, only a small percentage of households provided documentation to verify income in APEC II. This was a major limitation because the amount of missing data affected the accuracy of the study’s estimate of improper payments due to certification error, a key objective of the APEC study series. Therefore, a $20 incentive (in the form of a gift card) was added in APEC III to reflect the additional effort needed for respondents to gather and supply income documentation. The additional incentive increased the share of respondents providing income documentation from less than 1 percent in APEC II to 89.3 percent in APEC III.

APEC III, conducted in SY 2017-2018, was approved by OMB to provide an incentive of $50 to participating households, including the additional $20 gift card for the income verification documentation. For APEC IV, we propose a slightly higher incentive of a $60 gift card to account for 6 years of inflation and for differences in the mode of data collection between APEC III and IV. Specifically, the household survey will be conducted virtually in APEC IV rather than in person (described in more detail in A2). The virtual approach poses a different set of challenges compared with the in-person interviews in APEC III. During the household survey, respondents will have the opportunity to show the interviewer their income documentation via the video function. If the respondent is unable or unwilling to do so, they can submit their de-identified documentation via text or email using a unique ID. The increase in the proposed incentive is designed to compensate participants for the costs they incur while participating in the survey virtually. These costs include internet, cell phone, and data usage charges associated with completing the survey and sending the required income documentation to the study team (including the download of the video call software if the respondent chooses this method); cell phone and data usage costs associated with calls and texts needed to set up appointments and reminders to complete the survey; and child care that may be needed during the 65 minutes required to gather the income documentation and complete the household survey.

Study participants will be given their incentive in the form of a Visa Gift Card, with the option for it to be delivered through the mail or electronically (Visa eGift Card), after the interview is complete. Respondents who provide income documentation after the interview will receive an additional gift card with the second part of the incentive.

As part of the Household Survey Mode Effect Sub-Study discussed in A2, respondents within select SFAs will be invited to participate in an in-person administration of the survey. Households who agree to participate will form the sampling frame for the sub-study. Approximately 300 households will be targeted for participation in the sub-study. Respondents will complete the household survey and income verification a second time—in person rather than virtually—two to four weeks after the virtual survey administration. We propose an additional incentive of a $40 gift card to account for the completion of the household survey a second time, and an additional $20 gift card for the second provision of income verification documentation.

Table A9-2. Parent/guardian incentive amounts for Household Survey Mode Effect Sub-Study

Instrument or activity | Appendix | Incentive amount | Time per response
Additional Household Survey (in person) | B5-B6 | $40 gift card | 45 minutes
Additional Household Income Verification (in person) | | $20 gift card | 20 minutes
Total | | $60 gift card | 65 minutes

A.10 Assurance of Confidentiality

Describe any assurance of confidentiality provided to respondents and the basis for the assurance in statute, regulation, or agency policy.

All respondents’ information will be kept private and not disclosed to anyone except the analysts conducting this research, except as otherwise required by law. Section 9(6B-C) of the National School Lunch Act (42 U.S. Code § 1758) (Appendix A1) restricts the use or disclosure of any eligibility information to persons directly connected with the administration or enforcement of the program.

The study team will ensure the privacy and security of electronic data during the data collection and processing period, following the protections outlined in the system of records notice (SORN) titled FNS-8 USDA/FNS Studies and Reports (volume 56, pages 19078–19080).

This information collection request asks for personally identifiable information and includes a survey that requires a Privacy Act Statement. The individuals at the SFA or school level participating in this study will be assured that the information they provide will not be released in a form that identifies them. Individual study participants will also be informed that there is no penalty if they decide not to respond to the data collection as a whole or to any particular questions. In addition, all members of the study team will sign a confidentiality and nondisclosure agreement (Appendix G).

No identifying information will be attached to any reports or data supplied to USDA or any other researchers. Names and phone numbers will not be linked to participants’ responses; survey respondents will have a unique ID number; and analyses will be conducted on datasets that include only these ID numbers. All data will be securely transmitted to the study team and stored in locked file cabinets or on password-protected computers accessible only to study team staff. Names and phone numbers of respondents will be destroyed within 12 months after the end of the data collection period. Westat’s Institutional Review Board (IRB) serves as the organization’s administrative body for the protection of human subjects, and all research involving interactions or interventions with human subjects is within its purview. The IRB approval letter from Westat is in Appendix H.

Finally, the Privacy Act Statement was added to all instruments that collect personally identifiable information (PII) (Appendices B1-B5, B8-B11, and B13-B14). After the FNS Privacy Officer, Michael Bjorkman, reviewed the package, we also added the Privacy Act Statement to the Household Survey Consent Form (Appendices C25a/C25b) to ensure respondents viewed the statement before the survey began.

A.11 Justification for Questions of a Sensitive Nature

Provide additional justification for any questions of a sensitive nature, such as sexual behavior or attitudes, religious beliefs, and other matters that are commonly considered private. This justification should include the reasons the agency considers the questions necessary, the specific uses to be made of the information, the explanation to be given to persons from whom the information is requested, and any steps to be taken to obtain their consent.

Some of the questions in the household survey may be considered sensitive. These include questions on the following topics: household composition, household income, employment status, receipt of Federal or State public assistance, and race and ethnicity.

Questions on income, household composition, and the receipt of public assistance are necessary to establish the family’s eligibility for free or reduced-price school meal benefits; the responses will be used to estimate certification error and derive estimates of erroneous payments, both key objectives of this study. Questions on race and ethnicity are necessary to provide demographic information on those participating in NSLP and SBP. Similar sensitive questions were asked in the previous APEC studies, with no evidence of harm to the respondents.

The household recruitment letter, brochure, and consent form (Appendices C22a/C22b, C16a/C16b, and C25a/C25b), as well as the data collector (using the recruitment call guide/protocol, Appendices C23a/C23b), will inform participants that their participation, and any information they provide, will not affect any benefits they are receiving. The consent forms will also inform all respondents of their right to decline to answer any question without consequences. In addition, the Privacy Act Statement was added to all instruments that collect PII (Appendices B1-B5, B8-B11, and B13-B14). After the FNS Privacy Officer, Michael Bjorkman, reviewed the package, we also added the Privacy Act Statement to the Household Survey Consent Form (Appendices C25a/C25b).

All survey responses will be held securely, and respondents’ answers will not be reported to or accessible by FNS. This study will adhere to Westat’s Information Technology and Systems Security Policy and Best Practices (Appendix I). These protections include management (e.g., certification, accreditation, and security assessments, planning, risk assessment), operational (e.g., awareness and training, configuration management, contingency planning), and technical (e.g., access control, audit and accountability, identification, and authentication) controls that are implemented to secure study data. This research will fully comply with all government-wide guidance and regulations as well as USDA Office of the Chief Information Officer (OCIO) directives, guidelines, and requirements.

A.12 Estimates of the Hour Burden

Provide estimates of the hour burden of the collection of information. Indicate the number of respondents, frequency of response, annual hour burden, and an explanation of how the burden was estimated.

12A. Indicate the number of respondents, frequency of response, annual hour burden, and an explanation of how the burden was estimated. If this request for approval covers more than one form, provide separate hour burden estimates for each form and aggregate the hour burdens in Item 13 of OMB Form 83-I.

With this reinstatement, there are 13,068 respondents (9,899 respondents and 3,169 non-respondents), 118,361 responses, and 21,013 burden hours. The estimated burden for this information collection, including the number of respondents, frequency of response, average time to respond, and annual hour burden, is provided in Appendix J. The average frequency of response per year for respondents and non-respondents is 9.27 and 8.39, respectively. The total annualized hour burden to the public is 21,013 hours (including 20,710 for respondents and 303 for nonrespondents). The estimates are based on prior experience with comparable instruments on APEC I, II, and III.
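The totals in the preceding paragraph can be cross-checked with a few lines of arithmetic. The sketch below uses only figures stated above; because the average frequencies are rounded to two decimals, the implied response count is expected to differ slightly from the reported 118,361, and the check only confirms that the gap is within rounding.

```python
# Figures from the burden summary above.
respondents, non_respondents = 9_899, 3_169
avg_freq_respondents, avg_freq_non_respondents = 9.27, 8.39
hours_respondents, hours_non_respondents = 20_710, 303
reported_responses = 118_361

total_respondents = respondents + non_respondents          # 13,068
total_hours = hours_respondents + hours_non_respondents    # 21,013

# Responses implied by the rounded average frequencies.
implied_responses = (respondents * avg_freq_respondents
                     + non_respondents * avg_freq_non_respondents)

# Each frequency is rounded to within 0.005, which bounds the possible gap.
max_rounding_gap = 0.005 * total_respondents

print(total_respondents, total_hours, round(implied_responses))
assert abs(implied_responses - reported_responses) <= max_rounding_gap
```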

12B. Provide estimates of annualized cost to respondents for the hour burdens for collections of information, identifying and using appropriate wage rate categories.

The estimates of respondent cost are based on the burden estimates and use the U.S. Department of Labor, Bureau of Labor Statistics, May 2020 National Occupational Employment and Wage Estimates.10 Annualized costs were based on the mean hourly wage for each job category. The hourly wage rate used for the State CN director is $48.64 (Occupation Code 11-9030, Education and Child Care Administrator). The hourly wage rate used for the State CN data manager is $47.80 (Occupation Code 15-1240, Database Administrator). The hourly wage rate used for the SFA director, SFA staff, and the school principal or other school administrator is $45.11 (Occupation Code 11‑9039, Other Education Administrator). The hourly wage rate used for the SFA-level data manager is $46.51 (Occupation Code 15-1299, Computer Occupations, All Other). The hourly wage rate used for the food service (cafeteria) manager in schools is $29.33 (Occupation Code 11-9051, Food Service Manager). The estimated annualized cost for the household survey respondent uses the mean hourly wage for All Occupations (Occupation Code 00-0000), $27.07. The total estimated base annualized cost is $595,454.41. An additional 33 percent of the estimated base cost, $196,499.96, must be added to represent fully loaded wages. Thus, the total respondent cost is $791,954.37.
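The fully loaded respondent cost follows from applying the 33 percent loading factor to the base cost. A minimal sketch using Python’s `decimal` module (chosen here to avoid binary floating-point rounding in currency arithmetic) reproduces the reported figures; the function name `fully_loaded` is illustrative, and the per-category base costs themselves come from Appendix J, not from this sketch.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")
LOADING_RATE = Decimal("0.33")  # fully loaded wage factor stated in the text


def fully_loaded(base_cost: Decimal, rate: Decimal = LOADING_RATE):
    """Return (loading, total), with the loading rounded to the cent."""
    loading = (base_cost * rate).quantize(CENT, rounding=ROUND_HALF_UP)
    return loading, base_cost + loading


loading, total = fully_loaded(Decimal("595454.41"))
print(loading, total)  # 196499.96 791954.37
```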

A.13 Estimates of Other Annual Cost Burden

Provide estimates of the total annual cost burden to respondents or record keepers, resulting from the collection of information (do not include the cost of any hour burden shown in questions 12 and 14). The cost estimates should be split into two components: (a) a total capital and start-up cost component annualized over its expected useful life; and (b) a total operation and maintenance and purchase of services component.

Because the household surveys will be conducted virtually in APEC IV, APEC IV will retain some of the APEC III income verification procedures but add an option to use a secure video call to “show” the interviewer the income documentation. Parents and caregivers who choose this option may need to acquire and install the video call software. We estimate that downloading the video call software takes 15 minutes (0.25 hours) per respondent who needs it. There is no additional burden associated with maintaining the software.

We estimate that 75 percent of households (6,594 parents) will choose to provide their income verification documentation through a video call and that 15 percent of these parents (989) will need to download the appropriate software. Therefore, the total annualized burden for acquiring video call technology for APEC IV is estimated to be 247.3 hours (989 parents × 0.25 hours).
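The software-download burden is a short chain of multiplications. The sketch below restates it using only the figures given above; rounding the parent count to a whole number is an assumption about how the 989 figure was derived.

```python
parents_using_video = 6_594     # 75 percent of households, per the estimate above
share_needing_download = 0.15   # share of video-call users without the software
hours_per_download = 15 / 60    # 15 minutes per download

# Round to whole parents (assumed), then convert to hours.
parents_downloading = round(parents_using_video * share_needing_download)
burden_hours = parents_downloading * hours_per_download

# 247.25 hours, which the text reports rounded to 247.3.
print(parents_downloading, burden_hours)
```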

There are no capital/start-up or ongoing operation/maintenance costs associated with this information collection.

A.14 Estimates of Annualized Cost to the Federal Government

Provide estimates of annualized cost to the Federal government. Provide a description of the method used to estimate cost and any other expense that would not have been incurred without this collection of information.

The annualized government costs include the costs associated with the contractor conducting the project and the salary of the assigned FNS project officer. The estimated cost of conducting the study is $9,991,714. An additional 3,000 hours of Federal employee time are assumed (2,500 hours for a GS-13, Step 1 program analyst at $44.15 per hour and 500 hours for a GS-14, Step 1 branch chief at $52.17 per hour for supervisory oversight11): $110,375 + $26,085 = $136,460 (estimated base cost to the Federal government). An additional 33 percent of the estimated base cost ($45,031.80) must be added to represent fully loaded wages, for a total of $181,491.80 in Federal staff costs over the course of the contract. Thus, the total cost to the Federal government across 5 years is $10,173,205.80, or an average of $2,034,641.16 annually.
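The Federal cost estimate adds loaded Federal staff time to the contract value and then averages over the 5-year period. The sketch below reproduces that arithmetic with the `decimal` module; the variable names are illustrative, and the inputs are exactly those stated in the paragraph above.

```python
from decimal import Decimal

# Inputs stated in the text.
contract_cost = Decimal("9991714")
staff = [
    (2_500, Decimal("44.15")),  # GS-13, Step 1 program analyst
    (500, Decimal("52.17")),    # GS-14, Step 1 branch chief
]
loading_rate = Decimal("0.33")
years = 5

base_staff_cost = sum(hours * rate for hours, rate in staff)  # 136,460.00
loaded_staff_cost = base_staff_cost * (1 + loading_rate)      # 181,491.80
total_cost = contract_cost + loaded_staff_cost                # 10,173,205.80
annual_average = total_cost / years                           # 2,034,641.16

print(base_staff_cost, loaded_staff_cost, total_cost, annual_average)
```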

A.15 Explanation of Program Changes or Adjustments

Explain the reasons for any program changes or adjustments reported in Items 13 or 14 of the OMB Form 83-1.

This collection is a reinstatement of a previously approved information collection (APEC III; OMB Number 0584-0530, Discontinued: 10/31/2020) as a result of program changes, and will add 21,013 hours of annual burden and 118,361 responses to OMB’s inventory. This reinstatement reflects more burden hours and more estimated responses than APEC III (13,042 hours and 59,016 responses): an increase of 7,971 burden hours and 59,345 responses. The increase in burden is primarily due to a larger household sample in APEC IV than in APEC III, which is necessary to account for the low response rate to the household survey in APEC III (31 percent). The two sub-studies and the meal observation pilot study also add a small amount of burden (96 hours) to APEC IV. Finally, SFAs and States will be asked to provide meal count data for all of their schools and SFAs, respectively, in an effort to validate a less burdensome method for future APEC studies (see Supporting Statement A2 and A4 for more detail). We have worked to offset the additional burden by thoroughly reviewing and streamlining the data collection instruments. In particular, the SFA director survey and the household survey were heavily edited to remove redundant questions.

A.16 Plans for Tabulation and Publication

For collections of information whose results are planned to be published, outline plans for tabulation and publication.

Many of the tabulations in APEC IV will mirror those in APEC III to ensure comparability in error rates. The key data to be tabulated and published are:

A national estimate of the annual amount of erroneous payments due to certification and aggregation error, based on SY 2023-2024 (Objective 1).

A robust examination of the relationship of student, school, and SFA characteristics to error rates (Objective 2).

The effect of the data collection mode on the responses to the household survey, and whether the USDA’s online application prototype generates a more accurate and complete accounting of household size and income (Objective 3).

Objective 1

Weighted data will be used to derive the national estimates of error rates due to certification and aggregation error. Erroneous payment rates and associated dollar amounts of erroneous payments, including overpayments, underpayments, and gross and net erroneous payments, will be estimated separately for NSLP and SBP as well as combined.
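The relationship among these quantities can be sketched as follows. The sketch assumes the convention commonly used in improper payment reporting: gross erroneous payments are overpayments plus underpayments, and net erroneous payments are overpayments minus underpayments. This section does not spell out the formulas. The class name and all dollar amounts are hypothetical and are not study results.

```python
from dataclasses import dataclass


@dataclass
class ErroneousPayments:
    """Weighted dollar totals for one program (all amounts non-negative)."""
    overpayments: float
    underpayments: float
    total_reimbursements: float

    @property
    def gross(self) -> float:
        # Over- and underpayments both count toward gross error.
        return self.overpayments + self.underpayments

    @property
    def net(self) -> float:
        # Underpayments offset overpayments in the net figure.
        return self.overpayments - self.underpayments

    def rates(self) -> dict:
        t = self.total_reimbursements
        return {
            "overpayment": self.overpayments / t,
            "underpayment": self.underpayments / t,
            "gross": self.gross / t,
            "net": self.net / t,
        }


def combine(*programs: ErroneousPayments) -> ErroneousPayments:
    """Pool program totals (e.g., NSLP and SBP) to produce a combined estimate."""
    return ErroneousPayments(
        overpayments=sum(p.overpayments for p in programs),
        underpayments=sum(p.underpayments for p in programs),
        total_reimbursements=sum(p.total_reimbursements for p in programs),
    )


# Hypothetical inputs for illustration only.
nslp = ErroneousPayments(overpayments=120.0, underpayments=30.0, total_reimbursements=1000.0)
sbp = ErroneousPayments(overpayments=50.0, underpayments=10.0, total_reimbursements=400.0)
both = combine(nslp, sbp)
print(both.rates())
```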

Calculation of Certification Error Rates

Certification error occurs when students are certified for a level of benefits for which they are not eligible (e.g., certified for free meals when they should be certified for reduced-price meals, or vice versa). For non-CEP schools, program eligibility and the associated reimbursement rate are determined separately for each student. In CEP schools, the CEP group’s eligibility is determined jointly, resulting in one reimbursement rate for all the schools in the group.
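For a non-CEP student, the comparison of current and verified status can be sketched as follows. The numeric ordering of benefit levels and the labels "overcertified" and "undercertified" are illustrative choices, not study terminology. The sketch does not cover CEP groups, whose eligibility is determined jointly.

```python
from enum import IntEnum


class Status(IntEnum):
    """Benefit levels ordered from most to least generous (illustrative encoding)."""
    FREE = 0
    REDUCED_PRICE = 1
    PAID = 2


def classify_certification(certified: Status, verified: Status) -> str:
    """Compare a non-CEP student's current status with the independently verified one."""
    if certified == verified:
        return "correct"
    # A smaller value is a more generous level, so certified < verified means the
    # student receives more benefit than the verified status supports.
    return "overcertified" if certified < verified else "undercertified"


print(classify_certification(Status.FREE, Status.REDUCED_PRICE))
print(classify_certification(Status.REDUCED_PRICE, Status.FREE))
```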

For non-CEP schools, once the applicant’s true certification status is independently determined, it will be compared with the current certification status. For CEP schools, we will identify certification errors by independently estimating the difference between the verified ISP and the current ISP that the school and SFA use for reimbursement. Once certification errors are estimated for each meal type, the remaining steps are to (a) calculate the erroneous payment per meal for each student, (b) sum over students to get national-level error estimates, (c) estimate student- or school-level erroneous payments, (d) calibrate the weights, and (e) estimate national erroneous payments.
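A simplified version of steps (a) and (b) for non-CEP students is sketched below. It assumes the per-meal erroneous payment is the reimbursement at the current status minus the reimbursement at the verified status. It also assumes the weights are already calibrated, so step (d) is omitted, and it does not cover the ISP-based calculation for CEP schools. The reimbursement rates and student records are hypothetical, not actual FNS rates or study data.

```python
from dataclasses import dataclass

# Hypothetical per-meal reimbursement rates in dollars; NOT actual FNS rates.
RATES = {"free": 4.00, "reduced_price": 3.60, "paid": 0.40}


@dataclass
class Student:
    certified: str  # status the school currently uses for claiming
    verified: str   # independently determined status
    meals: int      # reimbursable meals served in the reference period
    weight: float   # student analysis weight (assumed already calibrated)


def per_meal_error(s: Student) -> float:
    """Positive means overpayment, negative means underpayment."""
    return RATES[s.certified] - RATES[s.verified]


def weighted_erroneous_payments(students):
    """Sum weighted dollar over- and underpayments across students."""
    over = under = 0.0
    for s in students:
        amount = per_meal_error(s) * s.meals * s.weight
        if amount > 0:
            over += amount
        else:
            under -= amount  # store underpayments as a positive total
    return over, under


sample = [
    Student("free", "reduced_price", meals=10, weight=2.0),
    Student("paid", "free", meals=5, weight=1.0),
    Student("reduced_price", "reduced_price", meals=8, weight=1.5),
]
print(weighted_erroneous_payments(sample))
```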

Calculation of Aggregation Error Rates

Aggregation errors occur in the process of tallying the number of meals served each month by claiming tier and reporting it from the point of sale to the school, from the school to the SFA, from the SFA to the State, and from the State to USDA for reimbursement (we refer to each of these as an “aggregation stage”). For non-CEP schools, each stage can produce meal overcounts or undercounts within each reimbursement tier (i.e., free, reduced-price, or paid), leading to as many as six types of errors at each of the four aggregation stages. For CEP schools, all reimbursable meals are free, so these schools report only the total number of reimbursable meals. As such, they can make only two types of errors (underreporting or overreporting) at each aggregation stage.

We will identify errors that arise when daily meal count totals are not summed correctly at each stage of aggregation. To maintain comparability with prior studies in the APEC series, we will first follow the methodology those studies used. Because the meals for this analysis are reported at the school level, a school-level analysis weight will be derived for each sampled school. Once the errors are identified, we will estimate error rates by applying school weights, estimating errors at each stage of aggregation by (a) estimating school-level errors, (b) estimating SFA-level errors, and (c) estimating State-level errors. To estimate national improper payment rates, we will use the FNS-10 disaggregated data to compare reimbursements made by FNS with reimbursements calculated independently from SFA data. The national estimates based on FNS-10 data will not require weights because they include all SFAs in the nation.
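The check at a single aggregation stage can be sketched as follows. A downstream report (e.g., an SFA's monthly claim) should equal the sum of the upstream counts it aggregates (e.g., its schools' monthly counts). Any difference in a tier is an overcount or an undercount. The function name, the tier keys, and the use of a single "total" tier for CEP units are illustrative assumptions, and the counts are hypothetical.

```python
def stage_errors(upstream_reports, downstream_report):
    """Compare a downstream report with the sum of the reports it aggregates.

    upstream_reports: list of {tier: meals} dicts (e.g., each school's monthly
    counts for one SFA). downstream_report: {tier: meals} as reported at the
    next level. Non-CEP units use "free", "reduced_price", and "paid"; CEP
    units use a single "total" tier. Returns {tier: (kind, difference)} for
    every tier with a discrepancy.
    """
    errors = {}
    for tier, reported in downstream_report.items():
        expected = sum(r.get(tier, 0) for r in upstream_reports)
        diff = reported - expected
        if diff:
            errors[tier] = ("overcount" if diff > 0 else "undercount", diff)
    return errors


# Hypothetical non-CEP SFA with two schools.
schools = [
    {"free": 100, "reduced_price": 20, "paid": 80},
    {"free": 50, "reduced_price": 10, "paid": 40},
]
sfa_claim = {"free": 150, "reduced_price": 28, "paid": 125}
print(stage_errors(schools, sfa_claim))

# Hypothetical CEP school reporting only total reimbursable meals.
print(stage_errors([{"total": 300}], {"total": 300}))
```

The same comparison applies at each of the four stages by changing which reports are treated as upstream and downstream.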

Calculation of Meal Claiming Errors

These errors occur when a meal is incorrectly classified as reimbursable or not, based on whether the meal served meets the specific meal patterns required for NSLP or SBP. This error occurs in school cafeterias at the point of sale, with the unit of analysis being a “meal.” That is, through observation we can determine if a cashier correctly classifies meals, but we will not know the student’s eligibility status. Meal claiming errors are operational errors that do not contribute to improper payments.

To identify meal claiming errors, we will determine whether each tray is reimbursable based on the food items on the tray, the components offered, and whether the recipient was a student or non-student. Errors result when trays are marked reimbursable but are not, or when trays marked as not reimbursable are in fact reimbursable. We will estimate national error rates by applying appropriate school weights and calculating the percentage of trays in error out of all trays. We will calculate these error rates separately for NSLP and SBP.
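The weighted percentage of trays in error can be sketched as follows. This is a minimal illustration that assumes each observed tray carries the weight of the school where it was observed. The record layout is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ObservedTray:
    program: str               # "NSLP" or "SBP"
    marked_reimbursable: bool  # cashier's classification at the point of sale
    is_reimbursable: bool      # determination from items, components, recipient
    school_weight: float       # weight of the school where the tray was observed


def meal_claiming_error_rate(trays, program):
    """Weighted percentage of trays in error for one program."""
    selected = [t for t in trays if t.program == program]
    total = sum(t.school_weight for t in selected)
    if total == 0:
        raise ValueError(f"no weighted trays observed for {program}")
    in_error = sum(
        t.school_weight for t in selected
        if t.marked_reimbursable != t.is_reimbursable
    )
    return 100 * in_error / total


trays = [
    ObservedTray("NSLP", True, True, 1.0),
    ObservedTray("NSLP", True, False, 1.0),   # marked reimbursable but is not
    ObservedTray("NSLP", False, True, 2.0),   # reimbursable but not marked
    ObservedTray("NSLP", False, False, 4.0),
    ObservedTray("SBP", True, True, 3.0),
]
print(meal_claiming_error_rate(trays, "NSLP"), meal_claiming_error_rate(trays, "SBP"))
```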

The analyses for APEC IV will also compare key findings with those of the previous three APEC studies using tests of significance. These key comparisons include certification and non-certification (meal claiming and aggregation) error rates and sources of error (e.g., point of sale, SFA meal counts) for NSLP and SBP separately, as well as improper payment rates by category (i.e., underpayments and overpayments). A regression model will be used to estimate both the effects of procedural changes and the effects of trends on the certification error measures for APEC IV compared with APEC III. These estimates will be used to adjust the data over time to create a consistent series. We will use t-tests to test the significance of differences in certification errors across APEC studies. Non-certification error rates and improper payment rates are computed as means or proportions. Standard survey analysis procedures, such as the SURVEY procedures in SAS (e.g., PROC SURVEYFREQ and PROC SURVEYREG), can be used to conduct adjusted Wald F tests on such outcomes across studies conducted in different years (i.e., APEC I, II, III, and IV).
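A t statistic for the difference between two studies' estimates can be sketched as follows. The sketch assumes the two studies' samples are independent, so the variance of the difference is the sum of the two sampling variances. It also assumes the estimates have already been adjusted for procedural changes, as described above. The estimates and standard errors are hypothetical. Degrees of freedom and p-values depend on the survey design and are not computed here.

```python
import math


def difference_test(est_old, se_old, est_new, se_new):
    """Difference between two independent survey estimates and its t statistic."""
    diff = est_new - est_old
    se_diff = math.sqrt(se_old ** 2 + se_new ** 2)
    return diff, diff / se_diff


# Hypothetical certification error rates and standard errors (not study results).
diff, t_stat = difference_test(est_old=0.10, se_old=0.01, est_new=0.13, se_new=0.01)
print(f"difference = {diff:.3f}, t = {t_stat:.2f}")
```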

Objective 2

The study will also provide a robust examination of the relationship of student/household, school, and SFA characteristics to error rates. FNS will collect information on the administrative and operational structure of SFAs and schools sampled for the study. After the appropriate weights are applied, data will be tabulated to provide descriptive summaries that are representative of SFAs and schools participating in the school meal programs nationally during SY 2023-2024. The relationship of student/household, school, and SFA characteristics to each of the key types and sources of error will be examined. Before the analyses begin, the distribution of program error data at all levels will be examined and outliers will be flagged, with particular attention to unusual contextual factors that may have contributed to the program errors observed on the data collection day. Analyses will be conducted on program errors both with and without outliers.
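This section does not specify how outliers will be flagged. The sketch below uses Tukey's interquartile-range fences purely as an illustrative assumption, then computes a summary both with and without the flagged values. The error rates are hypothetical, and the sketch ignores weights.

```python
import statistics


def flag_outliers(values, k=1.5):
    """Flag values outside Tukey's fences (Q1 - k*IQR, Q3 + k*IQR)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [not (low <= v <= high) for v in values]


def mean_with_and_without(values):
    """Mean of all values, and mean after dropping flagged outliers."""
    flags = flag_outliers(values)
    kept = [v for v, flagged in zip(values, flags) if not flagged]
    return statistics.mean(values), statistics.mean(kept)


# Hypothetical school-level error rates (percent), one unusual day included.
rates = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
print(flag_outliers(rates))
print(mean_with_and_without(rates))
```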

Objective 3

Online Application Sub-Study

The analyses will compare household reporting error rates among households that applied for benefits using (1) a website based on the USDA online application prototype, (2) another online application, or (3) a paper application. We will adjust for covariates related to the propensity for error, such as household demographics, meal eligibility status, school- and SFA-level information, and geography. We will use logistic regression models to estimate the impact of application type (FNS prototype, other online application, or paper application) on household reporting errors while controlling for these covariates. We will compare group 1 with group 2 to measure the effect of the online form’s organization, and group 2 with group 3 to measure the effect of the online mode given the same form organization. We will also compare group 1 with group 3 to measure the effect of using the online application prototype versus a traditional paper application.
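Once such a model is fitted, the three planned comparisons can be read off the application-type coefficients as adjusted odds ratios. The sketch assumes paper application is the reference category, and the coefficient values are hypothetical. It shows point estimates only. Standard errors for the prototype versus other-online contrast would also need the coefficients' covariance.

```python
import math

# Hypothetical coefficients (log-odds of a reporting error) for application type
# from a fitted logistic regression with paper application as the reference.
COEFFICIENTS = {"prototype": -0.40, "other_online": -0.10, "paper": 0.0}

CONTRASTS = [
    ("prototype", "other_online", "organization of the online form"),
    ("other_online", "paper", "online mode, same form organization"),
    ("prototype", "paper", "prototype versus traditional paper"),
]


def odds_ratio(group_a, group_b):
    """Adjusted odds ratio of a reporting error, group_a relative to group_b."""
    return math.exp(COEFFICIENTS[group_a] - COEFFICIENTS[group_b])


for a, b, effect in CONTRASTS:
    print(f"{a} vs {b} ({effect}): OR = {odds_ratio(a, b):.2f}")
```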

In addition to estimating the impact of application type on the incidence of reporting error, we will examine selected responses on the FNS online prototype application in comparison with the household survey. Specifically, we will use logistic regression to estimate the impact of the FNS online application on the probability of an inaccuracy in each of the following components: household size, overall household income, number of household members with income, number of types of income, and individual income amounts.
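Each component listed above becomes a binary outcome for its own logistic regression. The flags can be derived by comparing a household's application with its survey responses, as sketched below. The record layout is hypothetical. Exact equality is used as the test for inaccuracy, which is an assumption; the study may apply tolerances, particularly for income.

```python
from dataclasses import dataclass


@dataclass
class HouseholdReport:
    """Household information from one source (hypothetical layout)."""
    size: int
    income_types: frozenset  # e.g., frozenset({"wages", "child_support"})
    member_incomes: dict     # member identifier -> income amount

    @property
    def total_income(self) -> float:
        return sum(self.member_incomes.values())

    @property
    def members_with_income(self) -> int:
        return sum(1 for amount in self.member_incomes.values() if amount > 0)


def inaccuracy_flags(application: HouseholdReport, survey: HouseholdReport) -> dict:
    """Binary outcomes (True = inaccuracy) comparing the application with the survey."""
    members = set(application.member_incomes) | set(survey.member_incomes)
    return {
        "household_size": application.size != survey.size,
        "total_income": application.total_income != survey.total_income,
        "members_with_income": application.members_with_income != survey.members_with_income,
        "number_of_income_types": len(application.income_types) != len(survey.income_types),
        "individual_incomes": any(
            application.member_incomes.get(m, 0) != survey.member_incomes.get(m, 0)
            for m in members
        ),
    }


app = HouseholdReport(4, frozenset({"wages"}), {"adult1": 30000, "adult2": 0})
svy = HouseholdReport(4, frozenset({"wages", "child_support"}), {"adult1": 30000, "adult2": 5000})
print(inaccuracy_flags(app, svy))
```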

Household Survey Mode Effect Sub-Study

The analyses will determine whether responses differ by mode (i.e., in-person versus virtual), with an emphasis on the variables that affect school meal eligibility (income and household size). We will also compare household reporting error rates for the same households using the two data points (virtual and in-person surveys). We will test for both statistically significant and substantively meaningful differences in mean household size and income and in the percentage of the sample in each meal eligibility status.
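Because the same households respond in both modes, a paired comparison is a natural way to test for a mode effect on a quantity such as household size. The sketch below is unweighted and ignores the complex survey design. The data are hypothetical, and the threshold for a "meaningful" difference is not specified in this section, so it is not included.

```python
import math
import statistics


def paired_difference(virtual, in_person):
    """Mean within-household difference (virtual minus in-person) and paired t statistic."""
    if len(virtual) != len(in_person):
        raise ValueError("each household needs a response in both modes")
    diffs = [v - p for v, p in zip(virtual, in_person)]
    mean_diff = statistics.mean(diffs)
    sd = statistics.stdev(diffs)
    if sd == 0:
        raise ValueError("differences have no variation; t is undefined")
    return mean_diff, mean_diff / (sd / math.sqrt(len(diffs)))


# Hypothetical household sizes reported by the same five households.
virtual = [4, 3, 5, 2, 6]
in_person = [4, 3, 4, 2, 5]
print(paired_difference(virtual, in_person))
```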

Publication

Dissemination of study findings will cover both interim and final results. The final report series, which is intended for publication on the FNS website, will include multiple short volumes, each dedicated to a specific type of error, as well as an executive summary volume presenting all key study results. The shorter volumes are intended to make the findings accessible to a wide audience. In addition to traditional methods and materials for presenting study findings, we will develop data visualizations, infographics, and other visually engaging graphics. Data visualizations will be included in the final report series and/or posted on the FNS website. Following the release of the reports, a high-level summary of study results will be sent via email to SFA study participants and will include a link to the FNS website for the full reports.

A.17 Displaying the OMB Approval Expiration Date

If seeking approval to not display the expiration date for OMB approval of the information collection, explain the reasons that display would be inappropriate.

The expiration date for OMB approval of the information collection will be displayed on all instruments.

A.18 Exceptions to Certification for Paperwork Reduction Act Submissions

Explain each exception to the certification statement identified in Item 19 of the OMB 83-I “Certification for Paperwork Reduction Act.”

There are no exceptions to the certification statement.

1 Program Information Report (Keydata). U.S. Summary, FY 2020-2021. Food and Nutrition Service (FNS). U.S. Department of Agriculture. https://fns-prod.azureedge.net/sites/default/files/data-files/Keydata%20May%202021.pdf.

2 Ibid.

3 Program Information Report (Keydata). U.S. Summary, FY 2020-2021. Food and Nutrition Service (FNS). U.S. Department of Agriculture. https://fns-prod.azureedge.net/sites/default/files/data-files/Keydata%20May%202021.pdf.

4 PIIA repealed and replaced the Improper Payments Information Act of 2002 (P.L. 107-300), the Improper Payments Elimination and Recovery Act of 2010 (P.L. 111-204), and the Improper Payments Elimination and Recovery Improvement Act (IPERIA) of 2012 (P.L. 112-248).

5 Groves, R., Singer, E., and Corning, A. (2000). “Leverage-saliency theory of survey participation: Description and an illustration.” Public Opinion Quarterly, 64(3):299–308.

6 Singer, E., and Ye, C. (2013). “The use and effectiveness of incentives in surveys.” Annals of the American Academy of Political and Social Science, 645(1):112–141.

7 Groves, R., Fowler, F., Couper, M., Lepkowski, J., Singer, E., and Tourangeau, R. (2009). Survey Methodology. John Wiley & Sons, pp. 205–206.

8 Singer, E. (2002). “The use of incentives to reduce nonresponse in household surveys.” In Groves, R., Dillman, D., Eltinge, J., and Little, R. (Eds.), Survey Nonresponse. New York: Wiley, pp. 163–177.

9 James, J. M., and Bolstein, R. (1992). “Large monetary incentives and their effect on mail survey response rates.” Public Opinion Quarterly, 56:442–453.

10 Occupational Employment and Wage Statistics. May 2020. U.S. Bureau of Labor Statistics. https://www.bls.gov/oes/current/oes_nat.htm#00-0000.