Supporting Statement Part A


Evaluation of the ESSA Title I, Part D, Neglected or Delinquent Programs

OMB: 1875-0281

OMB Information Collection Request

Evaluation of the ESSA Title I, Part D Neglected or Delinquent Programs

U.S. Department of Education

Office of Planning, Evaluation and Policy Development

Policy and Program Studies Service

August 2016


Contents

Supporting Statement for Paperwork Reduction Act Submission

A1. Circumstances Making Collection of Information Necessary

A2. Use of Information

A3. Use of Improved Technology to Reduce Burden

A4. Efforts to Avoid Duplication of Effort

A5. Efforts to Minimize Burden on Small Businesses and Other Small Entities

A6. Consequences of Not Collecting the Data

A7. Special Circumstances Causing Particular Anomalies in Data Collection

A8. Federal Register Announcement and Consultation

A9. Payment or Gift to Respondents

A10. Assurance of Confidentiality

A11. Sensitive Questions

A12. Estimated Response Burden

A13. Estimate of Annualized Cost for Data Collection Activities

A14. Estimate of Annualized Cost to Federal Government

A15. Reasons for Changes in Estimated Burden

A16. Plans for Tabulation and Publication

A17. Display of Expiration Date for OMB Approval

A18. Exceptions to Certification for Paperwork Reduction Act Submissions

Introduction

The Policy and Program Studies Service (PPSS) of the U.S. Department of Education (ED) requests Office of Management and Budget (OMB) clearance for data collection activities associated with the Evaluation of the ESSA Title I, Part D Neglected or Delinquent Programs. The purpose of this study is for ED to gain a better understanding of how state agencies, school districts, and juvenile justice and child welfare facilities implement education and transition programs for youth who are neglected or delinquent (N or D).

This study will address four primary research questions:

1. What types of services and strategies do Title I, Part D funds support for children and youth in juvenile justice and child welfare settings?

2. How do juvenile justice facilities and child welfare agencies assist students in transitioning back to districts and schools, including those outside their jurisdictions?

3. How do state correctional facilities plan and implement institutionwide Part D projects?

4. How do grantees assess the educational outcomes of students participating in Part D–funded educational programs?

To answer these research questions, the evaluation will use two primary types of data collection and analysis:

Surveys of all state coordinators of Title I, Part D programs and of a nationally representative sample of local coordinators and their partners (juvenile justice and child welfare facility coordinators)

Case studies in five states, which include administrative document review and interviews with school districts, correctional institutions, and child welfare facilities about how they are implementing their grants and subgrants and how they are meeting the needs of the students they serve

Project Overview

Congress enacted the ESEA Title I, Part D Neglected or Delinquent (N or D) Programs1 to address the academic and related needs of youth involved, or at risk of involvement, with the juvenile justice and child welfare systems. The Part D programs were designed to improve education services for children and youth in local and state institutions for youth who are N or D, so that they have the opportunity to meet the same challenging academic standards as their peers. The programs also aim to provide youth who are N or D with the services needed to make a successful transition from out-of-home placement to further schooling and employment. As of the 2013–14 school year, nearly 3,000 state- and locally operated facilities and programs received N or D funding and served more than 380,000 youth. Implementation of N or D programs varies widely across states and districts.

State education agencies (SEAs) receive formula funds based on the number of children in state-operated institutions and on per-pupil educational expenditures. Each state’s allocation is generated by child counts in state juvenile institutions that provide at least 20 hours of instruction per week from nonfederal funds and in adult correctional institutions that provide at least 15 hours of instruction per week.

The Part D program contains two subparts. Under Subpart 1, SEAs must carry out activities to help ensure that eligible youth have opportunities to meet the same college- and career-ready state academic standards that all children are expected to meet under the ESEA. State agencies may operate programs in juvenile and adult correctional facilities, juvenile detention facilities, facilities for neglected youth, and community day programs. In addition, similar to the schoolwide program option under the Title I Grants to Local Educational Agencies program, all juvenile facilities may operate institutionwide education programs in which they combine N or D program funds with other available federal and state funds. This option allows juvenile institutions to serve a larger proportion of their eligible population and to align their programs more closely with other education services to meet participants’ educational and occupational training needs.

Under Subpart 2, SEAs award subgrants to local educational agencies (LEAs) with high numbers or percentages of children and youth in locally operated juvenile correctional facilities, including facilities involved in community day programs. The school districts may then allocate Subpart 2 funding to programs for neglected or delinquent youth or those at risk of juvenile justice system involvement, academic failure, or both.
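The child-count rule described above (children count toward a state’s allocation only if their institution provides at least 20 hours of instruction per week from nonfederal funds for juvenile institutions, or 15 hours per week for adult correctional institutions) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not ED’s allocation method: the `Institution` record, its field names, and the `per_child_amount` input are hypothetical, and the sketch omits the statutory formula that converts per-pupil expenditures into a per-child amount.

```python
from dataclasses import dataclass

# Minimum weekly instruction hours for an institution's children to be counted,
# per the rule described above. For juvenile institutions, enter only the hours
# supported by nonfederal funds.
MIN_WEEKLY_HOURS = {"juvenile": 20, "adult": 15}


@dataclass
class Institution:
    name: str
    kind: str                        # "juvenile" or "adult" (illustrative labels)
    weekly_instruction_hours: float  # hours of instruction provided per week
    children: int                    # children and youth enrolled


def eligible_child_count(institutions):
    """Sum the children in institutions that meet their weekly-hours threshold."""
    return sum(
        inst.children
        for inst in institutions
        if inst.weekly_instruction_hours >= MIN_WEEKLY_HOURS[inst.kind]
    )


def estimated_allocation(institutions, per_child_amount):
    """Hypothetical allocation: eligible child count times a per-child amount.

    per_child_amount stands in for the statutory amount derived from
    per-pupil educational expenditures; it is an input, not computed here.
    """
    return eligible_child_count(institutions) * per_child_amount


if __name__ == "__main__":
    state = [
        Institution("Juvenile facility A", "juvenile", 25, 100),
        Institution("Juvenile facility B", "juvenile", 18, 50),  # below 20 hours
        Institution("Adult facility C", "adult", 15, 200),
    ]
    print(eligible_child_count(state))           # 300
    print(estimated_allocation(state, 1_000.0))  # 300000.0
```

Facility B is excluded because it falls short of the 20-hour threshold, so only 300 of the 350 children count toward the allocation.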

Supporting Statement for Paperwork Reduction Act Submission

Justification (Part A)

A1. Circumstances Making Collection of Information Necessary

Access to high-quality education has a critical impact on life outcomes, from early childhood to adulthood. Research has consistently demonstrated that children “who receive quality education services, meet age-appropriate education milestones, and earn high school and postsecondary school diplomas have significantly brighter outcomes as adults” (p. 5).1 Youth involved in the child welfare and juvenile justice systems are less likely than their peers outside these systems to receive a high-quality education.2 In 2012, more than 57,000 youth were housed in juvenile secure care facilities in the United States.3 Although this placement level is the lowest in 40 years, it still represents a substantial population at risk for academic failure, recidivism, and sustained poverty. The nearly 56,000 youth in congregate care in the child welfare system4 are similarly vulnerable and face many of the same challenges.

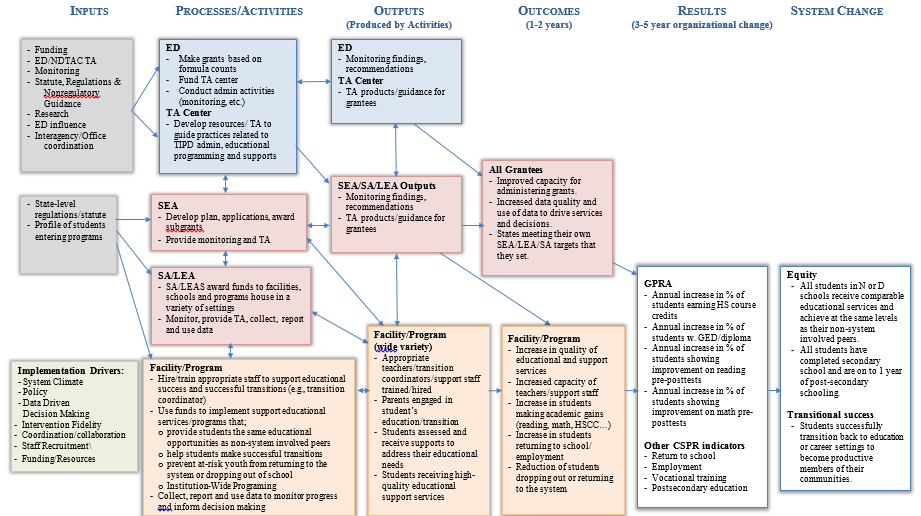

ED, in partnership with the National Technical Assistance Center for the Education of Neglected or Delinquent Children and Youth (NDTAC), developed a comprehensive Title I, Part D Neglected or Delinquent Program (TIPD) logic model that portrays the relationships among program goals, objectives, activities, and outcomes (see Exhibit 1). The logic model also provides guidance to state and local coordinators on resource and program planning, data collection, continuous quality improvement, and expected federal and youth outcomes. Despite the logic model, understanding of how the TIPD program is implemented has historically been limited to annual data collections (e.g., the Annual Child Count, CSPR/EDFacts) and periodic monitoring of state and local agencies and programs. Although these sources provide evidence of program scope and compliance, they do not capture the full nature of TIPD planning and implementation.

Exhibit 1. Title I, Part D Neglected or Delinquent Program Logic Model

Conceptual Approach

The study design incorporates a conceptual framework drawn from the field of implementation science.5 The framework comprises three phases that address the study questions and help determine the extent to which TIPD implementation aligns with the practices outlined in the logic model. See Exhibit 2 for an overview of the study phases.

Exhibit 2. Evaluation of Title I, Part D Neglected or Delinquent Programs: Study Design Overview [figure: TIPD study phases, implementation, and study questions]

The study will collect data from multiple sources on how TIPD programs are implemented and use those data to answer the four research questions listed earlier. Each overarching question also has more specific subquestions. Exhibit 3 lists the study questions and the study components intended to address each.

Exhibit 3. Detailed Study Questions

| Study Question | Surveys | Case Studies: Admin Docs | Case Studies: Interviews |
| 1. What types of services and strategies do Title I, Part D funds support for children and youth in juvenile justice and child welfare settings? | ✓ | ✓ | ✓ |
| 2. How do juvenile justice facilities and child welfare agencies assist students in transitioning back to schools, including those outside their jurisdictions? | ✓ | ✓ | ✓ |
| 3. How do state correctional facilities plan and implement institutionwide Part D projects? | ✓ | ✓ | ✓ |
| 4. How do grantees assess the educational outcomes of students participating in Part D–funded educational programs? | ✓ | ✓ | ✓ |

A2. Use of Information

ED will use the information to produce and disseminate a report detailing how state agencies, school districts, and juvenile justice and child welfare facilities implement education and transition programs for youth who are neglected or delinquent (N or D). The last evaluation of Title I, Part D was completed in 2000. ED needs to learn how programs are meeting the educational needs of these vulnerable populations. The findings will also help identify programs and practices that can be replicated to achieve desired youth outcomes.

A3. Use of Improved Technology to Reduce Burden

The recruitment and data collection plans for this project reflect sensitivity to issues of efficiency and respondent burden. The study team will use a variety of information technologies to maximize the efficiency and completeness of the information gathered for this study and to minimize the burden on respondents at the state and local levels:

When possible, data will be drawn from ED and state websites, EDFacts, and other Web‑based sources. For example, before case study data collection begins, the team will compile comprehensive information about each state, including demographics, subgrantee types and characteristics, N or D program strategies and services, and the number and demographics of children and youth who participate in Part D–funded programs.

State and local coordinator surveys will be administered through a Web‑based platform to streamline the response process.

A4. Efforts to Avoid Duplication of Effort

There are no other federal studies of the TIPD program. Whenever possible, the study team will use existing data, including EDFacts, the Annual Child Count, Consolidated State Performance Reports (CSPRs), and federal monitoring reports. This will reduce the number of questions asked in the case study interviews, thus limiting respondent burden and minimizing duplication of previous data collection efforts and information.

A5. Efforts to Minimize Burden on Small Businesses and Other Small Entities

No small businesses or other small entities will be involved in this project.

A6. Consequences of Not Collecting the Data

Failure to collect the data proposed through this study would prevent ED from gaining insight into how administrators of state and local N or D programs plan for and implement the programs, the challenges they face, and the role administrators play in the process. No evaluation of the program has been conducted in 16 years. Without this information, ED will not be able to describe the intersection of federal, state, and local policy and programs for the N or D populations, identify practices that could be replicated, or provide valuable technical assistance to state and local agencies.

A7. Special Circumstances Causing Particular Anomalies in Data Collection

None of the special circumstances listed apply to this data collection.

A8. Federal Register Announcement and Consultation

Federal Register Announcement. A 60-day notice to solicit public comments was published in the Federal Register on May 12, 2016 (Volume 81, No. 92, p. 29552).2 ED did not receive any relevant public comments.

Consultations Outside the Agency. A technical working group (TWG) of researchers and former state officials was convened in February 2016 as part of this project to provide input on the data collection instruments developed for this study. Exhibit 4 lists the TWG members and their affiliations.

Exhibit 4. TWG Members

| Name | Title | Affiliation |
| Candace Mulcahy | Associate Professor | Binghamton University Graduate School of Education |
| Anthony Petrosino | Senior Research Associate | WestEd |
| Cherie Townsend | Consultant, Former Executive Director | The Moss Group; Texas Youth Commission (Former) |
| Darryl Washington | Founder and Director; Former Title I, Part D State Coordinator | Vision Makers, LLC; Alabama Department of Education (Former) |
| Lois Weinberg | Professor of Special Education and Counseling | California State University, Los Angeles |

A9. Payment or Gift to Respondents

Respondents will not receive a payment or a gift as a result of their participation in this project.

A10. Assurance of Confidentiality

The study team is committed to maintaining the anonymity and security of its records. Project staff have extensive experience collecting information and maintaining the confidentiality, security, and integrity of interview data. All members of the study team have completed certification training on the protection of human subjects in research. This training addresses the importance of the confidentiality assurances given to respondents and the sensitive nature of handling data. The team has also obtained approval for this study from the Institutional Review Board (IRB) at American Institutes for Research (AIR), ensuring that the data collection complies with professional standards and government regulations designed to safeguard research participants.

Confidentiality Assurance Statement: Responses to this data collection will be used only for statistical purposes. The reports prepared for this study will summarize findings across the sample and will not associate responses with a specific individual. We will not provide information that identifies you to anyone outside the study team, except as required by law.

The following data protection procedures are in place:

The study team will protect the identities of the individuals from whom it collects data and will use the data for research purposes only. Respondents’ names will be used for data collection purposes only and will be disassociated from the data prior to analysis.

Although this study does not collect sensitive information (the only data collected directly from case study participants concern state and local policies and practices rather than individual people), a member of the research team will explain to participants what will be discussed, how the data will be used and stored, and how their confidentiality will be maintained. Participants will be told that they can stop participating at any time. The study’s goals, data collection activities, participation risks and benefits, and uses of the data will be explained at the start of case study data collection activities. This consent information will also be included in the Web survey programming and sent with any paper questionnaires that are mailed.

All electronic data will be protected using several methods. The contractors’ internal networks are protected from unauthorized access by firewalls and intrusion detection and prevention systems. Access to computer systems is password protected, and network passwords must be changed regularly and conform to the contractors’ strong password policies. The networks are also configured so that each user has a tailored set of rights, granted by the network administrator, to files approved for access and stored on the local area network. Access to all electronic data files and workbooks associated with this study is limited to researchers on the case study data collection and analysis team.
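One common way to carry out the procedure above of disassociating respondents’ names from the data before analysis is to replace each name with a study ID and keep the name-to-ID crosswalk in a separate, access-restricted file. The sketch below is a hypothetical illustration, not a description of the study team’s actual procedures: it assumes records are Python dictionaries with a `name` field, and the function name and `R0001`-style ID format are invented for the example.

```python
def deidentify(records, name_field="name", prefix="R"):
    """Replace respondent names with sequential study IDs (hypothetical scheme).

    Returns (deidentified_records, crosswalk). The crosswalk maps each study ID
    back to a name and should be stored apart from the analysis file.
    The input records are not modified.
    """
    ids_by_name = {}
    cleaned = []
    for record in records:
        name = record[name_field]
        if name not in ids_by_name:
            # Assign IDs in order of first appearance: R0001, R0002, ...
            ids_by_name[name] = f"{prefix}{len(ids_by_name) + 1:04d}"
        # Copy every field except the name, then attach the study ID.
        row = {key: value for key, value in record.items() if key != name_field}
        row["study_id"] = ids_by_name[name]
        cleaned.append(row)
    crosswalk = {study_id: name for name, study_id in ids_by_name.items()}
    return cleaned, crosswalk


if __name__ == "__main__":
    interviews = [
        {"name": "Respondent One", "role": "LEA coordinator", "topic": "transition services"},
        {"name": "Respondent Two", "role": "Facility coordinator", "topic": "data systems"},
        {"name": "Respondent One", "role": "LEA coordinator", "topic": "follow-up"},
    ]
    data, key = deidentify(interviews)
    print([row["study_id"] for row in data])  # ['R0001', 'R0002', 'R0001']
```

Because the same person always receives the same ID, analysts can still link a respondent’s multiple records without seeing the name.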

A11. Sensitive Questions

No questions of a sensitive nature are included in this study.

A12. Estimated Response Burden

The total hour burden for the project’s data collection is estimated at 1,586 hours, or 528.67 hours annually over the three-year clearance period. Based on participants’ average hourly wages, the total estimated cost of this burden is $68,029.19, or $22,676.40 annually over the three-year clearance period. Exhibit A.1 summarizes the estimated respondent burden for each data collection activity.

Exhibit A.1. Summary of Estimated Response Burden

| Data Collection Activity | Respondent | Total Sample Size | Estimated Response Rate | Number of Respondents | Time Estimate (in hours) | Total Hour Burden | Hourly Rate | Estimated Monetary Cost of Burden |
| Compile sample of local coordinators | State coordinators | 160 | 100% | 160 | 0.5 | 80 | $44.23 | $3,538.40 |
| Nationally representative survey | State Education Agency (SEA) coordinators | 52 | 85% | 44 | 0.5 | 22 | $44.23 | $973.06 |
| | State Agency (SA) coordinators | 108 | 85% | 92 | 1 | 92 | $44.23 | $4,069.16 |
| | Local Education Agency (LEA) coordinators | 466 | 85% | 396 | 0.5 | 198 | $39.46 | $7,813.08 |
| | Local Facility Program (LFP) coordinators | 934 | 85% | 794 | 1 | 794 | $39.46 | $31,331.24 |
| Recruitment for site visits | SEAs | 5 | 100% | 5 | 4 | 200 | $58.99 | $11,798.00 |
| | SAs | 10 | 100% | 10 | 2 | 20 | $58.99 | $884.60 |
| | School districts | 10 | 100% | 10 | 2 | 20 | $44.23 | $884.60 |
| | Correctional institutions | 10 | 100% | 10 | 2 | 20 | $43.36 | $867.20 |
| | Child welfare facilities | 10 | 100% | 10 | 2 | 20 | $43.36 | $867.20 |
| Case study site visits | State N or D coordinator | 5 | 100% | 5 | 2 | 10 | $44.23 | $442.30 |
| | State Title I coordinator | 5 | 100% | 5 | 1 | 5 | $44.23 | $221.15 |
| | SEA administrator (instruction) | 5 | 100% | 5 | 1 | 5 | $58.99 | $294.95 |
| | SEA administrator (data) | 5 | 100% | 5 | 1 | 5 | $58.99 | $294.95 |
| | State Dept. of Social Services (i.e., Child Welfare) administrators | 10 | 100% | 10 | 1 | 10 | $58.99 | $589.90 |
| | State Dept. of Juvenile Justice administrators | 10 | 100% | 10 | 1 | 10 | $58.99 | $589.90 |
| | LEA administrators | 10 | 100% | 10 | 1 | 10 | $39.46 | $394.60 |
| | Local facilities administrators | 10 | 100% | 10 | 1 | 10 | $43.36 | $433.60 |
| | Local child welfare program administrators | 10 | 100% | 10 | 1 | 10 | $43.36 | $433.60 |
| | Instructional staff | 20 | 100% | 20 | 0.75 | 15 | $29.06 | $435.90 |
| | Local facilities staff (e.g., case managers) | 20 | 100% | 20 | 0.75 | 15 | $29.06 | $435.90 |
| | Counselors/social workers | 20 | 100% | 20 | 0.75 | 15 | $29.06 | $435.90 |
| TOTAL | | 1,895 | | 1,661 | 27.75 | 1,586 | | $68,029.19 |
| Annualized Basis | | 632 | | 554 | 9.25 | 528.67 | | $22,676.40 |
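The figures in Exhibit A.1 follow a short chain of arithmetic, sketched below. This is a minimal check, assuming that respondents are rounded to the nearest whole person and costs to the nearest cent (rules inferred from the table, not stated in it); the function names are illustrative, and `LISTED_HOURS` is copied from the Total Hour Burden column. `Decimal` is used so that dollar amounts round exactly.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")
WHOLE = Decimal("1")

# Total Hour Burden column of Exhibit A.1, copied as listed (22 rows).
LISTED_HOURS = [
    80, 22, 92, 198, 794,                         # sample compilation and surveys
    200, 20, 20, 20, 20,                          # recruitment for site visits
    10, 5, 5, 5, 10, 10, 10, 10, 10, 15, 15, 15,  # case study site visits
]


def row_burden(sample_size, response_rate, hours_each, hourly_rate):
    """Return (respondents, total_hours, cost) for one row of Exhibit A.1.

    Assumed rules:
      respondents = sample size x response rate, rounded to a whole person
      total_hours = respondents x hours per respondent
      cost        = total_hours x hourly rate, rounded to the cent
    """
    respondents = int(
        (Decimal(sample_size) * Decimal(response_rate)).quantize(WHOLE, ROUND_HALF_UP)
    )
    total_hours = respondents * Decimal(hours_each)
    cost = (total_hours * Decimal(hourly_rate)).quantize(CENT, ROUND_HALF_UP)
    return respondents, total_hours, cost


def annualize(total, years=3, places=CENT):
    """Spread a total evenly over the three-year clearance period."""
    return (Decimal(total) / years).quantize(places, ROUND_HALF_UP)


if __name__ == "__main__":
    # Survey of LFP coordinators: 934 sampled, 85% response, 1 hour, $39.46/hour
    print(row_burden(934, "0.85", "1", "39.46"))
    total_hours = sum(LISTED_HOURS)
    print(total_hours, annualize(total_hours))  # 1586 528.67
    print(annualize("68029.19"))                # 22676.40
```

Applied to the survey rows, `row_burden` reproduces the listed values (for example, 934 LFP coordinators at an 85 percent response rate gives 794 respondents, 794 hours, and $31,331.24). Summing the listed hour column gives 1,586 hours, and dividing each total by three gives the annualized row.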

A13. Estimate of Annualized Cost for Data Collection Activities

No additional annualized costs for data collection activities are associated with this data collection beyond the hour burden estimated in item A12.

A14. Estimate of Annualized Cost to Federal Government

The estimated annualized cost of the study to the federal government is $582,974. This estimate is based on the total contract cost of $1,311,691, amortized over a 27-month performance period. It includes costs already invoiced and budgeted future costs that will be charged to the government, including data collection, analysis, and report preparation.
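The annualized figure is the total contract cost prorated to a 12-month year. A minimal check, assuming straight-line amortization over the performance period (the function name is illustrative):

```python
def annualized_cost(total_cost, performance_months):
    """Prorate a total contract cost to a 12-month year, assuming costs
    are spread evenly across the performance period."""
    return total_cost * 12 / performance_months


if __name__ == "__main__":
    # Total contract cost and performance period from the paragraph above
    print(round(annualized_cost(1_311_691, 27)))  # 582974
```

Here $1,311,691 × 12 / 27 ≈ $582,973.78, which rounds to the $582,974 reported above.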

A15. Reasons for Changes in Estimated Burden

This is a new data collection; thus, no program changes or adjustments are reported.

A16. Plans for Tabulation and Publication

Data collection for the Evaluation of the ESSA Title I, Part D Neglected or Delinquent Programs will begin in October 2016 and conclude in May 2017. During this period, members of the study team will travel to selected sites to conduct interviews. The study team will ensure the accuracy of the data and analyze them as described in the analytic approach section of this submission. Data analysis will begin shortly after data collection starts, once interview transcripts and observation notes are ready for coding. The contractor will report findings to ED in a final report. Exhibit A.2 summarizes the timeline for data collection activities and dissemination.

Exhibit A.2. Timeline for Data Collection Activities and Reporting

| Activity or Deliverable | Date |
| Notify selected respondents | October 2016 |
| Begin survey data collection | October 2016 |
| Begin case study data collection | February 2017 |
| Complete survey data collection | May 2017 |
| Complete case study data collection | May 2017 |
| Data tables | June 2017 |
| Interview transcripts | June 2017 |
| Final report | November 2017 |

A17. Display of Expiration Date for OMB Approval

All data collection instruments will display the OMB approval expiration date.

A18. Exceptions to Certification for Paperwork Reduction Act Submissions

No exceptions to the certification statement identified in Item 19, “Certification for Paperwork Reduction Act Submissions,” of OMB Form 83-I are requested.

References

Footnotes

1 The Title I, Part D, Subpart 1 State Agency program was first authorized by P.L. 89-750, the Elementary and Secondary Education Amendments of 1966. The Subpart 2 local educational agency program took its present form with the Improving America’s Schools Act of 1994.

2 This will be updated when available.

Endnotes

1 Leone, P., & Weinberg, L. (2012). Addressing the Unmet Educational Needs of Children and Youth Involved with the Juvenile Justice and Child Welfare System (Second Edition). Washington, DC: Center for Juvenile Justice Reform. Retrieved from http://cjjr.georgetown.edu/wp-content/uploads/15/03/EducationalNeedsofChildrenandYouth_May2010.pdf

2 Leone, P., & Weinberg, L. (2012). See endnote 1.

3 Hockenberry, S., Sickmund, M., & Sladky, A. (2015). Juvenile Residential Facility Census, 2012: Selected Findings. Juvenile Offenders and Victims: National Report Series Bulletin. Washington, DC: Office of Juvenile Justice and Delinquency Prevention. Retrieved from http://www.ojjdp.gov/pubs/247207.pdf

4 U.S. Department of Health and Human Services, Administration for Children and Families, Children’s Bureau. (2015). A National Look at the Use of Congregate Care in Child Welfare. Washington, DC: Author. Retrieved from http://www.acf.hhs.gov/sites/default/files/cb/cbcongregatecare_brief.pdf

5 Fixsen, D. L., Naoom, S. F., Blase, K. A., Friedman, R. M., & Wallace, F. (2005). Implementation Research: A Synthesis of the Literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network (FMHI Publication #231).
