PRA Supporting Statement A - SULI LFS - Final Clean


Science Undergraduate Laboratory Internship (SULI) Long-term Follow-up Study


Supporting Statement for Science Undergraduate Laboratory Internship (SULI) Long-term Follow-up Study

OMB No. 1910-XXXX

Form DOE-F 413.42 / 413.43, Survey of Former SULI Program Participants and Applicants

July 2025

U.S. Department of Energy, Washington, DC 20585

Table of Contents

Introduction

A.1. Legal Justification

A.2. Needs and Uses of Data

A.3. Use of Technology

A.4. Efforts to Identify Duplication

A.5. Provisions for Reducing Burden on Small Businesses

A.6. Consequences of Less-Frequent Reporting

A.7. Compliance with 5 CFR 1320.5

A.8. Summary of Consultations Outside of the Agency

A.9. Payments or Gifts to Respondents

A.10. Provisions for Protection of Information

A.11. Justification for Sensitive Questions

A.12A. Estimate of Respondent Burden Hours

A.12B. Estimate of Annual Cost to Respondent for Burden Hours

A.13. Other Estimated Annual Cost to Respondents

A.14. Annual Cost to the Federal Government

A.15. Reasons for Changes in Burden

A.16. Collection, Tabulation, and Publication Plans

A.17. OMB Number and Expiration Date

A.18. Certification Statement

Introduction

Provide a brief introduction of the Information Collection Request. Include the purpose of this collection, note the publication of the 60-Day Federal Register Notice, and provide the list of forms within this collection.

The Science Undergraduate Laboratory Internships (SULI) program is a federally funded research internship that encourages undergraduate students and recent graduates to pursue science, technology, engineering, and mathematics (STEM) careers by providing research experiences at Department of Energy (DOE) laboratories. Selected students are appointed as interns at one of 17 participating DOE laboratories/facilities, where they perform research under the guidance of laboratory staff scientists or engineers on projects supporting the DOE mission. The SULI program is sponsored and managed by the DOE Office of Science, Office of Workforce Development for Teachers and Scientists (WDTS), in collaboration with the DOE laboratories/facilities.

This study is a long-term follow-up evaluation of the SULI program conducted by the Oak Ridge Institute for Science and Education (ORISE) on behalf of DOE/WDTS. The purpose of the study is to answer key questions about the long-term impact of SULI on participants and to ensure that the study’s rigor and the information it provides are comparable to those of studies of national peer programs, such as those conducted by the National Science Foundation (NSF).

Post-program surveys from SULI and other DOE WDTS programs have consistently measured immediate gains in knowledge and skills, as well as satisfaction with the experience. However, a well-designed longitudinal, long-term follow-up study that tracks SULI cohorts through their career trajectories is needed to document long-term outcomes and to identify valuable avenues for continuous program improvement.

The collection instruments that will be used are DOE F 413.42 for alumni of the SULI program and DOE F 413.43 for the comparison cohort (non-participants in the SULI program), which are included as part of the Information Collection Request Package.

A.1. Legal Justification

Explain the circumstances that make the collection of information necessary. Identify any legal or administrative requirements that necessitate the collection. Attach a copy of the appropriate section of each statute and regulation mandating or authorizing the information collection.

This evaluation study will answer questions concerning long-term impact on SULI participants as compared to their peers who did not participate in the SULI program. SULI is a mentoring program established pursuant to 42 U.S. Code, Subchapter XIII, §7381r, Subsection (a), (“Department of Energy Science Education Enhancement Act of 1991” - Public Law 101-510), which requires the creation of programs to recruit and provide mentors for students who are interested in careers in science, engineering, and mathematics. This data collection and evaluation study are required under 42 U.S. Code, Subchapter XIII, §7381r, Subsection (c), which mandates the use of metrics to evaluate the success of programs established under Subsection (a).

A.2. Needs and Uses of Data

Indicate how, by whom, and for what purpose the information is to be used. Except for a new collection, indicate the actual use the agency has made of the information received from the current collection.

The information obtained from this data collection will be used to inform the WDTS program of outcomes for former SULI participants. Its practical utility is that it will allow the federal government to evaluate and improve the SULI program. The study is designed specifically to answer a set of evaluation questions intended to help investigators understand (1) key educational, career, and other outcomes for SULI participants and how these outcomes relate to participants’ preparation, personal background, and prior experiences; (2) how key outcomes may differ for SULI participants compared with a sample of non-participants drawn from among SULI applicants; and (3) how key outcomes for SULI participants compare with a national benchmark of U.S. scientists and engineers drawn from the National Survey of College Graduates (NSCG). Data will be collected via an online survey using the Novi survey platform, a commercial off-the-shelf survey tool.

Expectations and anecdotal evidence suggest that participants in research internship programs are more likely to complete science and engineering (S&E) degrees, more likely to pursue graduate education in S&E, and ultimately more likely to work in S&E occupations. However, these program benefits need to be confirmed against appropriate comparison groups: similar applicants who did not participate in SULI and/or other national data sources on scientists and engineers that will allow SULI program outcomes to be placed in a broader context. More important still, confirming that these participants contribute to the DOE headquarters and national laboratory workforce, or continue to engage in DOE-related research through industry or academic employment in the years following their participation, would provide a new sense of urgency and excitement about SULI and similar programs. It will also provide the information the program sponsor needs to conduct ongoing monitoring and improvement activities.

Data collection is planned to commence in FY 2027 and will proceed in three waves. There are two reasons to reach out to individuals after the initial contact attempt: (1) to make first contact with individuals whose available email addresses failed; and (2) to solicit responses from individuals who received, but did not respond to, the initial invitation. We anticipate that certain cases will require more effort to contact and persuade to respond, such as SULI non-participants and individuals who had no email address, or only a school or employer address, at the time of participation. The study’s reliability depends on having a representative sample that includes a fair proportion of women and individuals from backgrounds underrepresented in STEM. We will therefore focus our contact interventions on these harder-to-reach cases by increasing both our efforts to locate them and the incentive values offered.

First contact will be attempted through a rigorous contact protocol. The number of failed contacts will not be known until the pre-invitation notice goes out. Evaluators will gather “bounce-back” email notifications, document which email addresses were unreachable, and then exhaust all available email addresses in an effort to reach each individual. If an individual cannot be reached at any of these addresses, we will provide their information to a contact tracing service in an attempt to retrieve a more recent email address. If this fails, we will contact them by phone, using a standard phone script to solicit their participation in the survey. Similarly, after the first wave, evaluators will try second and third email addresses for non-respondents. Exhausting all contact methods and giving each potential respondent at least two weeks to receive and respond to the invitation will constitute the first wave of survey administration. This approach will help us not only secure as many responses as possible but also reduce a source of non-response bias, since we expect those with usable email addresses to differ from those without (for example, in degree advancement at the time of last contact).
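The escalation order described above (every known email address, then a contact tracing service, then a scripted phone call) can be sketched as a small decision function. This is a minimal illustration under stated assumptions, not ORISE’s actual tooling: the `Invitee` record, the `send_email` and `trace_email` callbacks, and the order in which stored addresses are tried are all hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Invitee:
    """Hypothetical contact record for one potential respondent."""
    invitee_id: str
    emails: List[str]            # all known addresses, in the order they will be tried
    phone: Optional[str] = None


def first_contact(
    invitee: Invitee,
    send_email: Callable[[str], bool],                # hypothetical: False means a bounce-back
    trace_email: Callable[[Invitee], Optional[str]],  # hypothetical tracing-service lookup
) -> str:
    """Return the channel that reached the invitee, following the escalation order."""
    # Step 1: exhaust every email address already on file.
    for address in invitee.emails:
        if send_email(address):
            return f"email:{address}"
    # Step 2: ask a contact tracing service for a more recent address.
    traced = trace_email(invitee)
    if traced is not None and send_email(traced):
        return f"traced_email:{traced}"
    # Step 3: fall back to a scripted phone invitation if a number is on file.
    if invitee.phone is not None:
        return f"phone:{invitee.phone}"
    return "unreachable"


if __name__ == "__main__":
    bounced = {"old@school.example.edu"}
    send = lambda address: address not in bounced  # simulated mail server
    person = Invitee("A-001", ["old@school.example.edu"], phone="555-0100")
    print(first_contact(person, send, lambda inv: None))  # no traced address available
```

Returning the channel, rather than only a success flag, makes it easy to tabulate how many invitees were reached by each method once the pre-invitation notice has gone out.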

Once the first wave is complete, we will shift our attention to soliciting responses from non-respondents (both SULI alumni and the comparison group of non-participants) in two additional waves. We will use two research-backed strategies: responsive design, which modifies the data collection plan based on monitoring of incoming data, and a “prevention” approach in which we attempt to convert non-respondents into respondents. Evaluators will carefully track which individuals have completed the survey and which have not.

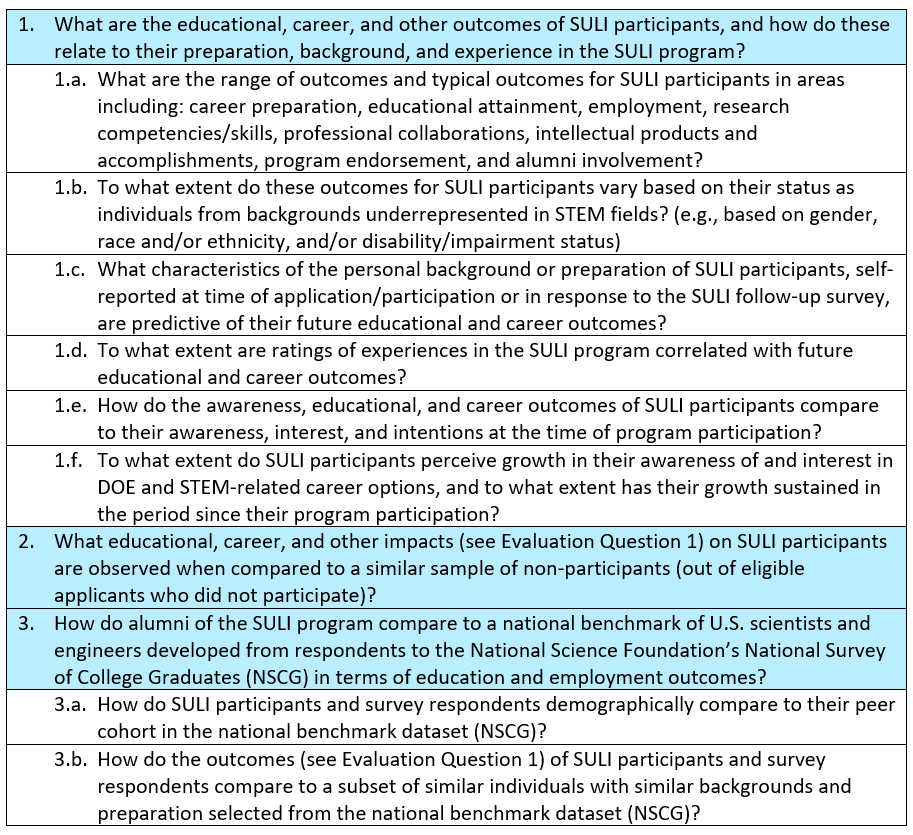

Each Evaluation Question and its related sub-questions are summarized in Table A1.

Table A1. Summary of Evaluation Questions

A.3. Use of Technology

Describe whether, and to what extent, the collection of information involves the use of automated, electronic, mechanical, or other technological collection techniques or other forms of information technology, e.g., permitting electronic submission of responses.

The Novi survey platform, a commercial off-the-shelf survey tool, will be used for survey data collection. Although some hard-to-reach potential respondents may be contacted by phone to invite their participation, collection of survey responses will be 100% electronic. Every individual invited to participate will be sent an email containing a link to the online survey, and responses must be entered using the online Novi webform. ORISE regularly uses this platform to administer a variety of surveys to government agencies, universities, and research centers. Web-based instruments allow rapid data collection and extensive use of skip patterns, so that respondents answer only the questions pertinent to their specific situations. This shortens the survey for many respondents and minimizes the need for follow-up after the survey has been submitted. The self-administered web-based format also speeds data processing and analysis by streamlining back-end steps such as coding and data entry. Responses are transmitted and stored electronically as soon as they are submitted, rather than arriving by mail, which saves transmission time and delivers the data in a format ready for analysis.

Additionally, Novi allows the use of custom, individualized links that can match each survey response to known characteristics of the invitee. Linking responses to these administrative data will supply pre-internship information and support the assessment of non-response. Two separate survey instruments will be used for SULI alumni and for the comparison group of non-participants, to cleanly separate record-keeping and tracking between the two groups.
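Matching a response back to administrative records only requires that each invitation carry a unique token. The sketch below is hypothetical and independent of Novi’s own link features, whose interface is not described here: the base URL, the `t` query parameter, and the record layouts are assumptions for illustration.

```python
import secrets
from typing import Dict, List, Tuple


def assign_tokens(invitee_ids: List[str]) -> Dict[str, str]:
    """Give each invitee a unique, hard-to-guess token for a personalized link."""
    tokens: Dict[str, str] = {}
    used = set()
    for invitee_id in invitee_ids:
        token = secrets.token_urlsafe(16)
        while token in used:  # collisions are extremely unlikely, but guard anyway
            token = secrets.token_urlsafe(16)
        used.add(token)
        tokens[invitee_id] = token
    return tokens


def personalized_link(base_url: str, token: str) -> str:
    """Build the invitation URL; the 't' parameter name is a hypothetical choice."""
    return f"{base_url}?t={token}"


def link_responses(
    responses: List[dict],            # each: {"token": ..., "answers": {...}}
    tokens: Dict[str, str],           # invitee_id -> token
    admin_records: Dict[str, dict],   # invitee_id -> pre-internship data
) -> Tuple[List[dict], List[str]]:
    """Join responses to administrative data; also list who has not responded."""
    token_to_id = {tok: iid for iid, tok in tokens.items()}
    linked = []
    for resp in responses:
        iid = token_to_id.get(resp["token"])
        if iid is None:
            continue  # token not issued for this instrument: ignore
        linked.append({**admin_records[iid], **resp["answers"], "invitee_id": iid})
    responded = {row["invitee_id"] for row in linked}
    nonrespondents = [iid for iid in tokens if iid not in responded]
    return linked, nonrespondents
```

Keeping one token table per instrument (alumni and comparison group) mirrors the separate-instrument design, and the returned non-respondent list is the natural input to later non-response analysis.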

This study will involve extensive tracking, storage, and accessibility of fundamental program and participation data. ORISE has engaged in extensive data tracking and data management for Department of Energy workforce development portfolio evaluations. The use of the Novi platform will also ensure that data collected are stored on ORISE’s secure, encrypted server, helping to ensure the protection of participant data.

A.4. Efforts to Identify Duplication

Describe efforts to identify duplication.

As a part of participation in the SULI program, individuals were asked to complete pre- and post-appointment surveys (covered under OMB Control No. 1910-5202). These existing data are available through the WDTS data system and will be used as short-term measures of SULI participants’ overall impressions of the program, educational and career plans, hands-on research experiences, DOE knowledge and awareness, and research skills.

This information collection does not duplicate previous efforts. Existing data maintained by WDTS will provide baseline characteristics/covariates for both the SULI participants and the comparison group and will also provide updated contact information for any participants who have later entries in the WDTS data system. These data include any variables thought to be related either to admission into the SULI program or to ultimate outcomes, which are particularly important when conducting comparative analyses. Including baseline measures will help ORISE isolate the effect of the program on the outcomes of interest. Based on the information provided to ORISE, the existing data maintained by WDTS include multiple items assessing demographic information, current educational enrollment, field of study, GPA, prior internship experience, and family connections to DOE laboratories.
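The statement does not specify how baseline covariates will enter the comparative analysis. One simple technique, shown here only as an illustration, is subclassification: compare SULI participants with non-participants within each level of a baseline covariate (for example, prior internship experience) and average the within-stratum differences, weighted by stratum size. The record layout and the toy values are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (baseline stratum, participated in SULI?, outcome); the layout is hypothetical.
Record = Tuple[str, bool, float]


def stratified_difference(records: List[Record]) -> float:
    """Size-weighted average of within-stratum (participant - non-participant) mean differences.

    Strata that lack either group are skipped, which is one of several possible choices.
    """
    groups: Dict[str, Dict[bool, List[float]]] = defaultdict(lambda: {True: [], False: []})
    for stratum, participated, outcome in records:
        groups[stratum][participated].append(outcome)
    weighted_sum = 0.0
    total_weight = 0
    for by_group in groups.values():
        participants, others = by_group[True], by_group[False]
        if not participants or not others:
            continue  # no within-stratum comparison is possible
        diff = sum(participants) / len(participants) - sum(others) / len(others)
        weight = len(participants) + len(others)
        weighted_sum += weight * diff
        total_weight += weight
    if total_weight == 0:
        raise ValueError("no stratum contains both participants and non-participants")
    return weighted_sum / total_weight


if __name__ == "__main__":
    # Toy data: outcome 1.0 = working in an S&E occupation (hypothetical values).
    toy = [
        ("prior_internship", True, 1.0), ("prior_internship", True, 1.0),
        ("prior_internship", True, 1.0), ("prior_internship", False, 1.0),
        ("no_prior_internship", True, 0.0), ("no_prior_internship", False, 0.0),
        ("no_prior_internship", False, 0.0), ("no_prior_internship", False, 0.0),
    ]
    print(stratified_difference(toy))
```

In this toy data the raw gap between groups is 0.5 (0.75 versus 0.25), but the adjusted difference is 0.0 once prior internship experience is held fixed, which illustrates why baseline covariates matter for the group comparison.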

The follow-up aspect of this study is entirely new. To date, there has been no in-depth, long-term evaluation of this program, and no existing data sources contain the measures of interest for this population over the long term. This information collection therefore does not duplicate previous research efforts. In designing the data collection activities, we have taken several steps to ensure that this effort does not duplicate ongoing data collection and that no existing data set would address the study questions specific to former SULI participants. We carefully reviewed existing data sets to determine whether any were sufficiently similar, or could be modified, to meet the need for information on long-term outcomes for SULI participants, but none of these sources can adequately meet the data needs of the long-term follow-up study. This is primarily due to two factors: the need for metrics that can be compared across program alumni and non-alumni, and the length of time needed after program completion to capture longer-term outcomes. WDTS has not collected follow-up data from the planned comparison group, which consists of individuals who applied to the SULI program but did not participate. Data from these individuals are necessary to answer Evaluation Question 2, which asks about the longer-term career, educational, and other impacts on SULI participants compared with a similar sample of non-participants. Furthermore, the limited short-term outcomes data WDTS holds on former SULI participants cover only the period immediately following their internship and are therefore not sufficient to answer any of the Evaluation Questions concerning longer-term outcomes. The existing WDTS Highlights package is limited in scope and was never intended to provide metrics for cross-group comparisons.

The Highlights data collection uses open-ended questions meant to provide qualitative, descriptive “highlights” of former participants’ trajectories since participating in SULI. Such data cannot provide a basis for comparison with the study’s comparison group, nor are they precise enough to examine the potential correlations specified in the SULI LFS Evaluation Questions. The questions in the SULI LFS instruments are more specific: most use predefined response options, with free-entry fields only where respondents wish to provide optional additional detail. For example, the instruments ask specifically about the type of employment, the primary tasks of employment, and the employer. Questions at this level of detail were not asked in the Highlights data collection. In addition, the Highlights collection does not include individuals who did not participate in SULI, a group essential to the planned evaluation and group comparison. We are therefore seeking to conduct the SULI LFS under a new collection that will gather comparable, specific data from both groups (SULI alumni and non-alumni).

Through these efforts we will seek to ensure representativeness of our study sample without duplication of data collection.

A.5. Provisions for Reducing Burden on Small Businesses

If the collection of information impacts small businesses or other small entities, describe any methods used to minimize burden.

Respondents in this study will be members of the general public, specific subpopulations or specific professions, not business entities. No impact on small businesses or other small entities is anticipated.

A.6. Consequences of Less-Frequent Reporting

Describe the consequence to Federal program or policy activities if the collection is not conducted or is conducted less frequently, as well as any technical or legal obstacles to reducing burden.

This is a one-time data collection; participants in this evaluation will be surveyed once. While there are no legal obstacles to reducing burden, a lack of the information needed to evaluate the long-term outcomes of participation in the SULI program may impede the federal government’s efforts to improve the program. Without the information collection requested for this evaluation study, it would be difficult to determine the value or impact of SULI for program participants. Failure to collect these data could reduce the effectiveness with which DOE uses program resources to benefit the target population. Careful consideration has been given to how the intended audience should be surveyed for evaluation purposes. We believe the longitudinal evaluation design will provide sufficient data to evaluate the program effectively.

A.7. Compliance with 5 CFR 1320.5

Explain any special circumstances that require the collection to be conducted in a manner inconsistent with OMB guidelines:

(a) requiring respondents to report information to the agency more often than quarterly;

Not applicable. This is a one-time data collection.

(b) requiring respondents to prepare a written response to a collection of information in fewer than 30 days after receipt of it;

Not applicable. Although timely completion of the survey will be encouraged, there is no specific requirement for respondents to reply in fewer than 30 days.

(c) requiring respondents to submit more than an original and two copies of any document;

Not applicable. This will be an online survey. Copies of the survey will not be requested.

(d) requiring respondents to retain records, other than health, medical, government contract, grant-in-aid, or tax records, for more than three years;

Not applicable. This will be an online survey. This data collection will not require respondents to retain any records for any duration of time.

(e) in connection with a statistical survey, that is not designed to produce valid and reliable results that can be generalized to the universe of study;

Not applicable. The data collection and analysis procedures are carefully designed to generate a representative sample (to the extent possible given the limitations of the data) and to produce results that are generalizable to the target population of former SULI participants.

(f) requiring the use of statistical data classification that has not been reviewed and approved by OMB;

All demographic data will be collected using classifications that are approved by the OMB. ORISE A&E will defer to OMB guidance on classifications for other data types that are collected in the survey.

(g) that includes a pledge of confidentiality that is not supported by authority established in statute or regulation, that is not supported by disclosure and data security policies that are consistent with the pledge, or which unnecessarily impedes sharing of data with other agencies for compatible confidential use; or

Information provided to survey participants regarding confidentiality is supported by statute. Only aggregate or otherwise de-identified data will be shared within the agency to the degree permitted by statute and applicable agency regulations.

(h) requiring respondents to submit proprietary trade secrets, or other confidential information unless the agency can demonstrate that it has instituted procedures to protect the information’s confidentiality to the extent permitted by law.

Not applicable. No proprietary information will be collected.

There are no special circumstances that would require this evaluation to be conducted in a manner inconsistent with the guidelines in 5 CFR 1320.5. This is a one-time data collection and does not require submission of any information that is not OMB-approved. Consent procedures include obtaining broad consent for use of the data collected, and no proprietary information will be collected.

A.8. Summary of Consultations Outside of the Agency

If applicable, provide a copy and identify the date and page number of publication in the Federal Register of the agency’s notice, required by 5 CFR 1320.8(d), soliciting comments on the information collection prior to submission to OMB. Summarize public comments received in response to that notice and describe actions taken in response to the comments. Specifically address comments received on cost and hour burden. Describe efforts to consult with persons outside DOE to obtain their views on the availability of data, frequency of collection, the clarity of instructions and recordkeeping, disclosure, or reporting format (if any), and on the data elements to be recorded, disclosed, or reported.

The 60-day Federal Register Notice was published on May 16, 2024 (Vol. 89, No. 96, page 42865), and the public comment period ended on July 15, 2024. No relevant comments were received. On June 25, 2025, the Department published a notice in the Federal Register (Vol. 90, No. 120, page 27011) soliciting public comments to OMB for a period of 30 days. The data collection plan for this study was peer reviewed and approved by a panel of experts knowledgeable in long-term follow-up study design and data collection. The study will be conducted in accordance with input from the reviewers and legal counsel, and with guidance from the DOE/Institutional Review Board (IRB) and OMB.

A.9. Payments or Gifts to Respondents

Explain any decision to provide any payment or gift to respondents, other than remuneration of contractors or grantees.

Incentives are a key tool for increasing response rates and will be especially important in the SULI context, given that these are long-term follow-up surveys of participants who have not otherwise had ongoing contact with the program and of non-participants who have little intrinsic motivation to assist. ORISE sought guidance from legal counsel and from the IRB to determine the specific details of the incentive structure. Based on a review of incentive plans for federally funded surveys with similar data collection methodologies, WDTS and ORISE selected a plan that includes three different tracks for the first administration wave, with increasing incentive amounts for two of these tracks in the second and third waves. In the first administration wave, potential respondents will be randomly assigned to three tracks: 25% will be offered $30, 25% will be offered $20, and 50% will not be offered an incentive. In the second wave, potential respondents who were not previously offered an incentive will be offered $20, and those previously offered $20 will be offered $25. In the final administration wave, those offered $20 or $25 in the previous wave will be offered $30. The maximum total incentive a respondent can receive is $30. Table A2 summarizes the incentive plan for all three administration waves.
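The track assignment and wave-to-wave escalation described above can be checked with a short sketch. It is illustrative only: a seeded shuffle is one standard way to perform simple random assignment, not necessarily ORISE’s procedure, and the function names are hypothetical. Exact 25/25/50 counts assume the group size is divisible by four; any remainder falls into the no-incentive track.

```python
import random
from typing import Dict, List


def assign_wave1_tracks(invitee_ids: List[str], seed: int) -> Dict[str, int]:
    """Randomly assign invitees to the $30 / $20 / $0 first-wave tracks (25% / 25% / 50%)."""
    rng = random.Random(seed)  # fixed seed makes the assignment reproducible
    shuffled = list(invitee_ids)
    rng.shuffle(shuffled)
    quarter = len(shuffled) // 4
    tracks: Dict[str, int] = {}
    for position, invitee_id in enumerate(shuffled):
        if position < quarter:
            tracks[invitee_id] = 30
        elif position < 2 * quarter:
            tracks[invitee_id] = 20
        else:
            tracks[invitee_id] = 0  # the remaining half (plus any remainder): no offer
    return tracks


def offer_in_wave(wave: int, wave1_offer: int) -> int:
    """Dollar amount offered in a given wave to someone who has not yet responded."""
    if wave == 1:
        return wave1_offer
    if wave == 2:
        return {0: 20, 20: 25, 30: 30}[wave1_offer]
    if wave == 3:
        return 30  # every remaining track converges on the $30 cap
    raise ValueError(f"unknown wave: {wave}")


if __name__ == "__main__":
    ids = [f"R{i:04d}" for i in range(3000)]
    tracks = assign_wave1_tracks(ids, seed=2027)
    for amount in (30, 20, 0):
        count = sum(1 for offer in tracks.values() if offer == amount)
        print(f"${amount}: {count} invitees; offers by wave "
              f"{[offer_in_wave(w, amount) for w in (1, 2, 3)]}")
```

For a pool of 3,000 this yields 750, 750, and 1,500 invitees in the three tracks, and no path through the three waves exceeds the $30 maximum.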

Table A2. Incentive Amounts by Administration Wave

| Wave | Incentive Strategy for Alumni | Incentive Strategy for Non-Participants |
|---|---|---|
| First Wave – Initial Email Invitation + 2 Reminders | Initial email addresses: $30 (25%), $20 (25%), $0 (50%) | Initial email addresses: $30 (25%), $20 (25%), $0 (50%) |
| Second Wave – Targeted Email Invitation + 2 Reminders | $30 (25%*), $25 (25%*), $20 (50%*) | $30 (25%*), $25 (25%*), $20 (50%*) |
| Final Wave – Targeted Phone Call + Email Invitation + 2 Reminders | $30 | $30 |

*Approximate percentages

In the second and third administration waves, we will attempt to address non-response bias through our incentive strategy. We will assess non-response bias within demographic categories determined by prior analysis of the demographic composition of the study population, then reach out selectively to a stratified random sample of non-respondents to encourage them to reconsider. Evaluators will select a balanced stratified random sample of remaining non-respondents in both the SULI alumni group and the comparison group and contact them in the following wave with increased incentive values and a modified invitation. The second wave will target approximately 2,000 non-respondents (1,000 SULI participants and 1,000 non-participants) in this way, and the third wave will target an additional 1,000 (500 SULI participants and 500 non-participants). Specific counts will depend on the number of non-respondents and the degree of non-response bias measured after the previous wave. Non-respondents who are in a track with increasing incentives and who are selected into the stratified random sample for the second wave will be contacted separately.
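A minimal sketch of the two steps described above, measuring response rates by demographic stratum and then drawing a balanced stratified random sample of non-respondents, follows. “Balanced” is interpreted here as equal allocation across strata, with unused quota from small strata reassigned to larger ones; that interpretation, the stratum labels, and the function names are assumptions. The sampler would be run separately for SULI alumni and for the comparison group (for example, with a target of 1,000 each in the second wave).

```python
import random
from collections import defaultdict
from typing import Dict, List, Set


def response_rates(strata: Dict[str, str], responded: Set[str]) -> Dict[str, float]:
    """Response rate within each stratum; `strata` maps invitee_id -> stratum label."""
    totals: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for invitee_id, stratum in strata.items():
        totals[stratum] += 1
        if invitee_id in responded:
            hits[stratum] += 1
    return {s: hits[s] / totals[s] for s in totals}


def balanced_stratified_sample(
    strata: Dict[str, str], responded: Set[str], target: int, seed: int
) -> List[str]:
    """Draw up to `target` non-respondents, spread as evenly as possible across strata."""
    rng = random.Random(seed)
    pools: Dict[str, List[str]] = defaultdict(list)
    for invitee_id, stratum in sorted(strata.items()):
        if invitee_id not in responded:
            pools[stratum].append(invitee_id)
    sample: List[str] = []
    remaining = target
    while remaining > 0:
        active = sorted(s for s in pools if pools[s])
        if not active:
            break  # every non-respondent has already been drawn
        share = max(1, remaining // len(active))  # equal quota per stratum this round
        for stratum in active:
            if remaining == 0:
                break
            k = min(share, len(pools[stratum]), remaining)
            picked = rng.sample(pools[stratum], k)
            chosen = set(picked)
            pools[stratum] = [i for i in pools[stratum] if i not in chosen]
            sample.extend(picked)
            remaining -= k
    return sample
```

Comparing the output of `response_rates` across strata indicates where non-response is concentrated; the equal-allocation sampler then oversamples small strata relative to their share of non-respondents, which is one way to read “balanced.”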

A.10. Provisions for Protection of Information

Describe any assurance of confidentiality provided to respondents and the basis for the assurance in statute, regulation, or agency policy.

Because this information is collected by ORISE on behalf of DOE WDTS, the Privacy Act applies, and the applicable DOE System of Records is System 82. Information will be collected in identifiable form, and data can be retrieved by individually identifiable data elements. Individually identifiable data collected to facilitate the collection of response data (names, telephone numbers, email addresses) will be kept by ORISE in a password-protected file, which ORISE will delete after the project is completed. Subcontractors sign a pledge not to release information.

Survey data will be maintained in password-protected files on ORISE’s secure servers and will be accessible only to staff directly involved in the project. Upon project completion, the dataset containing de-identified survey responses will be retained indefinitely as a digital file. This study will use ORISE’s secure servers and systems for data collection and storage to ensure the confidentiality and protection of all human subjects data and PII/PHI. To meet the requirements of the Federal Information Security Management Act (FISMA), ORISE maintains an Authority to Operate (ATO) from the U.S. Department of Energy. The ATO ensures that the ORISE General Support System (GSS) meets federal security requirements and will continue to do so throughout the system life cycle. This authorization enables ORISE to process U.S. Government information on its computer systems. The ORISE GSS is currently authorized to process data categorized up to and including FIPS 199 Moderate. ORISE uses a NIST SP 800-53 (Revision 4) Moderate baseline to ensure that security controls appropriate to the information security category are in place. Periodic self-assessments, peer reviews, independent assessments, and continuous monitoring are performed to ensure that controls are performing properly.

Respondents will receive information under each of the following rubrics prior to data collection:

PII Collection

Your personal data will be used for research and evaluation purposes. The categories of personal data you are being asked to consent to ORISE’s collection and use are your name, address, email address, telephone number, demographic information, education and employment history, citizenship status, and disability status.

Confidentiality

All information obtained in this study is strictly confidential. If you choose to provide contact information for future research studies or to learn more about SULI alumni opportunities, that information will be separated from your survey responses, stored separately, and shared with the Workforce Development for Teachers and Scientists (WDTS) program that manages SULI.

Data Use

Contact information will only be used for the purposes noted in the survey. The results of this research study may be used in reports, presentations, and publications, but the researchers will not identify you unless you are contacted and specifically provide additional permission for specific data to be released. In order to maintain confidentiality of your records, the investigators will keep the names of the subjects confidential. The information will be stored on a secure server and will be password protected. Only the researchers will have access to disaggregated, confidential information.

Third-Party Accountability

ORISE may share some of your personal data with third parties who collect, store, and process your personal data on behalf of ORISE and who are contractually obligated to keep your personal data confidential subject to appropriate protections to prevent it from unauthorized disclosure. If you choose to provide contact information for future use, that information will be separated from your survey responses, stored separately, and shared with the Workforce Development for Teachers and Scientists program that manages SULI.

Data Security

Your personal data will be stored on an encrypted secure server. Your personal data will be stored in accordance with the record retention requirements applicable to ORISE as a United States Department of Energy contractor, and any other applicable U.S. laws. You have the right to request access to, rectify, erase, and restrict the processing of your personal data. You also have the right to revoke this consent to use your personal data.

A.11. Justification for Sensitive Questions

Provide additional justification for any questions of a sensitive nature, such as sexual behavior and attitudes, religious beliefs, and other matters that are commonly considered private. This justification should include the reasons why DOE considers the questions necessary, the specific uses to be made of the information, the explanation to be given to persons from whom the information is requested, and any steps to be taken to obtain their consent.

No sensitive questions are asked.

A.12A. Estimate of Respondent Burden Hours

Provide estimates of the hour burden of the collection of information. The statement should indicate the number of respondents, frequency of response, annual hour burden, and an explanation of how the burden was estimated. Unless directed to do so, DOE should not conduct special surveys to obtain information on which to base hour burden estimates. Consultation with a sample of fewer than 10 potential respondents is desirable.

The estimate for burden hours is based on a pilot test of the survey instrument by ORISE personnel. In the pilot test, the average time to complete the survey including time for reviewing instructions, gathering needed information, and completing the survey was 25-30 minutes. Based on these results, the estimated time range for actual respondents to complete the survey is 25-30 minutes. For the purposes of estimating burden hours, the upper limit of this range is assumed. Furthermore, we expect that some potential respondents will need to be contacted by telephone to attempt to solicit their participation in the online survey. The phone script adds up to 5 additional minutes to the completion time. Since it is not possible to predict ahead of time what proportion of survey respondents will need to be contacted by telephone, we assume that each respondent will be contacted by phone to solicit their participation, thus increasing the average time for completion to 35 minutes.

Based on an analysis of a sample of SULI alumni conducted by ORISE using only publicly available data, 40% of respondents are anticipated to be in the “Life, Physical, and Social Scientist Technicians” category, 30% in the “Computer and Mathematical Occupations” category, and 30% in the “Architecture and Engineering Occupations” category. The occupational breakdown for non-alumni is expected to be similar, based on the fields of study of applicants to the SULI program.

The respondent universe consists of (1) approximately 3,000 SULI alumni and (2) approximately 3,000 members of the comparison pool (non-participant SULI applicants). With an expected response rate of 40% in each group, approximately 2,400 respondents (1,200 per group) are anticipated. With an average completion time of 35 minutes, the annual respondent burden is estimated to be 1,400 hours (2,400 × 35/60).
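The burden figures above can be reproduced with a few lines of arithmetic. The following is an illustrative Python check, not part of the collection instrument. It uses only the numbers stated in this section: the 30-minute upper limit plus up to 5 minutes of phone script, approximately 3,000 people per group, a 40% response rate, and the 40/30/30 occupational split. It uses exact fractions so that 35/60 of an hour is not rounded to 0.583 before multiplying. This is how the whole-number hours in Table A3 arise.

```python
from fractions import Fraction

# Inputs as stated in Section A.12A
SURVEY_MINUTES = 30                  # upper limit of the 25 to 30 minute pilot range
PHONE_MINUTES = 5                    # maximum added by the telephone script
POOL_SIZE = 3000                     # approximate size of each group
RESPONSE_RATE = Fraction(40, 100)
OCCUPATION_SHARES = {
    "Science Graduates": Fraction(40, 100),
    "Engineering Graduates": Fraction(30, 100),
    "Computer and Math Graduates": Fraction(30, 100),
}


def burden_by_group():
    """Return {occupation: (respondents, annual burden hours)} for one group."""
    hours_per_response = Fraction(SURVEY_MINUTES + PHONE_MINUTES, 60)  # 35/60 h
    respondents = POOL_SIZE * RESPONSE_RATE                              # 1,200
    rows = {}
    for occupation, share in OCCUPATION_SHARES.items():
        n = respondents * share
        rows[occupation] = (n, n * hours_per_response)
    return rows


group = burden_by_group()
group_respondents = sum(n for n, _ in group.values())
group_hours = sum(h for _, h in group.values())
# Alumni and comparison groups have identical assumptions, so double one group.
total_respondents = 2 * group_respondents
total_hours = 2 * group_hours
print(total_respondents, total_hours)
```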

Table A3. Estimate of Respondent Burden Hours

| Form Number/Title (and/or other Collection Instrument name) | Type of Respondents | Occupation Group | Number of Respondents | Annual Number of Responses | Burden Hours Per Response | Annual Burden Hours | Annual Reporting Frequency |
|---|---|---|---|---|---|---|---|
| Alumni Survey | SULI Alumni | Science Graduates | 480 | 480 | 0.583 | 280 | 1 |
| | | Engineering Graduates | 360 | 360 | 0.583 | 210 | 1 |
| | | Computer and Math Graduates | 360 | 360 | 0.583 | 210 | 1 |
| Alumni Subtotal | | | 1,200 | 1,200 | | 700 | |
| Non-Alumni Survey | Comparison Group (Non-Alumni) | Science Graduates | 480 | 480 | 0.583 | 280 | 1 |
| | | Engineering Graduates | 360 | 360 | 0.583 | 210 | 1 |
| | | Computer and Math Graduates | 360 | 360 | 0.583 | 210 | 1 |
| Non-Alumni Subtotal | | | 1,200 | 1,200 | | 700 | |
| TOTAL (Alumni + Non-Alumni) | | | 2,400 | 2,400 | | 1,400 | |

A.12B. Estimate of Annual Cost to Respondent for Burden Hours

Provide estimates of annualized cost to respondents for the hour burdens for collections of information, identifying and using appropriate wage rate categories. The cost of contracting out or paying outside parties for information collection activities should not be included here. Instead, this cost should be included under ‘Annual Cost to Federal Government’.

Respondents (SULI alumni and members of the comparison group) will respond as individuals. Their cost is therefore the opportunity cost of the time spent responding, valued at what they would earn for paid work during that time. The cost estimate for these groups is based on national mean wages from the Bureau of Labor Statistics’ May 2023 National Occupational Employment and Wage Estimates. ORISE analyzed a sample of SULI alumni using only publicly available data. Based on that analysis, 40% of respondents are anticipated to be in the “Life, Physical, and Social Scientist Technicians” category (mean hourly wage: $42.24). Another 30% are anticipated to be in the “Computer and Mathematical Occupations” category (mean hourly wage: $54.39), and 30% in the “Architecture and Engineering Occupations” category (mean hourly wage: $47.64). The occupational breakdown for non-alumni is expected to be similar, based on the fields of study of applicants to the SULI program.

A multiplier of 1.4 is applied to each mean hourly wage to obtain a fully burdened rate (wages plus benefits), because preliminary evidence indicates that most respondents are in non-government employment. The fully burdened hourly wages are therefore:

- 1.4 × $42.24 = $59.14 for the “Life, Physical, and Social Scientist Technicians” category
- 1.4 × $54.39 = $76.15 for the “Computer and Mathematical Occupations” category
- 1.4 × $47.64 = $66.70 for the “Architecture and Engineering Occupations” category

Table A4 shows the annual burden hours, fully burdened hourly wage, and total respondent cost for each group. The annualized response burden cost for the survey is estimated to be $93,115.40.
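As a check on Table A4, the following Python sketch does three things:

- applies the 1.4 multiplier to the stated BLS mean wages;
- rounds each fully burdened rate to the cent, as displayed in the table;
- multiplies each rate by the burden hours from Table A3.

`Decimal` is used so that currency values round exactly. The dictionary keys mirror the table's occupation groups and are labels chosen for this illustration.

```python
from decimal import Decimal, ROUND_HALF_UP

# Mean hourly wages (BLS, May 2023) and multiplier, as stated in Section A.12B
MULTIPLIER = Decimal("1.4")
MEAN_WAGE = {
    "Science Graduates": Decimal("42.24"),
    "Engineering Graduates": Decimal("47.64"),
    "Computer and Math Graduates": Decimal("54.39"),
}
# Annual burden hours for one respondent group (Table A3)
HOURS = {
    "Science Graduates": 280,
    "Engineering Graduates": 210,
    "Computer and Math Graduates": 210,
}
CENT = Decimal("0.01")


def burdened_wage(mean_wage):
    """Apply the multiplier and round to the cent, as shown in Table A4."""
    return (mean_wage * MULTIPLIER).quantize(CENT, rounding=ROUND_HALF_UP)


def group_costs():
    """Total respondent cost by occupation for one group (alumni or comparison)."""
    return {occ: HOURS[occ] * burdened_wage(w) for occ, w in MEAN_WAGE.items()}


costs = group_costs()
subtotal = sum(costs.values())
total = 2 * subtotal  # alumni and comparison groups have identical hours
print(subtotal, total)
```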

Table A4. Estimated Annual Cost to Respondent

| Type of Respondents | Occupation Group | Annual Burden Hours | Fully Burdened Hourly Wage Rate | Total Respondent Costs |
|---|---|---|---|---|
| SULI Alumni | Science Graduates | 280 | $59.14 | $16,559.20 |
| | Engineering Graduates | 210 | $66.70 | $14,007.00 |
| | Computer and Math Graduates | 210 | $76.15 | $15,991.50 |
| Alumni Subtotal | | 700 | | $46,557.70 |
| Comparison Group (Non-Alumni) | Science Graduates | 280 | $59.14 | $16,559.20 |
| | Engineering Graduates | 210 | $66.70 | $14,007.00 |
| | Computer and Math Graduates | 210 | $76.15 | $15,991.50 |
| Non-Alumni Subtotal | | 700 | | $46,557.70 |
| TOTAL (Alumni + Non-Alumni) | | 1,400 | | $93,115.40 |

*If subtotals are rounded to the nearest dollar ($46,558 each), the displayed total becomes $93,116; the exact calculated total is $93,115.40.*

A.13. Other Estimated Annual Cost to Respondents

Provide an estimate for the total annual cost burden to respondents or recordkeepers resulting from the collection of information.

Not applicable. This information collection does not place any capital costs or costs of maintaining capital equipment on respondents or recordkeepers.

A.14. Annual Cost to the Federal Government

Provide estimates of annualized cost to the Federal government.

There are no equipment or overhead costs. A contractor, ORISE, will support this data collection, so the cost to the federal government is the cost of the contract with ORISE.

The estimated contractual cost to the federal government for the proposed information collection activities is $770,000. The duration of the study from start of data collection to close-out is expected to be 2 years; thus, the estimated annualized cost to the federal government is $385,000.

Table A5. Estimated Total Contract Cost to the Federal Government (2-Year Study)

| SULI LFS Tasks | Burdened Labor Cost | Burdened Other Direct Costs |
|---|---|---|
| Project Setup, WDTS Review & Regulatory Compliance | $85,000 | none |
| Preparation for Study | $160,000 | $15,000 |
| Survey Administration and Data Collection Waves | $95,000 | $38,000 |
| Data Cleaning and Analysis | $150,000 | none |
| Reporting | $110,000 | $27,000 |
| Dissemination and Closeout | $90,000 | none |
| Subtotal | $690,000 | $80,000 |
| Labor + ODC: TOTAL | $770,000 | |
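The task-level amounts in Table A5 sum to the $770,000 contract total. Dividing by the expected two-year study duration gives the $385,000 annualized cost stated in Section A.14. A minimal Python check using only the values in the table:

```python
# Task-level contract costs from Table A5: (burdened labor, other direct costs), USD
TASKS = {
    "Project Setup, WDTS Review & Regulatory Compliance": (85_000, 0),
    "Preparation for Study": (160_000, 15_000),
    "Survey Administration and Data Collection Waves": (95_000, 38_000),
    "Data Cleaning and Analysis": (150_000, 0),
    "Reporting": (110_000, 27_000),
    "Dissemination and Closeout": (90_000, 0),
}
STUDY_YEARS = 2  # start of data collection to close-out (Section A.14)

labor = sum(lab for lab, _ in TASKS.values())
odc = sum(other for _, other in TASKS.values())
total = labor + odc
annualized = total / STUDY_YEARS
print(f"labor ${labor:,} | ODC ${odc:,} | total ${total:,} | per year ${annualized:,.0f}")
```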

A.15. Reasons for Changes in Burden

Explain the reasons for any program changes or adjustments reported in Items 13 (or 14) of OMB Form 83-I.

Not applicable. There are no program changes. This is a first-time data collection. There is no pre-existing data collection program for this study.

A.16. Collection, Tabulation, and Publication Plans

For collections whose results will be published, outline the plans for tabulation and publication.

A series of analyses will be carried out to address each of the evaluation questions listed in the Summary of Evaluation Questions (see Table A1). In each case, we will select techniques appropriate to two things: the characteristics of the data, as currently known, and the overall quasi-experimental research design, which relies on propensity score estimation. Some methodological and technical decisions can only be made once data collection is complete and the full dataset is in hand, based on the patterns and distributions in the data. To identify the variables important for understanding and interpreting outcomes, we consulted key literature on STEM workforce development. We also drew on our experience conducting long-term follow-up studies and on the findings of those studies. Each analysis technique depends on key assumptions about data distributions; we will test those assumptions once the data are available and before deciding how to proceed.

Open-ended (qualitative) survey questions will be analyzed to provide additional insight and context for interpreting the quantitative survey data. Qualitative analysis will be guided by grounded theory, using the constant comparative method to generate themes from the data. We will review the responses to each open-ended question and develop a coding scheme based on the themes identified. Each coding scheme will include nodes (top-level themes) and, where applicable, sub-nodes (sub-themes). Once a coding scheme has been applied to an open-ended question, nodes and sub-nodes will be tallied to identify the most prevalent themes among responses. Theme frequencies will be calculated for the total respondent population. They will then be examined, using intersectionality as an analytical framework, for subgroups of SULI alumni defined by gender, ethnic, and racial identities.
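To illustrate the tallying step, the Python sketch below does two things. It reports the share of respondents coded to each node, overall and within one subgroup, and it counts sub-node occurrences. The coded records, theme names, and subgroup labels are hypothetical placeholders; a label such as "Group A" stands in for an intersectional combination of identities. The sketch counts each respondent at most once per node. The study's actual coding software and counting conventions may differ.

```python
from collections import Counter, defaultdict

# Hypothetical coded responses: (respondent_id, subgroup, node, sub_node).
# Labels and themes are illustrative placeholders, not study data.
CODED = [
    (1, "Group A", "Mentoring", "Career advice"),
    (1, "Group A", "Research skills", "Lab techniques"),
    (2, "Group A", "Mentoring", "Networking"),
    (3, "Group B", "Research skills", "Data analysis"),
    (4, "Group B", "Mentoring", "Career advice"),
    (4, "Group B", "Mentoring", "Networking"),
]


def node_prevalence(coded, subgroup=None):
    """Share of respondents (with any coded answer) mentioning each node."""
    rows = [r for r in coded if subgroup is None or r[1] == subgroup]
    respondents = {r[0] for r in rows}
    mentions = defaultdict(set)
    for rid, _, node, _ in rows:
        mentions[node].add(rid)  # a respondent counts once per node
    return {node: len(ids) / len(respondents) for node, ids in mentions.items()}


# Raw frequency of each (node, sub-node) pair across all coded segments
sub_node_counts = Counter((node, sub) for _, _, node, sub in CODED)

print(node_prevalence(CODED))
print(node_prevalence(CODED, subgroup="Group B"))
print(sub_node_counts.most_common(2))
```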

WDTS's application and pre/post survey systems already collect extensive data on SULI applicants and participants. The survey will therefore be limited to the outcome data needed for the study, plus a very small number of stable variables that are important for the analysis but were not collected at the time of application.

The analyses use four kinds of variables:

- **Propensity score estimation variables** are available for both SULI alumni and the comparison group. They will be used to calculate propensity scores, which are then carried through all analyses to weight or balance the comparison group.
- **Outcome variables** are the variables of interest and align with outcomes in the SULI logic model. They include key educational, career, and other outcomes.
- **Predictor variables** are characteristics of the individual, such as preparation or personal background, that can affect the outcome.
- **Moderators** are variables that can change the strength or direction of the relationship between the independent and dependent variables. For instance, the quality of mentoring reported by participants at the end of their SULI experience could moderate their eventual outcomes, all other factors being equal.
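The following Python sketch illustrates one common way propensity scores can be used to balance a comparison group. The sketch:

1. fits a logistic regression of participation on a pre-program covariate;
2. gives each comparison-group member a weight of p/(1 - p), which weights toward the average treatment effect on the treated (ATT);
3. keeps each participant at weight 1.

The ATT weighting scheme, the single covariate (a hypothetical GPA at application), and the data values are assumptions made for illustration only. The study's actual estimation model, covariates, and weighting or matching approach are described in Supporting Statement B and may differ. The fit uses plain Newton-Raphson so that the example needs only the standard library.

```python
import math


def predict(b0, b1, x):
    """Logistic model: estimated probability of SULI participation given x."""
    return 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))


def fit_logistic(xs, ts, iters=25):
    """Maximum-likelihood logistic regression with one covariate (Newton-Raphson)."""
    b0 = b1 = 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, t in zip(xs, ts):
            p = predict(b0, b1, x)
            w = p * (1.0 - p)
            g0 += t - p          # score (gradient) for the intercept
            g1 += (t - p) * x    # score for the slope
            h00 += w             # entries of the Fisher information matrix
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        # Newton step: beta <- beta + I^{-1} g, with the 2x2 inverse written out
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


def att_weights(xs, ts, b0, b1):
    """Participants get weight 1; comparison members get the odds p / (1 - p)."""
    weights = []
    for x, t in zip(xs, ts):
        p = predict(b0, b1, x)
        weights.append(1.0 if t == 1 else p / (1.0 - p))
    return weights


def weighted_mean(values, weights):
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Hypothetical data: one pre-program covariate (e.g., GPA at application);
# t = 1 for SULI alumni, t = 0 for comparison-group applicants.
xs = [2.8, 3.0, 3.1, 3.3, 3.4, 3.5, 3.6, 3.7, 3.8, 3.9]
ts = [0, 0, 1, 0, 0, 1, 0, 1, 1, 1]

b0, b1 = fit_logistic(xs, ts)
weights = att_weights(xs, ts, b0, b1)

p_mean = weighted_mean([x for x, t in zip(xs, ts) if t == 1], [1.0] * sum(ts))
c_x = [x for x, t in zip(xs, ts) if t == 0]
c_w = [w for w, t in zip(weights, ts) if t == 0]
c_raw = sum(c_x) / len(c_x)
c_att = weighted_mean(c_x, c_w)
print(f"participants {p_mean:.3f} | comparison raw {c_raw:.3f} | ATT-weighted {c_att:.3f}")
```

With this weighting, comparison-group members who resemble participants on the covariate receive larger weights. As a result, the weighted comparison mean moves toward the participant mean. In practice, covariate balance would be checked across all propensity score variables before outcomes are compared.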

Some variables, such as the quality of mentoring reported during a SULI appointment, apply only to participants and not to the comparison group. These variables can therefore be used only in analyses that focus on participants without comparison or benchmarking, i.e., Evaluation Question 1. In single-group analyses, such as those for Evaluation Question 1, we will examine the outcomes of SULI alumni and how those outcomes depend on the independent (predictor) and moderator variables. Two-group analyses, such as those for Evaluation Questions 2 and 3, will compare the outcomes of SULI alumni with the comparison group or with the national benchmark sample to understand and estimate the effect of SULI.

Throughout these analyses, we will sometimes segment results by key demographic variables. For instance, we will cross-tabulate outcomes by sex and race/ethnicity and apply statistical tests to assess whether any differences between these groups are statistically significant. In other cases, we propose to use multivariable regression models that account for multiple variables simultaneously, including the intersectional dynamics likely to affect outcomes. Such models will allow us to estimate the magnitude of each predictor variable's influence on outcomes.
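For cross-tabulations like those described above, one standard test is Pearson's chi-square test of independence. The Python sketch below handles the 2x2 case, for example two demographic subgroups by whether an outcome was achieved. In this case the p-value can be computed exactly from the normal distribution, because a chi-square variable with one degree of freedom is the square of a standard normal. The counts are hypothetical, and no continuity correction is applied. Larger tables need the general chi-square distribution, for example via `scipy.stats.chi2_contingency`. Which test the study will actually use is not specified here.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table (no continuity correction)."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, stat = Z^2, so p = P(|Z| > sqrt(stat)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


# Hypothetical cross-tab: rows = two demographic subgroups,
# columns = (outcome achieved, not achieved), e.g. employed in a STEM field.
counts = [[30, 20], [20, 30]]
stat, p = chi_square_2x2(counts)
print(f"chi2 = {stat:.2f}, p = {p:.4f}")
```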

We plan to match the regression model to the type of outcome:

- for continuous (quantitative) outcomes, linear regression;
- for binary outcomes, logistic regression;
- for categorical outcomes with more than two categories, multinomial logistic regression.

In many cases, we will layer in additional techniques, such as stepwise variable selection and propensity score estimation. A main goal of the study is to carry out rigorous analyses that follow best practices. Findings will be presented with clear, cogent explanations that give a variety of stakeholder audiences key insights and interpretations without requiring advanced statistical knowledge.

For a more detailed description of analytical techniques to be applied, see Supporting Statement B.

The estimated timeline for data analysis and reporting activities is shown in Table A6. Preparation of the codebook and statistical analysis plan will overlap with data collection but is expected to be completed within 3 weeks after data collection ends. Analysis and reporting activities are expected to take approximately 27 weeks (about 6 months). Results, in the form of written reports, presentations, briefings, and recommendations, will be shared with WDTS and SULI in support of the SULI program. WDTS reserves the option to request additional deliverables, such as conference papers, abstracts, or posters suitable for publication in scholarly journals or presentation at scientific conferences. These activities are optional; ORISE and WDTS will make a final determination in consultation during the reporting phase.

Table A6. Estimated Timeline for Data Analysis and Reporting Activities

| Activity | Approximate Duration |
|---|---|
| Prepare codebook and data analysis plan; create statistical code and review | 3 weeks |
| Merge and align final survey data | 1 week |
| Conduct cleaning and finalize dataset | 3 weeks |
| Carry out non-response bias analysis | 2 weeks |
| Complete analysis of demographics/background variables | 3 weeks |
| Analyze qualitative data | 4 weeks |
| Pull needed data and cross-tabs from NSCG | 2 weeks |
| Conduct all analysis, Evaluation Questions 1 - 3 | 5 weeks |
| Populate report shell | 2 weeks |
| Finalize report draft | 2 weeks |
| TOTAL | 27 weeks |
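The 27-week total in Table A6 can be confirmed by summing the listed durations, assuming the activities run back to back. A short Python check, converting weeks to months with an average month of 365.25/12 days:

```python
# Approximate durations in weeks, from Table A6
TIMELINE_WEEKS = {
    "Prepare codebook and data analysis plan; create statistical code and review": 3,
    "Merge and align final survey data": 1,
    "Conduct cleaning and finalize dataset": 3,
    "Carry out non-response bias analysis": 2,
    "Complete analysis of demographics/background variables": 3,
    "Analyze qualitative data": 4,
    "Pull needed data and cross-tabs from NSCG": 2,
    "Conduct all analysis, Evaluation Questions 1 - 3": 5,
    "Populate report shell": 2,
    "Finalize report draft": 2,
}

total_weeks = sum(TIMELINE_WEEKS.values())
months = total_weeks * 7 / (365.25 / 12)  # days divided by average days per month
print(f"{total_weeks} weeks = about {months:.1f} months")
```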

A.17. OMB Number and Expiration Date

If seeking approval to not display the expiration date for OMB approval of the information collection, explain the reasons why display would be inappropriate.

WDTS does not request exemption from display of the OMB expiration date. All data collection instruments will display the OMB expiration date.

A.18. Certification Statement

Explain each exception to the certification statement identified in Item 19 of OMB Form 83-I.

Not applicable. No exceptions to the certification statement are requested or required.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | Supporting Statement for Science Undergraduate Laboratory Internship (SULI) Long-term Follow-up Study |

| Subject | Improving the Quality and Scope of EIA Data |

| Author | Stroud, Lawrence |

| File Modified | 0000-00-00 |

| File Created | 2025-07-15 |
