NFIP Compliance Audit Usability Tests

NFIP Compliance Audit_Usability Tests_JG_PR.docx

National Flood Insurance Program (NFIP) Compliance Audit Reports


OMB: 1660-0023

USABILITY TESTING OF NFIP COMPLIANCE AUDIT PROGRAM TOOLS

Executive Summary: The Floodplain Management Division (FPMD) at FEMA HQ is engaged in a multi-year effort to redesign how we assess and gain compliance with National Flood Insurance Program (NFIP) regulations adopted by participating communities. On July 1, 2025, we will replace Community Assistance Visits (CAVs) and Community Assistance Contacts (CACs) with the NFIP Compliance Audit Program (audits). Beginning in May 2022, we piloted the new process and tools with 15 communities across 7 states, testing them both against past CACs/CAVs and alongside new CACs/CAVs.

Do the tools capture the requirements we are holding communities accountable to? Yes

Do the tools make the process more consistent, transparent, and fair? Yes

Are the questions easy to understand? Yes

Do the tools allow us to “off-ramp” communities that do not need a full, comprehensive audit? Yes (6/7 states agreed)

How long does it take the auditor to complete each stage and tool of the process? The same as or less than current CAC/CAV workloads.

After the pilot we received approximately 450 comments and were able to incorporate approximately 97% of the suggested improvements to the tools.

Background: FPMD at FEMA HQ is engaged in a multi-year effort to redesign how we assess and gain compliance with NFIP regulations adopted by participating communities. Losses-avoided data show that communities that adopt and enforce the minimum NFIP regulations suffer 65% less damage after a flood and have saved over $100 billion in taxpayer money over the last 40 years. These regulations also help people return to their homes faster after a flood, help businesses recover faster, and help communities maintain their tax base and livability. We can truly say that compliance equals resilience. This transformative work is accomplished through the regular meetings and efforts of the Floodplain Management Compliance Committee, which consists of subject matter experts (SMEs) from each FEMA regional office, an HQ FPMD lead, and a regional FM&I Branch Chief, and is supported through a national contract. Together, we co-created the new NFIP Compliance Audit process and tools to replace the current CAV and CAC method. The redesign consists of a progressive audit process and a standardized set of tools that help ensure more consistency, transparency, fairness, and efficiency. The process has three phases: (1) Audit, (2) Audit Follow-Up, and (3) Enforcement. At the end of the audit, a community will have a score that represents the health of its floodplain management (FPM) program. Scores will show auditors where to focus their technical assistance to build the community's capabilities and technical skills, so the community can improve its compliance and therefore be more flood-resilient. As part of the committee's planned work, we conducted a pilot in summer 2022 to test the new tools, and we submit its results as a usability test for our Paperwork Reduction Act (PRA) submission.

In May 2022, we asked states participating in the CAP-SSSE grant program, through which FEMA provides funding to states to perform CAVs and CACs, to help us pilot the new NFIP Compliance Audits that will soon replace CAVs and CACs. In June 2022, we held two just-in-time training sessions for state volunteers on how to use the tools and how to perform the program assessment with them. We also gave each auditor a set of evaluation questions so they could critique the new tools and process. The states had until August 2022 to conduct the pilot and report back, and we compiled the findings in September 2022. Seven states participated, representing Advanced and Foundational TSF states, coastal and non-coastal, urban and rural, and CONUS and non-CONUS areas: California, Montana, Nebraska, Arkansas, South Carolina, Puerto Rico, and New Hampshire. The pilot covered fifteen communities. Participants could test the new tools against CAVs and CACs using one of two methods: the Recently Completed CAC/CAV comparison or the Blind Observer comparison. Pilot participants filled out a feedback and workload assessment survey and a comments matrix, which we used to guide our updates to the process and scoring tools.

Methodologies: Auditors could choose between two methodologies: (1) Recently Completed Comparison or (2) Blind Observer Comparison. In the Recently Completed Comparison, the auditor reviews previously completed CACs/CAVs, uses the new tools to assess the community's compliance based on the results and data gathered, and then compares the two sets of results. In the Blind Observer Comparison, one auditor conducts a new CAV/CAC in real time while another auditor uses the new audit tools to score the community, and the results are then compared. The specific steps for each method are described below:

Recently Completed Comparison

Recently Completed: A Compliance Committee member fills out the audit tools using documentation from a recently completed CAC/CAV.

Step 1: Identify a community that has recently completed a CAV, preferably one that has recently completed both a CAC and a CAV.

Step 2: Using your expert judgment and knowledge of the community, answer all the questions in the Diagnostic and Full Evaluation tools. There will likely be gaps in the line of questioning.

Step 3: Compare the audit scores with your region's determination of the community's compliance.

Step 4: Complete the intake form and return it to FPMD HQ along with a copy of the completed tools.

Blind Observer Comparison

Blind Observer:

Step 1: Identify a community that has an upcoming CAV. If possible, schedule a CAC before the full CAV.

Step 2: Identify points of contact (POCs) for (1) the main CAV interviewer and (2) an observer who will sit in.

Step 3: Before the CAV, coordinate with the interviewer to ensure that all the questions in the tools will be asked during the CAC/CAV.

Step 4: During the CAC/CAV, answer all the questions in the tools (or use a best guess if a community response is not possible).

Step 5: After the CAV, complete the intake form and return it to FPMD HQ along with a copy of the completed tools.

Participants used the two methods in roughly equal numbers. Most participants were experienced SMEs; even so, two states chose to use the tools to train new employees on how to audit. In fact, the Arkansas State NFIP Coordinator who participated in the pilot co-presented with FEMA HQ staff at the 2023 ASFPM Conference in Raleigh, NC, on the pilot process, sharing feedback on the benefits of the new tools, especially for training.

Key Results: The following section summarizes the pilot research questions and overall results.

1) Do the tools capture the requirements we are holding communities accountable to?

Community Self Assessment Form

Participants were generally encouraged by the new tools and process, and most believe they will lead to more consistency, transparency, and fairness. We aim to address the issues raised in the development of Version 2.0 of the tools.

Most participants agreed or were neutral that the tool's questions apply to all NFIP communities and capture the key outcomes of interest for the audit.

Some participants were concerned that lower-capacity communities may have trouble answering some questions, but they did not offer concrete solutions.

Diagnostic Assessment Tool

Most participants agreed or were neutral about the tool being able to create a more consistent, transparent, and fair process for evaluating all NFIP Communities

One pilot reviewer wrote: “We think the [Diagnostic Assessment baselines] are the best part about the entire tool. Rather than guessing what they should score, we know exactly where they are lacking.”

Most participants appreciate that all communities will be evaluated on the same criteria

Some participants expressed concern about scoring questions that are not directly based on requirements in the Code of Federal Regulations (CFR). FEMA's Office of Chief Counsel (OCC) raised the same concern.

The FPM Compliance Committee has decided, and FPM Leadership has approved, that the score provides valuable data for assessing a community's compliance program. It shows where the community needs assistance, documents the compliance health of NFIP communities nationwide, and tracks changes in compliance over time. Note that a high score on the Diagnostic Assessment does not give a community any tangible advantages or benefits, and a low score does not carry any tangible penalties. Rather, the scores help identify specific areas where a community may struggle to administer and enforce NFIP regulations and where a more rigorous evaluation may be needed.

Full Evaluation Tool

Most participants feel the questions in the Full Evaluation tool are fair and consistent with the questions that states currently ask during CAVs.

2) Do the tools make the process more consistent, transparent, and fair?

Diagnostic Tool

Most participants agreed or were neutral that the score was accurate based on their assessment of the community’s compliance with NFIP regulations

Many participants thought the score accurately represented the community; some thought it was less useful for low-capacity communities but could not say why or how to refine the tools to make them more useful.

Some participants wanted to score the ordinance review, factor the results of the document review into the score, and refine questions to validate that the community actually follows a process (not just that it has an SOP).

We refined the relevant questions to better get at the heart of the matter. Leadership decided not to factor ordinance reviews or document reviews into the score at this time, mainly because of resource constraints.

Full Evaluation Tool

Most participants agreed or were neutral that the tool captured all of the questions necessary to assess the community’s compliance with the NFIP

Most auditors believe the tool contains the questions necessary to comprehensively assess NFIP compliance

Some auditors would like to capture additional criteria, including recovery processes and other information.

The Committee and Leadership decided that this tool assesses the community's administration and enforcement of the minimum NFIP requirements; however, guidance materials will include best practices for discussing grants, hazard mitigation (HM) planning, joining the Community Rating System (CRS), and other FEMA resources and issues.

3) Are the questions easy to understand?

Both Tools:

Participants generally thought the new tools and processes are easy to follow, but they highlighted the need to better understand the scoring criteria for the Diagnostic Assessment.

The tool calculates scores with a weighted formula: aspects considered more crucial to a functional program carry more weight, and more minor aspects carry less.

The scores serve several purposes. First and foremost, they help the auditor determine whether there are enough issues or questionable items to warrant a deeper dive into the community's program. This is the main point of the new phased audit system: it helps right-size the auditor's efforts to the needs of the community.
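The actual scoring formula and weights are not given in this report. Purely as an illustration of how a weighted score could drive the off-ramp decision, the sketch below assumes a weight-normalized average on a 0 to 100 scale. The `DiagnosticItem` class, the item names, the weights, the credit scale, and the 70-point threshold are all hypothetical. The `fast_track` flag stands in for the option, described later in this report, to send a community directly to a Full Evaluation.

```python
from dataclasses import dataclass


@dataclass
class DiagnosticItem:
    """One scored element of a hypothetical Diagnostic Assessment."""
    name: str
    weight: float  # hypothetical: higher for elements more crucial to a functional program
    credit: float  # hypothetical: fraction of credit earned, from 0.0 to 1.0


def weighted_score(items: list[DiagnosticItem]) -> float:
    """Return a 0-100 score: the weighted sum of earned credit divided by total weight."""
    total_weight = sum(item.weight for item in items)
    if total_weight <= 0:
        raise ValueError("at least one item must carry positive weight")
    earned = sum(item.weight * item.credit for item in items)
    return 100.0 * earned / total_weight


def needs_full_evaluation(score: float, fast_track: bool = False,
                          threshold: float = 70.0) -> bool:
    """Decide whether the audit should proceed to a Full Evaluation.

    The 70.0 threshold is illustrative only. fast_track models sending a
    community straight to a Full Evaluation regardless of its score.
    """
    return fast_track or score < threshold


if __name__ == "__main__":
    # Illustrative items and weights; not taken from the actual tool.
    items = [
        DiagnosticItem("permit review process", weight=3.0, credit=1.0),
        DiagnosticItem("substantial damage determinations", weight=3.0, credit=0.5),
        DiagnosticItem("record keeping", weight=1.0, credit=1.0),
    ]
    score = weighted_score(items)
    print(f"score = {score:.1f}")  # score = 78.6
    print("full evaluation needed:", needs_full_evaluation(score))  # False
```

In this sketch, a partial result on a heavily weighted item lowers the score more than the same result on a lightly weighted one, which is the behavior the weighting is meant to capture.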

Another purpose of the score is to provide a more tangible measure of the health of a community's program than our current evaluation method offers. The community's audit score can show where more outreach and assistance are needed to gain compliance and, therefore, community resilience.

Scores can also be tracked across states and regions to identify patterns and help focus our technical assistance efforts. For instance, this data can be used to create community “profiles” that will help with predictive analysis.

Finally, scores can be tracked over time to better understand and communicate the value of strong administration and enforcement of floodplain management requirements. As noted above, a community will not be rewarded or punished individually for the outcomes of their compliance score.

Participants said that, although the process was relatively easy to follow, more detailed guidance and training will be needed.

The Implementation Rollout Strategy, planned for July 1, 2024, will describe in more detail how and when auditors will be trained on the new process.

Since the pilot, FPMD has given many presentations on the process and tools to states and regions at CAP-SSSE conferences, ASFPM conferences, and FEMA-hosted HM workshops.

In October 2023, FPMD produced a webinar for states and regions that reviewed the refined process and demonstrated the use and some of the functionality of the tools.

FPMD plans to release two videos in 2024 explaining the process in detail and how to use the tools.

FPMD has drafted a guidance document that explains the process and tools in detail and will release it publicly once it is approved through the 111-12 process.

The option to fast track a community to a Full Evaluation was well received

Checklist-style functionality was well received

Participants requested additional options to accommodate state-specific nuances.

FPM Leadership later decided that this initial implementation of the tool will not include higher standards or state nuances.

4) Do the tools allow us to “off-ramp” communities that do not need a full, comprehensive audit?

Diagnostic Assessment Tool

Participants generally thought the tools provide the information needed to complete the audit earlier in the process (at diagnostic stage) if no red flags are raised; however, they highlighted the need for some additional elements to improve the predictive accuracy.

Once we collect real-world data, we plan to work with our GIS and Data department to analyze the findings and develop community profiles that could help predict which level of audit a given type of community needs, so that we can better plan workloads. The profiles are not meant to pigeonhole any community; they are intended only to predict workload.

Many participants welcomed the opportunity to complete the audit without a Full Evaluation (a small number did not and gave no explanation as to why).

Some noted that the Diagnostic Tool went into more depth than a traditional CAC; others wanted the tool to apply even more scrutiny.

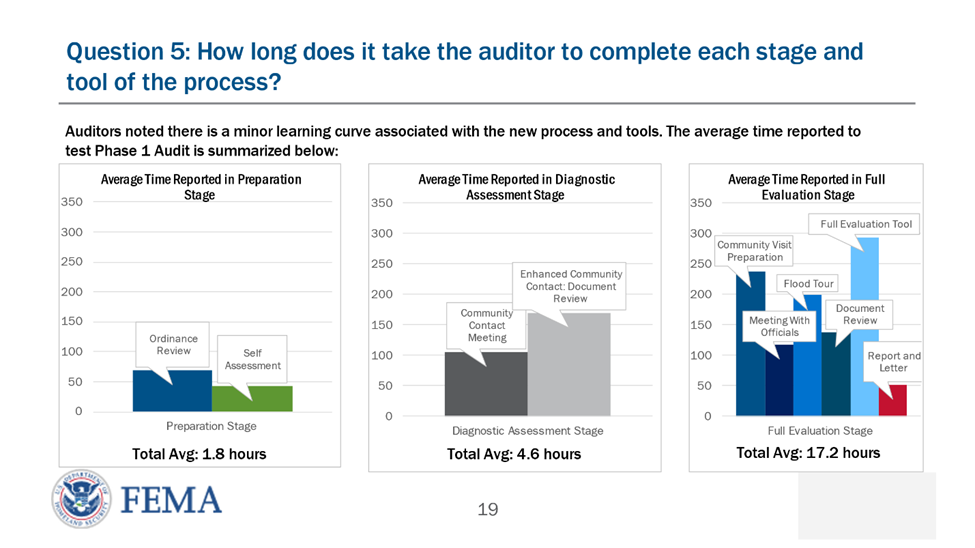

5) How long does it take the auditor to complete each stage and tool of the process?

After discussing these findings with FEMA's CAP-SSSE Coordinator, we agree that the pilot workloads are fairly similar to current CAV/CAC levels. We expect them to decrease over time as auditors become more familiar with the process.

Pilot Findings and Recommendations: We adjudicated over 450 comments from the pilot participants and some Compliance Committee members, which enabled us to revise the tools into their current form. We can confidently state that the Compliance Committee and the seven pilot states helped co-create the audit tools. Most of the comments concerned the Diagnostic Assessment and were minor in nature, and therefore easy to incorporate. We addressed all critical comments and most major and minor comments. Overall, we incorporated approximately 97% of the comments into the new tools. We did not concur with some comments because of the contract's limited time and resources or because FEMA's OCC advised against the suggestion. The remaining comments reflected personal opinions rather than actionable ideas for improvement.

Moving Forward: Plans to Improve the Tools: With contractor support, FPM plans to monitor the overall implementation of the process and continually update and refine the tools as stakeholder feedback is collected after implementation begins on July 1, 2025.

| Field | Value |
| --- | --- |
| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |
| Author | Gleisberg, Jack [USA] |
| File Created | 2024-07-30 |
