Supporting Statement B


Generic Clearance For Socioeconomics of Coral Reef Conservation


OMB: 0648-0646

SUPPORTING STATEMENT PART B

U.S. Department of Commerce

National Oceanic & Atmospheric Administration

Socioeconomics of Coral Reef Conservation

U.S. Virgin Islands 2025 Survey

1. Describe (including a numerical estimate) the potential respondent universe and any sampling or other respondent selection method to be used. Data on the number of entities (e.g., establishments, State and local governmental units, households, or persons) in the universe and the corresponding sample are to be provided in tabular form. The tabulation must also include expected response rates for the collection as a whole. If the collection has been conducted before, provide the actual response rate achieved.

The potential respondent universe for this broader study is household residents of each U.S. coral reef jurisdiction, aged 18 years or older. The total population of individuals in all jurisdictions is 11,458,480 (U.S. Census 2020). The potential respondent universe for this jurisdictional study is household residents of the U.S. Virgin Islands (USVI), aged 18 years or older. The estimated number of occupied households is 39,642, and the estimated population aged 18 and older is 70,060 (U.S. Census, 2020). Respondents will be stratified by the islands of St. Croix, St. Thomas, and St. John (Table 1). The strata were defined by NOAA and jurisdictional partners, and were informed by the jurisdiction’s coastal zone as defined by the Coastal Zone Management Act (1972).

A stratified sampling methodology is proposed for all seven jurisdictions, and sample sizes will be selected proportionately across strata/sub-strata to allow for the estimation of jurisdiction-level parameters (see Section B.2 for more information on sampling). A random subset of households will be selected from each stratum, and one adult resident (18 years or older) will be randomly selected from each household. Multiple members of a household will not be surveyed because members of the same household tend to give similar answers, which reduces the precision of estimates (Cochran, 1977; Kish, 1949). Selecting more than one person per household also increases the cost and burden to the household and can lower response rates (Krenzke, Li, & Rust, 2010). Final sample sizes (expected completes and adjusted sample sizes) will be calculated with the assistance of the vendor selected to conduct the data collection, drawing on its experience conducting similar surveys in the region. Sample sizes may also be adjusted based on updated Census data or on information gained during the initial stages of survey administration.
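To illustrate why interviewing several members of one household reduces precision, the sketch below uses the standard Kish approximation for the design effect of a clustered sample, deff = 1 + (m − 1)ρ, where m is the number of interviews per household and ρ is the intra-household correlation of responses. The function names and the value ρ = 0.3 are hypothetical and chosen only for illustration; they are not estimates from NCRMP data.

```python
def design_effect(interviews_per_household: float, icc: float) -> float:
    """Kish approximation for clustered samples: deff = 1 + (m - 1) * rho."""
    return 1 + (interviews_per_household - 1) * icc


def effective_sample_size(n_interviews: int, interviews_per_household: float, icc: float) -> float:
    """Number of independent interviews that would give the same precision."""
    return n_interviews / design_effect(interviews_per_household, icc)


# One adult per household: no clustering penalty.
print(effective_sample_size(1125, 1, 0.3))
# Two adults per household with a hypothetical intra-household correlation of 0.3:
print(round(effective_sample_size(1125, 2, 0.3)))
```

Under these assumptions, 1,125 interviews collected from two adults per household carry roughly the information of 865 independent interviews, which is the precision loss that the one-respondent-per-household rule avoids.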

Table 1: Total Population (2020) of Study Jurisdictions and Strata

| Jurisdiction | Strata | Population (2020) |
|---|---|---|
| Puerto Rico¹ | | 3,285,874 |
| Florida² | | 6,379,638 |
| U.S. Virgin Islands³ | | 87,146 |
| Guam⁴ | | 153,836 |
| American Samoa⁵ | | 49,393 |
| Hawai’i⁶ | | 1,455,271 |
| Commonwealth of the Northern Mariana Islands (CNMI)⁷ | | 47,322 |
| TOTAL | | 11,458,480 |

In each jurisdiction, we intend to hire qualified survey contractors with databases of contact information to allow for the greatest possible randomization and coverage of survey participants. NOAA will also work with these contractors to select the most cost-effective survey methodology suited to the population being measured. Based on the response rates from telephone surveys conducted in previous years (Table 2), telephone surveys are not preferred. Geographic stratification with a telephone-based sample has also been difficult in previous years. Telephone surveys face a number of challenges because far fewer households now have a landline phone and people are generally less willing to answer survey questions over the telephone than they used to be (Dillman, Smyth & Christian, 2014).

Face-to-face surveys remain the most appropriate mode in American Samoa, Guam, CNMI, Puerto Rico, and USVI, and have been sufficient in previous information collections despite low response rates (Table 2). The second round of surveys conducted in Hawai’i in 2020 used a mail push-to-web approach and yielded a response rate 17.3 percentage points higher than the first round of telephone surveys (18.8% vs. 1.5%), indicating that this is a much more efficient approach.

Table 2: Previous survey modes and response rates obtained in each jurisdiction

| Jurisdiction | First Round: Total Number of Responses | First Round: Survey Mode and Response Rate | Second Round: Total Number of Responses | Second Round: Survey Mode and Response Rate |
|---|---|---|---|---|
| 1. American Samoa | 448 | Face-to-face: unavailable | 1,318 | Face-to-face: 19% |
| 2. CNMI | 722 | Telephone: 20% (702 responses); Face-to-face: 29% (20 responses) | | |
| 3. Guam | 712 | Telephone: 13%; Face-to-face: 60% | 653 | Total: 19.7%; Face-to-face: 15.2%; Online: 4.4% |
| 4. Hawai’i | 2,240 | Telephone: 1.5% | 2,700 | Mail push-to-web: 18.8% |
| 5. Florida | 1,210 | Telephone: 12% | 2,201 | Telephone: 13.5% |
| 6. Puerto Rico | 2,494 | Telephone: 2% | 980 | Total: 21.6%; Face-to-face: 19.8%; Online: 1.8% |
| 7. U.S. Virgin Islands | 1,188 | Telephone: 28%; Face-to-face: 15-20% | | |

The survey methodology proposed for this information collection is based on the response rates achieved in previous NCRMP surveys in the jurisdictions (Table 2) and on recommendations by jurisdictional partners (Table 3). While this is the proposed plan, each jurisdictional survey will also be informed by the local contractors and by any new advancements in survey methodology and technology that arise before future information collections. Face-to-face surveys will be implemented in five of the jurisdictions. The number of contacts needed to achieve the targeted sample size was estimated from the response rates achieved in round 2 of the NCRMP studies and an assumed 10% non-deliverable/ineligible rate. These estimates also account for the budget and estimated costs of conducting face-to-face surveys.

Only a few studies in the jurisdictions have reported in-person response rates, and the rates and methodological approaches vary. For example, in-person household surveys achieved response rates of 71.5% (Perez et al., 2008) and 85.5% (Gravlee & Dressler, 2005) in Puerto Rico; 48% in Guam and CNMI (Guerrero et al., 2017); 55% (Fiaui & Hishinuma, 2009) and 80% (American Samoa Department of Health, 2007) in American Samoa; and 37.3% in Hawai’i (Fiaui & Hishinuma, 2009). The first round of NCRMP surveys in CNMI and USVI achieved low response rates using a combination of telephone and face-to-face surveys (Table 2). Data collection in USVI and American Samoa in round 1 was opportunistic: the response rate for USVI was an approximation, and no rate was reported for American Samoa. In round 2, the response rates in American Samoa, Guam, and Puerto Rico were lower than those reported in the external studies cited above. Because telephone surveys will no longer be used, more effort will be directed toward maximizing response rates for face-to-face and mixed-mode surveys. As stated in other sections of the Supporting Statement, NCRMP data collections use a rigorous, proportional address-based sampling approach with multiple levels of randomization. This ensures high data quality but may reduce response rates.

Table 3: Proposed survey methodology and estimated response rates by U.S. coral reef jurisdiction

| Jurisdiction | Survey Mode | Total Number of Contacts Required | Estimated Sample Size (excluding 10% non-deliverables and ineligibles) | Estimated Response Rate | Expected Number of Respondents |
|---|---|---|---|---|---|
| 1. American Samoa | Face-to-face (with internet option) | 4,527 | 4,075 | 20% | 815 |
| 2. CNMI | Face-to-face (with internet option) | 8,888 | 8,000 | 20% | 1,600 |
| 3. Guam | Face-to-face (with internet option) | 4,444 | 4,000 | 20% | 800 |
| 4. Hawai’i | Mail push-to-web | 10,493 | 9,444 | 18% | 1,700 |
| 5. Florida | Mail push-to-web | 15,872 | 14,285 | 14% | 2,000 |
| 6. Puerto Rico | Face-to-face (with mail or internet option) | 9,090 | 8,181 | 22% | 1,800 |
| 7. U.S. Virgin Islands | Face-to-face (with mail or internet option) | 6,248 | 5,624 | 20% | 1,125 |
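The columns of Table 3 are linked by simple arithmetic: removing the assumed 10% of non-deliverable or ineligible addresses from the contacts gives the eligible sample, and multiplying by the estimated response rate gives the expected respondents. The sketch below uses a hypothetical helper, not the vendor's procedure, to reproduce the last column from the contacts and response rates, after rounding.

```python
# (contacts required, estimated response rate, expected respondents) from Table 3
TABLE_3 = {
    "American Samoa": (4527, 0.20, 815),
    "CNMI": (8888, 0.20, 1600),
    "Guam": (4444, 0.20, 800),
    "Hawai'i": (10493, 0.18, 1700),
    "Florida": (15872, 0.14, 2000),
    "Puerto Rico": (9090, 0.22, 1800),
    "U.S. Virgin Islands": (6248, 0.20, 1125),
}

INELIGIBLE_RATE = 0.10  # assumed share of non-deliverable/ineligible addresses


def expected_respondents(contacts: int, response_rate: float) -> int:
    """Contacts -> eligible sample -> expected completed surveys (rounded)."""
    eligible = contacts * (1 - INELIGIBLE_RATE)
    return round(eligible * response_rate)


for name, (contacts, rate, expected) in TABLE_3.items():
    print(f"{name}: {expected_respondents(contacts, rate)} (Table 3: {expected})")
```

The estimated sample size column may differ from contacts × 0.9 by about one unit because of rounding.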

To take advantage of various methods of communication and information access, provide respondents with alternative survey options, and maximize response rates, a combination of face-to-face, mail, and online surveys will be used based on the feasibility and effectiveness of each mode in the jurisdiction. The mail push-to-web methodology will be used as a mixed-mode approach to data collection in Hawai’i and Florida. This approach uses mail contact to direct people to complete a web survey online. Alternatively, respondents may be given the option of completing either a mail or online survey, according to their preference (depending on the jurisdiction, budget, and feasibility). Mail contact is not preferred in American Samoa, Guam, CNMI, and Puerto Rico because of non-standardized or unreliable addresses.

Table 4 shows the percentage of households with a broadband internet subscription in each of the seven jurisdictions. Although many households in the jurisdictions have internet subscriptions, online surveys alone are not the most effective mode for data collection. Local jurisdictional partners in American Samoa, CNMI, Guam, USVI, and Puerto Rico have suggested that online surveys may not always be feasible or efficient because of internet reliability, availability, and access disparities, and they recommended face-to-face surveys as the most effective mode in these jurisdictions. Accordingly, surveys will be conducted face-to-face or supplemented with a mixed mode to capture non-internet users where feasible.

Table 4: Internet Usage in each U.S. Coral Reef Jurisdiction

| Jurisdiction | Total Number of Households (U.S. Census 2020) | Percent of Households with a Broadband Internet Subscription |
|---|---|---|
| 1. American Samoa | 49,710 | 69% |
| 2. CNMI | 14,208 | 84% |
| 3. Guam | 51,555 | 85% |
| 4. Hawai’i | 478,413 | 89% |
| 5. Florida (Southeast) | 2,463,333 | 87% |
| 6. Puerto Rico | 1,196,790 | 68% |
| 7. U.S. Virgin Islands | 39,642 | 79% |

Source: U.S. Census Bureau, 2020 Island Areas Censuses: Computer and Internet Use

https://www.census.gov/library/stories/2022/10/2020-island-areas-computer-internet-use.html

2. Describe the procedures for the collection, including: the statistical methodology for stratification and sample selection; the estimation procedure; the degree of accuracy needed for the purpose described in the justification; any unusual problems requiring specialized sampling procedures; and any use of periodic (less frequent than annual) data collection cycles to reduce burden.

An adequate sample size is necessary for the sample to be representative, and it is important for statistical power and meaningful analyses of subgroup data. This is particularly important when interpreting the results or communicating implications for natural resource and visitor management. To account for anticipated refusals, “over-sampling” is often necessary to achieve a desired sample size (see total number of contacts required in Table 3). When there are too few subjects, it becomes difficult to detect statistically significant effects, leading to inconclusive inferences. Conversely, when there are too many subjects, even trivially small effects may be statistically significant. Statistical power is the probability that a statistical significance test will correctly reject the null hypothesis for a specified value of an alternative hypothesis (Cohen, 1988). In other words, it is the probability of detecting an effect or a change in a variable in the sample when that effect or change actually occurs in the population.
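The definition of statistical power can be made concrete with a normal-approximation sketch for comparing a proportion between two independent groups (for example, two strata). The function name, the proportions 0.5 and 0.6, and the group sizes are hypothetical; the calculation assumes a two-sided test and uses the unpooled standard error under the alternative hypothesis.

```python
from statistics import NormalDist


def power_two_proportions(p1: float, p2: float, n1: int, n2: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided z-test of p1 != p2 (normal approximation)."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)           # about 1.96 for alpha = 0.05
    se = (p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2) ** 0.5
    shift = abs(p1 - p2) / se                             # standardized true difference
    # Probability that the test statistic falls in either rejection region.
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


# Detecting a 10-percentage-point difference between two groups:
print(round(power_two_proportions(0.5, 0.6, 100, 100), 2))  # roughly 0.3
print(round(power_two_proportions(0.5, 0.6, 385, 385), 2))  # roughly 0.8
```

Larger groups raise power; the same calculation also shows why very large samples make even trivially small differences statistically significant.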

For each jurisdictional population, we intend to select a random sample of individuals aged 18 or older, stratified geographically as described in Table 5. More detail on the current jurisdictional information collection is provided in Table 5a. The random sample will be drawn by the selected survey firm using standard sample selection tools. The sample frame will be developed from address mailing lists obtained and maintained by the survey firms and from other sources as needed, depending on the coverage of these sources. The strata have been designed to account for the differing sizes of the populations in the areas close to coral reefs.

We have used the standard approach to estimating the sample size for a stratified population:

$$n = \frac{t^{2} N p(1-p)}{t^{2} p(1-p) + \alpha^{2}(N-1)}$$

where n is the sample size, N is the population size (the total number of cases), α is the acceptable margin of error, t is the value from the t-distribution corresponding to the chosen confidence level (approximately 1.96 for 95% confidence with large samples), and p is the expected population proportion.

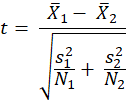

Based on the calculated sample size, the following formula is used to determine the margin of error, c, for each jurisdiction and stratum:

$$c = z\sqrt{\frac{p(1-p)}{n}}$$

where z is the critical z-value (here, 1.96 for a 95% confidence level), p is the sample proportion (here, 0.5), and n is the sample size. Because there are multiple variables of interest, p was set at 0.5, the value that maximizes p(1 − p) and therefore gives the most conservative (largest) sample size and margin of error. The final sample size will be determined based on the margin of error, as well as jurisdictional partner needs (for example, desired resolution of data) and the project budget.
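The sample-size and margin-of-error formulas can be checked against the tables with a short sketch. It assumes t ≈ 1.96 (the large-sample value for 95% confidence) and p = 0.5; the function names are hypothetical. The margin-of-error function omits a finite population correction, which appears to match most entries in Table 5.

```python
import math


def sample_size(N: int, moe: float, t: float = 1.96, p: float = 0.5) -> int:
    """n = t^2 N p(1-p) / (t^2 p(1-p) + moe^2 (N - 1)), rounded up."""
    numerator = t ** 2 * N * p * (1 - p)
    denominator = t ** 2 * p * (1 - p) + moe ** 2 * (N - 1)
    return math.ceil(numerator / denominator)


def margin_of_error(n: int, z: float = 1.96, p: float = 0.5) -> float:
    """c = z * sqrt(p(1-p) / n), without a finite population correction."""
    return z * math.sqrt(p * (1 - p) / n)


print(sample_size(32_422, 0.05))        # St. Croix 18+ population at +/-5%: 380
print(sample_size(10 ** 9, 0.05))       # effectively infinite population: 385
print(f"{margin_of_error(385):.2%}")    # 4.99%
print(f"{margin_of_error(1125):.2%}")   # 2.92%
```

The 385 expected completes per stratum in Table 5a correspond to the large-population solution for a ±5% margin of error at 95% confidence.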

Table 5: Sample size requirements for each surveyed jurisdiction

| Jurisdiction | Total Sample Size | Total Margin of Error (95% CI) | Strata | Sample Size by Stratum | Stratum Margin of Error (95% CI) |
|---|---|---|---|---|---|
| 1. Puerto Rico | 1,800 | 2.3% | Coastal North Municipalities | 730 | 3.6% |
| | | | Coastal South Municipalities | 401 | 4.9% |
| | | | Inland Municipalities | 669 | 3.8% |
| 2. CNMI | 1,600 | 2.4% | Saipan | 1,396 | 2.6% |
| | | | Tinian | 140 | 8.1% |
| | | | Rota | 64 | 12.0% |
| 3. Guam | 800 | 3.4% | Northern villages | 660 | 3.8% |
| | | | Southern villages | 140 | 8.3% |
| 4. Hawai’i | 1,700 | 2.4% | Hawaii County | 425 | 4.8% |
| | | | Oahu County | 425 | 4.8% |
| | | | Kauai County | 425 | 4.8% |
| | | | Maui County | 425 | 4.8% |
| 5. Florida | 2,000 | 2.2% | Monroe County | 350 | 5.2% |
| | | | Miami-Dade County | 600 | 4.0% |
| | | | Martin County | 350 | 5.2% |
| | | | Broward County | 350 | 5.2% |
| | | | Palm Beach County | 350 | 5.2% |
| 6. American Samoa | 815 | 3.4% | Urban villages of Tutuila | 218 | 6.6% |
| | | | Semi-urban villages of Tutuila | 376 | 5.1% |
| | | | Rural villages of Tutuila | 107 | 9.5% |
| | | | Village of Aua | 114 | 9.2% |
| 7. USVI | 1,125 | 2.9% | St. Croix | 385 | 5.0% |
| | | | St. Thomas | 385 | 5.0% |
| | | | St. John | 355 | 4.9% |
| TOTAL | 9,840 | 1.0% | | | |

Table 5a. USVI strata and sample sizes (Census, 2020).

| Strata | Substrata | 18+ Population | Households | Expected Completes | Adjusted Sample Size* | Margin of Error (95% CI) |
|---|---|---|---|---|---|---|
| St. Croix | N/A | 32,422 | 18,083 | 385 | 2,117 | 4.99% |
| St. Thomas | N/A | 34,338 | 19,705 | 385 | 2,117 | 4.99% |
| St. John | N/A | 3,300 | 1,854 | 355 | 1,952 | 4.91% |
| TOTAL | N/A | 70,060 | 39,642 | 1,125 | 6,186 | 2.92% |

*Assuming a 20% response rate and a 10% non-eligible/non-deliverable rate. If either of these assumptions needs revision, the adjusted sample sizes will change accordingly.
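The adjusted sample sizes in Table 5a can be reproduced by dividing expected completes by the 20% response rate, inflating the result by 10% for non-eligible or non-deliverable addresses, and rounding down. The sketch below (hypothetical function name) uses integer arithmetic to avoid floating-point rounding. It also offers the alternative of dividing by 0.9, which exactly offsets a 10% loss of issued addresses and gives slightly larger samples; which convention the vendor applies is an assumption to confirm.

```python
def adjusted_sample_size(completes: int, response_rate_pct: int = 20,
                         ineligible_pct: int = 10, divide: bool = False) -> int:
    """Addresses to issue so that `completes` interviews are expected.

    divide=False: completes / rate * (1 + ineligible share), rounded down
                  (reproduces Table 5a).
    divide=True:  completes / rate / (1 - ineligible share), rounded up.
    """
    numerator = completes * 100  # completes / (rate / 100), kept as integers
    if divide:
        numerator *= 100
        denominator = response_rate_pct * (100 - ineligible_pct)
        return -(-numerator // denominator)  # ceiling division
    numerator *= 100 + ineligible_pct
    denominator = response_rate_pct * 100
    return numerator // denominator


strata = {"St. Croix": 385, "St. Thomas": 385, "St. John": 355}
sizes = {name: adjusted_sample_size(n) for name, n in strata.items()}
print(sizes, sum(sizes.values()))  # {'St. Croix': 2117, 'St. Thomas': 2117, 'St. John': 1952} 6186
print(adjusted_sample_size(385, divide=True))  # 2139
```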

Periodicity

This survey will be conducted approximately every five to seven years to minimize the cost burden (Table 6).

Table 6. Periodicity of Surveys Implemented in each U.S. Coral Reef Jurisdiction

| Jurisdiction | First Cycle of Surveys | Second Cycle of Surveys | Third Cycle of Surveys |
|---|---|---|---|
| 1. American Samoa | 2014 | 2021 | 2028 |
| 2. CNMI | 2016-2017 | 2024 | 2031 |
| 3. Guam | 2016 | 2023 | 2030 |
| 4. Hawai’i | 2015 | 2020 | 2027 |
| 5. Florida | 2014 | 2019 | 2026 |
| 6. Puerto Rico | 2014-2015 | 2022 | 2029 |
| 7. U.S. Virgin Islands | 2017 | 2025 | 2032 |

3. Describe the methods used to maximize response rates and to deal with nonresponse. The accuracy and reliability of the information collected must be shown to be adequate for the intended uses. For collections based on sampling, a special justification must be provided if they will not yield "reliable" data that can be generalized to the universe studied.

Methods designed to maximize response rates will be employed at every phase of the data collection effort. The surveys are user-friendly, with clear, easy-to-understand questions. Each survey can be completed in 20 minutes or less (see Supporting Statement Part A). The survey topic and related questions were developed to be interesting to respondents. Each survey uses lists of response options so that respondents can answer questions by checking the appropriate boxes, which may aid recall and analysis. To take advantage of various methods of communication and information access, provide respondents with alternative response options, and maximize response rates, a combination of face-to-face, mail, and online surveys will be used based on the feasibility and effectiveness of each mode in the jurisdiction. For each approach, specific protocols will also be followed to minimize bias and reduce error.

Face-to-face Interviews

Before sampling begins, interviewers will be trained in the sampling protocols to ensure quality and consistent implementation, maximize response rates, and minimize interviewer bias. The training program will cover sampling schedules, standard field operations and protocols for contacting household residents, backup procedures for field interruptions or missed sampling days or sites, log forms (response rates, nonresponse, and unoccupied households), survey instruments, data recording and storage, and supporting documentation of study procedures. Interviewers will be provided with a handbook and supplies and will complete multiple rounds of practice interviews. Interviewers will also be required to wear a uniform or display evidence of a local affiliation (e.g., a professional shirt with a logo or a nametag labeled with the institution). This serves as a visual cue to household residents about the purpose of the interviewer’s visit and helps establish trust in and the legitimacy of the research (Groves & Couper, 2008).

The importance of interviewers adhering to the designed process to collect consistently reliable and accurate data will be emphasized. Interviewers can unintentionally introduce bias into respondents’ answers, so they will be trained to avoid bias introduced through body language, facial expressions, voice inflection, or voiced opinions. Interviewers must ask the questions exactly as written on the survey form and remain neutral while respondents give their answers. This will help ensure that the data are collected consistently and accurately.

Before an interview proceeds, each potential respondent is informed about the purpose of the survey and asked whether they are willing to participate in the research project. The interviewer assures individuals that their contact information will remain confidential and will never be associated with their responses. Individuals are eligible to participate if they are residents of the household and at least 18 years of age. The eligible person who has had the most recent birthday is selected to participate and be included in the sample. This quasi-random selection process prevents interviewer selection bias and keeps the interviewer from repeatedly choosing the same type of person to interview (English et al., 2001).
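The most-recent-birthday rule is simple to apply in the field by asking the household, but it can also be expressed as a selection function, for example to check recorded household rosters. The sketch below is hypothetical (the `Resident` record and function names are illustrative) and assumes birth dates are known; in practice the interviewer only needs to ask which eligible adult had the most recent birthday.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Resident:
    name: str
    birth_date: date


def age_on(birth: date, today: date) -> int:
    """Completed years of age on `today`."""
    had_birthday = (today.month, today.day) >= (birth.month, birth.day)
    return today.year - birth.year - (0 if had_birthday else 1)


def most_recent_birthday(birth: date, today: date) -> date:
    """Latest birthday on or before `today`; Feb 29 maps to Feb 28 in non-leap years."""
    def birthday_in(year: int) -> date:
        try:
            return birth.replace(year=year)
        except ValueError:  # Feb 29 in a non-leap year
            return date(year, 2, 28)

    this_year = birthday_in(today.year)
    return this_year if this_year <= today else birthday_in(today.year - 1)


def select_respondent(household: List[Resident], today: date) -> Optional[Resident]:
    """Return the adult (18+) resident whose birthday was most recent, or None."""
    adults = [r for r in household if age_on(r.birth_date, today) >= 18]
    if not adults:
        return None
    return min(adults, key=lambda r: (today - most_recent_birthday(r.birth_date, today)).days)


household = [
    Resident("A", date(1980, 3, 15)),
    Resident("B", date(1975, 11, 2)),
    Resident("C", date(2010, 6, 1)),   # most recent birthday, but under 18
]
print(select_respondent(household, date(2025, 7, 1)).name)  # A
```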

Mail Surveys

In some instances (subject to budget constraints), surveys may be delivered, or respondents contacted, by mail in a given jurisdiction. In these cases, to maximize response rates, materials are sent to individuals following a specific sequence based on the Dillman Tailored Design Method (Dillman, 2000; Dillman et al., 2014). This method uses personalization and repeated contacts to increase the likelihood that an individual will complete the survey. Personalization is designed to distinguish the survey from “junk mail” (which typically goes into the trash unopened) and to ensure that potential respondents feel that the research project is legitimate and that they are truly important to its success. All persons in the sample receiving the survey by mail are sent an initial survey packet containing the survey, a detailed cover letter, and a business reply envelope. The envelope and cover letter are addressed to each individual by name and mailed with a first-class postage stamp for a more personal contact. The cover letter will explain the purpose of the project and why a response is important, state that all personal information will be kept confidential, and provide instructions for completing and returning the survey (by mail or online).

Survey responses and subsequent contacts will be tracked using individual identification numbers. One week after the first mailing, a follow-up postcard is sent to all recipients. The postcard serves as a thank-you to those who have already completed and returned the survey and as a reminder to those who have not. Three weeks after the initial mailing, recipients who have not yet returned their survey are sent a second complete packet of survey materials. This packet is identical to the first, except that the cover letter is slightly altered to further emphasize the importance of participation. Five weeks after the initial mailing, all non-respondents are sent a third packet of survey materials, with a cover letter that further emphasizes the importance of their participation.
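The contact sequence for mail surveys can be written as a schedule keyed to the date of the first mailing, with the later packets going only to sampled persons whose identification numbers have not been logged as returned. The names and the example start date below are hypothetical.

```python
from datetime import date, timedelta
from typing import List, Set, Tuple

# (weeks after first mailing, contact, audience), following the mail-survey sequence
CONTACTS = [
    (0, "initial survey packet", "all"),
    (1, "thank-you/reminder postcard", "all"),
    (3, "second survey packet", "non-respondents"),
    (5, "third survey packet", "non-respondents"),
]


def mailing_schedule(first_mailing: date) -> List[Tuple[date, str, str]]:
    """Dated list of contacts for one mail-survey wave."""
    return [(first_mailing + timedelta(weeks=w), contact, audience)
            for w, contact, audience in CONTACTS]


def recipients(audience: str, sampled_ids: Set[int], returned_ids: Set[int]) -> Set[int]:
    """IDs to receive a contact; follow-up packets skip anyone who has already responded."""
    return set(sampled_ids) if audience == "all" else sampled_ids - returned_ids


for when, contact, audience in mailing_schedule(date(2025, 3, 3)):
    print(when.isoformat(), contact, f"({audience})")
```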

Mixed Mode

While postal mail surveys have been the standard mode for collecting social science data, online surveys are becoming more practical given advances in technology and the widespread use of the Internet. Online surveys offer timeliness, reduced costs, and the ability to reach large populations. Survey platforms also allow more flexibility in the design of questions, user interaction, and tracking of responses. Using the Internet as a stand-alone survey mode does not currently seem feasible because there is no representative email sampling frame and Internet service is limited in some jurisdictions, but online surveys can be effective in maximizing response rates when combined with other methods. In a mail push-to-web approach, for example, survey administrators contact individuals by postal mail to invite them to participate in an online survey and direct them to respond via a secure link. Previous studies using this approach have suggested that the U.S. Postal Service Delivery Sequence File (DSF), an address-based sampling (ABS) frame with near-complete coverage of U.S. households, may provide the best source of coverage for household surveys (Link et al., 2008; Messer & Dillman, 2011). This approach will be considered in jurisdictions where an ABS frame and Internet service are available. The design and distribution of online surveys will follow the same approach used for the mail surveys (Dillman et al., 2014).

Nonresponse Bias

Nonresponse may occur because of refusals to participate in the survey, failure to locate or reach units in the sample, or a resident being temporarily away from home (Cochran, 1977; Groves, 2006; Groves & Couper, 1998). To account for anticipated refusals and other nonresponse, sampling above the targeted sample sizes will be required. Standard tests for nonresponse bias will be conducted to assess how representative the sample is of the population. The first set of tests will examine potential nonresponse bias among residents who refuse to participate in face-to-face interviews. Individuals who decline to participate are asked whether they would be willing to share the reason(s) for declining (open-ended question format); this takes approximately two minutes (see nonresponse questions in Supporting Statement Part A, 2.5). The interviewer will have a prepopulated checklist of nonresponse reasons documented in previous research on nonresponse to household interviews (Groves & Couper, 1998). Nonresponse bias tests will be based on observed and recorded characteristics (e.g., gender and age) of the individuals refusing to participate. Additional data on the social and economic ecology of sample households can also be examined. For example, U.S. Census geographic units and measures, such as crime and population density, or observations of the physical condition of a property, can be used to analyze nonresponse bias at the household, block, or county level (Groves, 2006; Groves & Couper, 1998). If significant differences are observed for specific variables, further analyses will be conducted to determine the extent of potential bias and whether statistical techniques such as weighting are needed to provide unbiased results.

The second set of nonresponse tests will focus on individuals who were mailed a survey instrument (or a link to an online survey) but did not complete it. A standard way to test for nonresponse bias is to compare those who respond to the first mailing with those who respond only to the second mailing on the characteristics under investigation (e.g., age, gender, and race). Those who return the second questionnaire are, in effect, a sample of nonrespondents to the first mailing, on the assumption that they are representative of that group. Differences between the groups will be assessed by comparing their means for a random sample of variables. Weighting to demographic characteristics can also be performed to correct for differences due to nonresponse and to ensure that the final weighted sample is representative of the population of interest (Dillman et al., 2014).
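Weighting of this kind can be sketched as a cell-based adjustment: each respondent in a cell receives a weight equal to the cell's population count divided by the number of completed surveys in that cell. The example below uses the USVI strata, 18+ populations, and expected completes from Table 5a as the cells; the same calculation applies to demographic cells (for example, age by gender) built from Census counts. The agreement proportions are hypothetical, and the contractor's actual weighting procedure (e.g., raking across several characteristics) may differ.

```python
from typing import Dict

# 18+ population and expected completes by stratum (Table 5a)
POPULATION = {"St. Croix": 32_422, "St. Thomas": 34_338, "St. John": 3_300}
COMPLETES = {"St. Croix": 385, "St. Thomas": 385, "St. John": 355}


def cell_weights(population: Dict[str, int], completes: Dict[str, int]) -> Dict[str, float]:
    """Weight per respondent in each cell: population count / completed surveys."""
    return {cell: population[cell] / completes[cell] for cell in population}


def weighted_proportion(proportions: Dict[str, float]) -> float:
    """Combine cell-level proportions using the cell weights."""
    weights = cell_weights(POPULATION, COMPLETES)
    total = sum(weights[c] * COMPLETES[c] for c in COMPLETES)
    return sum(weights[c] * COMPLETES[c] * proportions[c] for c in COMPLETES) / total


def unweighted_proportion(proportions: Dict[str, float]) -> float:
    """Simple pooled proportion across all respondents, ignoring weights."""
    return sum(COMPLETES[c] * proportions[c] for c in COMPLETES) / sum(COMPLETES.values())


# Hypothetical share of respondents agreeing with a statement in each stratum
agree = {"St. Croix": 0.60, "St. Thomas": 0.50, "St. John": 0.90}
print(f"unweighted {unweighted_proportion(agree):.3f}, weighted {weighted_proportion(agree):.3f}")
```

Because St. John is sampled at a much higher rate than its share of the adult population, the unweighted estimate in this hypothetical case (0.660) overstates the jurisdiction-wide proportion (0.565).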

The survey will also be translated and administered in multiple languages to address language barriers as a potential cause of nonresponse (Table 7). We expect some variation in language and culture within each jurisdiction, and Table 7 shows that one or more major languages beyond English are spoken in each jurisdiction. Certain concepts may carry culture-specific attributes and meanings that must be explicitly taken into account to ensure sound interpretation of cross-cultural data (Peng et al., 1991; Singh, 1995). To accommodate multiple languages, increase response rates, and ensure that the sample is representative of the population, the survey contractors will, where appropriate, ensure that the survey questions are translated into the proper language and cultural context. For face-to-face interviews, local interviewers will conduct the interview in the appropriate language. Responses will be tracked to determine whether there are statistically significant differences in survey results between those who speak English at home and those who do not.

Table 7: Major Languages Spoken in each U.S. Coral Reef Jurisdiction

| Jurisdiction | Major Languages Spoken |
|---|---|
| 1. American Samoa | English, Samoan |
| 2. CNMI | English, Chamorro, Carolinian, Chinese Mandarin, Tagalog |
| 3. Guam | English, CHamoru, Chuukese, Tagalog, Korean |
| 4. Hawai’i | English, Hawaiian Pidgin |
| 5. Florida | English, Spanish |
| 6. Puerto Rico | English, Spanish |
| 7. U.S. Virgin Islands | English, Spanish, Negerhollands, Virgin Islands Creole |

4. Describe any tests of procedures or methods to be undertaken. Tests are encouraged as effective means to refine collections, but if ten or more test respondents are involved OMB must give prior approval.

Most of the variables collected use 5-point Likert scales that approximate a normal distribution, so they can be treated as continuous and their means and standard errors estimated. Scales with five response values whose distribution is approximately normal in the sampled population can be treated as continuous variables (Morgan et al., 2006; Revilla, Saris, & Krosnick, 2014). Using such scales in parametric statistics is a common and widely accepted practice in social science (Baker, Hardyck, & Petrinovich, 1966; Borgatta & Bohrnstedt, 1980; Gaito, 1980; Havlicek & Peterson, 1977; Kempthorne, 1955; Vaske, 2008). Binary and categorical variables will also be used to segment the population into subgroups (e.g., by activity participation, occupation, county) and to compare means on variables of interest (e.g., coral reef importance, condition, and threats). The data will be weighted to best represent the jurisdiction’s population where appropriate.

Subgroups

Subgroups will be defined using certain variables of interest. For example, subgroups based on activity participation will be determined from Q1, providing a way to categorize respondents and analyze data by activity type. Users in different activity groups have been shown to hold differing values (Ditton, Loomis, & Choi, 1992; Loomis & Paterson, 2014), so comparisons among them can reveal subgroup variation on topics such as reef importance to quality of life (Q8), support for management options (Q16), engagement in pro-environmental behaviors (Q18, Q20, Q22), and other topics measured in this survey.

For example, Q3 data (reasons why fishing/gathering is important to residents) could be compared across the types of fishing indicated in Q1 (e.g., recreational fishing, spearfishing, gathering of marine resources); these fishing categories may vary by jurisdiction. This analysis would indicate whether different fishing categories are associated with different fishing motivations (Leong et al., 2020). Subgroup information also informs local jurisdictional management on how to target communication and outreach to stakeholder groups (Loomis et al., 2008a, 2008b, 2008c; Petty & Cacioppo, 1986).

Descriptive Statistics

Frequency distributions (counts, percentages, bar graphs/histograms) will be examined for normality and skewness. Cross-tabulations (n-way) will provide an initial examination of the relationship between two or more variables. The Pearson chi-square (χ²) test will be used to test the hypothesis that the row and column variables are independent of each other, at significance levels such as p ≤ .10, .05, .01, or .001. This test of independence evaluates statistically significant differences between proportions for two or more groups/variables:

$$\chi^{2} = \sum \frac{(f_o - f_e)^{2}}{f_e}$$

where $f_o$ is the observed frequency in each cell and $f_e$ is the expected frequency for each cell.
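The Pearson chi-square statistic, χ² = Σ (f_o − f_e)² / f_e, can be computed directly from a cross-tabulation, with expected frequencies f_e = (row total × column total) / grand total and (r − 1)(c − 1) degrees of freedom. The sketch below uses a hypothetical 2 × 2 table of counts (e.g., divers vs. non-divers by whether they perceive reef decline) and compares the statistic with standard critical values at p = .05. In practice, a library routine such as SciPy's `scipy.stats.chi2_contingency` would also return the p-value; note that it applies Yates' continuity correction to 2 × 2 tables by default.

```python
from typing import List, Tuple

# Upper 5% critical values of the chi-square distribution by degrees of freedom
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}


def chi_square_independence(table: List[List[float]]) -> Tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, f_o in enumerate(row):
            f_e = row_totals[i] * col_totals[j] / grand_total  # expected frequency
            statistic += (f_o - f_e) ** 2 / f_e
    df = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, df


# Hypothetical counts: rows = divers / non-divers, columns = perceives decline / does not
observed = [[30, 10],
            [20, 40]]
stat, df = chi_square_independence(observed)
print(round(stat, 2), df, stat > CRITICAL_05[df])  # 16.67 1 True
```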

Other descriptive statistics will include measures of central tendency (mean, median, and mode) and variability (standard deviation, variance, and range) of responses. For example, mean values will be calculated for each perceived resource condition (Q9) and weighted by importance. The same will be done for perceptions of future conditions (Q10), importance of reefs (Q11), and other indicators measured in the survey. The data for Q9 and Q10 could then be analyzed for correlations or gaps between perceived resource condition and the actual condition reported by NCRMP benthic, climatic, or fisheries data.
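The descriptive measures listed above are available in Python's standard `statistics` module. The sketch below summarizes a hypothetical set of 5-point Likert responses to a single item; the function name and the ratings are illustrative, and survey weights are not applied.

```python
import statistics
from typing import Dict, Sequence


def describe(responses: Sequence[int]) -> Dict[str, float]:
    """Central tendency and variability for one Likert-scale item."""
    return {
        "n": len(responses),
        "mean": statistics.mean(responses),
        "median": statistics.median(responses),
        "mode": statistics.mode(responses),
        "sd": statistics.stdev(responses),           # sample standard deviation
        "variance": statistics.variance(responses),  # sample variance (n - 1 denominator)
        "range": max(responses) - min(responses),
    }


# Hypothetical ratings of reef condition on a 1-5 scale
ratings = [1, 2, 3, 3, 4, 5]
print(describe(ratings))
```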

Independent Samples t-tests

Independent samples t-tests will test whether the means of two independent random samples differ statistically. For example, do diving and fishing subgroups differ in their perception of resource conditions? Have resident perceptions changed in the second round of monitoring? The equation for the t statistic is:

$$t = \frac{\bar{X}_1 - \bar{X}_2}{\sqrt{\dfrac{s_1^2}{N_1} + \dfrac{s_2^2}{N_2}}}$$

where: $\bar{X}_1$ and $\bar{X}_2$ are the means of the two samples, $s_1^2$ and $s_2^2$ are the sample variances, and $N_1$ and $N_2$ are the sample sizes.

The degrees of freedom associated with the t statistic (assuming unequal variances in the two samples) is calculated as:

$$df = \frac{\left(\dfrac{s_1^2}{N_1} + \dfrac{s_2^2}{N_2}\right)^2}{\dfrac{\left(s_1^2 / N_1\right)^2}{N_1 - 1} + \dfrac{\left(s_2^2 / N_2\right)^2}{N_2 - 1}}$$

where: $s_1^2$ and $s_2^2$ are the sample variances, and $N_1$ and $N_2$ are the sample sizes.
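A minimal sketch of the unequal-variance t statistic and its degrees of freedom as given above, using only the Python standard library; the two samples are hypothetical. The p-value needs the t distribution, which the standard library does not provide; SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` performs this same unequal-variance (Welch) test and reports a p-value.

```python
import math
import statistics


def welch_t(sample1, sample2):
    """Return (t, df) for two independent samples, allowing unequal variances."""
    n1, n2 = len(sample1), len(sample2)
    mean1, mean2 = statistics.mean(sample1), statistics.mean(sample2)
    # Sample variances s_1^2 and s_2^2 (n - 1 denominator).
    var1, var2 = statistics.variance(sample1), statistics.variance(sample2)

    v1, v2 = var1 / n1, var2 / n2  # s_1^2 / N_1 and s_2^2 / N_2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Hypothetical perception scores for two sub-groups (e.g., divers vs. anglers).
divers = [3, 4, 4, 5, 5]
anglers = [2, 3, 3, 4]
t, df = welch_t(divers, anglers)
print(f"t = {t:.3f}, df = {df:.2f}")
```

The degrees of freedom are generally not a whole number under this approximation, which is expected.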

One-way Analysis of Variance (ANOVA)

One-way analysis of variance (ANOVA) will test mean scores for statistically significant differences between sub-groups for each relevant question. If there are statistically significant differences, Tukey’s post-hoc tests will assess the pairwise differences between sub-groups. The strength of the relationship (the effect size statistic) will also be estimated (Rosenthal, 1994; Rosenthal, Rosnow, & Rubin, 2000). For example, an ANOVA could test mean perception (Q9) scores for statistically significant differences among divers, anglers, and residents who participate in coastal recreation generally (Q1), with Tukey’s tests then identifying which pairs of sub-groups differ. This type of information on different user groups could inform local jurisdictional management about differing social values or motivations, and about how to target communication and outreach to stakeholder groups (Loomis et al., 2008a, 2008b, 2008c; Petty & Cacioppo, 1986).
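A minimal sketch of the one-way ANOVA F statistic, computed from between-group and within-group sums of squares with the Python standard library. Eta-squared is used here as one common effect size statistic; that choice, and the group scores, are assumptions for illustration. P-values and Tukey's post-hoc comparisons would typically come from a statistics package (for example, SciPy's `scipy.stats.f_oneway` and `scipy.stats.tukey_hsd`).

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within, eta_squared) for a list of groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = sum(all_values) / len(all_values)

    # Between-group sum of squares: group size times squared deviation of group mean.
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: deviations of each value from its own group mean.
    ss_within = sum((x - sum(g) / len(g)) ** 2 for g in groups for x in g)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    # Eta-squared: share of the total sum of squares explained by group membership.
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, eta_squared


# Hypothetical Q9-style scores for three Q1 sub-groups.
divers = [4, 5, 5, 4]
anglers = [3, 3, 4, 2]
general = [3, 4, 3, 4]
f_stat, df_b, df_w, eta2 = one_way_anova([divers, anglers, general])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}, eta^2 = {eta2:.3f}")
```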

Regressions

Multiple regression is one example of a test that could be used to examine the effects of independent variables (e.g., values, perceptions, and attitudes) on a dependent variable (e.g., participation in pro-environmental behavior). This type of analysis could show the links between these constructs, which are grounded in the theory of planned behavior (Ajzen & Fishbein, 1980) and cognitive hierarchy (Vaske & Donnelly, 1999). The basic regression model is:

$$Y = a + b_1 X_1 + b_2 X_2 + \cdots + b_p X_p + \varepsilon$$

where: $\hat{Y} = a + b_1 X_1 + \cdots + b_p X_p$ is the predicted value of the dependent variable $Y$, $X_1$ through $X_p$ are $p$ distinct independent or predictor variables, $a$ is the predicted value of $Y$ when all of the independent variables ($X_1$ through $X_p$) are equal to zero, $b_1$ through $b_p$ are the estimated regression coefficients, and $\varepsilon$ is the error term.
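A minimal sketch of how $a$ and $b_1$ through $b_p$ could be estimated by ordinary least squares, solving the normal equations with Gaussian elimination in plain Python. The data are hypothetical and generated without error so the recovered coefficients can be checked by eye. The normal-equations approach is chosen here only for transparency; for real survey analysis, a library routine based on QR or SVD (such as `numpy.linalg.lstsq` or statsmodels' `OLS`) is numerically more robust and also reports standard errors.

```python
def fit_ols(predictors, y):
    """Estimate a, b_1, ..., b_p by ordinary least squares.

    predictors: one row [X_1, ..., X_p] per respondent; y: observed values of Y.
    Solves the normal equations (X'X) b = X'y by Gaussian elimination.
    Returns [a, b_1, ..., b_p].
    """
    # Prepend a column of ones so the first coefficient is the intercept a.
    X = [[1.0] + [float(v) for v in row] for row in predictors]
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [
        [sum(xr[i] * xr[j] for xr in X) for j in range(k)]
        + [sum(xr[i] * yi for xr, yi in zip(X, y))]
        for i in range(k)
    ]
    # Forward elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda row_idx: abs(A[row_idx][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for row in range(col + 1, k):
            factor = A[row][col] / A[col][col]
            for c in range(col, k + 1):
                A[row][c] -= factor * A[col][c]
    # Back substitution.
    coef = [0.0] * k
    for i in reversed(range(k)):
        known = sum(A[i][j] * coef[j] for j in range(i + 1, k))
        coef[i] = (A[i][k] - known) / A[i][i]
    return coef


# Hypothetical data generated exactly from Y = 1 + 2*X1 + 3*X2 (no error term),
# so the fit should recover a = 1, b1 = 2, b2 = 3 up to rounding.
X_rows = [[0, 1], [1, 0], [2, 2], [3, 1], [4, 3]]
y = [4, 3, 11, 10, 18]
a, b1, b2 = fit_ols(X_rows, y)
print(f"a = {a:.2f}, b1 = {b1:.2f}, b2 = {b2:.2f}")  # a = 1.00, b1 = 2.00, b2 = 3.00
```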

Other models, such as Poisson or negative binomial regression for count outcomes, may also be used, depending on the types of variables (categorical, binomial, or continuous), how they are measured, and what information is needed.

Indicator Scores

Finally, the data will be used to measure pre-defined indicators of human use of coral reef resources, knowledge, attitudes, and perceptions of coral reefs and management. Indicator scores will be calculated based on indices developed for each indicator component (grouped survey questions). There are several ways to create an index, and the most appropriate method will depend on how the component was measured and the types of variables used. For example, Q11 could be used to develop an overall index of reef importance to ecosystem services. The index could be derived by summing each respondent's answers to the seven items in Q11 and then normalizing the sums to a 0-100 scale using the minimum-maximum scaling method defined by the following equation:

$$x' = \frac{x - \min}{\max - \min} \times 100$$

where: $x$ is the value of a given variable, $x'$ is its rescaled (0-100) value, $\min$ is the minimum value in the distribution, and $\max$ is the maximum value in the distribution.

Under the approach adopted during indicator development following the first round of NCRMP monitoring, a respondent needed to have answered every question contained in the index to receive an index value, and answers of “not sure” were treated as missing when constructing the additive index (Abt Associates, 2019). The average indicator value is then calculated (see note 8). At the end of every monitoring cycle, primary and secondary data will be used in combination to update the scores of the 13 socioeconomic indicators for NCRMP. The secondary data analysis plan can be found in the 2019 Indicator Development Report (Abt Associates, 2019).
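One possible reading of the index procedure described above, sketched in Python: sum each respondent's item answers, exclude anyone with a missing or “not sure” answer, rescale the sums to 0-100 with min-max scaling, and average. The coding of “not sure” as `None`, the 1-5 answer scale, and the use of the observed (rather than theoretical) minimum and maximum are assumptions for illustration; the actual NCRMP coding rules are documented in the 2019 report.

```python
def additive_index(answers):
    """Sum one respondent's item answers, or return None if any item is missing.

    Missing answers and "not sure" answers are both coded as None here (an
    assumption), so a respondent receives an index value only if every item
    was answered.
    """
    if any(a is None for a in answers):
        return None
    return sum(answers)


def indicator_score(respondents):
    """Average 0-100 index across respondents with complete answers."""
    sums = [additive_index(r) for r in respondents]
    complete = [s for s in sums if s is not None]
    lo, hi = min(complete), max(complete)  # min and max of the observed distribution
    if hi == lo:
        raise ValueError("min-max scaling is undefined when all index values are equal")
    scaled = [100 * (s - lo) / (hi - lo) for s in complete]
    return sum(scaled) / len(scaled)


# Hypothetical answers to seven Q11-style items on a 1-5 scale; None = missing or "not sure".
respondents = [
    [5, 5, 5, 5, 5, 5, 5],
    [1, 1, 1, 1, 1, 1, 1],
    [3, 3, 3, 3, 3, 3, 3],
    [4, None, 4, 4, 4, 4, 4],  # excluded: one "not sure" answer
]
print(indicator_score(respondents))  # 50.0
```

Note 8 mentions multiple imputation as a possible alternative to this complete-case rule; the sketch implements only the complete-case approach.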

5. Provide the name and telephone number of individuals consulted on the statistical aspects of the design, and the name of the agency unit, contractor(s), grantee(s), or other person(s) who will actually collect and/or analyze the information for the agency.

Individuals consulted on the statistical aspects of the design:

Don English, Ph.D.

National Visitor Use Monitoring Program Manager

USDA Forest Service

202-205-9595

Don.English@usda.gov

Mary Allen and Chloe Fleming will supervise data collection, with Sarah Gonyo serving as a statistical consultant. Survey vendors will be secured prior to the start of each jurisdiction’s data collection and have not yet been assigned. Data analysis will be completed by Amanda Alva.

Mary Allen, Ph.D.

Socioeconomics Coordinator

Lynker, under contract to NOAA

Coral Reef Conservation Program

NOAA National Ocean Service, Office for Coastal Management

1305 East-West Highway

Silver Spring, MD 20910

240-528-8151

Mary.Allen@noaa.gov

Chloe Fleming, MPS

Coastal and Marine Social Scientist & Policy Specialist

CSS, under contract to NOAA

NOAA National Ocean Service, National Centers for Coastal Ocean Science

Hollings Marine Laboratory

Charleston, SC

843-481-0445

Chloe.Fleming@noaa.gov

Sarah Gonyo, Ph.D.

Economist

Biogeography Branch, Marine Spatial Ecology Division

National Centers for Coastal Ocean Science

NOAA National Ocean Service

1305 East-West Highway

Silver Spring, MD 20910

240-533-0382

Sarah.Gonyo@noaa.gov

Amanda Alva

Social Science Research Analyst

CSS, under contract to NOAA

National Centers for Coastal Ocean Science

NOAA National Ocean Service

1305 East-West Highway

Silver Spring, MD 20910

202-936-5856

References

Abt Associates, Inc. (2019). National Coral Reef Monitoring Program: Socioeconomic Indicator Development. Final report submitted to NOAA’s Office for Coastal Management. 142 p.

Ajzen, I., & Fishbein, M. (1980). Understanding attitudes and predicting social behavior. Englewood Cliffs, NJ: Prentice-Hall.

Alessa, L., Kliskey, A., & Brown, G. (2008). Social-ecological hotspots mapping: A spatial approach for identifying coupled social-ecological space. Landscape and Urban Planning, 85, 27-39.

American Samoa Department of Health. (2007). American Samoa NCD Risk Factors STEPS Report. American Samoa Government and World Health Organization. 63 pp.

Andrew, R.G., Burns, R.C., & Allen, M.E. (2019). The influence of location on water quality perceptions across a geographic and socioeconomic gradient in Appalachia. Journal of Water, 11, 2225.

Baker, B.O., Hardyck, C.D., & Petrinovich, L.F. (1966). Weak measurements vs. strong statistics: An empirical critique of S. S. Stevens' proscriptions on statistics. Educational and Psychological Measurement, 26, 291-309.

Borgatta, E.F., & Bohrnstedt, G.W. (1980). Level of measurement: Once over again. Sociological Methods & Research, 9(2), 147-160.

Brown, G. (2005). Mapping spatial attributes in survey research for natural resource management: Methods and applications. Society and Natural Resources, 18(1), 1-23.

Cochran, W.G. (1977). Sampling techniques, 3rd edition. New York: John Wiley & Sons.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Second Edition. Hillsdale, NJ: Lawrence Erlbaum Associates, Publishers.

Dalton, T., Thompson, R., & Jin, D. (2010). Mapping human dimensions in marine spatial planning: An example from Narragansett Bay, Rhode Island. Marine Policy, 34, 309-319.

Dillman, D. A. (2000). Mail and Internet surveys: The tailored design method (2nd ed.). New York: Wiley.

Dillman, D. A., Smyth, J., & Christian, L. (2014). Internet, Phone, Mail, and Mixed-Mode Surveys: The Tailored Design Method (4th ed.). New York: John Wiley & Sons.

Ditton, R., Loomis, D., & Choi, S. (1992). Recreation specialization: reconceptualization from a social world's perspective. Journal of Leisure Research, 24, 33-51.

English, D., Kocis, S.M., Zarnoch, S.J., & Arnold, J.R. (2001). Forest Service National Visitor Use Monitoring Process: Research method documentation. Gen. Tech. Rep. SRS-57. Asheville, NC: U.S. Department of Agriculture Forest Service, Southern Research Station. 14 p.

Fiaui, P.A., & Hishinuma, E.S. (2009). Samoan adolescents in American Samoa and Hawaii: Comparison of youth violence and youth development indicators. A study by the Asian/Pacific Islander Youth Violence Prevention Center. Aggression and Violent Behavior, 14(6), 478-487.

Gaito, J. (1980). Measurement scales and statistics: Resurgence of an old misconception. Psychological Bulletin, 87, 564-567.

Gravlee, C.C., & Dressler, W.W. (2005). Skin pigmentation, self-perceived color, and arterial blood pressure in Puerto Rico. American Journal of Human Biology, 17, 195-206.

Groves, R.M. (2006). Nonresponse rates and nonresponse bias in household surveys. The Public Opinion Quarterly, 70(5), 646-675.

Groves, R.M., & Couper, M.P. (1998). Nonresponse in household interview surveys. New York: John Wiley & Sons, Inc.

Guerrero, R.T.L., Novotny, R., Wilkens, L.R., Chong, M., White, K.K., Shvetsov, Y.B., Buyum, A., Badowski, G., & Blas-Laguana, M. (2017). Risk factors for breast cancer in the breast cancer risk model study of Guam and Saipan. Cancer Epidemiology, 50, 221-233.

Havlicek, J.E., & Peterson, N.L. (1977). Effect of the violation of assumptions upon significance levels of the Pearson r. Psychological Bulletin, 84, 373-377.

Kempthorne, O. (1955). The randomization theory of experimental inference. Journal of the American Statistical Association, 50, 946-967.

Kish, L. (1949). A procedure for objective respondent selection within the household. Journal of the American Statistical Association, 44(247), 380-387.

Krenzke, T., Li, L., & Rust, K. (2010). Evaluating within household selection rules under a multi-stage design. Survey Methodology, 36(1), 111-119.

Leeworthy, V.R., Schwarzmann, D., Goedeke, T.L., Gonyo, S.B., & Bauer, L.J. (2018). Recreation use and spatial distribution of use by Washington households on the outer coast of Washington. Journal of Park and Recreation Administration, 36, 56-68.

Leong, K.M., Torres, A., Wise, S., & Hospital, J. (2020). Beyond recreation: When fishing motivations are more than sport or pleasure. NOAA Admin Rep. H-20-05, 57 p. doi:10.25923/k5hk-x319.

Link, M.W., Battaglia, M.P., Frankel, M.R., Osborn, L., & Mokdad, A.H. (2008). Comparison of address-based sampling (ABS) versus random digit dialing (RDD) for general population surveys. Public Opinion Quarterly, 72(1), 6-27.

Loomis, D.K., & Paterson, S.K. (2014). The human dimensions of coastal ecosystem services: Managing for social values. Ecological Indicators, 44, 6-10.

Loomis, D.K., Anderson, L.E., Hawkins, C., & Paterson, S.K. (2008a). Understanding coral reef use: SCUBA diving in the Florida Keys by residents and non-residents during 2006-2007. The Florida Reef Resilience Program, (p. 141).

Loomis, D.K., Anderson, L.E., Hawkins, C., & Paterson, S.K. (2008b). Understanding coral reef use: recreational fishing in the Florida Keys by residents and non-residents during 2006-2007. The Florida Reef Resilience Program, (p. 119).

Loomis, D.K., Anderson, L.E., Hawkins, C., & Paterson, S.K. (2008c). Understanding coral reef use: snorkeling in the Florida Keys by residents and non-residents during 2006-2007. The Florida Reef Resilience Program, (p. 135).

Messer, B.L., & Dillman, D.A. (2011). Surveying the general public over the internet using address-based sampling and mail contact procedures. The Public Opinion Quarterly, 75(3), 429-457.

Morgan, G.A., Gliner, J.A., & Harmon, R.J. with Kraemer, H.C., Leech, N.L., & Vaske, J.J. (2006). Understanding and evaluating research in applied and clinical settings. Mahwah, NJ: Lawrence Erlbaum Associates.

Peng, T. K., Peterson, M. F., & Shyi, Y. P. (1991). Quantitative Methods in Cross-National Management Research: Trends and Equivalence Issues. Journal of Organizational Behavior, 12(2), 87-107.

Perez, C.M., Guzman, M., Ortiz, A.P., Estrella, M., Valle, Y., Perez, N., Haddock, L., & Suarez, E. (2008). Prevalence of the metabolic syndrome in San Juan, Puerto Rico. Ethnicity and Disease, 18(4), 434-441.

Petty, R.E., & Cacioppo, J.T. (1986). Communication and persuasion: Central and peripheral routes to attitude change. New York: Springer-Verlag.

Revilla, M. A., Saris, W. E., & Krosnick, J. A. (2014). Choosing the Number of Categories in Agree-Disagree Scales. Sociological Methods & Research, 43(1), 73–97.

Rosenthal, R. (1994). Parametric measures of effect size. In H. Cooper & L. Hedges (Eds.), The handbook of research synthesis (pp. 231-244). New York, NY: Russell Sage Foundation.

Rosenthal, R., Rosnow, R.L., & Rubin, D.B. (2000). Contrasts and effect sizes in behavioral research: A correlational approach. Cambridge, UK: Cambridge University Press.

Singh, J. (1995). Measurement Issues in Cross-Cultural Research. Journal of International Business Studies, 26(3), 597-619.

Vaske, J.J. (2008). Survey research and analysis: Applications in parks, recreation and human dimensions. State College, PA: Venture Publishing.

Vaske, J.J., & Donnelly, M.P. (1999). A value-attitude-behavior model predicting wildland preservation voting intentions. Society and Natural Resources, 12, 523-537.

1 https://www.census.gov/quickfacts/fact/table/PR/PST045222 [Census Total Population]

2 https://www.census.gov/library/stories/state-by-state/florida-population-change-between-census-decade.html [Census Total Population for five counties]

3 https://www.census.gov/data/tables/2020/dec/2020-us-virgin-islands.html [Census Total Population for three islands only]

4 https://www.census.gov/data/tables/2020/dec/2020-guam.html [Census Total Population]

5 https://www.census.gov/data/tables/2020/dec/2020-american-samoa.html [Census Total Population for Eastern & Western Districts; Ofu, Olosega, Tau Counties]

6 https://census.hawaii.gov/census_2020/data/ [Census Total Population for Hawai’i, Honolulu, Kauai, and Maui Counties]

7 https://www.census.gov/data/tables/2020/dec/2020-commonwealth-northern-mariana-islands.html [Census Total Population for Saipan, Tinian, Rota]

8 The NCRMP socioeconomics team is exploring modification to this approach for handling missing data (e.g., multiple imputation).

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Tadesse Wodajo |


| File Created | 2024-09-09 |
