SCSEP CSS 1205-0040 Supporting Statement Part B_ clean

Senior Community Service Employment Program (SCSEP)

OMB: 1205-0040

OMB Control No. 1205-0040

November 30, 2024

ATTACHMENT B

GENERAL INSTRUCTIONS FOR COLLECTION

OF INFORMATION EMPLOYING STATISTICAL METHODS

1. Describe (including a numerical estimate) the potential respondent universe and any sampling or other respondent selection methods to be used. Data on the number of entities (e.g., establishments, State and local government units, households, or persons) in the universe covered by the collection and in the corresponding sample are to be provided in tabular form for the universe as a whole and for each of the strata in the proposed sample. Indicate expected response rates for the collection as a whole. If the collection had been conducted previously, include the actual response rate achieved during the last collection.

Title V of the Older Americans Act requires that the Senior Community Service Employment Program (SCSEP) include in its core measures “Indicators of effectiveness in serving employers, host agencies, and project participants.” In its Final Rule published on June 30, 2018, the Department defined this core measure as “the combined results of customer assessments of the services received by each of these three customer groups.” (See section 641.710.) In the preamble to this section of the regulations, the Department stated that although the Workforce Innovation and Opportunity Act (WIOA) was piloting a new measure of effectiveness in serving employers, that measure does not have obvious application to SCSEP’s other two customer groups. As a result, the Department announced that it would continue to conduct the existing customer satisfaction surveys for each of its three customer groups “as an interim measure at least until the WIOA pilot is complete and a WIOA measure is defined in final form.” SCSEP has been conducting these three customer satisfaction surveys nationwide since 2004.

The interim measure adopted by SCSEP to satisfy the statutory requirement is the American Customer Satisfaction Index (ACSI). The survey approach employed allows the program flexibility and, at the same time, captures common customer satisfaction information that can be aggregated and compared among national and state grantees. The measure is created with a set of three core questions that form a customer satisfaction index. The index is created by combining scores from the three specific questions that address different dimensions of customers' experience. Additional questions that do not affect the assessment of grantee performance are included to allow grantees to effectively manage the program.

The ACSI measures customer experience in 10 economic sectors and 47 key industries that together represent a broad swath of the national economy. (https://www.theacsi.org/national-economic-indicator/national-sector-and-industry-results). In addition, over 100 Federal government agencies and services have used the ACSI to measure citizen satisfaction. (https://www.theacsi.org/industries/government)

As stated in the preamble to section 641.710, during this interim period, the Department will explore with grantees, and with its three customer groups, options for best measuring the effectiveness of SCSEP’s services. The Department will also explore ways to improve the efficiency of the current customer surveys (including the use of online surveys and changes to the administration of the employer survey) and will examine what, if any, new or revised questions would support an index of effectiveness as an alternative to the current index of satisfaction.

Based on feedback from its customers and its grantees, the Department is requesting approval of several changes to the participant, host agency, and employer surveys: adding one question to the participant and host agency surveys, creating two versions of the employer survey and administering both versions centrally, and administering the host agency survey and a portion of the employer survey digitally. The move to digital administration is not only an opportunity to reduce the costs of collecting data; it is also an opportunity to reduce the time needed to collect the information, reduce the burden on respondents, and improve the quality of that information. Current research confirms that data quality is comparable or better.1 2 The projected cost savings, along with improved data quality, make the move to digital obvious, especially as the population of the United States and the world moves online for everything from medicine and education to entertainment and shopping. See Part A for a detailed explanation of the proposed changes.

SCSEP currently funds 71 grantees: 19 national grantees (two of which have both general and set-aside grants) operating in 47 states, the District of Columbia (DC), and Puerto Rico; and 52 state grantees, including DC and Puerto Rico. The three overseas territories and the U.S. Virgin Islands (USVI) do not participate in the surveys. The grantees are:

NATIONAL GRANTEES

AARP Foundation

Asociación Nacional Pro Personas Mayores

Associates for Training and Development, Inc. (A4TD)

Center for Workforce Inclusion (formerly SSAI, Senior Services America, Inc.)

Easter Seals, Inc.

Goodwill Industries International, Inc.

Institute for Indian Development

International Pre-Diabetes Center Inc.

National Able Network

National Asian Pacific Center on Aging (General and Set-Aside)

National Caucus and Center on Black Aged, Inc.

National Council on Aging, Inc.

National Indian Council on Aging (General and Set-Aside)

National Older Worker Career Center

National Urban League

Operation A.B.L.E of Greater Boston, Inc.

SER – Jobs for Progress National, Inc.

Vantage Aging (formerly Mature Services)

The Workplace, Inc.

STATE GRANTEES

Alabama Department of Senior Services

Alaska Department of Labor and Workforce Development

Arizona Department of Economic Security

Arkansas Department of Human Services, Division of Aging and Adult Services

California Department of Aging

Colorado Department of Human Services, Aging and Adult Services

Connecticut Department of Aging and Disability Services

Delaware Division of Services for Aging and Adults with Physical Disabilities

District of Columbia Department of Employment Services

Florida Department of Elder Affairs

Georgia Department of Human Services

Hawaii Department of Labor and Industrial Relations

Idaho Commission on Aging

Illinois Department on Aging

Indiana Department of Workforce Development

Iowa Department on Aging

Kansas Department of Commerce

Kentucky Cabinet for Health and Family Services

Louisiana Governor's Office of Elderly Affairs

Maine Department of Health and Human Services

Maryland Department of Labor, Workforce Development & Adult Learning

Massachusetts Executive Office of Elder Affairs

Michigan Aging and Adult Services Agency

Minnesota Department of Employment and Economic Development

Mississippi Department of Employment Security

Missouri Department of Health and Senior Services

Montana Department of Labor and Industry

Nebraska Department of Labor

Nevada Aging and Disabilities Services Division

New Hampshire Business and Economic Affairs

New Jersey Department of Labor and Workforce Development

New Mexico Aging and Long-Term Services Department

New York State Office for the Aging

North Carolina Dept. of Health and Human Services, Div. of Aging and Adult Services

North Dakota Department of Human Services

Ohio Department of Aging

Oklahoma Department of Human Services

Oregon Department of Human Services, Aging and People with Disabilities

Pennsylvania Department of Aging, Bureau of Aging Services

Puerto Rico Department of Labor & Human Resources

Rhode Island Department of Labor and Training

South Carolina Department on Aging

South Dakota Department of Labor and Regulation

Tennessee Department of Labor and Workforce Development

Texas Workforce Commission

Utah Department of Human Services

Vermont Department of Disabilities, Aging and Independent Living

Virginia Department for Aging and Rehabilitative Services

Washington Department of Social and Health Services, State Unit on Aging

West Virginia Bureau of Senior Services

Wisconsin Department of Health Services, Bureau of Aging and Disability Resources

Wyoming Department of Workforce Services

A. Participant and Host Agency Surveys

1. Who is Surveyed

Participants - All SCSEP participants who are active at the time of the survey or have been active in the previous 12 months are eligible to be chosen for inclusion in the random sample of records. In the survey for PY 2021, there were approximately 39,235 participants eligible for the survey, of whom 16,929 were selected for the participant sample.

Host Agency Contacts - Host agencies are public agencies, units of government, and non-profit agencies that provide subsidized employment, training, and related services to SCSEP participants. All host agencies that are active at the time of the survey or that have been active in the preceding 12 months are eligible for inclusion in the sample of records. In the survey for PY 2021, there were approximately 22,386 host agencies eligible for the survey, of which 11,093 were selected for the host agency sample.

2. Sample Size and Procedures

The national and state grantees have parallel, but complementary procedures. The national grantees have a target of 370 at the first stage of sampling and, at the second stage, a target of 70 for each state in which the grantee provides services. The state grantees have only one stage to their sampling procedure. It is anticipated that a sample of 370 will yield 185 completed interviews at a 50 percent response rate. Following the sampling procedure, for each state grantee, 185 completed surveys should be obtained each year for both participants and host agencies. At least 185 completed surveys for both customer groups will be obtained for each national grantee, depending on the number of states in which each national grantee is operating. For all but the smallest national grantees, the number of completed surveys exceeds 185. In cases where the number eligible for the survey is small and 185 completed interviews are not attainable (a situation common among state grantees), the sample is actually the population of all participants or host agencies. The surveys of participants and host agencies are conducted through a mail house once each program year. The target of 185 completed surveys produces a sufficiently narrow confidence interval to provide an accurate estimate of the population values for the ACSI. See table below for illustrative example.

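| | Population | Stage One Sample Target | Sample Size | Achieved Sample Size |
| --- | --- | --- | --- | --- |
| National Grantee | 2956 | 370 + | 420 | 210 |
| California | 300 | | 70 | 35 |
| Colorado | 600 | | 70 | 35 |
| Kansas | 550 | | 70 | 35 |
| Missouri | 350 | | 70 | 35 |
| Texas | 451 | | 70 | 35 |
| Wisconsin | 705 | | 70 | 35 |
| State Grantee Large | 400 | 370 | 370 | 185 |
| State Grantee Small | 200 | 200 | 200 | 100 |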
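The arithmetic behind the illustrative table can be sketched in a few lines of Python. This is a minimal sketch, not the Department's sampling program: the function names are hypothetical, and the rule that a national grantee's total sample is the larger of the stage-one target and the sum of the per-state targets is an assumption inferred from the table.

```python
def expected_completes(sample_size, response_rate=0.5):
    """Completed surveys expected at the projected response rate."""
    return round(sample_size * response_rate)


def state_grantee_sample(population, target=370):
    """One-stage rule: sample the target, or everyone if the pool is smaller."""
    return min(population, target)


def national_grantee_sample(state_populations, stage_one_target=370,
                            per_state_target=70):
    """Two-stage rule (assumed): up to 70 per state, and at least 370 overall
    when the grantee's population allows it."""
    per_state = {s: min(p, per_state_target) for s, p in state_populations.items()}
    floor = min(stage_one_target, sum(state_populations.values()))
    total = max(sum(per_state.values()), floor)
    return per_state, total


# State populations taken from the illustrative table.
states = {"California": 300, "Colorado": 600, "Kansas": 550,
          "Missouri": 350, "Texas": 451, "Wisconsin": 705}
per_state, total = national_grantee_sample(states)
print(total, expected_completes(total))
print(state_grantee_sample(400), expected_completes(state_grantee_sample(400)))
print(state_grantee_sample(200), expected_completes(state_grantee_sample(200)))
```

With the table's populations, the sketch reproduces the sample sizes and achieved sample sizes shown for the national grantee and for the large and small state grantees.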

The actual number of participants sampled in PY 2021, the year of the last completed survey analysis, was 16,929. There were 8,712 returned surveys that had answers to all three ACSI questions, for a response rate of 51.5%. In the same year, 11,093 host agencies were surveyed. There were 4,645 returned surveys, for a response rate of 41.9%. For both surveys, all participants and host agencies that were active within the 12 months prior to the drawing of the samples were eligible to be surveyed. See table below.

| | Population | Sample Size | Percent Sampled | Achieved Sample Size | Response Rate |
| --- | --- | --- | --- | --- | --- |
| Participants | 39,236 | 16,929 | 43.1% | 8,712 | 51.5% |
| Host Agencies | 22,386 | 11,093 | 49.6% | 4,645 | 41.9% |
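The percentages in the table follow directly from the counts. The short check below recomputes them from the PY 2021 figures reported above; the helper name `pct` and the dictionary layout are illustrative only.

```python
def pct(numerator, denominator):
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


# (population, sample size, achieved sample size) for PY 2021
py2021 = {
    "Participants": (39236, 16929, 8712),
    "Host Agencies": (22386, 11093, 4645),
}

for group, (population, sampled, returned) in py2021.items():
    print(group,
          "percent sampled:", pct(sampled, population),
          "response rate:", pct(returned, sampled))
```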

The number of actual responses differs from the number expected by the sampling procedure because the large national grantees operate in many states, and sampling 70 from each of those states yields far more than the minimum sample of 370 would yield. At the same time, most state grantees do not have sufficient participants or host agencies to permit sampling, so all participants and host agencies are surveyed.

3. Response Rates

Response rates achieved for the participant and host agency surveys since 2004 have ranged from 41 percent to 70 percent.

(Note: There were no surveys for PY 2007 and 2016 due to the national grantee competition in those program years, and in PY 2011, there was no host agency survey.)

B. Employer Surveys

1. Who Is Surveyed

Employers that hire SCSEP participants and employ them in unsubsidized jobs were included in the Employer Survey from 2004-2019. To be considered eligible for the survey, the employer: 1) must not have served as a host agency in the past 12 months; 2) must have had substantial contact with the sub-grantee in connection with hiring of the participant; and 3) must not have received another survey from this program during the current program year.

All employers that met the three criteria described above were surveyed at the time the sub-grantee conducted the first case management follow-up, which typically occurs 30-45 days after the date of placement. The employer survey was suspended in PY 2019 because there were too few potential employer respondents to produce usable ACSI scores for the SCSEP performance measures. With this submission, SCSEP is proposing to create two versions of the employer survey, ETA-9124C1 and ETA-9124C2, and administer both centrally rather than having the sub-grantees deliver them. See Part A and pages 10-11 below for details.

With these new surveys, all employers will be surveyed once each program year if they have one or more placements in that program year. The total number of placements and thus employer surveys is variable (especially during the pandemic) and is decreasing annually as the number of SCSEP participants decreases. See Part A. We estimate that the annual number of host agency employers will be 1500, and the number of non-host agency employers will be 4500, for a total of 6000 potential respondents in a non-pandemic environment.

2. Sample Size and Procedures

All employers meeting the three criteria described above were surveyed. No sampling was used because the number of qualified employers is relatively low. Employers qualified for the survey if they did not also serve as host agencies, if the grantee was directly involved in making the placement, and if the employer was aware of the grantee’s involvement. Employers are only surveyed for the first hire they make in each 12-month period. The number of qualified employers in any given program year is estimated to be approximately 1500. That should yield 750 returned surveys. Although there are many more placements than 1500, roughly 30% of them are with host agencies, and most of the remainder were self-placements by the participant. In a 12-month period from March 2016-March 2017, there were 271 returned employer surveys. It was not possible to calculate a response rate because of grantee variations in implementing the survey. The Department developed new procedures, an enhanced management report, and new edits to the data collection system to improve consistency with survey administration and increase the number of employers surveyed. As set forth below, however, the Department is proposing additional changes for further consistency.

In order to obtain an adequate number of responses for the core performance measure, we propose to survey the universe of employers for both of the employer survey versions.

3. Response Rates

Response rates for the employer survey have been difficult to track because of variations in the administration of the survey, including inconsistencies in tracking the survey number and date of mailing. In prior years, the Department made several changes to the administration of the employer survey.

The changes included: 1) an enhanced management report that showed grantees when each qualified employer needs to be surveyed; 2) additional edits in the data collection system that warned grantees when they failed to deliver surveys; 3) edits that prevented the entry of inaccurate data, such as the entry of the same survey number more than one time; and 4) revised procedures that required closer monitoring of survey administration. These changes did not result in the expected increase in usable surveys for individual grantees. As a result, the Department suspended administration of the employer survey. As stated in Section 1 above, we have been working with the grantees to explore changes to survey administration that will produce an adequate number of employer responses and are now submitting a request to revise the employer survey and its administration based on grantee and customer input.

2. Describe the procedures for the collection of information including:

* Statistical methodology for stratification and sample selection,

* Estimation procedure,

* Degree of accuracy needed for the purpose described in the justification,

* Unusual problems requiring specialized sampling procedures, and

* Any use of periodic (less frequent than annual) data collection cycles to reduce burden.

A random sample is drawn annually from all participants and host agencies that were active at any time during the prior 12 months. The data come from the data collection and reporting system. We are now also proposing to survey all employers that have hired a SCSEP participant during the prior 12 months.

A. Design Parameters:

There are currently 21 national grantees operating in 47 states, DC, and Puerto Rico; two of the national grantees have two separate grants and thus two separate samples

There are 52 state grantees, including DC and Puerto Rico

The four territories -- three overseas territories and USVI -- have been unable to participate in the mailed surveys because of mail difficulties; these territories will be able to participate in the surveys to the extent that our request to use digital surveys is approved.

There are three customer groups to be surveyed (participants, host agencies, and employers)

Surveying each of these customer groups should be considered a separate survey effort.

The major difference between the three groups is the number of participants served in any given year by the national, state, and territorial grantees. Using the sampling from PY 2021, 16 of the 21 national grantees funded at that time had sufficient participants to provide a sample of 370. Only 3 state grantees had sufficient participants to be able to provide a sample of 370. Regarding the host agency sampling, 8 national grantees had sufficient numbers of host agencies to allow a sample of 370. Only one of the state grantees had a sufficient number of host agencies to permit sampling. Where sampling is not possible, all participants or host agencies are surveyed. American Samoa, Guam, the Northern Mariana Islands and the Virgin Islands did not participate in the surveys due to the inability to conduct time-sensitive mail surveys overseas. The methodology requires tight turnaround times for three waves of surveys.

a. Participant Surveys:

A point estimate for the ACSI score is required for each national grantee, both in the aggregate and for each state in which the national grantee is operating. The calculations of the ACSI are made using the formulas presented in Section 4, page 15, near the end of this document.

A point estimate for the ACSI score is required for each state grantee.

A sample of 370 participants from each national and state grantee will be drawn from the pool of participants who are currently active or have exited the program during the 12 months prior to the survey period.

Many state grantees may not have a total of 370 participants available to be surveyed. In those cases, all participants who are active or who have exited during the 12 months prior to the survey will be surveyed.

As indicated above, 370 participants will be sampled from each national grantee. With an expected response rate of 50 percent, this should yield 185 usable responses. However, a single grantee sample may not be distributed equally across the states in which a national grantee operates. We, therefore, aim for a sample of 70 in each state, with a potential of 35 responses. Where there are fewer than 70 potential respondents in the sample for a state, we select all participants. If the overall sample for a national grantee is less than 370 and there are additional participants in some states who have not been sampled, we will over-sample to bring the sample to 370 and the potential responses to at least 185.
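The two-stage draw described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the procedure actually run against the data collection system: the function name, the `(participant_id, state)` record layout, and the order of operations (a simple random sample of 370 first, then a per-state top-up) are all illustrative choices.

```python
import random
from collections import defaultdict


def draw_two_stage_sample(records, stage_one_target=370,
                          per_state_target=70, seed=None):
    """records: list of (participant_id, state) tuples for one national grantee."""
    rng = random.Random(seed)

    # Fewer eligible records than the target: survey the whole population.
    if len(records) <= stage_one_target:
        return list(records)

    # Stage one: simple random sample across the whole grantee.
    sample = rng.sample(records, stage_one_target)
    chosen = set(sample)

    # Stage two: top up each state to 70 records, or to all of its records
    # when the state has fewer than 70.
    by_state = defaultdict(list)
    for rec in records:
        by_state[rec[1]].append(rec)
    for pool in by_state.values():
        already = sum(1 for rec in pool if rec in chosen)
        shortfall = min(per_state_target, len(pool)) - already
        if shortfall > 0:
            remaining = [rec for rec in pool if rec not in chosen]
            extra = rng.sample(remaining, shortfall)
            sample.extend(extra)
            chosen.update(extra)
    return sample


# Hypothetical grantee with seven states of differing size.
populations = {"CA": 300, "CO": 600, "KS": 550, "MO": 350,
               "TX": 451, "WI": 705, "WY": 40}
records = [(f"{state}-{i}", state)
           for state, n in populations.items() for i in range(n)]
sample = draw_two_stage_sample(records, seed=1)
print(len(sample))
```

Because stage one is drawn across the whole grantee, large states usually reach 70 on their own and the top-up mainly affects the smaller states, which is why the final sample can exceed 370.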

The returns from PY 2021 were analyzed using the random sampling function within SPSS to determine the impact on the standard deviation of the ACSI for differing numbers of returns. The function allows the analyst to choose the number of records to be sampled for each run. The average standard deviation was calculated for grantees with 200 or fewer returns and compared to that for grantees with more than 200 returns. The average standard deviation for participant grantees with 200 or fewer returns was 23.6. The average standard deviation for participant grantees with more than 200 returns was 22.7. The analysis of standard deviations was used to provide greater confidence that grantees with smaller numbers of participants or host agencies would not have significantly different variability.
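A comparable check can be sketched outside SPSS. The example below is illustrative only: it uses synthetic scores rather than the PY 2021 returns, and the function name, the number of runs, and the score distribution are assumptions. It repeatedly draws random subsamples of a chosen size and averages their standard deviations, which is the kind of comparison described above.

```python
import random
import statistics


def average_subsample_sd(scores, n_returns, runs=200, seed=0):
    """Average sample standard deviation over repeated random subsamples."""
    rng = random.Random(seed)
    sds = [statistics.stdev(rng.sample(scores, n_returns)) for _ in range(runs)]
    return statistics.mean(sds)


# Synthetic ACSI-like scores on a 0-100 scale (not real survey data).
_rng = random.Random(42)
synthetic_scores = [min(100.0, max(0.0, _rng.gauss(80, 22))) for _ in range(2000)]
full_sd = statistics.stdev(synthetic_scores)

for n in (50, 100, 200, 400):
    print(n, round(average_subsample_sd(synthetic_scores, n), 1), round(full_sd, 1))
```

If the averaged standard deviations stay close to the full-data value across subsample sizes, smaller numbers of returns are not producing materially different variability.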

b. Host Agency Surveys:

A point estimate for the ACSI score is required for each national grantee, both in the aggregate and for each state in which the national grantee is operating.

A point estimate for the ACSI score is required for each state grantee.

A sample of 370 host agency contacts from each national and state grantee will be drawn from the pool of agencies hosting participants during the 12 months prior to the survey period.

Most state grantees do not have a total of 370 host agency contacts available to be surveyed. In those cases, all agencies hosting participants during the 12 months prior to the survey will be surveyed.

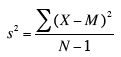

As indicated above, 370 host agencies will be sampled from each national grantee. With an expected response rate of 50 percent, this should yield 185 usable responses. However, a single grantee sample may not be distributed equally across the states in which a national grantee operates. We, therefore, aim for a sample of 70 in each state, with a potential of 35 responses. Where there are fewer than 70 potential respondents in the sample for a state, we select all host agencies. If the overall sample for a national grantee is less than 370 and there are additional host agencies in some states that have not been sampled, we will over-sample in those states to bring the sample to 370 and the potential responses to at least 185. To determine the impact of different sample sizes on standard deviations, a series of samples was drawn from existing host agency data (the returns from PY 2021) and analyzed. The average standard deviation was calculated for grantees with 200 or fewer returns and compared to that for grantees with more than 200 returns. The average standard deviation for grantees with 200 or fewer returns was 16.8. The average standard deviation for those with more than 200 returns was 18.3. The standard deviation formula used in SPSS is:
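For reference, the sample standard deviation as conventionally computed by statistical packages, including SPSS descriptive procedures, uses an n − 1 denominator. The notation below is supplied here as an assumption about what the original formula showed: x_i is an individual respondent's ACSI score, x̄ is the mean score, and n is the number of returns.

```latex
s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^{2}}
```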

The reason that the number surveyed is as high as it is stems from the fact that the sampling for grantees with a large number of participants and host agencies is a two-stage sample. The first stage seeks a total grantee sample of 370. The second stage seeks to ensure that, where possible, 70 participants or host agencies from each state served by a national grantee are included in order to provide data from all states served without unduly increasing the overall sample size. Since much of our customers’ experience is determined by local management of the program, surveying each state captures that dimension of the customer experience. The oversampling at this second stage also contributes to large numbers being surveyed among those national grantees that serve participants in many different states. National grantees currently operate in as few as one state and as many as 14 states.

We routinely conduct analyses to determine if any of the standard demographic variables have a significant influence on the participants’ responses. These analyses take place in a setting in which random samples are drawn; for the smaller grantees where there is no sampling, the total population of participants eligible for surveying is used. Using PY 2021 data, there is evidence of small variation in ACSI by gender, race, and ethnicity. Those with the highest education levels (those with Bachelor and post-Baccalaureate degrees) also express lower satisfaction than those with lower levels of education. It should be noted that ACSI scores do not differ among minority categories; only White participants express lower overall satisfaction. Those who are not Hispanic also express lower satisfaction compared to Hispanics.

For the employer surveys, all qualified employers are surveyed because the number of qualified employers is relatively low. Employers are only surveyed if they did not also serve as host agencies and if the grantee was involved in making the placement and the employer was aware of the grantee’s involvement. Employers are only surveyed for the first hire they make in each 12-month period. The number of qualified employers in any given program year is estimated to be approximately 1500. In 2013, there were 552 returned employer surveys; from March 2016-March 2017, there were 271. It was not possible to calculate a response rate for either period because of grantee non-compliance with the procedures for survey administration. As set forth above, revised procedures, an enhanced management report, and new edits to the data collection system did not yield the expected compliance with survey administration and did not result in an increase in employers surveyed. As a consequence, the Department is now seeking approval to use two separate versions of the employer surveys. We anticipate that this change in the survey populations and administration would yield 6000 potential respondents in a non-pandemic environment.

c. Employer Surveys:

We are proposing two versions of the employer survey: one for host agencies that hire participants as employees upon their exit from SCSEP (ETA-9124C1), and one for all non-host-agency employers that hire participants upon their exit from the program (ETA-9124C2). There would be no sampling, and all employers would be surveyed. The non-host agency employer survey would have skip questions for those employers that received a substantial service from the grantee and thus have a relationship with the grantee (ETA-9124C2B).

Qualified employers would be surveyed following the first time they hire a participant in a given program year. Once an employer has been surveyed, the employer will only be surveyed again when it has another placement and at least one year has passed since the last survey.
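The re-survey rule above can be expressed as a simple date check. This is a minimal sketch: the function name is hypothetical, and treating "at least one year" as 365 days is an assumption, since the text does not say how the interval is measured.

```python
from datetime import date, timedelta


def employer_due_for_survey(placement_date, last_survey_date=None,
                            min_gap_days=365):
    """Return True if an employer with a new placement should be surveyed."""
    # Never surveyed before: survey after the first placement.
    if last_survey_date is None:
        return True
    # Otherwise require at least a year since the last survey (assumed 365 days).
    return placement_date - last_survey_date >= timedelta(days=min_gap_days)


print(employer_due_for_survey(date(2022, 9, 1)))
print(employer_due_for_survey(date(2022, 9, 1), last_survey_date=date(2022, 3, 1)))
print(employer_due_for_survey(date(2022, 9, 1), last_survey_date=date(2021, 8, 1)))
```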

Both surveys would be administered by the same mail house that administers the participant and host agency surveys. The host agency employer survey would be administered digitally, as we have proposed for the regular host agency surveys. The non-host-agency employer survey would be administered by mail to non-host agency employers that did not receive a substantial service from the grantee (ETA-9124C2A) as a pilot project in PY 2022 to determine the most effective ways to increase responses from employers. The ETA-9124C2B employers would receive the survey digitally.

The statute and regulations include the surveys of all three customer groups as a core measure of performance for SCSEP that must be negotiated with the grantees and reported each year. Survey procedures ensure that no customer is surveyed more than once each year. Less frequent data collection would require a change in the law.

B. Degree of Accuracy Required

The 2000 amendments to the OAA designated customer satisfaction as one of the core SCSEP measures for which each grantee had negotiated goals and for which sanctions could be applied. In the first year of the surveys, PY 2004, baseline data were collected. The following year, PY 2005, was the first year when evaluation and sanctions were possible. Because of changes made by the 2006 amendments to the OAA, starting with PY 2007, the customer satisfaction measures became additional measures (rather than core measures), for which there are no goals and, hence, no sanctions. With the OAA amendments of 2016, the surveys are used as indicators of effectiveness in serving employers, host agencies, and participants and are again core measures for which goals must be established each year.

As set forth on pages 4 and 5, where the population of participants or host agencies for a particular grantee is less than 370, the whole population is surveyed. Where the population of participants or host agencies is greater than 370, a random sample is taken. The sample size of 370 per grantee, at the projected 50 percent response rate, yields 185 usable responses for the ACSI (participants or host agencies are only considered responsive if they return the survey and all three questions that make up the ACSI are completed). The 185-respondent target is important for at least two reasons. First, the scores obtained for any random sample are assumed to reflect the score that would be obtained if the whole population were surveyed. To test for this, confidence intervals around the ACSI are regularly calculated to ensure that the target provides “an estimated range of values with a given high probability of covering the true population value” (Yays, 1988, p. 206). The confidence interval for the ACSI for the last participant survey report (PY 2021) was 87.2 ± 2.7 for a random sample of 185 respondents with ACSI scores.

The second reason for the target of 185 is to have a sufficient number of cases for conducting multivariate analyses to better understand how the way the program delivers its services interacts with the measure of overall program quality (ACSI).
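To illustrate why the number of completed surveys matters for the confidence interval discussed above, the sketch below computes the half-width of a normal-approximation interval around a mean. It is illustrative only: the standard deviation of 20 and the multiplier of 1.96 (a 95 percent interval) are assumptions, since the confidence level and exact method used for the reported interval are not stated here, and the function name is hypothetical.

```python
import math


def ci_half_width(sd, n, z=1.96):
    """Half-width of a normal-approximation confidence interval for a mean."""
    return z * sd / math.sqrt(n)


# Compare a state-level return of 35 with the grantee-level target of 185,
# using an assumed standard deviation of 20 ACSI points.
for n in (35, 100, 185, 370):
    print(n, round(ci_half_width(20, n), 1))
```

Under these assumptions the interval at 185 completed surveys is less than half as wide as at 35, which is the sense in which the 185 target produces a sufficiently narrow interval at the grantee level.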

3. Describe methods to maximize response rates and to deal with issues of non-response. The accuracy and reliability of information collected must be shown to be adequate for intended uses. For collections based on sampling, a special justification must be provided for any collection that will not yield "reliable" data that can be generalized to the universe studied.

A. Maximizing Response Rates

a. Participant and Host Agency Surveys:

The responses are obtained using a uniform mail methodology. The rationale for using mail surveys is twofold: individuals and organizations that have a substantial relationship with program operators (in this case, the SCSEP sub-grantees) are highly likely to respond to a mail survey, and mail surveys are easily and reliably administered to potential respondents. The experience in administering the surveys by mail since 2004 has established the efficacy of this approach. However, in order to obtain higher response rates, ease the burden on respondents, and reduce cost, the Department has explored ways in which Internet surveys can be more successful with these client groups and is now seeking approval to use digital surveys for all host agencies (ETA-9124B), host-agency employers (ETA-9124C1), and non-host agency employers that received a substantial service from the grantee (ETA-9124C2B). Non-host agency employers that did not receive a substantial service (ETA-9124C2A) would receive paper surveys. We also seek to pilot digital surveys with select groups of participants (ETA-9124A).

As with other data collected on the receipt of services, the responses to the customer satisfaction surveys must be held private as required by applicable state law. Before promising respondents privacy of results, grantees must ensure that they have legal authority under state law for that promise.

The survey procedures set forth below include a set of contact strategies that have been shown to boost response rates. Participants receive up to five contacts, as needed. First, sub-grantee personnel notify participants orally that the survey will be coming in the mail soon. Second, participants included in the sample receive a personalized letter signed by the sub-grantee director informing them that they will receive a survey within a week and urging them to complete the survey and mail it back in the postage-paid envelope that will be included. Third, potential respondents receive a personalized letter from the grantee director with the survey instrument and return envelope. Fourth, a second personalized letter accompanies the second mailing of the survey (sent if the potential respondent’s first survey is not received within four weeks). Fifth, another personalized letter accompanies the third survey mailing, if required. Host agency contact persons receive all but the pre-survey letter because testing has established that the pre-survey letter does not significantly increase the response rate for host agencies. This contact strategy closely follows the strategies recommended by Dillman, Smyth, and Christian (2014).

SCSEP has also experimented with three different legends on the mailing envelope that were designed to encourage recipients to open the envelope and complete the survey. These legends were also found not to increase response rates. The Department will continue to follow the research on survey administration and explore features that can be added to the current survey administration to enhance response rates.

To ensure ACSI results are collected in a consistent and uniform manner, the following standard procedures are used by grantees to obtain participant and host agency customer satisfaction information:

ETA’s survey research contractor, The Charter Oak Group, determines the samples based on data in the SCSEP case management system. There are smaller grantees where 370 potential respondents will not be achievable. In such cases, no sampling takes place, and the entire population is surveyed. See the Design Parameters in Section 2 above for details.

Grantees are required to ensure that sub-grantees notify customers of the customer satisfaction survey and the potential for being selected for the survey. Sub-grantees are required to:

Inform participants at the time of enrollment and exit.

Inform host agencies at the time of assignment of a participant.

Inform employers at the time of the hiring of a participant.

Mail or email a customized version of a standard letter prepared by the Charter Oak Group to all customers selected for the survey informing them that they will be receiving a survey shortly.

When discussing the surveys with customers for any of the above reasons, refresh contact information, including mailing and email address.

Grantees are required to ensure that sub-grantees prepare and send pre-survey letters or emails to those participants selected for the survey.

Grantees provide the sample list to sub-grantees about 3 weeks prior to the date of the delivery of the surveys.

Letters and emails are personalized using a mail merge function and a standard text.

Each letter is printed on the sub-grantee’s letterhead and signed in blue ink by the sub-grantee’s director to provide the appearance of a personal signature; each email is sent by the sub-grantee manager using the manager’s email template and URL.

Grantees are responsible for the following activities:

Provide letterhead, signatures, and correct return address information to DOL for use in the survey cover letters, mailing envelopes, and emails.

Send participant samples to sub-grantees with instructions on preparing and sending pre-survey letters and emails.

Contractors to the Department of Labor are responsible for the following activities:

Provide sub-grantees with list of participants to receive pre-survey letters.

Print personalized cover letters and design personalized emails for first mailing of survey. Each letter is printed on the grantee’s letterhead and signed in blue ink with the signatory’s electronic signature; emails contain the name and logo of the grantee.

For mail surveys:

Generate mailing envelopes with appropriate grantee return addresses.

Generate survey instruments with bar codes and preprinted survey numbers.

Enter preprinted survey numbers for each customer into worksheet.

Assemble survey mailing packets: cover letter, survey, pre-paid reply envelope, and stamped mailing envelope.

Mail surveys on designated day. Enter the date of mailing into worksheet.

Send survey worksheet to the Charter Oak Group.

From the list of customers who responded to the first mailing, generate the list of non-respondents for the second mailing.

Print second cover letter with standard text (different text from the first letter). Letters are personalized as in the first mailing.

Enter preprinted survey number into worksheet for each customer to receive second mailing.

Assemble second mailing packets: cover letter, survey, pre-paid reply envelope, and stamped mailing envelope.

Mail surveys on designated day. Enter date of mailing into worksheet.

Send survey worksheet to the Charter Oak Group.

Repeat tasks above if third mailing is required.

For digital surveys:

Prepare digital survey with formatting compatible with computers and smartphones

Prepare cover letters for first and subsequent contact attempts that will accompany survey link with necessary and appropriate instructions

With the sampling lists generated from the SCSEP administrative database, prepare digital survey lists with codes attached to ensure the ability to match returns to administrative data (e.g., grantee and sub-grantee identification, organization type, organization location)

Track bad email addresses (bounce backs)

Track number of contacts needed prior to response

Administer additional contacts as determined necessary

Prepare data files with data required for matching to administrative data and analysis in a format compatible with SPSS
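The tracking steps above (recording each contact, flagging bounce backs, and building the list for the next mailing or email) can be sketched as a small worksheet structure. This is an illustration only: the `SurveyRecord` fields, the function names, and the default limit of three contacts are hypothetical and are not taken from the contractor's worksheet.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class SurveyRecord:
    survey_number: str            # preprinted number or digital survey code
    customer_id: str              # key for matching to administrative data
    contact_dates: list = field(default_factory=list)
    responded: bool = False
    bounced: bool = False         # digital surveys only: bad email address


def record_contact(records, when):
    """Log the date of a mailing or email for every record in the wave."""
    for rec in records:
        rec.contact_dates.append(when)


def next_wave(records, max_contacts=3):
    """Customers who still need a follow-up: no response yet, a deliverable
    address, and fewer than max_contacts attempts so far (assumed limit)."""
    return [
        rec for rec in records
        if not rec.responded
        and not rec.bounced
        and len(rec.contact_dates) < max_contacts
    ]
```

The number of contacts needed before a response is then simply `len(rec.contact_dates)` for each record marked as responded.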

Employer surveys:

Grantees are required to ensure that sub-grantees notify employers of the customer satisfaction survey and the potential for being selected for the survey. Employers should be informed at the time of the first placement of a participant in the program year.

Grantees and sub-grantees are responsible for the following activities:

For mail surveys to non-host agency employers, grantees and sub-grantees follow the same procedures as above for the participant survey.

For digital surveys to host-agency employers, grantees and sub-grantees follow the same procedures as above for the regular host agency survey.

B. Nonresponse Bias

The potential problem of missing data was a concern; even with the relatively high response rates presented in Section 1, non-response bias still must be addressed. A study of non-response bias and the impact of missing data was conducted at the University of Connecticut Statistics Department in 2013-2014.

Missing data are carefully controlled in regard to the ACSI. The index is composed of three separate questions, and the ACSI score is not calculated unless valid responses are recorded for all three questions. The response options are a scale from 1 to 10 and “Don’t know.” The ACSI score is complete only if the respondent records one of the 1-10 scores on all three questions.
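The completeness rule can be expressed as a short check. The sketch below is illustrative; the function name and the way answers are represented (an integer from 1 to 10, the string "Don't know", or `None` for a blank) are assumptions, not the format of the actual data files.

```python
VALID_SCORES = range(1, 11)  # the 1-10 response scale


def has_complete_acsi(answers):
    """True only if all three ACSI questions have a 1-10 score.
    'Don't know' and blank (None) answers make the ACSI incomplete."""
    return len(answers) == 3 and all(
        isinstance(a, int) and a in VALID_SCORES for a in answers
    )
```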

The objective was to obtain bias-adjusted point estimates of:

Overall ACSI score

ACSI score for each national grantee

ACSI score for each state grantee

The author of the study used two different modeling approaches to determine the degree to which nonresponse biased the ACSI results. Below are the predicted nationwide ACSI scores by each of the models and without any adjustment:

No adjustment: 82.40

Ordinal logistic adjustment: 85.58

Multinomial logistic adjustment: 84.73

Although the ordinal and multinomial logistic adjustments use different methodologies for estimating non-response bias, their estimates are within one point of each other (85.58 versus 84.73, a difference of 0.85). While they are both regression models, the major distinction is that the multinomial method assumes independence of choices and the ordinal method does not. As the statistician notes in his report: “Both of the regression models considered have assumptions that are difficult to validate in practice. However, the good news is that since the primary objective is score prediction (and not interpretation of regression coefficients), the models considered are quite robust to departures from ideal conditions.”

The fact that the scores change very little indicates that the non-respondents’ satisfaction is very similar to that of those who responded to the survey.3 Adjusting for nonresponse would slightly increase the scores of some but not all grantees. Given the relatively minor impact of nonresponse, we consider the adjustments unnecessary.

To continue monitoring the potential for nonresponse bias, we will annually compare respondents and non-respondents on variables that might differentially influence the ACSI score. In the past, we have noted that some demographic characteristics, for example, racial and ethnic differences, have a small but significant impact on ACSI scores. Other characteristics, such as gender and education, have had a significant impact on the ACSI score. We will test for those characteristics that have a significant impact, assess the potential for differences on those characteristics between respondents and non-respondents, and make adjustments where necessary.
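As an illustration only, one way to compare respondents and non-respondents on a single yes/no characteristic is a two-proportion z-test. The text does not specify which test will be used; the function name and the counts in the usage note are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(x_resp, n_resp, x_nonresp, n_nonresp):
    """Compare the share with a characteristic among respondents
    (x_resp of n_resp) and non-respondents (x_nonresp of n_nonresp).
    Returns (z, two_sided_p_value) using the pooled standard error."""
    p_resp = x_resp / n_resp
    p_nonresp = x_nonresp / n_nonresp
    pooled = (x_resp + x_nonresp) / (n_resp + n_nonresp)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_resp + 1 / n_nonresp))
    z = (p_resp - p_nonresp) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value
```

For example, `two_proportion_z_test(60, 100, 40, 100)` compares a hypothetical 60 percent share among respondents with a 40 percent share among non-respondents. A small p-value would flag the characteristic for the follow-up assessment described above.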

4. Describe any tests of procedures or methods to be undertaken. Testing is encouraged as an effective means of refining collections of information to minimize burden and improve utility. Tests must be approved if they call for answers to identical questions from 10 or more respondents. A proposed test or set of tests may be submitted for approval separately or in combination with the main collection of information.

The core questions that yield the single required measure for each of the three surveys are from the ACSI and cannot be modified. The supplemental questions included in this submission were revised in 2015 for the first time since OMB approved them in 2004. As set forth above, based on grantee and customer input we are requesting a few revisions to the participant and host agency surveys. We are also exploring the use of digital surveys for these customer groups.

The ACSI model (including the weighting methodology) is well documented (see http://www.theacsi.org/about-acsi/the-science-of-customer-satisfaction). The ACSI score is the weighted sum of the values of the three ACSI questions after they have been transformed to a 0 to 100 scale. Weights are applied to each of the three questions to account for differences in the characteristics of the state’s customer groups.

For example, assume the mean values of three ACSI questions for a state are:

1. Overall Satisfaction = 8.3

2. Met Expectations = 7.9

3. Compared to Ideal = 7.0

These mean values from the raw data must first be transformed to a 0 to 100 scale. This is done by subtracting 1 from each mean value, dividing the result by 9 (the range of a 1 to 10 raw data scale), and multiplying by 100:

1. Overall Satisfaction = (8.3 -1)/9 x 100 = 81.1

2. Met Expectations = (7.9 -1)/9 x 100 = 76.7

3. Compared to Ideal = (7.0 -1)/9 x 100 = 66.7

The ACSI score is calculated as the weighted average of these values. Assuming the weights for the example state are 0.3804, 0.3247 and 0.2949 for questions 1, 2 and 3, respectively, the ACSI score for the state would be calculated as follows:

(0.3804 x 81.1) + (0.3247 x 76.7) + (0.2949 x 66.7) = 75.4
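The worked example above can be reproduced with a short Python sketch. The function names are illustrative, and the weights are the example values from the text, not the published weights for any particular state.

```python
def to_100_scale(mean_raw):
    """Transform a mean on the 1-10 raw scale to the 0-100 scale."""
    return (mean_raw - 1) / 9 * 100


def acsi_score(question_means, weights):
    """Weighted average of the three transformed question means."""
    if len(question_means) != len(weights):
        raise ValueError("one weight is required per question")
    return sum(w * to_100_scale(m) for m, w in zip(question_means, weights))


# Example values from the text: Overall Satisfaction, Met Expectations,
# Compared to Ideal, and the example state's weights.
example_means = (8.3, 7.9, 7.0)
example_weights = (0.3804, 0.3247, 0.2949)

print(round(acsi_score(example_means, example_weights), 1))  # 75.4
```

The sketch does not round the transformed values before weighting, whereas the text rounds them to one decimal place; both approaches give 75.4 for this example.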

Weights are calculated by a statistical algorithm to minimize measurement error or random survey noise that exists in all survey data. State-specific weights are calculated using the relative distribution of ACSI respondent data for non-regulatory Federal agencies previously collected and analyzed by CFI and the University of Michigan.

Specific weighting factors have been developed for each state. New weighting factors are published annually. It should be noted that the national grantees have different weights applied depending on the states in which their sub-grantees’ respondents are located.

5. Provide the name and telephone number of individuals consulted on statistical aspects of the design and the name of the agency unit, contractor(s), grantee(s), or other person(s) who will actually collect and/or analyze the information for the agency.

The Charter Oak Group, LLC:

Barry A. Goff, Ph.D., (860) 659-8743, bgoff@charteroakgroup.com; and Ron Schack, (860) 478-7847, rschack@charteroakgroup.com.

Barry Goff was consulted on the statistical aspects of the design; Barry Goff and Ron Schack will collect the information.

Ved Deshpande, Department of Statistics, University of Connecticut, (860) 486-3414, ved.deshpande@uconn.edu, was also consulted on the statistical aspects of the design.

1 https://blogs.worldbank.org/impactevaluations/electronic-versus-paper-based-data-collection-reviewing-debate?cid=SHR_BlogSiteEmail_EN_EXT.

2 IOSR Journal of Humanities and Social Sciences (IOSR-JHSS) Volume 24, Issue 5, Ser.5 (May-2019), PP 31-38

3 Ved Deshpande, Department of Statistics, University of Connecticut, “Bias-adjusted Modeling of ACSI scores for SCSEP” (2014)

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | adams.anita.i@dol.gov;bpudlin |

| File Modified | 0000-00-00 |

| File Created | 2024-07-25 |
