SSB - Components Study final


Components Study of REAL Essentials

OMB: 0990-0480

Components Study of REAL Essentials Curriculum

Part B: SS Justification for the Collection

October 2021 (finalized)

Office of Population Affairs
U.S. Department of Health and Human Services
1101 Wootton Parkway, Suite 700
Rockville, MD 20852
Point of Contact: Tara Rice, tara.rice@hhs.gov

Contents

B1. Respondent Universe and Sampling Methods

B2. Procedures for Collection of Information

B3. Methods to Maximize Response Rates and Deal with Non-Response

B4. Test of Procedures or Methods to be Undertaken

B5. Individuals Consulted on Statistical Aspects and Individuals Collecting and/or Analyzing Data

Attachments

ATTACHMENT A: Section 301 of the Public Health Service Act

ATTACHMENT B: YOUTH OUTCOME SURVEY ITEM SOURCE LIST

ATTACHMENT C: STUDY PARENTAL CONSENT FORM

ATTACHMENT D: YOUTH OUTCOME SURVEY ASSENT FORM

ATTACHMENT E: YOUTH FOCUS GROUP ASSENT FORM

ATTACHMENT F: PERSONS CONSULTED

ATTACHMENT G: 60-Day Federal Register Notice

Instruments

INSTRUMENT 1: YOUTH OUTCOME SURVEY

INSTRUMENT 2: YOUTH FOCUS GROUP TOPIC GUIDE

INSTRUMENT 3: YOUTH ENGAGEMENT EXIT TICKET

INSTRUMENT 4: FIDELITY LOG

INSTRUMENT 5: FACILITATOR INTERVIEW TOPIC GUIDE

INSTRUMENT 6: DISTRICT/CBO LEADERSHIP INTERVIEW TOPIC GUIDE

Part B

B1. Respondent Universe and Sampling Methods

This Information Collection Request (ICR) includes the following activities associated with the core components outcome and implementation study: youth outcome surveys; youth focus groups; youth engagement exit tickets; facilitator fidelity logs; interviews with facilitators and district or community-based organization (CBO) leaders; and video and audio recordings of program sessions.

The study will be conducted at approximately 40 sites interested in offering the REAL Essentials Advance (REA) curriculum. Sites will include high schools and CBOs. They will be recruited from a variety of sources, including Office of Population Affairs (OPA) Teen Pregnancy Prevention (TPP) grantees; Family and Youth Services Bureau Sexual Risk Avoidance Education or Healthy Marriage and Relationship Education grantees currently or previously implementing REA; sites identified by the REA developer as delivering REA; and outreach to facilitators trained in REA. The sites will not be randomized. All sites will implement the REA curriculum; however, we expect the lessons they choose to vary. We will use this natural variation in program content and other factors (such as facilitator experience and study setting) to predict youth outcomes. Below we describe the respondent universe for each instrument.

Youth outcome surveys (Instrument 1). Eligible youth are those enrolled in classrooms offering REA: about 80 classrooms across the sites (2 classrooms at each of 40 sites), with as many as 2,000 youth in total. We expect the parents or guardians of 80 percent of eligible youth to grant permission for them to participate in the study (n = 1,600). We expect the youth outcome sample to include roughly equal numbers of males and females.
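The planning figures above can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes exactly 25 youth per classroom (the "two classrooms of about 25 youth" planning figure used in Section B2), and the function name `planned_sample` is hypothetical rather than part of any study system.

```python
# Planning arithmetic for the youth outcome survey sample.
# Assumption: exactly 25 youth per classroom; real enrollment will vary by site.


def planned_sample(sites=40, classrooms_per_site=2,
                   youth_per_classroom=25, consent_rate=0.80):
    """Return classroom, eligible-youth, and consented-youth counts (hypothetical helper)."""
    classrooms = sites * classrooms_per_site
    eligible = classrooms * youth_per_classroom
    consented = round(eligible * consent_rate)  # youth with parental permission
    return {"classrooms": classrooms, "eligible": eligible, "consented": consented}


print(planned_sample())
# {'classrooms': 80, 'eligible': 2000, 'consented': 1600}
```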

Youth focus groups (Instrument 2). Focus groups will be conducted in person or virtually with a subset of program participants who have parental consent (that is, youth whose parents did not opt them out of focus groups on the main study consent form). The study team will conduct no more than one focus group per site, recruiting 8 to 10 youth per site from a random sample of youth at that site with parental consent to participate in focus groups.
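A minimal sketch of how a site-level random draw for a focus group could be made reproducible, assuming a roster of study IDs for consented youth and a set of IDs whose parents opted out of focus groups. The function `draw_focus_group`, the ID format, and the idea of keeping the remaining randomly ordered youth as backups are illustrative assumptions, not the study's documented procedure.

```python
import random


def draw_focus_group(consented_ids, opted_out_ids, invite=10, seed=None):
    """Randomly order eligible youth at one site (hypothetical helper).

    Returns (invitees, backups): the first `invite` youth in random order,
    followed by the remaining eligible youth in the same random order, who
    could be contacted if invitees are unavailable.
    """
    # Sort first so the draw depends only on the seed, not on set ordering.
    eligible = sorted(set(consented_ids) - set(opted_out_ids))
    rng = random.Random(seed)
    order = rng.sample(eligible, len(eligible))  # a random permutation
    return order[:invite], order[invite:]


# Usage: 50 consented youth at a site, two of whom opted out of focus groups.
roster = [f"Y{n:03d}" for n in range(1, 51)]
invitees, backups = draw_focus_group(roster, {"Y004", "Y017"}, seed=2021)
print(len(invitees), len(backups))  # 10 38
```

Seeding the generator lets the team document and, if needed, reproduce each site's draw.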

Youth engagement exit tickets (Instrument 3). All youth with parental consent for the study will be asked to complete a short exit ticket survey after each REA session.

Fidelity logs (Instrument 4). All trained REA program facilitators will complete the fidelity logs after each REA session.

Facilitator interviews (Instrument 5). The study team will interview all REA program facilitators from all schools and CBOs.

District or CBO leadership interviews (Instrument 6). The study team will interview as many as four members of the leadership staff per participating district or CBO. Leaders might be district administrators, CBO leaders, or school principals. The study team will interview staff who are most knowledgeable about the decision to participate in the components study of REA, who are familiar with the school or CBO context, and who had a key role in determining the sequence of lessons to be used at their sites.

Video and audio recording of youth. The study team will collect video and audio recordings in up to two of the study schools. We will aim to record as many REA sessions as possible during a single semester.

B2. Procedures for Collection of Information

Statistical Power

This study will estimate the relationship between components of REA and youth outcomes using a multilevel model that accounts for variation at the site and youth levels. The model will adjust for contextual variables and key baseline measures. Outcomes for this analysis will be measured using immediate follow-up (program exit) surveys and six-month follow-up surveys. We will examine how outcomes vary according to site-level components (for example, the content categories selected for implementation at a site, the modules offered, and facilitator training and background) and variables representing individual experiences (for example, dosage and content received, quality and fidelity of delivery, and other factors). The estimation analysis will focus on the partial r² statistic, which can be used to identify which individual components, or combinations of components, are the best predictors of outcomes. The analysis can include interactions among components, recognizing that the whole collection of components might be greater than the sum of its parts.
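As an illustration only (the notation is ours, not the study's specification), a two-level random-intercept model for youth i at site j could be written as below, with C_j the site-level components, D_ij the individual experience measures (such as dosage and fidelity), and X_ij the baseline and contextual covariates. The partial r² for a component, or set of components, compares a reduced model that omits it with the full model; in a multilevel fit, residual variances would typically stand in for the sums of squares, so the formula is an approximation.

```latex
\begin{aligned}
Y_{ij} &= \beta_0 + \boldsymbol{\beta}_C^{\top}\mathbf{C}_j + \boldsymbol{\beta}_D^{\top}\mathbf{D}_{ij} + \boldsymbol{\gamma}^{\top}\mathbf{X}_{ij} + u_j + e_{ij}, \\
u_j &\sim N(0, \tau^2), \qquad e_{ij} \sim N(0, \sigma^2), \\
r^2_{\text{partial}} &= \frac{SSE_{\text{reduced}} - SSE_{\text{full}}}{SSE_{\text{reduced}}}
\end{aligned}
```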

We have achieved high response rates in similar studies of TPP programs in schools with group administration of follow-up surveys, such as the Federal Evaluation of Making Proud Choices (MPC; OMB Control Number 0990-0452), the Personal Responsibility Education Program (PREP; OMB Control Number 0970-0398), and the Evaluation of Adolescent Pregnancy Prevention Approaches (PPA; OMB Control Number 0990-0382). For example, two of the school-based PREP studies had response rates greater than 90 percent at first follow-up (12 months after baseline). The follow-up response rate at the PPA Chicago school-based site was 94 percent. We anticipate similar response rates for this study. Based on our experiences with MPC, PREP, and PPA, we expect to retain all sites in the study. We therefore base the power calculations described below on retaining all the sites in this study.

This study will not randomly select schools or youth to participate. Thus, the findings are not intended to generalize to a broader population or to be more broadly representative. Still, our planned recruitment approach will attempt to maximize variability in the content and activities offered across participating sites. Through careful site selection, we will recruit schools that differ in their goals and in the needs of the populations they serve. As a result, we expect to observe substantial natural variation in the components of REA that participating sites offer. We will work with schools to identify the populations in greatest need of REA programming, and we expect to serve about 50 youth per site (two classrooms of about 25 youth).

We designed this study to detect a partial r² statistic of 1 to 2 percentage points. Goesling and Lee (2015) found relationships of similar magnitude in a study of the role risk behaviors play in predicting sexual behavior outcomes, suggesting that this target is appropriate for the REA components study. We estimated that with 40 schools and an enrolled sample of 1,600 youth, the study would be well powered to detect partial r² statistics of the target size (1 to 2 percentage points) as statistically significant (α = 0.05, two-tailed). Stated another way, the study is powered to detect as statistically significant the effects of individual components of approximately 0.20 standard deviations or larger.
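The sketch below shows one common way to approximate power for a partial r² target; it does not reproduce the study team's own calculations. It converts partial r² to Cohen's f² = r²/(1 − r²), uses a normal approximation to the 1-degree-of-freedom F test with noncentrality f² × N, and deflates N by a design effect for clustering of youth within sites. The intraclass correlation (ICC) values in the usage example and the function name are hypothetical, since this section does not report an ICC.

```python
from statistics import NormalDist


def approx_power_partial_r2(partial_r2, n_youth, alpha=0.05, icc=0.0, cluster_size=1):
    """Approximate two-tailed power to detect a partial r^2 for one predictor.

    Normal approximation to the 1-df noncentral F test with noncentrality
    lambda = f^2 * n_eff, where f^2 = r^2 / (1 - r^2). Clustering is handled
    crudely with a design effect: n_eff = n_youth / (1 + (cluster_size - 1) * icc).
    """
    f2 = partial_r2 / (1.0 - partial_r2)            # Cohen's f-squared
    design_effect = 1.0 + (cluster_size - 1) * icc
    n_eff = n_youth / design_effect                 # effective sample size
    shift = (f2 * n_eff) ** 0.5                     # sqrt of noncentrality
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1.0 - alpha / 2.0)  # about 1.96 for alpha = 0.05
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


# Usage: 1,600 youth in 40 sites (about 40 youth per site); ICC values are hypothetical.
for r2 in (0.01, 0.02):
    for icc in (0.0, 0.05):
        power = approx_power_partial_r2(r2, 1600, icc=icc, cluster_size=40)
        print(f"partial r2={r2:.2f}  ICC={icc:.2f}  power~{power:.2f}")
```

Under these assumptions, clustering can reduce power substantially, so the assumed ICC matters. For a component measured only at the site level, power would depend mainly on the number of sites (40) rather than the number of youth, and a cluster-level calculation would be more appropriate.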

Data Collection

Youth outcome surveys. At each site, all eligible youth in the classrooms offering REA will be considered for enrollment in the study. Each site will be asked to provide the study team with a list of eligible youth. Mathematica staff will work collaboratively with the site to recruit youth for the study and obtain active written consent from each youth’s parent or guardian. Mathematica staff will conduct an initial visit to the site to distribute consent forms and give youth a brief introduction to the study, asking them to return the signed consent form within a specific time frame. Mathematica will train staff to properly inform study participants, including how to explain the study, answer questions about it, and collect informed consent.

Additional site visits will be made as needed until a sufficient number of consent forms have been returned, whether parents and guardians grant or refuse permission for their child to participate in the study. Sites might also wish to mail consent forms home, either as a separate mailing or as part of a pre-planned mailing, such as those sent at the beginning of the school year. Mathematica staff will offer help assembling these mailings. Depending on site preferences, and if needed, we can reduce the burden on sites, parents, and children by offering a verbal consent process or an online consent form completed through a secure website. The verbal, online, and mailed consent options can also be used at sites that want to limit visits by outside staff.

Attachment C includes an example consent letter and form. Once consent collection has ended, Mathematica will prepare a final roster of youth at each site with parental consent.

The data collection plan for the outcome surveys (Instrument 1) is the same across all study sites and reflects attention to efficiency, accuracy, and respondent burden. The REA facilitator will administer the baseline survey to consented youth shortly before REA programming begins, and no later than the first day of programming. The REA facilitator will administer the immediate follow-up survey on the last day of programming. The study team will work with program facilitators to coordinate make-up surveys as needed. Mathematica field staff will administer the survey a third and final time about six months after the end of programming. The surveys will be web-based and smartphone-compatible, and trained program facilitators or Mathematica field staff will administer them in a group setting at each site. The study team will provide participants with smartphones and a unique URL to access the survey from the device. By the six-month follow-up, we expect a small percentage of participants will no longer be enrolled at the study school or attending the CBO. If we cannot reach these youth through the group administrations, we will conduct nonresponse follow-up by emailing them a link to the survey and following up with phone outreach to encourage completion.

Mathematica staff will carefully train REA program facilitators to administer the baseline and immediate follow-up surveys on site. Facilitators will begin by reviewing the details of the study and obtaining youth assent (Attachment D). Participants who opt out of the survey will sit in an area with youth who do not have permission to participate in the study. Youth who agree to take the survey will be given a unique URL to access the web survey application on the smartphone handed out by facilitators. Staff will be instructed to have youth sit with as much space between them as possible for privacy while completing the survey; for additional protection, the smartphones will be equipped with privacy screens. The surveys will be self-administered, and youth will be instructed to work at their own pace. Trained local Mathematica field staff will oversee the administration of the six-month follow-up surveys, and we will coordinate with sites to schedule a group administration at a convenient time. The administration process will otherwise be the same as for the baseline and immediate follow-up surveys.

The survey will ask all youth for background information. No personally identifying information will be pre-programmed into the survey. Attachment B lists sources for each survey question.

Once youth have completed the survey, they will close the web survey application and return the smartphone to the facilitators.

Youth focus groups. Focus groups will be conducted with a subset of program participants, either in person at the sites or virtually, depending on site preference. The objective of the focus groups will be to explore participants’ perspectives on the quality and value of the program they received. The focus groups will be used to learn about participants’ perceptions of the benefits of the program and their overall satisfaction with the program content, activities, and facilitation.

Each site will have no more than one focus group, and each focus group will last about one hour. Participants will be recruited randomly from youth enrolled in the study, and parental consent will be required for focus group participation. For in-person focus groups, the study team will work with site staff to arrange the groups at convenient times and locations and to recruit 8 to 10 youth for each group. For virtual focus groups, study staff will use contact information from the consent forms to recruit participants. Virtual focus groups will be conducted through a secure online platform. Two researchers from the study team will conduct each focus group (one as lead facilitator and one as notetaker). Before each focus group, the researchers will ask each participant for verbal assent (Attachment E) to participate in the focus group and for the session to be audio recorded. Youth who decline audio recording can still participate in the focus group, and all participants will be reminded that they do not need to answer any questions they do not want to answer. Groups will be audio recorded only if all participants in the group agree. We have used this approach successfully on other studies with audio-recorded focus groups, and most youth agree to the recording. The study team will use the recordings to ensure the accuracy of notes and to minimize burden on respondents by avoiding the need to ask them to repeat their answers.

Youth engagement exit ticket surveys. After each REA session, program facilitators will hand out the hard-copy exit ticket survey to all consented youth to measure their engagement with the session. Each exit ticket will include the participant’s first and last name and a unique study ID. Names are included (on a detachable cover sheet or removable label) to help program facilitators hand out the forms after each lesson; youth will remove their names before returning the forms. Program facilitators will collect completed exit tickets, verify that all names have been removed, and seal the tickets in an envelope marked with the school, class period, and teacher name. Completed tickets will be shipped to Mathematica’s Survey Operations Center using prepaid FedEx materials provided by Mathematica. Staff at the Survey Operations Center will check the forms for completeness, log their receipt, and enter the data.

Fidelity logs. Facilitators delivering REA will also maintain fidelity logs to record attendance and the components they completed for each session. This will enable the study team to better understand the degree to which the program was implemented with fidelity (and the degree to which fidelity might have differed by type of facilitator). The logs will be entered into a web-based platform. The fidelity log is a valuable tracking tool based on existing materials developed for the program. It is designed to minimize burden on staff by using checkboxes and limiting open-ended responses as feasible.

Facilitator interviews. All program facilitators will be invited to complete two semi-structured phone interviews: one before they begin implementing REA at site schools or CBOs, and one after programming ends for the semester or quarter at each site. Two researchers from the study team will conduct the interviews (one as lead interviewer and one as notetaker). Before each interview, the interviewers will ask for each respondent’s verbal consent to participate in the interview and for interviews to be recorded.

District or CBO leadership interviews. The study team will interview leaders at each district, CBO, or school recruited for the study. We anticipate leadership interviews might include as many as four people at each district or CBO, depending on who helped decide to implement REA and select which REA lessons would be covered at the site. District or CBO leaders will be invited to complete two semi-structured phone interviews individually: one before they begin implementing REA at site schools or CBOs, and one after programming ends for the semester or quarter at each site. Before each interview, the interviewers will ask for each respondent’s verbal consent to participate in the interview and for the interviews to be recorded.

Video and audio recording of youth. The study team will use multiple high-definition cameras and audio recorders to collect the recordings. To get the resolution required for machine coding, we will use SWIVL kits and standalone iPads. Each SWIVL kit has a tracker that the facilitator wears, and the camera moves to follow the facilitator around the room. We will also set up stationary iPads around the room to capture students from multiple angles.

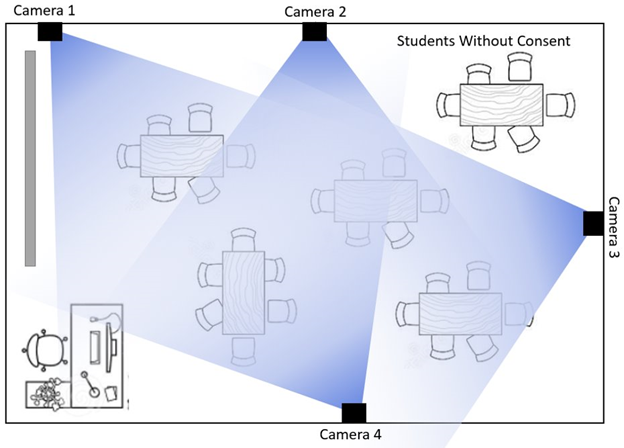

Youth who do not have consent to participate in the study will be asked to sit in a designated part of the room. The cameras will be placed so that non-consented youth are outside the frames of the stationary cameras (see Exhibit 1). The facilitator will interact with non-consented youth in a way that keeps them out of the camera angles. Audio devices will be placed either on group tables or around the room so as to collect audio from consented youth. We will use microphones designed to pick up the voices of people directly next to the microphone, to minimize the likelihood of capturing the voices of non-consented youth. We will do our best to ensure that non-consented youth are not included in the audio or video data, but we may unintentionally capture this information. No individual-level measures of youth will be analyzed; all measures will be aggregated to the classroom level.
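As a hedged illustration of the classroom-level aggregation described above, the sketch below averages a machine-coded measure by classroom and never carries a youth identifier forward. The record layout (a classroom ID paired with a numeric value) and the function name are hypothetical; the study's actual coding outputs are not specified here.

```python
from collections import defaultdict
from statistics import mean


def aggregate_to_classroom(records):
    """Average a machine-coded measure by classroom (hypothetical helper).

    `records` is an iterable of (classroom_id, value) pairs. No youth
    identifier is accepted or returned, so only classroom-level summaries
    leave this step.
    """
    values_by_class = defaultdict(list)
    for classroom_id, value in records:
        values_by_class[classroom_id].append(value)
    return {
        classroom_id: {"n": len(values), "mean": mean(values)}
        for classroom_id, values in values_by_class.items()
    }


# Usage with made-up values for two classrooms.
print(aggregate_to_classroom([("A", 2), ("A", 4), ("B", 3)]))
```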

Exhibit 1. Sample classroom camera layout

B3. Methods to Maximize Response Rates and Deal with Non-Response

Youth outcome survey data collection. OPA expects to achieve a response rate of 95 percent for the baseline and program exit surveys and 90 percent for the six-month follow-up survey. This expectation is based on response rates achieved in prior school-based surveys with similar populations, such as those in the MPC (OMB Control Number 0990-0452), PREP (OMB Control Number 0970-0398), and PPA (OMB Control Number 0990-0382) studies. We expect to achieve these completion rates in the components study of REA for several reasons: the timing of the baseline and follow-up surveys, the processes Mathematica will put in place with the sites, the fact that most youth will remain in the same school at the six-month follow-up, and plans for multiple modes of data collection to pick up cases that miss the first administration. The baseline survey will be administered on the first day of REA programming, shortly after we receive active parental consent. This timing will help minimize the likelihood that youth move out of the school or stop attending the CBO before programming starts. It will also ensure that surveys can be administered to most youth when and where the programming will take place. The program exit survey will be administered on the last day of programming, in the same quarter or semester as the baseline survey.
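Applying these expected response rates to the anticipated consented sample of 1,600 youth gives the approximate number of completed surveys per wave. This is a simple planning sketch with an illustrative function name; actual completes will depend on enrollment and attrition at each site.

```python
def expected_completes(consented=1600, rates=None):
    """Expected completed surveys per wave (hypothetical planning helper)."""
    if rates is None:
        # Expected response rates stated above.
        rates = {"baseline": 0.95, "program exit": 0.95, "six-month follow-up": 0.90}
    return {wave: round(consented * rate) for wave, rate in rates.items()}


print(expected_completes())
# {'baseline': 1520, 'program exit': 1520, 'six-month follow-up': 1440}
```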

To help achieve high response rates for the program exit surveys, Mathematica will coordinate with program facilitators at each site to arrange make-up sessions for youth who are absent on the initial day of survey administration. In addition, we expect that each site’s willing assistance will be very important to maximizing the response rate; we will therefore invest significant effort in gaining cooperation, minimizing the burden on sites, integrating an effective consent process, and ensuring the privacy of youth participants. Sites will be given detailed information about the surveys, the process and schedule for administration, staff involvement and time requirements, and data use and protection. Involving sites in the process while minimizing their burden should help secure their support for the survey data collection.

The six-month follow-up survey will follow a process nearly identical to that of the baseline and program exit surveys; however, by then youth are unlikely to still be grouped in the same classes. To help achieve high response rates, trained Mathematica field staff will work with each site to arrange a time for a group administration, as well as make-up sessions for youth who are absent on the initial day of the survey. In addition, participants who cannot complete the survey during on-site data collection will be sent advance letters, postcards, emails, and texts (with permission) that include instructions for logging in to the web survey and completing it online at their convenience. These participants will also have the option to complete the survey over the phone with a trained Mathematica interviewer.

Implementation and fidelity data collection. We expect that 80 percent or more of the selected facilitators and leaders will choose to participate in the interviews. For fidelity logs and youth engagement exit ticket surveys, we anticipate a nearly 100 percent response rate among program facilitators and among youth who attend the session. These expected response rates are based on experience with similar instruments in studies with similar populations. We will use the following strategies to maximize response rates:

Conduct site leadership interviews with heavily invested stakeholders. We aim to interview stakeholders who are heavily invested in implementing REA in their sites. We anticipate that respondents will be eager to engage in discussions.

Send advance and reminder emails to facilitators and site leaders for interviews. We will send advance emails to facilitators and site leaders requesting their participation, and a reminder email a few days before the scheduled interview.

Design exit tickets to minimize burden. The youth exit ticket is designed to take participants only about two minutes to complete. Youth will complete the ticket during the last part of each session, so it imposes no additional burden on their own time.

Design fidelity logs that minimize respondent burden. To minimize burden on program facilitators, the fidelity log is web-based and structured with checkboxes and limited open-ended responses. Program facilitators will be able to complete the log at their convenience, on a computer, tablet, or mobile device.

Collect video and audio recordings passively to impose no burden on participants. The study team will set up the equipment and collect the video and audio data; participants will simply take part in class as usual. As a result, we expect the response rate to be 100 percent of consented youth.

B4. Test of Procedures or Methods to be Undertaken

Youth outcome survey. Where possible, we drew items for the youth outcome surveys from established sources, such as prior evaluations of healthy relationship education and TPP programs and other federal surveys of adolescents (see Attachment B). However, the study team could not always find an established measure that fully captured some of the constructs proximal to specific REA lessons. In those cases, we developed measures aligned to specific curriculum content (listed in Attachment B as “Mathematica developed”).

Mathematica pretested the youth survey with nine youth to ensure that questions were understandable, used language and terms familiar to respondents, and were consistent with the concepts they aimed to measure; to identify typical instrumentation problems, such as unclear question wording and incomplete or inappropriate response categories; to measure response burden; and to confirm that there were no unforeseen difficulties in administering the instrument. We collected parental permission for youth to participate in the pretest. Youth completed a paper version of the survey and then took part in a virtual debriefing session with Mathematica staff. We revised the survey based on participants’ feedback. The final version of the survey will be programmed as a web-based survey, which Mathematica staff will thoroughly test before fielding.

Implementation and fidelity assessment. We based the topic guide for the focus groups (Instrument 2), the fidelity logs (Instrument 4), and the topic guides for interviews with program facilitators and site leadership (Instruments 5 and 6) on instruments we used successfully for MPC (OMB Control Number 0990-0452). We based the youth engagement exit ticket (Instrument 3) on an established scale (Frensley et al. 2020).¹ To ensure that the exit ticket is understandable and that there are no difficulties in administration, Mathematica included the exit ticket in the pretest of the youth outcome survey instrument. No changes were made based on the exit ticket pretest.

Video and audio recording of youth. As a first step in the pilot, we tested the equipment and assessed the feasibility of collecting enough high-quality data for machine coding to work. For this feasibility assessment, we created a mock classroom at the Mathematica offices, with Mathematica staff acting as a teacher and students, and used the proposed iPads and audio devices to record video and audio data. The group completed worksheets, watched a video, and completed other activities similar to REA activities. We are currently processing these video and audio data with Amazon Rekognition. Lessons learned from setting up the cameras and microphones, and from analyzing the data, will be applied to the full pilot.

B5. Individuals Consulted on Statistical Aspects and Individuals Collecting and/or Analyzing Data

OPA contracted with Mathematica to develop the data collection instruments. Mathematica, along with its subcontractor Decision Information Resources, will lead the data collection activities described in this ICR. Attachment F lists the individuals responsible for instrument design, data collection, and the statistical aspects of the data collection, and includes their affiliations, telephone numbers, and email addresses.

1 B. Troy Frensley, Marc J. Stern, and Robert B. Powell. “Does Student Enthusiasm Equal Learning? The Mismatch Between Observed and Self-Reported Student Engagement and Environmental Literacy Outcomes in a Residential Setting.” The Journal of Environmental Education, vol. 51, no. 6, 2020.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | Mathematica Report Template |

| Author | Sharon Clark |

| File Modified | 0000-00-00 |

| File Created | 2022-01-14 |
