SSA - Components Study final

Components Study of REAL Essentials

OMB: 0990-0480

Components Study of the REAL Essentials Curriculum

Part A: SS Justification for the Collection

August 2021

Office of Population Affairs

U.S. Department of Health and Human Services

1101 Wootton Parkway, Suite 200

Rockville, MD 20852

Point of Contact: Tara Rice, tara.rice@hhs.gov

Contents

A.1. Circumstances Making the Collection of Information Necessary

Legal or Administrative Requirements that Necessitate the Collection

A.2. Purpose and Use of the Information Collection

A.3. Use of Information Technology to Reduce Burden

A.4. Efforts to Identify Duplication and Use of Similar Information

A.5. Impact on Small Businesses

A.6. Consequences of Not Collecting the Information/Collecting Less Frequently

A.7. Special Circumstances

A.8. Federal Register Notice and Consultation Outside the Agency

A.9. Payments to Respondents

A.10. Assurance of Confidentiality

A.11. Justification for Sensitive Questions

A.12. Estimates of the Burden of Data Collection

Annual Burden for Youth Study Participants.

Annual Burden for Program Facilitators.

Annual Burden for District/CBO Leadership.

A.13. Estimates of Other Total Annual Cost Burden to Respondents and Record Keepers

A.14. Annualized Cost to Federal Government

A.15. Explanation for Program Changes or Adjustments

A.16. Plans for Tabulation and Publication and Project Time Schedule

2. Time Schedule and Publications

A.17. Reason(s) Display of OMB Expiration Date is Inappropriate

A.18. Exceptions to Certification for Paperwork Reduction Act Submissions

A.2.1 Data Collection Activities

A.2.2 Constructs for the Implementation and Outcome Study and Data Sources

A.8.1 Experts Consulted During Instrument Development

A.8.2 Experts Consulted on the Youth Engagement Pilot Study Design

A.12 Calculations of Annual Burden Hours

A.13 Calculations of Annual Cost Burden

A.14 Annualized Cost to Federal Government by Cost Category

Exhibits

Attachments

ATTACHMENT A: Section 301 of the Public Health Service Act

ATTACHMENT B: YOUTH OUTCOME SURVEY ITEM SOURCE LIST

ATTACHMENT C: STUDY PARENTAL CONSENT FORM

ATTACHMENT D: YOUTH OUTCOME SURVEY ASSENT FORM

ATTACHMENT E: YOUTH FOCUS GROUP ASSENT FORM

ATTACHMENT F: PERSONS CONSULTED

ATTACHMENT G: 60 Day Federal Register Notice

Instruments

INSTRUMENT 1: YOUTH OUTCOME SURVEY

INSTRUMENT 2: YOUTH FOCUS GROUP TOPIC GUIDE

INSTRUMENT 3: YOUTH ENGAGEMENT EXIT TICKET

INSTRUMENT 4: FIDELITY LOG

INSTRUMENT 5: FACILITATOR INTERVIEW TOPIC GUIDE

INSTRUMENT 6: DISTRICT/CBO LEADERSHIP INTERVIEW TOPIC GUIDE

Part A: Introduction

The Components Study of the REAL Essentials Advance (REA) curriculum is a descriptive implementation and outcome study conducted by the Office of Population Affairs (OPA). REA is a relationship education curriculum for high-school–aged youth that covers topics such as healthy relationships, effective communication, commitment, planning for the future, job readiness, and sexual health. The study will examine program components (for example, content and dosage), implementation components (for example, attendance and engagement), and contextual components (for example, participant characteristics) to determine which ones have the most influence on participant outcomes (for example, knowledge and attitudes about effective communication, relationship skills, and risky sexual behaviors). In addition, the study will use an embedded pilot to measure youth engagement in programming from various perspectives and examine the role of engagement as a mediating factor in achieving youth outcomes.

With this new Information Collection Request (ICR), OPA seeks Office of Management and Budget (OMB) approval for data collection activities for the Components Study of REA over three years. These activities include administering a youth outcome survey, holding focus groups with youth, and giving youth engagement exit ticket surveys after they receive REA in study sites;1 collecting fidelity logs from and interviewing REA program facilitators; and interviewing leaders at the district, community organization, or school level.

A.1. Circumstances Making the Collection of Information Necessary

Legal or Administrative Requirements that Necessitate the Collection

The Office of Population Affairs (OPA) supports programming for youth through a variety of funding streams. For the Teen Pregnancy Prevention (TPP) Program and Pregnancy Assistance Fund (PAF) in particular, most of the evidence about the effectiveness of programs in these funding streams has come from rigorous evaluations of curricula or program models. Although there is a growing body of evidence about program effectiveness, much less is known about which elements of those programs drive improved youth outcomes. For example, is the program content alone effective, or is a dynamic facilitator or certain type of school environment a critical component for changing youth outcomes? Or is a particular combination of all three components necessary?

Rather than continue to fund wide-scale rigorous evaluations of grant-funded programs, the Office of the Assistant Secretary for Health (OASH) and OPA are taking an opportunity to better understand which components of programs drive youth outcomes. This understanding will enable program developers to create more effective programs and will enable schools and organizations to use time and resources effectively to focus on the components that matter most. We see similar attempts to focus on the effectiveness of core components broadly across many federal agencies, including in TPP and sexual risk avoidance (SRA) programming, as well as in adolescent opioid use disorder programming and national efforts to improve after-school programs (Blase and Fixsen 2013; Ferber et al. 2020; NASEM 2019).

To begin this work, OASH funded a National Academy of Sciences (NAS) panel to identify core components of programs designed to promote the optimal health2 of adolescents (NAS 2020). In the Promoting Positive Adolescent Health Behaviors and Outcomes report, the NAS panel members identified a handful of promising components (including socio-emotional learning and positive youth development approaches to skill building) associated with favorable youth outcomes across different domains of health; however, their main conclusion was that more research needs to be done. The REA Components Study will further OASH’s and OPA’s optimal health framework and learning agenda.

This study, designed to provide a deeper understanding of the program components for adolescent health programs, such as TPP programs, is authorized under Section 301 of the Public Health Service Act (42 U.S.C. 241); see Attachment A.

A.2. Purpose and Use of the Information Collection

This ICR describes the data collection activities for the Components Study of REA. The study will help OPA and the field understand the program, implementation, and contextual components that matter the most for promoting positive health behaviors and outcomes among adolescents.

Study Objectives

The REA curriculum is a popular program among federal teen pregnancy prevention and sexual risk avoidance education (SRAE) grantees. The goal of the Components Study is to use the flexible nature of the curriculum to learn which core components are most effective at moving the needle on youth outcomes.

The REA curriculum includes 86 lessons organized into 10 units. The units cover topics such as healthy relationships, effective communication, commitment, planning for the future, job readiness, and sexual health. Importantly, the REA curriculum is designed to be flexible, and typical implementation only includes a subset of the 86 lessons. A key feature of REA is the development of a “scope and sequence,” a site-specific collection and sequence of lessons that will meet the needs of a given target population. To create this scope and sequence, providers select a subset of the 86 available lessons to meet the needs of their target populations and their local context, and to fit lessons into the available time for programming. As a result, the intended program content and dosage found in each scope and sequence can vary substantially across implementing sites.

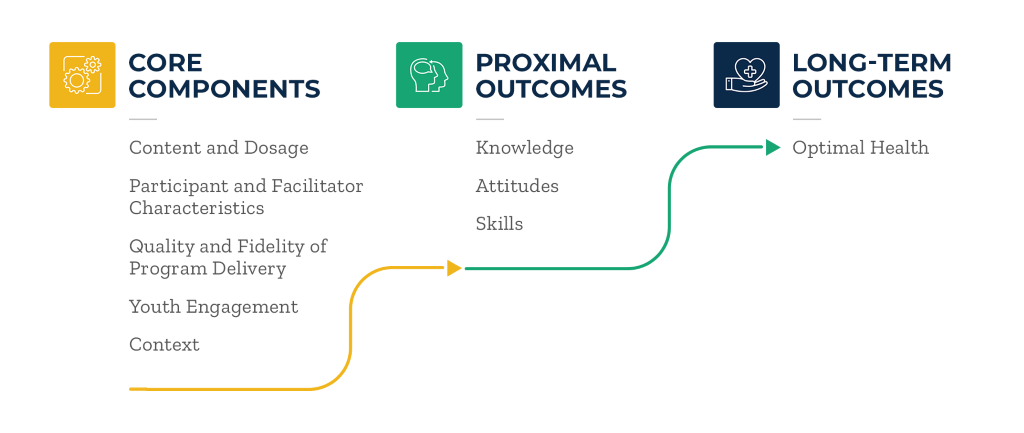

Given the unique scope and sequence developed for each participating site, different lessons and different levels of total dosage will be offered, and facilitators will have different levels of experience with the curriculum and different backgrounds and facilitation quality. The variation in program delivery, coupled with different participant backgrounds, will create variation in REA experiences for participating youth. The study will capitalize on this naturally occurring variation to examine which program components are most predictive of variation in participant outcomes, such as knowledge and skills relevant to healthy relationships. Additionally, the study will examine which lessons or combinations of lessons are particularly important in driving improvement. The study will assess the relationships between differences in outcomes and core components, such as youth characteristics, facilitator experience with REA, facilitator quality, fidelity, and youth engagement. The study will also look at whether these effects persist over time and whether the proximal outcomes of REA are predictors of future sexual behavior outcomes and other measures of optimal health (Exhibit 1).

Exhibit 1. A study to examine the core components of REA

There will also be a pilot study of the youth engagement component. Youth engagement is a complex construct that includes distinct yet interrelated dimensions such as behavioral, emotional, and cognitive engagement. The pilot study is designed to develop new and innovative measures of engagement. It will use facial recognition software to determine youth engagement based on facial features and audio-recognition software to determine who is speaking (youth versus facilitators).

A key contribution of the pilot study will be to assess the feasibility of using facial recognition software and machine coding of video and audio data to construct measures of youth engagement in REA lessons. Supplementing traditional measurement of youth engagement with video/audio observations of a high-school based relationship education program, along with computer coding of the data, offers an opportunity to address limitations of traditional methods.

Potential for increased reliability through an additional, consistent lens into engagement: The primary goal of the pilot is to assess the feasibility of using machines to detect levels of student engagement from audio and video data. Unlike self-report data, machines are consistent in their coding and have the potential to be unbiased. A consistent and unbiased measure of youth engagement would be a significant improvement over current measures of engagement. This new measure would also remove the burden on teachers and students to report data.

Increased frequency/reporting of engagement measurement: With video and audio recordings of the youth participating in the classroom sessions, there will be an opportunity to have a computer continuously code the extent to which youth engagement varies within and across classes. Having a quantitative measure of engagement collected continuously during every classroom session will address the major limitation of the standard “snapshot” assessments of engagement, which reflect an observer’s or facilitator’s assessment of an entire classroom of students and all of the activities that took place. This measurement will let us learn which types of content, teaching styles, and activities engage youth most.

This pilot study will identify distinct dimensions of engagement across data sources and combine measures to produce reliable overall assessments of engagement. The pilot study will also produce data that show how program components influence the dimensions of engagement, how these dimensions of engagement influence outcomes, and which measures of youth engagement are most predictive of youth outcomes.

The full study will answer the following research questions:

1. What are the components of REA that are offered and received by youth across schools?

2. Which components are most predictive of proximal and distal optimal health outcomes?

3. Which components are associated with youth engagement?

4. To what extent does youth engagement predict outcomes?

The pilot study will also help us answer several measurement research questions:

5. How correlated are measures of engagement based on different perspectives?

6. To what extent do measures individually (or collectively) measure behavioral, emotional, and cognitive engagement? How might measures be combined?

7. Is it feasible to measure engagement using machine-coded video and audio data, and how can we address challenges to this approach?

Study Design

The study will be conducted in about eight school districts or community-based organizations (CBOs) comprising up to 40 individual sites. Sites are defined as the specific schools or CBOs where REA will be delivered. Data collection activities for the study will begin in spring 2022, with additional schools brought into the study in fall 2022. Because of the uncertainty around initial recruitment efforts due to the COVID-19 pandemic, and the desire to enroll schools that are implementing the program in person rather than virtually, it may be necessary to enroll additional sites in the study through spring 2023. Given the timing of the final youth outcome survey (six months after programming), we are requesting a three-year clearance. We expect most youth in the study to be in 9th or 10th grade. The study includes the collection of youth outcome data and program implementation and fidelity data.

Table A.2.1. Data collection activities

| Data Collection Activity | Instrument(s) | Respondent, Content, Purpose of Collection | Mode and Duration |
| --- | --- | --- | --- |
| Youth outcome survey | Youth outcome survey baseline; youth outcome immediate post-survey; youth outcome six-month follow-up | Respondents: Youth. Content: Survey measures that are well aligned with the REA curriculum. Purpose: Measures proximal and distal outcomes related to the REA curriculum. | Mode: Web-based, administered in group setting by trained facilitators and trained field staff. Phone option available for six-month follow-up. Duration: 40 minutes |
| Implementation and fidelity data | Youth focus group topic guide | Respondents: Youth. Content: Discussion questions about curriculum satisfaction. Purpose: Assesses satisfaction with curriculum and implementation quality. | Mode: In-person or virtual focus groups. Duration: 1 hour |
| Implementation and fidelity data | Youth engagement exit ticket | Respondents: Youth. Content: Measure on engagement with each REA session. Purpose: Assesses youth engagement. | Mode: Paper and pencil. Duration: 2 minutes per ticket (12 tickets per youth) |
| Implementation and fidelity data | Fidelity log | Respondents: Facilitators. Content: Questions about attendance, youth engagement, and content coverage. Purpose: Records dosage and the types of content covered. | Mode: Web-based. Duration: 10 minutes per log (24 logs) |
| Implementation and fidelity data | Facilitator interview topic guide | Respondents: Facilitators. Content: Discussion questions about facilitators’ experience with training and program delivery, site context, challenges and strengths of implementation, and youth engagement with the program. Purpose: Assesses implementation of curriculum. | Mode: Phone. Duration: 1 hour |
| Implementation and fidelity data | District/CBO leadership interview topic guide | Respondents: District or community-based organization leadership. Content: Discussion questions about site context, the selected scope and sequence and its fit for the site, plans for implementation, and key challenges and strengths related to program delivery. Purpose: Assesses implementation of curriculum. | Mode: Phone or in person. Duration: 45 minutes |
| Pilot engagement study | Video and audio recording of youth | Respondents: Youth. Content: Recordings of REA sessions; youth will be asked to participate in the classroom as normal. Purpose: Assess the feasibility of using facial recognition software and machine coding of video and audio data to construct measures of youth engagement in REA lessons. | Mode: In person. Duration: Multiple REA lessons |
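As a worked check on the repeated instruments in the data collection activities table, the short Python sketch below multiplies the per-response duration by the number of responses for the exit tickets and fidelity logs. It is illustrative only. The variable names are hypothetical, and it assumes the 24 fidelity logs are completed by a single facilitator, which the table does not state explicitly.

```python
# Durations and counts are taken from the data collection activities table.
# Assumption (not stated in the table): the 24 logs are per facilitator.
EXIT_TICKET_MINUTES = 2
EXIT_TICKETS_PER_YOUTH = 12
FIDELITY_LOG_MINUTES = 10
FIDELITY_LOGS_PER_FACILITATOR = 24


def total_minutes(minutes_per_response: int, responses: int) -> int:
    """Total time for one respondent across all responses to one instrument."""
    return minutes_per_response * responses


exit_ticket_minutes_per_youth = total_minutes(
    EXIT_TICKET_MINUTES, EXIT_TICKETS_PER_YOUTH
)
fidelity_log_minutes_per_facilitator = total_minutes(
    FIDELITY_LOG_MINUTES, FIDELITY_LOGS_PER_FACILITATOR
)
fidelity_log_hours_per_facilitator = fidelity_log_minutes_per_facilitator / 60

print(exit_ticket_minutes_per_youth)       # 24 minutes per youth
print(fidelity_log_hours_per_facilitator)  # 4.0 hours per facilitator
```

Under these assumptions, the exit tickets total 24 minutes per youth and the fidelity logs total 4 hours per facilitator over the course of programming.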

Youth outcome survey (Instrument 1). Youth outcome data will be collected from study participants through a web-based survey administered at three points: baseline (pre-programming), program exit (immediate follow-up), and six months after programming. The baseline and immediate follow-up surveys will be administered by trained program facilitators in a group setting at each site. Trained Mathematica field staff will administer the six-month follow-up surveys to youth at sites. We will follow up by phone and email with nonrespondents who are no longer attending the study school or receiving services at the CBO at the time of the six-month follow-up survey. See B.2 for details on the data collection plan.

The immediate follow-up survey at program exit will enable the researchers to explore how variation in components influences outcomes proximal to the REA content; key outcomes for the immediate follow-up will focus on antecedents to sexual behavior. The six-month follow-up survey will enable the study to explore how program components influence more distal behavioral and optimal health outcomes.

The youth outcome surveys include measures for outcome constructs that are well aligned with the specific lessons in the REA curriculum and can be grouped more broadly into the physical, social and emotional optimal health domains discussed in the NAS report. Where possible, measures were pulled from established surveys used in TPP and healthy relationship program evaluations or other federal surveys. If the study team could not find a measure for a construct, an item was developed based on the content of the proximal lesson. See Attachment B for a question-by-question source list.

Implementation and fidelity data. The implementation and fidelity assessment aspect of the study will collect the necessary data on the components of REA that were offered and received by youth. Data will be obtained from the following sources:

In-person or virtual focus groups3 with participating youth to understand youth experience and satisfaction with the program, and assess implementation quality and engagement (Instrument 2)

Paper-and-pencil exit ticket surveys with youth after each classroom session of REA to assess engagement (Instrument 3)

A protocol for program facilitators to electronically record attendance, youth engagement, and content coverage in a fidelity log after each classroom session of REA (Instrument 4)

Individual phone interviews with program facilitators to document their experience with training and program delivery, site context, challenges and strengths of implementation, and youth engagement with the program; interviews will be conducted at two points, before and after programming (Instrument 5)

Individual phone or in-person interviews conducted with district or community-based organization leadership to document site context, the selected scope and sequence and its fit for the site, plans for implementation, and key challenges and strengths related to program delivery; interviews will be conducted with each individual at two points, before and after programming (Instrument 6)

The topic guide for the focus groups (Instrument 2), the fidelity logs (Instrument 4), and the topic guides for interviews with program facilitators and site/district leaders (Instruments 5 & 6) are based on the instruments used successfully on the Federal Evaluation of Making Proud Choices! (OMB # 0990-0452). The youth engagement exit ticket (Instrument 3) is based on an established scale (Troy et al. 2020).

The data collected through the various instruments will help the field develop insight into several components of REA so variation in components offered and received can be linked to variation in outcomes. Table A.2.2 summarizes the core component and outcome constructs addressed, and the corresponding data source(s).

Table A.2.2. Constructs for the implementation and outcome study and data sources

| Constructs | Description/examples | Data sources |
| --- | --- | --- |
| Core components of REA implementation | | |
| Dosage | Number of sessions offered/received through a site-specific scope and sequence | Instruments 4, 5, 6 |
| Content | The set of lessons offered/received as part of the site-specific scope and sequence | Instruments 4, 5, 6 |
| Participant characteristics | Risk and protective factors; motivation | Instruments 1, 5, 6 |
| Facilitator characteristics | Facilitator background and supplemental training, attitudes, and beliefs | Instruments 5, 6 |
| Context | Implementation environment (e.g., size of classroom, number of youth); school support of programming (e.g., strong communication with facilitator or lack of scheduling issues); school and community characteristics (e.g., economic opportunity or disciplinary issues) | Instruments 1, 5, 6 |
| Quality | Quality of program delivery | Instruments 1, 2, 5, 6 |
| Fidelity | Adherence to scope and sequence; adaptations | Instruments 1, 5, 6 |
| Engagement | Youth engagement in programming | Instruments 1, 2, 3, 4, 5, 6 |
| Outcomes | | |
| Proximal outcomes | Knowledge and attitudes about effective communication; relationship skills; expectations for romantic relationships | Instrument 1 |
| Long-term outcomes | Indicators of optimal health, drug and alcohol use, and sexual behaviors | Instrument 1 |

Pilot study of youth engagement. As noted above, the broader study will include a pilot study of youth engagement. We expect a small subset of participating sites (one or two schools or CBOs) to participate in this pilot study. By collecting video and audio data in these sites, we hope to examine classroom-level engagement and assess engagement for discrete program activities and content. The study team will use multiple high-definition cameras and audio recorders placed strategically around the room to passively record the REA lessons. We expect to use the video/audio data when answering research questions 3 and 4, as well as measurement research questions 5-7. The video/audio data will supplement data on youth engagement from other sources that will be collected as part of the main study.

We will use Amazon Web Services (AWS) Rekognition software for facial recognition processing. The facial recognition software will allow us to continuously (roughly 30 times per second) assess facial characteristics, such as whether youths’ eyes are open and which emotions appear to be expressed on their faces. At each time point, AWS Rekognition provides continuous measurements (attributes, emotions, quality, and the pose for each face it detects) for all students in the recordings, along with a confidence score. Although the AWS Rekognition output will be at the individual level, we expect to aggregate these data to the classroom level for analysis. For example, the facial data (for example, the confidence score for a given face showing an emotion associated with engagement) will be averaged across the entire group of youth in the class within the period of time associated with an REA activity to provide insight into engagement for that activity. In addition, we are exploring with AWS whether it is possible to code additional student behaviors, such as raising hands, slouching, or looking at a phone, or additional student emotions that might provide valuable insights into aspects of engagement.
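The classroom-level aggregation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study team's analysis code. The function name, the record layout (a timestamp paired with one confidence score per detected face), the activity windows, and all numeric values are hypothetical, and the sketch does not call the Rekognition service itself.

```python
from collections import defaultdict
from statistics import mean


def classroom_engagement(face_scores, activities):
    """Average per-face confidence scores within each activity window.

    face_scores: iterable of (timestamp_seconds, confidence) pairs, one pair
        per detected face per analyzed frame (hypothetical layout).
    activities: iterable of (name, start_seconds, end_seconds) windows;
        start is inclusive and end is exclusive.
    Returns {activity_name: mean confidence}; activities with no detections
    are omitted, and scores outside every window are ignored.
    """
    by_activity = defaultdict(list)
    for timestamp, confidence in face_scores:
        for name, start, end in activities:
            if start <= timestamp < end:
                by_activity[name].append(confidence)
                break
    return {name: mean(scores) for name, scores in by_activity.items()}


# Hypothetical values for illustration only.
activities = [("icebreaker", 0.0, 10.0), ("role play", 10.0, 20.0)]
face_scores = [(1.0, 80.0), (1.0, 60.0), (12.0, 90.0), (15.0, 70.0), (25.0, 50.0)]

result = classroom_engagement(face_scores, activities)
print(result)  # {'icebreaker': 70.0, 'role play': 80.0}
```

Because the function pools scores across all faces before averaging, no individual-level value appears in the result, which matches the classroom-level unit of analysis described in the text.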

We will follow federal standards for the use, protection, processing, and storage of data. The systems architecture will safeguard PII and other confidential project information in a manner consistent with National Institute of Standards and Technology (NIST) standards. Data are encrypted in transit and at rest using Federal Information Processing Standard 140-2 compliant cryptographic modules and are securely disposed of according to our contractual and data use agreement obligations. Our corporate security team develops, maintains, and regularly updates Mathematica’s security policies, procedures, and technical safeguards, which are consistent with the Privacy Act, the Federal Information Security Management Act, Office of Management and Budget memoranda regarding data security and privacy, and National Institute of Standards and Technology security standards.

As with all individually identifiable and other sensitive project information, the video and audio data will only be available to project staff on a need-to-know and least privilege basis. For this project we expect a core group of data collection team members and technical programming staff to have access to the video and audio data. Data collection team members will coordinate the transfer of videos and audio files from the recording devices to Mathematica’s secure internal network. Technical programming staff will analyze the video and audio data.

Recordings will be stored in two places: first, on Mathematica’s secure internal network; second, on Amazon’s secure cloud. The secure cloud and the Rekognition software will both be within a Federal Risk and Authorization Management Program (FedRAMP)-certified Amazon Web Services (AWS) cloud architected to protect data, identities, and applications. The security policies, procedures, and technical safeguards align with federal standards, including the Privacy Act, the Federal Information Security Management and Modernization Acts (FISMA, FISMA 2014), Office of Management and Budget (OMB) memoranda regarding data security and privacy, and National Institute of Standards and Technology (NIST) security standards and guidance. Data will be deleted from Amazon servers when the video analysis is complete, and data will be deleted from Mathematica’s secure internal network when the project is completed.

The information collected is meant to contribute to the body of knowledge on OPA programs. It is not intended to be used as the principal basis for a decision by a federal decision maker, and is not expected to meet the threshold of influential or highly influential scientific information.

A.3. Use of Information Technology to Reduce Burden

OPA is using technology to collect and process data to reduce respondent burden and make data processing and reporting faster and more efficient.

The contractor will program and administer the youth outcome surveys (Instrument 1) with Confirmit, a state-of-the-art survey software platform that the contractor uses to build and launch multimode surveys. The surveys will be web-based and administered to youth at the sites in a group setting. Trained program facilitators or Mathematica field staff will give participants smartphones along with a unique URL to access the survey from the device. The Confirmit software has built-in mobile formatting to ensure that the display adjusts for device screen size. If needed, respondents can pause and restart the survey, with their responses saved. Confirmit includes tailored skip patterns and text fills. These features allow respondents to move through the questions more easily and automatically skip questions that do not apply to them, thus minimizing respondent burden. Confirmit offers several advantages for respondents completing the six-month follow-up survey outside of school, including enabling web respondents to participate on their own time and use their preferred electronic device (smartphone, tablet, laptop, or desktop computer).

Program facilitator fidelity logs (Instrument 4) will be collected through an electronic data collection system that allows for consistent data entry and easy export for analysis. Unlike with paper logs, facilitators will not need to mail any materials back to the study team for data entry.

The pilot study will assess whether machine learning techniques can be used to measure student engagement. If successful, future projects could use similar techniques in place of surveys and exit tickets, which would greatly reduce burden for teachers, youth, and other study participants, and potentially decrease overall project costs.

A.4. Efforts to Identify Duplication and Use of Similar Information

The information collection requirements for the Components Study of REAL Essentials Advance have been carefully reviewed to avoid duplication with existing and ongoing studies of TPP program effectiveness. There is a broad focus on the effectiveness of core components across many federal agencies, including in TPP and sexual risk avoidance programming, adolescent opioid use disorder programming, and national efforts to improve after-school programs (Blase and Fixsen 2013; Ferber et al. 2020; NASEM 2019). However, as noted by the National Academy of Sciences (NAS 2020) panel, more research needs to be conducted. OPA has contracted with Mathematica to conduct the first large-scale descriptive study of program components in the TPP field. Unlike other studies that look at the impact of full programs or specific pieces of a program, this study will look at the relative influence of all the components of a program (REA) and its delivery simultaneously to determine which ones are most important for moving youth outcomes. Similarly, OPA has acknowledged that for youth to fully reap the benefits of a program, it is not enough for them to be offered the program or to attend it; they need to become engaged with the content and activities of the program (Larson 2000; Vendell et al. 2005). The pilot study will examine the role that engagement plays in the relationship between program components and youth outcomes.

A.5. Impact on Small Businesses

Programs in some sites may be operated by nonprofit community-based organizations. To reduce the burden on program leaders, Mathematica will schedule data collection activities at times that are convenient for them. The study also has resources to compensate CBOs for the time facilitators need to provide the program and collect data for the study.

A.6. Consequences of Not Collecting the Information/Collecting Less Frequently

Youth survey data (Instrument 1) will be collected at three points. Baseline and immediate follow-up data are needed to assess changes in youth outcomes that are most proximal to REA. Data from the six-month follow-up survey allow us to see whether these effects persist over time and whether the proximal outcomes of REA are predictors of future sexual behavior outcomes and other measures of optimal health.

Implementation and fidelity data (Instruments 2–6). Implementation and fidelity data are essential for understanding which core components of the REA curriculum are offered and received by youth, and the quality and fidelity with which they are delivered. The data will help the study team determine which components are the most effective at moving the needle on youth outcomes. Data collected early in program implementation are crucial for documenting content and dosage components such as the intended scope and sequence of program lessons in each site, the context for each site (for example, needs and challenges, demographic makeup, and class sizes), as well as training and preparation for program delivery. Data collected later in program implementation are essential for learning about actual service delivery and unplanned adaptations, quality of program delivery, fidelity to the intended scope and sequence of curriculum delivery (allowing documentation of any variations), participant engagement, and changes in program context during the study period. Collecting youth engagement data after each session is critical to understanding how youth engagement might vary depending on the content, format (for example, activities), facilitator, or context. Without implementation data at multiple time points, we lose the opportunity to document any variations in key components, such as planned versus actual program delivery, quality, and engagement.

Audio and video recordings. As noted above, machine coding of audio and video data has the potential to be a more reliable measure of student engagement than more traditional measures. At the very least, the audio and video recordings will allow youth engagement to be measured for each activity rather than at the end of the entire class period. OPA is very interested in potentially more reliable measures of student engagement and how engagement varies by REA activity.

A.7. Special Circumstances

There are no special circumstances for the proposed data collection efforts.

A.8. Federal Register Notice and Consultation Outside the Agency

A 60-day Federal Register Notice was published in the Federal Register on April 9, 2021, vol. 86, No. 67; pp. 18544-18545 (see Attachment G). There were no public comments.

To develop the data collection instruments, OPA consulted with national subject matter experts in adolescent health, healthy relationship education, and instrument development. Tables A.8.1 and A.8.2 list the experts who were consulted and give their affiliations.

Table A.8.1. Experts consulted during instrument development

Name | Affiliation
Alan Hawkins | Brigham Young University
Dean Fixsen | Active Implementation Research Network
Nicole Kahn | University of North Carolina
Randall Juras | Abt Associates
Allison Dymnicki | American Institutes for Research
Galena Rhoades | University of Denver

Table A.8.2. Experts consulted on the design of the youth engagement pilot study

Name | Affiliation
Gregg Johnson | More than Conquerors (MTCI)
Amy L. Reschly | University of Georgia
Catherine McClellan | Clowder Consulting
Sidney D’Mello | University of Colorado
Sean Kelly | University of Pittsburgh
Patti Fitzgerald | Women’s Care Center of Erie County
Carla Smith | Women’s Care Center of Erie County

A.9. Payments to Respondents

We propose gifts of appreciation to youth for participating in the study activities to help ensure that the study sample will represent most of the youth in the study sites. The team proposes offering either a $5 gift card or a gift bag worth $5 to those youth whose parents or guardians return a signed study consent form, regardless of whether the form gives or refuses consent. A body of literature has revealed that a lack of incentive can result in a less representative sample, and, in particular, that incentives can help overcome a lack of motivation to participate (Shettle and Mooney 1999; Groves, Singer, and Corning 2000). We propose a $5 gift to encourage return and help us achieve an 80 percent consent rate.

In addition to the consent gift, the study team proposes offering a $10 gift card to participants who complete the post-program youth outcome survey. Our surveys include questions on sensitive topics, and thus impose some burden on respondents. Research has shown that such payments are effective at increasing response rates in general populations (Berlin et al. 1992) and with youth (Pejtersen 2020). Research also suggests that providing an incentive for earlier surveys may contribute to higher response rates in later ones (Singer et al. 1998). The modest gift of appreciation at the post-programming survey could reduce attrition for six-month follow-up data collection.

The study team proposes offering a $15 gift card to participants who complete the six-month follow-up survey at the site, and $20 to participants who complete it on their own time outside the site. Based on our experiences with similar studies with youth in schools, for example, MPC (OMB # 0990-0452), follow-up survey administrations are often in a group setting and need to be done during a lunch period or a similar free period. Offering a gift card may encourage youth who are less motivated to attend the survey administration during a less structured time to complete the survey. The team proposes a slightly larger amount for youth completing the survey outside the site to convey its appreciation for the additional effort that completing the survey during the respondent’s personal time, rather than at the school or CBO, might require. The amounts proposed here for the six-month follow-up are identical to those used successfully in the STREAMS Evaluation (OMB # 0970-0481), sponsored by the Administration for Children and Families. The REA study team expects these gifts of appreciation to help ensure high response rates for REA follow-up surveys.

The team proposes offering a $25 gift card to focus group participants to encourage a representative sample of youth to attend the focus groups, so that we gather data on varying experiences with the REA curriculum and facilitators. The offer of the gift card may encourage a more diverse group of youth to participate, rather than only those who are the most engaged or motivated.

A.10. Assurance of Confidentiality

Before collecting study data from youth participants, Mathematica will seek active consent from a parent or legal guardian (Attachment C). The consent form will explain the purpose of the study, the data being collected, and their use. The form will also state that answers will be kept private to the extent allowed by law and will not be seen by anyone outside the study team; that participation is voluntary; and that youth may refuse to participate at any time without penalty. Participants and their parents or guardians will be told that, to the extent allowable by law, individually identifying information will not be released or published; instead, data will be published in summary form only, with no identifying information at the individual level. The consent form will cover all data collection activities from youth. Parents will be given the opportunity to have their children opt out of the youth focus groups while agreeing to complete the surveys and exit tickets. The study consent form for youth in schools and CBOs participating in the pilot study will have additional information describing the video/audio recording data collection and the ability to opt out of this component.

On the day of the survey administration, program facilitators will distribute an assent form to participants, giving them a chance to opt out of the survey data collection without penalty (Attachment D). Similarly, if they are selected to participate in a focus group, youth will be given the chance to opt out of the focus group without penalty.

Institutional review board (IRB) review of the data collection protocol, instruments, consent, and assent forms by Health Media Labs will be initiated upon OMB approval of the study. Due to the private and sensitive nature of some information that will be collected as part of the survey (Section A.11), the study will obtain a Certificate of Confidentiality. The Certificate of Confidentiality helps assure participants that their information will be kept private to the fullest extent permitted by law.

Youth outcome survey. In addition to the study consent process, our protocol during the administration of the youth outcome survey will reassure youth that we take the issue of privacy seriously. It will be made clear to respondents that identifying information will be kept separate from questionnaires. To access the web survey, each questionnaire will require a unique URL; this will ensure that no identifying information will appear on the questionnaire and prevent unauthorized users from accessing the web application. Any personally identifiable information will be stored in secure files, separate from survey and other individual-level data. Program facilitators or Mathematica field staff will collect the tablets or smartphones used for survey administration at the end of the survey, and will be trained to keep the devices in a secure location at all times.

Mathematica has established security plans for handling data during all phases of the data collection. The plans include a secure server infrastructure for online data collection of the web-based survey, which features HTTPS encrypted data communication, user authentication, firewalls, and multiple layers of servers to minimize vulnerability to security breaches. Hosting the survey on an HTTPS site ensures that data are transmitted using 128-bit encryption; transmissions intercepted by unauthorized users cannot be read as plain text. This security measure is in addition to standard user PIN and password authentication that precludes unauthorized users from accessing the web application. Any personally identifiable information used to contact respondents will be stored in secure files, separate from survey and other individual-level data.

Youth exit ticket. The youth exit ticket will be a self-administered hard-copy form, distributed to youth by facilitators at the end of each REA session. The exit ticket will include the participant’s first and last name and unique study ID. The participant’s name will be included initially (on either a cover sheet or a removable label) to help program facilitators distribute forms. Program facilitators will instruct youth to tear off or remove their name, leaving only the unique study ID, before turning in the completed form. Completed, de-identified exit tickets will be stored in a sealed envelope marked with the school, class period, and facilitator name. Facilitators will be provided paid FedEx materials to ship the completed, de-identified forms to Mathematica’s Survey Operations Center for data entry.

Youth focus group. Youth selected for participation in the focus groups will also be given an assent form to sign and will have an opportunity to refuse participation at the time of the focus group, if they choose to do so. Copies of these forms are in Attachment E. Focus group assent forms state that answers will be kept private and will not be attributed to any participant by name. The forms also state that youths’ participation is voluntary, that they may opt out of audio recordings and still participate in the focus group, and that identifying information about them will not be released or published. Groups will only be recorded if all participants in the group agree to being recorded. The focus group consent forms also include language explaining the unique confidentiality risks associated with participating in a group interview. We will wait to begin recording the discussion until after everyone has introduced themselves. The transcribed notes will not include any names. All notes and audio recordings will be stored on Mathematica’s secure network. No one outside the study team will have access to the data. Mathematica staff are prompted to enter at least two passwords to access the network. Only Mathematica staff working directly on the REA project have access to the project folder on the network where recordings will be saved. All audio recordings will be destroyed as soon as they have been transcribed and notes will be destroyed per contract requirements.

Implementation and fidelity assessment. Program facilitators and site/district leadership staff participating in interviews will receive information about privacy protection as part of the implementation study team’s introductory comments at the start of the interview.

All data from the program fidelity logs will be transmitted with a unique identifier and not with personally identifying information. The unique identifier is necessary to support combining the program attendance data with outcome data. We will also use a password-protected website to exchange the files. All electronic data will be stored in secure files.

Video and audio recording of youth. For the school(s) in which we conduct the pilot, the parent consent form details the plans for the recording and the privacy protections. The study team will coordinate the transfer of video and audio files from the recording devices to Mathematica’s secure internal network. The study team will never connect the video and audio files to student names, though because students and teachers will say each other’s names and may wear name tags, their names may be included in the recordings. Importantly, the study team does not plan to analyze these data at the individual level. Rather, the study team will aggregate the individual data to the classroom level, which will be the primary level of analysis. As noted above, throughout the video analysis, the video and audio files will be stored in secure locations and accessible only to staff on a need-to-know basis.

A.11. Justification for Sensitive Questions

The study seeks to understand what the core components of REA are and how they affect optimal health outcomes for youth, including changes in sexual risk behaviors and alcohol and drug use. When asked to complete surveys, participants will be informed that their identities will be kept private, and they do not have to answer questions that make them uncomfortable.

Table A.11.1 lists the sensitive topics on the surveys, along with justification for including each topic. Questions about sensitive topics will be drawn from previously successful youth surveys and similar federal evaluations (see Attachment B). Although these topics are sensitive, they are commonly and successfully asked of similar populations.

Table A.11.1. Summary of sensitive topics to be included on the youth outcome surveys, and justification for including

Topic | Justification
Sexual risk behaviors | REA includes content on sexual health, healthy decision making, communication skills, and healthy dating behaviors, which are expected to influence sexual risk-taking behaviors in youth and are a focus of the TPP funding. To measure the potential impact of this program component, the REA youth outcome surveys include questions about sexual activity. Similar questions have been approved by OMB for use in federal evaluations of teen pregnancy prevention programs.
Drug and alcohol use | The REA curriculum is designed to increase optimal health of adolescents by, for example, expanding their knowledge of the effects of drug and alcohol use and decreasing drug and alcohol use. To assess the impacts of REA on these targeted outcomes, the youth outcome surveys include questions about drug and alcohol use. All of these questions come from the Centers for Disease Control and Prevention’s Youth Risk Behavior Survey of high school youth.
Sexual orientation | There is a growing emphasis in healthy relationship education on inclusivity with respect to sexual orientation. For the REA study, we will ask respondents their sexual orientation (based on how they self-identify), both to better understand the populations being served and to statistically adjust for the role of sexual orientation as a predictor of relationship education outcomes.
Race | The study will collect demographic information, including race, from youth, because programs are delivered in a range of contexts. It is important to know the racial makeup of the youth receiving the program to understand the population being served and to statistically adjust for the role of race as a predictor of relationship education outcomes.

A.12. Estimates of the Burden of Data Collection

OPA is requesting three years of clearance for the REA study. Table A.12.1 provides the estimated annual reporting burden for study participants.

Annual burden for youth study participants

An expected 2,000 youth will be eligible to participate in the study across all participating sites. We expect to obtain consent for 80 percent of the eligible youth, for a total sample size of 1,600. We expect about 10 percent of the youth sample to be age 18 or older.

Youth outcome surveys. Youth outcome surveys will be administered at three points during the study. For the baseline and immediate follow-up surveys, we expect a response rate of 95 percent, for a total of 1,520 completes (1,600 * 0.95) and 507 annual completes (1,520/3) for each administration. The expected response rate for the six-month follow-up survey is 90 percent, for a total of 1,440 completes (1,600 * 0.90) and 480 annual completes (1,440/3). Based on experience with similar questionnaires, youth should take about 40 minutes (40/60 hours) to complete each survey, on average, for a total burden of 2,986 hours (1,013 hours + 1,013 hours + 960 hours). The annual burden for the youth outcome survey data collection is estimated to be 996 hours (338 hours + 338 hours + 320 hours).

Youth focus groups. We expect up to 10 youth participants in each of the 40 sites will participate in a focus group, for a total of 400 participants, or about 133 participants annually. Each focus group is expected to take 1.5 hours, yielding a total burden of 600 hours and an annual burden of 200 hours (600/3).

Youth engagement exit tickets. Youth participants with parental consent for the study will fill out a short exit ticket survey after each session of the REA curriculum. It is expected that an average REA curriculum sequence will be delivered over 12 sessions, and each exit ticket will take youth two minutes (2/60 hours) to complete. We expect that almost all youth attending a session will complete the exit ticket, which would be up to 1,600 youth total and 533 annually. The total burden is estimated at 640 hours (1,600 respondents * 12 sessions * 2/60 hours), and the annual burden is estimated to be 213 hours (533 annual respondents * 12 sessions * 2/60 hours).

Video and audio recording of youth. There is no burden associated with the collection of the recordings. The audio and video data will be collected in the background by the study team. Youth study participants will simply participate in class as normal.

Annual burden for program facilitators

Fidelity logs. Program facilitators (1 per site, 40 sites total, where each facilitator teaches two periods/classrooms of REA) will be expected to complete a fidelity log for each session to report on attendance, content covered during the session, and program components that were completed. We expect these data will be reported by up to 40 respondents total and 13 annually. Completion of the fidelity log is estimated to take 10 minutes (10/60 hours). The total burden is estimated to be 160 hours, and the annual burden is estimated to be 52 hours (13 annual respondents * 2 class periods * 12 sessions per class * 10/60 hours).

Facilitator interviews. We expect to have sites in about eight school districts or CBOs. Each participating district or CBO will have up to 2 program facilitators delivering the curriculum at the study sites, for a total of 16 program facilitators, or about 5 annually. We expect to conduct interviews with each of the program facilitators twice, once before programming and once post-programming. Interviews are estimated to last one hour, for an annual estimated burden of 10 hours (5 respondents * 2 responses * 1 hour each).

Annual burden for District/CBO leadership

District/CBO Leadership Interviews. We will interview district or CBO leadership, which we estimate will be up to 4 people at each of the eight districts or CBOs participating in the study, for a total of 32 respondents, or about 11 annually. We expect to conduct interviews with each leader twice, once before programming and once post-programming. Interviews are estimated at 45 minutes (45/60 hours) for a total annual burden of 17 hours (11 respondents * 2 responses * 45/60).

Table A.12.1. Calculations of annual burden hours

Instrument | Type of respondent | Annual number of respondents | Number of responses per respondent | Average burden hours per response | Annual burden hours
1. Youth outcome survey | Youth | 498 | 3 | 40/60 | 996
2. Youth focus group | Youth | 133 | 1 | 90/60 | 200
3. Youth engagement exit ticket | Youth | 533 | 12 | 2/60 | 213
4. Fidelity log | Program facilitators | 13 | 24 | 10/60 | 52
5. Facilitator interview topic guide | Program facilitators | 5 | 2 | 1 | 10
6. District/CBO leadership interview topic guide | District/school/CBO leadership | 11 | 2 | 45/60 | 17
Estimated annual burden: Total | | 1,193 | 44 | | 1,488
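
The arithmetic behind Table A.12.1 can be checked with a short calculation. The Python sketch below is illustrative only and is not part of the study protocol. It assumes that each row's annual hours equal respondents × responses × minutes per response / 60, rounded half up to the nearest whole hour; that rounding rule is an assumption, chosen because it reproduces the published figures. The names `ROWS` and `annual_hours` are hypothetical.

```python
# Inputs copied from Table A.12.1: (instrument, annual respondents,
# responses per respondent, minutes per response).
ROWS = [
    ("Youth outcome survey", 498, 3, 40),
    ("Youth focus group", 133, 1, 90),
    ("Youth engagement exit ticket", 533, 12, 2),
    ("Fidelity log", 13, 24, 10),
    ("Facilitator interview topic guide", 5, 2, 60),
    ("District/CBO leadership interview topic guide", 11, 2, 45),
]


def annual_hours(respondents: int, responses: int, minutes: int) -> int:
    """Annual burden hours for one instrument.

    Assumption: hours are rounded half up to a whole hour. Integer
    arithmetic is used so that exact halves (for example, 16.5) round up.
    """
    total_minutes = respondents * responses * minutes
    return (total_minutes + 30) // 60


hours_by_instrument = {name: annual_hours(n, k, m) for name, n, k, m in ROWS}
total_hours = sum(hours_by_instrument.values())

for name, hours in hours_by_instrument.items():
    print(f"{name}: {hours}")
print(f"Estimated annual burden: {total_hours}")
```

Under these assumptions the row values and the total match the table (1,488 annual burden hours).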

Total Annual Cost

We estimate the average hourly wage for program facilitators to be $25.09, based on the average hourly wage for “Community and Social Service Occupations” as determined by the U.S. Bureau of Labor Statistics Occupational Employment and Wage Statistics for 2020. The total annual cost of program facilitators for completing fidelity logs and interviews is estimated to be $1,555.58 ($25.09 * 62 annual hours). For site leadership, which includes school district administrators, school principals, and leaders of community-based organizations, we estimate the average hourly wage to be $55.38, based on the average hourly wage of “Education Administrators, Postsecondary.” The total annual cost of site leadership is estimated at $941.46 ($55.38 * 17 annual hours). We estimate the average hourly wage for youth respondents at $7.25, based on the federal minimum wage. The total annual cost for youth respondents across Instruments 1, 2, and 3 is $10,215.25 ($7.25 * 1,409 annual hours across all youth instruments).

The estimated total annual cost burden is $12,712.29 (Table A.12.2).

Table A.12.2. Annualized cost to respondents

Type of respondent | Total burden hours | Hourly wage | Total respondent costs
Youth | 1,409 | $7.25 | $10,215.25
Program facilitators | 62 | $25.09 | $1,555.58
District/school/CBO leadership | 17 | $55.38 | $941.46
Total | 1,488 | | $12,712.29
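
The annualized cost figures in Table A.12.2 follow from multiplying each group's annual burden hours by its assumed hourly wage. The Python sketch below is an illustrative check only; the variable names are hypothetical, and `Decimal` is used simply to keep the dollar amounts exact.

```python
from decimal import Decimal

# (respondent type, annual burden hours, hourly wage) from Table A.12.2.
COST_ROWS = [
    ("Youth", 1409, Decimal("7.25")),
    ("Program facilitators", 62, Decimal("25.09")),
    ("District/school/CBO leadership", 17, Decimal("55.38")),
]

# Annual cost per respondent type = burden hours * hourly wage.
costs = {name: hours * wage for name, hours, wage in COST_ROWS}
total_cost = sum(costs.values(), Decimal("0"))
total_burden_hours = sum(hours for _, hours, _ in COST_ROWS)

for name, cost in costs.items():
    print(f"{name}: ${cost:,.2f}")
print(f"Total: {total_burden_hours:,} hours, ${total_cost:,.2f}")
```

The computed total agrees with the estimated total annual cost burden of $12,712.29.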

A.13. Estimates of Other Total Annual Cost Burden to Respondents and Record Keepers

These information collection activities do not impose any capital or maintenance costs on respondents.

A.14. Annualized Cost to Federal Government

Data collection and analysis will be carried out by Mathematica under its contract with OPA to conduct the Components Study of REA. OPA staff will not be involved in either data collection or data analysis; thus, no agency labor or resources are involved in conducting this study. The total and annualized costs to the federal government are in Table A.14.1.

Table A.14.1. Annualized cost to federal government by cost category

Cost Category | Estimated Costs
Instrument development and OMB clearance | $481,937
Data collection | $502,566
Analysis | $227,566
Publications/dissemination | $38,655
Total costs over the request period | $1,250,724
Annual costs | $416,908

A.15. Explanation for Program Changes or Adjustments

This is a new data collection.

A.16. Plans for Tabulation and Publication and Project Time Schedule

1. Analysis Plan

We will analyze implementation data from all sources to address key research questions and present findings in engaging and meaningful ways. Our analysis will be designed to (1) describe the REA core components and how they were operationalized at each site; (2) document how the selected components were implemented, and explore variations in implementation quality and youth engagement; and (3) describe the experience of youth and facilitators with the selected components, and share lessons learned.

For qualitative data, we will use a structured, systematic analytic approach to identify key findings. Our analyses will draw out the variations and similarities in the implementation of the core components to understand and inform the outcome study. We will also conduct quantitative analyses of attendance and fidelity monitoring data, and of quality ratings from observations. These systematic analyses will yield more key details on how implementation of each component varies and of the components’ possible relationships to key outcomes. Where feasible, measures and findings from both the qualitative and quantitative analyses will be refined and incorporated into the outcome study.

Our approach to answering research questions that link core components to outcomes is based on (1) the approach described in Cole and Choi (2020) for estimating which core components predict youth outcomes and (2) the approach described in Deke and Finucane (2019) for interpreting research findings using objective, evidence-based Bayesian posterior probabilities instead of p-values.

We will estimate the relationship between core components and youth outcomes using a multi-level model that accounts for variation at the site and youth levels and adjusts for contextual variables and key baseline measures. Outcomes for this analysis will be measured using follow-up surveys. We will examine how outcomes vary with respect to site-level components (for example, the content categories selected for implementation in a site, the specific lesson content offered, and facilitator training/background), as well as variables representing individual experiences (for example, dosage and content received, and quality and fidelity of delivery). We will focus estimation analysis on the partial r² statistic, which can be used to identify which combinations of core components are the best predictors of the outcomes. The analysis can include interactions among components in recognition that the whole of a collection of components may be greater than the sum of the parts.
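
To illustrate what a partial r² statistic measures, the Python sketch below compares a reduced model (intercept and a baseline measure) with a full model that adds one component indicator. It is a simplified illustration, not the study's analysis: it uses single-level ordinary least squares rather than the multi-level model described above, the data are synthetic, and the function names (`ols_sse`, `partial_r2`) and effect sizes are hypothetical.

```python
import random


def ols_sse(X, y):
    """Residual sum of squares from an ordinary least-squares fit of y on X.

    Solves the normal equations (X'X)b = X'y by Gauss-Jordan elimination.
    """
    p = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(row[i] * row[j] for row in X) for j in range(p)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    beta = [A[i][p] / A[i][i] for i in range(p)]
    return sum((yi - sum(b * x for b, x in zip(beta, row))) ** 2
               for row, yi in zip(X, y))


def partial_r2(X_reduced, X_full, y):
    """Share of the reduced model's unexplained variation that the added
    predictors explain: (SSE_reduced - SSE_full) / SSE_reduced."""
    sse_reduced, sse_full = ols_sse(X_reduced, y), ols_sse(X_full, y)
    return (sse_reduced - sse_full) / sse_reduced


# Synthetic data only (not study data): 1,600 youth, a baseline score,
# and a hypothetical indicator for receiving one core component.
rng = random.Random(0)
rows = []
for _ in range(1600):
    baseline = rng.gauss(0, 1)
    component = rng.choice([0.0, 1.0])
    outcome = 0.5 * baseline + 0.3 * component + rng.gauss(0, 1)
    rows.append((baseline, component, outcome))

y = [r[2] for r in rows]
X_reduced = [[1.0, r[0]] for r in rows]      # intercept + baseline
X_full = [[1.0, r[0], r[1]] for r in rows]   # adds the component indicator
pr2 = partial_r2(X_reduced, X_full, y)
print(f"Partial r2 for the component: {pr2:.3f}")
```

In a multi-level setting the same comparison would be made between nested mixed-effects models; the single-level version is shown only because it is self-contained.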

Although our estimation will focus on the partial r² statistic, our interpretation will focus on quantities more meaningful to a broad audience of parents, educators, and policymakers. First, we will report findings in terms of predicted differences in youth outcomes associated with varying core components (based on the coefficient estimates from the multilevel model). For example, we can report the predicted difference in skills associated with healthy relationships between (1) youth who receive a high dose of specific program modules from highly qualified facilitators and (2) youth who receive a low dose of the same program modules from less qualified facilitators.

Second, we will report which of those predicted differences are most likely to be genuine, given our estimates and prior evidence. The probability that a finding is genuine (given our estimates and prior evidence) is a Bayesian posterior probability. With this approach, we can make statements such as “We estimate a 75 percent probability that these core components are genuinely associated with improved healthy relationship skills, given our estimates and prior evidence about healthy relationship behaviors.” A key advantage of this approach is that it avoids the pitfalls of misinterpreting statistical significance or a lack thereof (Wasserstein and Lazar 2016; Greenland et al. 2016). However, we will also report the statistical significance of estimates for readers who are more comfortable with that interpretive approach.
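
As an illustration of how a Bayesian posterior probability of this kind can be computed, the Python sketch below applies the textbook normal-normal update: a normal prior for the true association is combined with a normally distributed estimate and its standard error. This is a minimal sketch under stated assumptions and does not reproduce the specific procedure in Deke and Finucane (2019); the function names and all numeric inputs are hypothetical.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def posterior_prob_positive(estimate, std_error, prior_mean, prior_sd):
    """P(true association > 0 | estimate) with a normal prior and a
    normal likelihood (precision-weighted average of prior and estimate)."""
    prior_precision = 1.0 / prior_sd ** 2
    data_precision = 1.0 / std_error ** 2
    post_var = 1.0 / (prior_precision + data_precision)
    post_mean = post_var * (prior_precision * prior_mean
                            + data_precision * estimate)
    return normal_cdf(post_mean / math.sqrt(post_var))


# Hypothetical inputs in standard-deviation units of the outcome:
# an estimated difference of 0.10 (SE 0.08) and a prior centered on
# zero with a standard deviation of 0.10.
prob = posterior_prob_positive(estimate=0.10, std_error=0.08,
                               prior_mean=0.0, prior_sd=0.10)
print(f"Posterior probability of a positive association: {prob:.0%}")
```

The prior pulls the estimate toward zero, so the posterior probability is more conservative than one based on the estimate alone; the strength of that pull depends entirely on the assumed prior.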

Another advantage of our interpretive approach is that we can draw meaningful distinctions between findings that would be deemed statistically insignificant using the traditional approach. This allows us to learn more with the same sample size. We believe the study will be adequately powered using the traditional approach as well. Given the recruitment targets (see A.12), the study can detect partial r² statistics of between 1 and 2 percentage points. Goesling and Lee (2015) found relationships of a similar magnitude in a study on the roles sexually risky behaviors play in predicting sexual behavior outcomes.

For the pilot study, once we have the classroom-level engagement metrics, we can compare the metrics against distinct types of activities or content being offered throughout the session. For example, we will be able to examine whether role-play activities are associated with higher levels of youth engagement than facilitator presentations, or whether certain types of program content (for example, sexual health content versus more generic relationship skills content) are associated with higher or lower levels of youth engagement.

Finally, the continuous metric of youth engagement can be aggregated or averaged to the class period or to the entire program, yielding a unit of analysis comparable to that of the observer or youth exit ticket measures. With comparable units of analysis, we will run correlations to assess the strength of the relationships between the different measures of youth engagement.
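
The aggregation and correlation step can be sketched as follows. This Python example is illustrative only: the engagement scores, exit ticket ratings, scales, and period labels are made-up values, and the helper `pearson` is a hypothetical name for a plain Pearson correlation.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical machine-coded engagement readings: (class period, score),
# with several readings per period.
machine_scores = [
    ("period_1", 0.62), ("period_1", 0.70), ("period_1", 0.66),
    ("period_2", 0.41), ("period_2", 0.47),
    ("period_3", 0.80), ("period_3", 0.76), ("period_3", 0.84),
    ("period_4", 0.55), ("period_4", 0.53),
]
# Hypothetical mean exit ticket engagement rating for the same periods.
exit_ticket_means = {"period_1": 3.9, "period_2": 3.1,
                     "period_3": 4.4, "period_4": 3.6}

# Aggregate the continuous metric to the class-period level.
by_period = defaultdict(list)
for period, score in machine_scores:
    by_period[period].append(score)
machine_means = {period: mean(scores) for period, scores in by_period.items()}

# Correlate the two measures across class periods.
periods = sorted(exit_ticket_means)
r = pearson([machine_means[p] for p in periods],
            [exit_ticket_means[p] for p in periods])
print(f"Correlation across {len(periods)} class periods: {r:.2f}")
```

The same pattern applies when aggregating to the entire program instead of the class period; only the grouping key changes.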

2. Time Schedule and Publications

Table A.16.1 shows the tentative timeline for data collection and reporting activities. Sample enrollment and baseline and immediate post-survey data collection are expected to begin around January 2022, after OMB approval is obtained, and to continue for up to three semesters, through June 2023. Fidelity and implementation data collection will begin as soon as REA program delivery to sample members begins and will continue through the end of programming with sample members, approximately January 2022 through June 2023. Data collection for the six-month follow-up survey will begin around September 2022, six months after the end of the first round of programming, and will continue until six months after the final round of programming, in December 2023.

The planned reporting activities are (1) two research-to-practice briefs and technical reports based on implementation and early outcome data; (2) a report on the youth engagement pilot; (3) a research-to-practice brief and brief technical report based on the six-month follow-up youth outcome survey; (4) an interactive data dashboard that uses the model coefficients from the multilevel analyses to let users produce real-time predictions of the outcomes measured in the follow-up survey, based on the variations in implementation they select; and (5) a restricted-use file.

Table A.16.1. Schedule for the Components Study of Real Essentials Advance

Activity | Timing (a)
Data collection |
Sample enrollment and pre-post surveys | January 2022–June 2023
Implementation study data collection | January 2022–June 2023
Youth engagement pilot data collection | Likely fall 2022; possibly spring 2022
Six-month follow-up surveys | September 2022–January 2024
Reporting |
Research-to-practice briefs (2) | March 2023
Brief technical reports (2) | March 2023
Interactive website | March 2023
Youth engagement pilot report | September 2023
Long-term follow-up brief technical report | March 2024
Long-term follow-up research-to-practice brief | March 2024
Restricted-use file | March 2024

(a) Subject to timing of OMB approval.

A.17. Reason(s) Display of OMB Expiration Date is Inappropriate

All instruments will show the OMB Control Number and expiration date.

A.18. Exceptions to Certification for Paperwork Reduction Act Submissions

No exceptions are necessary for this information collection.

References

Blase, K., and D. Fixsen. “Core Intervention Components: Identifying and Operationalizing What Makes Programs Work.” Washington, DC: Office of the Assistant Secretary for Planning and Evaluation, Office of Human Services Policy, U.S. Department of Health and Human Services, 2013. Available at https://aspe.hhs.gov/report/core-intervention-components-identifying-and-operationalizing-what-makes-programs-work.

Berlin, Martha, Leyla Mohadjer, Joseph Waksberg, Andrew Kolstad, Irwin Kirsch, D. Rock, and Kentaro Yamamoto. “An Experiment in Monetary Incentives.” In JSM proceedings, pp. 393–98. Alexandria, VA: American Statistical Association, 1992.

Cole, R., and J. Choi. “Understanding How Components of an Intervention Can Influence Outcomes.” Evaluation technical assistance brief submitted to the Office of Adolescent Population Affairs. Under review. Princeton, NJ: Mathematica, March 2020.

Deke, J., and M. Finucane. “Moving Beyond Statistical Significance: The BASIE (BAyeSian Interpretation of Estimates) Framework for Interpreting Findings from Impact Evaluations.” OPRE Report no. 2019-35. Washington, DC: U.S. Department of Health and Human Services, Administration for Children and Families, Office of Planning, Research and Evaluation, 2019.

Ferber, T., M. E. Wiggins, and A. Sileo. “Advancing the Use of Core Components of Effective Programs.” Washington, DC: The Forum for Youth Investment, 2019. Available at https://forumfyi.org/knowledge-center/advancing-core-components/.

Greenland, S., S. J. Senn, K. J. Rothman, J. B. Carlin, C. Poole, S. N. Goodman, and D. G. Altman. “Statistical Tests, P Values, Confidence Intervals, and Power: A Guide to Misinterpretations.” European Journal of Epidemiology, vol. 31, no. 4, 2016, pp. 337–350.

Groves, R. M., E. Singer, and A. D. Corning. “A Leverage-Saliency Theory of Survey Participation: Description and Illustration.” Public Opinion Quarterly, vol. 64, 2000, pp. 299–308.

Goesling, B., and J. Lee. “Improving the Rigor of Quasi-Experimental Impact Evaluations.” ASPE research brief. Washington, DC: U.S. Department of Health and Human Services, Office of the Assistant Secretary for Planning and Evaluation, Office of Human Services Policy, May 2015.

James, Jeannine M., and Richard Bolstein. “The Effect of Monetary Incentives and Follow-Up Mailings on the Response Rate and Response Quality in Mail Surveys.” Public Opinion Quarterly, vol. 54, no. 3, 1990, pp. 346–61.

National Academies of Sciences, Engineering, and Medicine (NAS). “Promoting Positive Adolescent Health Behaviors and Outcomes: Thriving in the 21st Century.” Washington, DC: The National Academies Press, 2020. https://doi.org/10.17226/25552.

National Academies of Sciences, Engineering, and Medicine (NAS). “Applying Lessons of Optimal Adolescent Health to Improve Behavioral Outcomes for Youth, Public Information Gathering Session: Proceedings of a Workshop in Brief.” Washington, DC: The National Academies Press, 2019.

Pejtersen, Jan Hyld. “The Effect of Monetary Incentive on Survey Response for Vulnerable Children and Youths: A Randomized Controlled Trial.” PLoS ONE, vol. 15, no. 5, 2020, e0233025. https://doi.org/10.1371/journal.pone.0233025.

Shettle, C., and G. Mooney. “Monetary Incentives in Government Surveys.” Journal of Official Statistics, vol. 15, 1999, pp. 231–250.

Singer, Eleanor, John Van Hoewyk, and Mary P. Maher. “Does the Payment of Incentives Create Expectation Effects?” Public Opinion Quarterly, vol. 62, 1998, pp. 152–64.

Frensley, Troy, Marc J. Stern, and Robert B. Powell. “Does Student Enthusiasm Equal Learning? The Mismatch Between Observed and Self-Reported Student Engagement and Environmental Literacy Outcomes in a Residential Setting.” The Journal of Environmental Education, vol. 51, no. 6, 2020, pp. 449–461. https://doi.org/10.1080/00958964.2020.1727404.

Wasserstein, R. L., and N. A. Lazar. “The ASA’s Statement on p-Values: Context, Process, and Purpose.” The American Statistician, vol. 70, no. 2, 2016, pp. 129–133.

1 Sites will primarily be high schools but may also be community-based organizations (CBOs).

2 Optimal health is a dynamic balance of physical, emotional, social, spiritual, and intellectual health.

3 Although we may collect some data virtually for the study, we expect program implementation to be in person.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | Mathematica Report Template |

| Author | Sharon Clark |


| File Created | 2022-01-14 |
