0648-0788 Supporting Statement Part B

Economic Analysis of Shoreline Treatment Options for Coastal New Hampshire

OMB: 0648-0788

SUPPORTING STATEMENT

U.S. Department of Commerce

National Oceanic & Atmospheric Administration

Economic Analysis of Shoreline Treatment Options for Coastal New Hampshire

OMB Control No. 0648-0788

B. Collections of Information Employing Statistical Methods

Describe (including a numerical estimate) the potential respondent universe and any sampling or other respondent selection method to be used. Data on the number of entities (e.g., establishments, State and local government units, households, or persons) in the universe covered by the collection and in the corresponding sample are to be provided in tabular form for the universe as a whole and for each of the strata in the proposed sample. Indicate expected response rates for the collection as a whole. If the collection had been conducted previously, include the actual response rate achieved during the last collection.

Potential Respondent Universe and Response Rate

The potential respondent universe for this study includes residents aged 18 and over living in Census Block Groups located 1) in the two coastal counties in New Hampshire, 2) in Massachusetts within Census Block Groups bordering the Hampton-Seabrook Estuary, and 3) in Maine within Census Block Groups bordering the Piscataqua River. The population will be stratified by households living in block groups bordering three water bodies of interest (Great Bay Estuary, Hampton-Seabrook Estuary, and Piscataqua River), as well as by households of the 17 communities located in the coastal zone to enable subgroup analyses of interest.

The estimated total number of occupied households in the study region is 175,020 (US Census Bureau/American FactFinder, 2015a) and the estimated total population 18 years and over is 361,994 (US Census Bureau/American FactFinder, 2015b).

In terms of response rate, as a part of the 2010 decennial census, the U.S. Census Bureau reported mail-back participation rates ranging from 51% to 78% for New Hampshire counties (US Census Bureau, 2010). Recent studies conducted in the region on topics similar to those investigated in the present study were examined to determine a reasonable response rate. Johnston, Feurt, and Holland (2015) reported a response rate of 32.4% on their mail-back contingent choice survey of Maine watershed residents; Myers et al. (2010) and Edwards et al. (2011) reported a 65% response rate from an intercept survey in the Delaware Bay to estimate the economic value of birdwatching; and ERG (2016) reported a 51% response rate from an online survey of New York households to understand preferences and values for shoreline armoring versus living shorelines and a 54% response rate from an online survey of New Jersey households to understand values of salt marsh restoration at Forsythe National Wildlife Refuge. To better understand the social context of the issue in the region of interest, researchers talked with key partners to gather anecdotal information on the level of public knowledge, interest, and awareness of shoreline treatment options. Additionally, researchers attended a public workshop for local property owners interested in learning how to protect their property from coastal flooding.

Response rates for the pre-test ranged from 17% to 31% depending on the region, with an overall response rate of 24%. However, the pre-test was launched in November 2020, shortly after the general election and continued through the holiday season. Therefore, we anticipate higher response rates during the full survey implementation (late-summer through early fall, 2021).

Based on the information gathered, researchers anticipate a response rate of approximately 25%.

Sampling and Respondent Selection Method

A pretest was conducted on 540 individuals using address-based sampling to select residential households randomly within each of the strata. The University of New Hampshire Survey Center secured the address-based frame.

Data will be collected using a two-stage stratified random sampling design. The study region will be stratified geographically. Details of the strata are explained below. Within each stratum, we will be selecting households at random, and within each selected household, the individual with the next upcoming birthday who is aged 18 or older will be selected. Therefore, the primary sampling unit (PSU) is the household, and the secondary sampling unit (SSU) consists of individuals selected within each household. The proposed strata will allow the researchers to examine the influence of geographic proximity on respondent opinions and values.

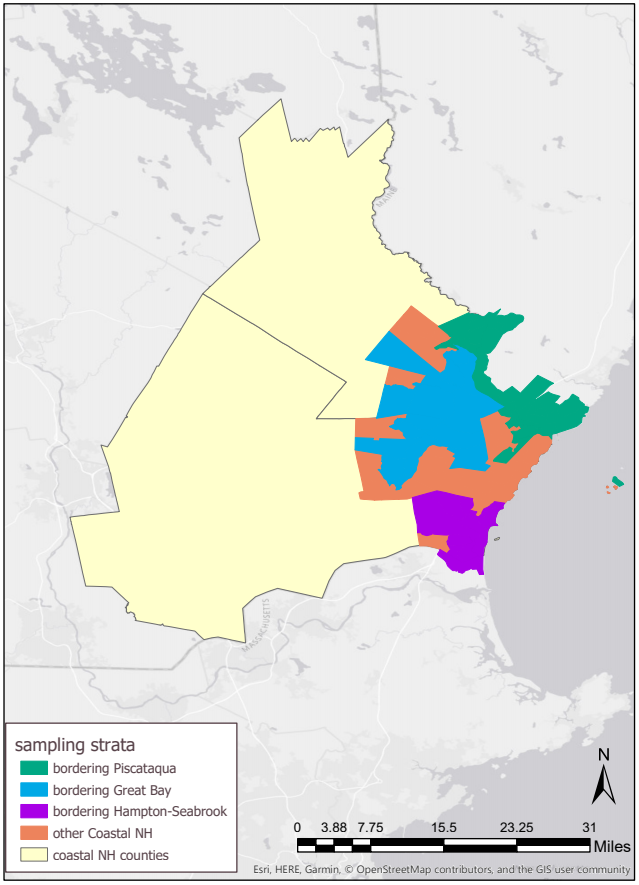

The strata are comprised of the following (Figure 1):

Block groups bordering the Great Bay Estuary (bordering Great Bay)

Block groups bordering the Hampton-Seabrook Estuary (bordering Hampton-Seabrook)

Block groups bordering the Piscataqua River (bordering Piscataqua)

Block groups within the 17 coastal zone communities (other Coastal NH)

Block groups within the two coastal counties (coastal NH counties)

Figure 1: Sampling geography by strata

Table 2 provides a breakdown of the tentative estimated number of completed surveys desired for each stratum, along with the sample size per stratum. In order to obtain our estimated minimum number of respondents (d), the sample size needs to be increased to account for both non-response (e) and mail non-delivery (f). Therefore, based on the statistical sampling methodology discussed in detail in Question 2 below, the 25% response rate, and the 10% non-deliverable rate (rate based on expert opinion of the survey vendor and a 6.3% non-deliverable rate for the pre-test), the sample size for the final collection will be 10,242. The “coastal NH counties” stratum is further stratified by county to help ensure geographic coverage. As we are interested in comparing differences between strata, an equal allocation is used to minimize the sampling variance. However, the sample size for the “coastal NH counties” stratum is doubled to reduce the variance in sampling weights and proportionately allocated between the two counties to avoid randomly sampling only one of the counties. See Section 2.v. below for more details on determining the minimum sample size.

To approximate random selection of one respondent within the household, instruction will be given in the informational letter accompanying the survey package asking that the survey be completed by the person in the household age of 18 or older who has the next upcoming birthday.

Table 2: Estimates of sample size by strata

| Strata | Sub-strata | Estimated Population 18 and over (a) | Estimated Occupied Households (b) | Pretest Sample (c) | Estimated Minimum Number of Respondents (d) | Sample Size Adjusted for 25% Response Rate (e)=(d) ÷ 25% | Sample Size Adjusted for 10% Non-Deliverable Rate (f)=(e) ÷ (1−10%) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Great Bay | -- | 30,037 | 12,237 | 90 | 384 | 1,536 | 1,707 |
| Hampton-Seabrook | -- | 18,725 | 10,169 | 90 | 384 | 1,536 | 1,707 |
| Piscataqua | -- | 30,638 | 16,891 | 90 | 384 | 1,536 | 1,707 |
| other Coastal NH | -- | 63,165 | 28,511 | 90 | 384 | 1,536 | 1,707 |
| coastal NH counties | Rockingham | 160,393 | 77,121 | 129 | 552 | 2,208 | 2,454 |
| | Strafford | 59,036 | 30,091 | 51 | 216 | 864 | 960 |
| TOTAL | | 361,994 | 175,020 | 540 | 2,304 | 9,216 | 10,242 |
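The adjustments in columns (e) and (f) of Table 2 can be reproduced with simple arithmetic. The following is a minimal sketch, not the survey vendor's procedure: the function name `adjusted_sample_size` and the choice to round up to whole addresses are assumptions made for illustration, while the rates and column (d) values are taken from the text and table.

```python
import math
from fractions import Fraction

# Rates stated in the text; exact fractions avoid floating-point rounding surprises.
RESPONSE_RATE = Fraction(25, 100)
NON_DELIVERABLE_RATE = Fraction(10, 100)

# Column (d) of Table 2: estimated minimum number of respondents per stratum.
MIN_RESPONDENTS = {
    "Great Bay": 384,
    "Hampton-Seabrook": 384,
    "Piscataqua": 384,
    "other Coastal NH": 384,
    "coastal NH counties / Rockingham": 552,
    "coastal NH counties / Strafford": 216,
}


def adjusted_sample_size(min_respondents):
    """Return columns (e) and (f) for one stratum, rounding up (an assumption)."""
    e = math.ceil(min_respondents / RESPONSE_RATE)   # (e) = (d) / 25%
    f = math.ceil(e / (1 - NON_DELIVERABLE_RATE))    # (f) = (e) / (1 - 10%)
    return e, f


adjusted = {name: adjusted_sample_size(d) for name, d in MIN_RESPONDENTS.items()}
total_e = sum(e for e, _ in adjusted.values())
total_f = sum(f for _, f in adjusted.values())
```

Under these assumptions, `total_f` matches the final-collection sample size of 10,242 stated above.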

Describe the procedures for the collection of information including:

Statistical methodology for stratification and sample selection,

Estimation procedure,

Degree of accuracy needed for the purpose described in the justification,

Unusual problems requiring specialized sampling procedures, and

Any use of periodic (less frequent than annual) data collection cycles to reduce burden.

Stratification and Sample Selection

Residential households will be randomly selected from each stratum using an address-based frame procured from the U.S. Postal Service.

Weighting

For obtaining population-based estimates of various parameters, each responding household will be assigned a sampling weight. The weights will be used to produce estimates that:

are generalizable to the population from which the sample was selected;

account for differential probabilities of selection across the sampling strata;

match the population distributions of selected demographic variables within strata; and

allow for adjustments to reduce potential non-response bias.

These weights combine:

a base sampling weight which is the inverse of the probability of selection of the household;

a within-stratum adjustment for differential non-response across strata; and

a non-response weight.

Post-stratification adjustments will be made to match the sample to known population values (e.g., from Census data).

There are various models that can be used for non-response weighting. For example, non-response weights can be constructed based on estimated response propensities or on weighting class adjustments. Response propensities are designed to treat non-response as a stochastic process in which there are shared causes of the likelihood of non-response and the value of the survey variable. The weighting class approach assumes that within a weighting class (typically demographically-defined), non-respondents and respondents have the same or very similar distributions on the survey variables. If this model assumption holds, then applying weights to the respondents reduces bias in the estimator that is due to non-response. Several factors, including the difference between the sample and population distributions of demographic characteristics, and the plan for how to use weights in the regression models will determine which approach is most efficient for both estimating population parameters and for the stated-preference modeling.
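To make the weighting components concrete, the sketch below combines a base sampling weight with a weighting-class non-response adjustment and a post-stratification ratio. It is an illustration under stated assumptions, not the final weighting procedure: the function names are hypothetical, the whole stratum is treated as a single weighting class, and the respondent count is invented for the example. The household and sample counts are the Great Bay figures from Table 2.

```python
def base_weight(households_in_stratum, sampled_households):
    """Base sampling weight: inverse of the household selection probability."""
    return households_in_stratum / sampled_households


def weighting_class_adjustment(sampled_in_class, respondents_in_class):
    """Non-response factor: inverse of the response rate within a weighting class."""
    return sampled_in_class / respondents_in_class


def poststratification_factor(population_total, weighted_respondent_total):
    """Ratio that scales weighted respondents to a known population total."""
    return population_total / weighted_respondent_total


# Great Bay stratum figures from Table 2; the respondent count is hypothetical.
households = 12_237
sampled = 1_707
respondents = 384  # hypothetical: exactly the minimum number of completes

w_base = base_weight(households, sampled)
w_final = w_base * weighting_class_adjustment(sampled, respondents)

# With a single weighting class the weights already sum to the household total,
# so the post-stratification factor is 1 in this toy case.
ps_factor = poststratification_factor(households, w_final * respondents)
```

In practice the weighting classes would be defined within strata (for example, by demographic group), and the post-stratification factor would differ from 1 wherever the weighted sample departs from the Census totals.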

Experimental Design

Experimental design for the choice experiment surveys will follow established practices. Fractional factorial design will be used to construct choice questions with an orthogonal array of attribute levels, with questions randomly divided among distinct survey versions (Louviere et al., 2000). Based on standard choice experiment experimental design procedures (Louviere et al., 2000), the number of questions and survey versions were determined by, among other factors, the number of attributes in the final experimental design and complexity of questions and the number of attributes that may be varied within each question while maintaining respondents’ ability to make appropriate neoclassical tradeoffs.

Based on the models proposed below and recommendations in the literature, researchers anticipate an experimental design that allows for the estimation of main effects and all two-way interaction effects of program attributes (Louviere et al., 2000). Choice sets (Bennett and Blamey, 2001), including variable level selection, were designed with the goal of illustrating realistic policy scenarios that “span the range over which we expect respondents to have preferences, and/or are practically achievable” (Bateman et al. 2002, p. 259), following guidance in the literature. Each treatment (survey question) includes two alternative options (Options A and B) and an “opt-out” option, each characterized by six attributes. Hence, there are 18 attributes for each treatment. Guided by realistic ranges of attribute outcomes, the six attributes would have three potential levels.

The Stata program code DCREATE provided by Hole (2015) was used to generate an optimal design and test the efficiency of the design. There are then two decisions to make: 1) the number of choices any one respondent has to make and 2) the number of different versions of the survey.

Initial runs of the programs indicated that an optimal design would require at least 37 alternatives/options to achieve an orthogonal (attributes are uncorrelated) design. An optimal design ensures we can estimate the marginal effects or marginal values of each attribute for the main effects and all two-way interaction effects. The design will use four choice questions per respondent blocked into 10 versions. Each choice contains two alternative options and an opt-out option with attributes at different levels. The final design can be found in Appendix B.
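The scale of the design problem can be seen by counting. The sketch below only enumerates the full factorial and tallies the blocked design described above; it does not reproduce the DCREATE search or its efficiency criterion. Variable names are assumptions made for illustration.

```python
from itertools import product

N_ATTRIBUTES = 6              # attributes per option
N_LEVELS = 3                  # levels per attribute
QUESTIONS_PER_RESPONDENT = 4  # choice questions in each survey version
N_VERSIONS = 10               # survey versions (blocks)
OPTIONS_PER_QUESTION = 2      # Options A and B; the opt-out is not varied

# Every possible option profile: each attribute takes one of three levels.
full_factorial = list(product(range(N_LEVELS), repeat=N_ATTRIBUTES))
n_profiles = len(full_factorial)

# The fractional design uses only a small subset of those profiles.
n_choice_sets = QUESTIONS_PER_RESPONDENT * N_VERSIONS
n_designed_alternatives = n_choice_sets * OPTIONS_PER_QUESTION
```

With these inputs, the blocked design supplies 80 designed alternatives drawn from 729 possible profiles, which exceeds the minimum of 37 alternatives reported for the initial runs.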

Estimation Procedures

The model for analysis of stated preference data is grounded in the standard random utility model of Hanemann (1984) and McConnell (1990). This model is applied extensively within stated preference research, and allows well-defined welfare measures (i.e., willingness to pay) to be derived from choice experiment models (Bennett and Blamey, 2001; Louviere et al., 2000). Within the standard random utility model applied to choice experiments, hypothetical program alternatives are described in terms of attributes that focus groups reveal as relevant to respondents’ utility, or well-being (Johnston et al., 1995; Adamowicz et al., 1998; Opaluch et al., 1993). One of these attributes would include a monetary cost to the respondent’s household.

Applying this standard model to choices among programs to improve environmental quality in Coastal New Hampshire, a standard utility function, $U_{ij}(\cdot)$, includes attributes of shoreline treatment programs and the net cost of the program to the respondent. Following standard random utility theory, utility is assumed known to the respondent, but stochastic from the perspective of the researcher, such that:

$$U_{ij} = V(X_{ij}, D_i) + \varepsilon_{ij}$$

where,

$X_{ij}$ is a vector of variables describing attributes for alternative $j$, as perceived by household respondent $i$

$D_i$ is a vector of socio-demographic characteristics for household respondent $i$

$V(\cdot)$ is a function representing the empirically estimable component of utility

$\varepsilon_{ij}$ is a stochastic or unobservable component of utility, modeled as an econometric error

An individual, $i$, is assumed to choose the option, $j$, that maximizes their utility among $J$ options in the choice set $C$. The choice probability of any particular option, $j$, is the probability that the utility of that option is greater than the utility of the other option:

$$P_{ij} = \Pr\left(U_{ij} > U_{ik}\right) \quad \text{for all } k \in C,\ k \neq j$$

If error terms are assumed to be independently and identically distributed, and if this distribution can be assumed to be Gumbel, the above can be estimated as a conditional logit model.
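Under the Gumbel assumption, the conditional logit choice probability of option j takes the closed form exp(V_j) divided by the sum of exp(V_k) over all options k in the choice set. The sketch below evaluates that expression for one choice question. The coefficient values and attribute levels are hypothetical and chosen only for illustration; they are not estimates from this study.

```python
import math


def linear_utility(attributes, coefficients):
    """Deterministic utility as a linear-in-parameters index of the attributes."""
    return sum(b * x for b, x in zip(coefficients, attributes))


def conditional_logit_probabilities(utilities):
    """Choice probabilities exp(V_j) / sum_k exp(V_k), computed stably."""
    shift = max(utilities)  # subtracting the maximum avoids overflow in exp
    weights = [math.exp(v - shift) for v in utilities]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical coefficients: one program attribute and household cost.
coefficients = [0.8, -0.02]

# Hypothetical choice question: Option A, Option B, and the opt-out (all zeros).
options = [(2, 30), (1, 10), (0, 0)]

utilities = [linear_utility(x, coefficients) for x in options]
probabilities = conditional_logit_probabilities(utilities)
```

The probabilities sum to one across the three options, and the option with the highest deterministic utility receives the largest probability.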

Four choice questions are included within the same survey to increase information from each respondent. While respondents will be instructed to consider each choice question as independent of other choice questions, it is nonetheless standard practice within the literature to allow for the potential of correlation among questions answered within a single survey by a single respondent. That is, responses provided by individual respondents may be correlated even though responses across different respondents are considered independent and identically distributed (Poe et al. 1997; Layton 2000; Train 1998).

There are a variety of approaches to accommodate such potential correlation. Models to be assessed include random effects and random parameters (mixed) discrete choice models, common in the stated preference literature (Greene 2018; McFadden and Train 2000; Poe et al. 1997; Layton 2000). Within such models, selected elements of the coefficient vector are assumed normally distributed across respondents, often with free correlation allowed among parameters (Greene 2018). If only the model intercept is assumed to include a random component, then a random effects model is estimated. If both slope and intercept parameters may vary across respondents, then a random parameters model is estimated. Such models will be estimated using standard maximum likelihood for mixed logit techniques, as described by Train (1998), Greene (2008) and others. Mixed logit model performance of alternative specifications will be assessed using standard statistical measures of model fit and convergence, as detailed by Greene (2008, 2018) and Train (1998).
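As an illustration of how random parameters enter the choice probability, the sketch below simulates the probability for a single choice question by averaging conditional logit probabilities over draws of normally distributed coefficients. It is a simplified sketch, not the estimation routine to be used: the coefficients are assumed independent, all means and standard deviations are hypothetical, and a full panel treatment would average the product of a respondent's four choice probabilities within each draw.

```python
import math
import random


def logit_probabilities(utilities):
    """Conditional logit probabilities for one set of utilities."""
    shift = max(utilities)
    weights = [math.exp(u - shift) for u in utilities]
    total = sum(weights)
    return [w / total for w in weights]


def simulated_mixed_logit_probabilities(alternatives, means, std_devs,
                                        n_draws=1000, seed=0):
    """Average logit probabilities over independent normal coefficient draws."""
    rng = random.Random(seed)
    averaged = [0.0] * len(alternatives)
    for _ in range(n_draws):
        betas = [rng.gauss(m, s) for m, s in zip(means, std_devs)]
        utilities = [sum(b * x for b, x in zip(betas, alt)) for alt in alternatives]
        for k, p in enumerate(logit_probabilities(utilities)):
            averaged[k] += p / n_draws
    return averaged


# Hypothetical options (attribute level, cost): Option A, Option B, opt-out.
alternatives = [(2, 30), (1, 10), (0, 0)]

# Random attribute coefficient, fixed cost coefficient (all values hypothetical).
mixed = simulated_mixed_logit_probabilities(
    alternatives, means=[0.8, -0.02], std_devs=[0.5, 0.0])

# With zero standard deviations the model collapses to the conditional logit.
fixed = simulated_mixed_logit_probabilities(
    alternatives, means=[0.8, -0.02], std_devs=[0.0, 0.0])
```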

Standard linear forms are anticipated as the simplest functional form for the empirically estimable component of utility, $V(\cdot)$, from which more flexible functional forms (e.g., quadratic) can be derived and compared.

A main effects and two-way interactions effects utility function is hypothesized, and following common practice a linear-in-parameters model will be sought. A generic format of the indirect utility function to be modeled is:

Model fit will be assessed following standard practice in the literature (e.g., Greene, 2003).

Degree of Accuracy Needed for the Purpose Described in the Justification

The following formula can be used to determine the minimum required sample size, $n$, for analysis:

$$n = \frac{z^2 \, p(1-p)}{c^2}$$

Where $z$ is the z-value required for a specified confidence level (here, 95%), $p$ is the proportion of the population with a characteristic of interest (here, p=0.5 conservatively), and $c$ is the confidence interval (here, 0.05). Therefore,

$$n = \frac{1.96^2 \times 0.5 \times (1-0.5)}{0.05^2} \approx 384$$

This means each stratum requires a minimum sample size of 384 to be able to test for differences in means at the 95% confidence level with a 5% confidence interval, which is met by our sampling plan.
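The per-stratum figure of 384 follows from the standard sample size formula for a proportion, n = z² p(1 − p) / c², with a 95% confidence level, p = 0.5, and c = 0.05. A minimal sketch of that calculation is shown below; the function name is an assumption, and the result is rounded to the nearest whole respondent to match the figure reported here.

```python
from statistics import NormalDist


def minimum_sample_size(confidence_level=0.95, p=0.5, c=0.05):
    """Sample size for estimating a proportion p within +/- c."""
    # Two-sided z-value for the requested confidence level (about 1.96 at 95%).
    z = NormalDist().inv_cdf(1 - (1 - confidence_level) / 2)
    return z ** 2 * p * (1 - p) / c ** 2


n_per_stratum = round(minimum_sample_size())
```

Because p(1 − p) is largest at p = 0.5, this choice yields the most conservative (largest) sample size for a given confidence level and interval.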

In Orme (1998), the following formula is given for determining the minimum sample size for a given design:

$$N \geq \frac{500\,c}{t \cdot a}$$

where,

$N$ is the minimum sample size required

$c$ is the largest product of levels of any two attributes (here 9)

$a$ is the number of alternatives (options) per choice set (here 2)

$t$ is the number of choice sets per respondent (here 4)

In this design, the minimum sample size required for statistical efficiency is 563. This design permits the estimation of all two-way interactions in addition to main effects.

In addition to the above, as a rule, six observations are needed for each attribute in a bundle of attributes to identify statistically significant effects (Bunch and Batsell, 1989; Louviere et al., 2000). Since we have six attributes, we need 36 observations per version. Our survey design includes 10 versions, so we need a minimal sample size of 360. Our total minimum number of respondents of 2,304 (see Table 2) meets both of these criteria. Additionally, we expect a margin of error of approximately 5% for each stratum, which is more than adequate to meet the analytic needs of the benefits analysis.
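Both design-based minimums can be checked with a short calculation. The sketch below assumes the commonly cited form of the Orme rule of thumb, N ≥ 500c / (t × a), where c is the largest product of levels of any two attributes, t is the number of choice sets per respondent, and a is the number of alternatives per choice set; this form reproduces the 563 reported above. The function names and the symbols c, t, and a are assumptions made for illustration.

```python
import math


def orme_minimum_sample_size(c, a, t, constant=500):
    """Rule-of-thumb minimum respondents: constant * c / (t * a), rounded up."""
    return math.ceil(constant * c / (t * a))


def rule_of_six_minimum(n_attributes, n_versions, obs_per_attribute=6):
    """Six observations per attribute, per survey version."""
    return obs_per_attribute * n_attributes * n_versions


orme_n = orme_minimum_sample_size(c=9, a=2, t=4)
six_n = rule_of_six_minimum(n_attributes=6, n_versions=10)

# Total minimum number of respondents from Table 2.
planned_respondents = 2304
meets_both = planned_respondents >= max(orme_n, six_n)
```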

Describe methods to maximize response rates and to deal with issues of non-response. The accuracy and reliability of information collected must be shown to be adequate for intended uses. For collections based on sampling, a special justification must be provided for any collection that will not yield "reliable" data that can be generalized to the universe studied.

Focus Groups

The first step in achieving a high response rate is to develop a survey that is easy for respondents to complete. Researchers met with nine focus group members to determine 1) if they understood the tasks they were asked to complete, 2) their process for responding, 3) if they considered all outcomes or if any were missing, and 4) if enough information was provided for them to confidently respond.

Overall, the responses from the focus group participants were encouraging. Participants generally understood the instructions and made thoughtful, rational choice selections. They understood the outcomes in the experiment as coastal hazards are an important, relevant issue in coastal New Hampshire, and enough information was provided for them to make their selections.

Implementation Techniques

The implementation techniques that will be used are consistent with methods that maximize response rates. Researchers propose a mixed-mode system, employing mail contact and recruitment, following the Dillman Tailored Design Method (Dillman et al., 2014), and online survey administration. To maximize response, potential respondents will be contacted multiple times via postcards and other mailings; this will include a pre-survey notification postcard, a letter of invitation, and follow-up reminders (see Appendix C for postcard and letter text). Final survey administration procedures and design of the survey administration tool will be subject to the guidance and expertise of the vendor hired to provide the data with regard to maximizing response rate, based on their experience conducting similar collections in the region of interest.

Incentives

Incentives are consistent with numerous theories about survey participation (Singer and Ye, 2013), such as the theory of reasoned action (Ajzen and Fishbein, 1980), social exchange theory (Dillman et al., 2014), and leverage-salience theory (Groves et al., 2000). Inclusion of an incentive acts as a sign of good will on the part of the study sponsors and encourages reciprocity of that goodwill by the respondent.

Dillman et al. (2014) recommend including incentives not only to increase response rates but also to decrease nonresponse bias. Specifically, an incentive amount between $1 and $5 is recommended for surveys of most populations.

Church (1993) conducted a meta-analysis of 38 studies that implemented some form of mail survey incentive to increase response rates and found that providing a prepaid monetary incentive with the initial survey mailing increases response rates by 19.1% on average. Lesser et al. (2001) analyzed the impact of financial incentives in mail surveys and found that including a $2 bill increased response rates by 11% to 31%. Gajic et al. (2012) administered a stated-preference survey of a general community population using a mixed-mode approach where community members were invited to participate in a web-based survey using a traditional mailed letter. A prepaid cash incentive of $2 was found to increase response rates by 11.6%.

Given these findings, we believe a small, prepaid incentive will boost response rates by at least 10% and would be the most cost-effective means to increase response rates. A $2 bill incentive was chosen due to considerations for the population being targeted and the funding available for the project. As this increase in response rate will require a smaller sample size, the cost per response is only expected to increase by roughly $1.03.

Non-Response Bias Study

In order to determine if and how respondents and non-respondents differ, a non-response bias study will be conducted in which a short survey (Appendix D) will be administered to a random sample of households that receive the main survey but do not complete and return it. The short questionnaire will ask a few awareness, attitudinal and demographic questions that can be used to statistically examine differences, if any, between respondents and non-respondents. It will take respondents about 5 minutes to complete the non-response bias study survey. The samples for the non-response follow up will be allocated proportionately to the number of the original mailings in the geographic division (strata). The return envelope will be imprinted with a stamp requesting the recipient to “Please return within 2 weeks.” Table 3 illustrates the target sample size of the non-response survey across survey regions.

Response rates for the pre-test non-response bias study ranged from 2% to 7% depending on the region, with an overall response rate of 4%. However, the same timing issues that apply to the full survey also apply to the non-response follow-up survey. Due to this low response rate, all sampled households that do not complete the survey will be sent the non-response follow-up survey for the full implementation.

Table 3: Pretest sample, final collection estimated completes needed, and final collection adjusted sample size by strata for non-response bias study

| Strata | Sub-strata | Pretest Completes | Final Collection Expected Completes |
| --- | --- | --- | --- |
| Great Bay | -- | 4 | 46 |
| Hampton-Seabrook | -- | 1 | 46 |
| Piscataqua | -- | 3 | 46 |
| other Coastal NH | -- | 3 | 46 |
| coastal NH counties | Rockingham | 1 | 66 |
| | Strafford | 2 | 26 |
| TOTAL | | 14 | 276 |
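The expected completes in Table 3 are consistent with allocating the total of 276 across strata in proportion to the original mailings in Table 2. The sketch below shows one way to carry out such an allocation. The largest-remainder rounding rule and the function name are assumptions made for illustration; the document does not state which rounding rule was used.

```python
def proportional_allocation(total, sizes):
    """Allocate `total` units proportionally to `sizes` (largest-remainder rounding)."""
    grand_total = sum(sizes.values())
    allocation = {}
    remainders = {}
    for name, size in sizes.items():
        # Integer arithmetic keeps the quotas exact.
        allocation[name], remainders[name] = divmod(total * size, grand_total)
    leftover = total - sum(allocation.values())
    # Hand out any units lost to rounding, largest fractional part first.
    for name in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        allocation[name] += 1
    return allocation


# Original mailings per stratum: column (f) of Table 2.
mailings = {
    "Great Bay": 1707,
    "Hampton-Seabrook": 1707,
    "Piscataqua": 1707,
    "other Coastal NH": 1707,
    "Rockingham": 2454,
    "Strafford": 960,
}

expected_completes = proportional_allocation(276, mailings)
```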

A subset of the questions from the main questionnaire was selected for the non-response bias study survey:

Questions 1 and 2 are two questions from the main survey that may influence an individual’s response probability. For example, those who do not live in coastal New Hampshire or those who do not believe coastal storms, flooding, or shoreline erosion are major concerns may be less likely to respond.

The items in question 3 ask about attitudes toward managing estuarine and coastal lands in Coastal New Hampshire, costs to one’s household, and government regulations. Comparing responses to these questions across the main survey study and the non-response bias study will allow researchers to assess whether non-respondents did not complete the main survey for reasons related to the survey topic. In contrast, the last item in question 3 inquires about respondents’ ability or propensity to take surveys in general; comparing responses to this item will help researchers assess whether non-response was related to factors that are likely uncorrelated with the experiment.

What is your sex?

In what year were you born?

Do you own or rent property on or near a body of water, such as a river, stream, wetland, pond, or ocean?

Are you a seasonal or year-round resident of the Seacoast region of New Hampshire?

Are you Hispanic or Latino?

What is your race? (select all that apply)

What is the highest level of education you have completed?

What was your annual household income in 2020?

How many people, including yourself, live in your household?

How many of these people are at least 18 years old? __________

By including demographic questions in both the survey and non-response follow-up survey, statistical comparisons of household characteristics can be made across the samples of responding and non-responding households. These data can also be compared to household characteristics from the population, which are available from the 2010 Census.

Two-sided statistical tests will be used to compare responses across the sample of respondents to the main survey and those who completed the non-response questionnaire. There are two types of biases that can arise from non-respondents: nonresponse and selection bias (Whitehead, 2006). The statistical comparisons above will allow for the assessment of the presence of nonresponse bias, which is when respondents and non-respondents differ for spurious reasons. If found, a weighting procedure, as discussed in Section B.1.ii above, can be applied. An inherent assumption in this weighting approach is that, within weight classes, respondents and non-respondents are similar. This assumption may not hold in the presence of selection bias, which is when respondents and non-respondents differ due to unobserved influences associated with the survey topic itself, and perhaps correlated with policy outcome preferences. Researchers will assess the potential for selection bias by comparing responses to the familiarity and attitude questions discussed above. If the results of such comparisons suggest a potential selection bias, the implications towards policy outcome preferences will be examined and discussed.
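One common two-sided comparison for a yes/no item is the pooled two-proportion z-test, sketched below. This is an illustrative choice rather than the specific test the researchers will use, and all counts in the example are hypothetical. The function assumes the pooled proportion is strictly between 0 and 1.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided pooled z-test for a difference between two proportions."""
    p1 = x1 / n1
    p2 = x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: respondents vs. non-response follow-up completes
# answering "yes" to an item asked in both questionnaires.
z_stat, p_value = two_proportion_z_test(x1=300, n1=384, x2=100, n2=276)
differs_at_5_percent = p_value < 0.05
```

A small p-value on a demographic item would point toward the weighting adjustments discussed above, while differences on the attitude items would bear on the assessment of selection bias.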

Describe any tests of procedures or methods to be undertaken. Testing is encouraged as an effective means of refining collections of information to minimize burden and improve utility. Tests must be approved if they call for answers to identical questions from 10 or more respondents. A proposed test or set of tests may be submitted for approval separately or in combination with the main collection of information.

See response to Part B Question 3 above.

Provide the name and telephone number of individuals consulted on statistical aspects of the design and the name of the agency unit, contractor(s), grantee(s), or other person(s) who will actually collect and/or analyze the information for the agency.

Consultation on the statistical aspects of the study design, including sampling design, survey length, and problematic survey items, was provided by Dr. Robert Johnston (508-751-4619).

Researchers with NOAA’s National Centers for Coastal Ocean Science lead this project. Project Principal Investigators are:

Sarah Gonyo, PhD (Lead)

Economist

NOAA National Ocean Service

National Centers for Coastal Ocean Science

1305 East West Hwy

Building SSMC4, Rm 9320

Silver Spring, MD 20910

Ph: 240-533-0382

Email: sarah.gonyo@noaa.gov

Data will be collected and purchased from the University of New Hampshire Survey Center. Data analysis will be conducted by the project principal investigators along with the following research team member:

Amy Freitag, PhD

Social Science Analyst

NOAA National Ocean Service

National Centers for Coastal Ocean Science

Cooperative Oxford Laboratory

904 S Morris St,

Oxford, MD 21654

CSS, Inc.

Ph: 443-258-6066

Email: amy.freitag@noaa.gov

REFERENCES

Adamowicz, W.L. (1994). Habit Formation and Variety Seeking in a Discrete Choice Model of Recreation Demand. Journal of Agricultural and Resource Economics, 19(1): 19–31.

Adamowicz, W.L., Boxall, P., Williams, M., and Louviere, J. (1998). Stated preference approaches for measuring passive use values: Choice experiments and contingent valuation. American Journal of Agricultural Economics, 80(1), 64-75.

Altman, I., & Low, S. M. (1992). Place attachment. New York: Plenum Press.

Atzmüller, C. and Steiner, P.M. (2010). Experimental vignette studies in survey research. Methodology, 6(3):128-138.

Auspurg, K. and Hinz, T. (2015). Factorial Survey Experiments. Los Angeles: Sage.

Barley-Greenfield, S. and Riley, C. (2017). Buffer Options for the Bay: Synthesis of Relevant Policy Options.

Bateman, I. J., Langford, I. H., Turner, R. K., Willis, K. G., and Garrod, G. D. (1995). Elicitation and truncation effects in contingent valuation studies. Ecological Economics, 12(2), 161-17.

Bauer, D.M. and Johnston, R.J. (2017). Buffer Options for the Bay: Economic Valuation of Water Quality Ecosystem Services in the New Hampshire’s Great Bay Watershed.

Bech, M., Kjaer, T. and Lauridsen, J. (2011). Does the number of choice sets matter? Results from a web survey applying a discrete choice experiment. Health Economics, 20(3), 273-286.

Bennett, J., and Blamey, R. (2001). The choice modelling approach to environmental valuation. Northampton, MA: Edward Elgar.

Boyle, K.J., Welsh, M.P., and Bishop, R.C. (1993). The Role of Question Order and Respondent Experience in Contingent-Valuation Studies. Journal of Environmental Economics and Management, 25(1): S80–S99.

Bricker, K.S. and Kerstetter, D.L. (2002). An interpretation of special place meanings whitewater recreationists attach to the South Fork of the American River. Tourism Geographies: An International Journal of Tourism Space, Place, and Environment, 4(4): 396-425.

Cameron, T.A., and Englin, J. (1997). Respondent Experience and Contingent Valuation of Environmental Goods. Journal of Environmental Economics and Management, 33 (3): 296–313.

Champ, P.A. and Bishop, R.C. (2001). Donation payment mechanism and contingent valuation: An empirical study of hypothetical bias. Environmental and Resource Economics, 19(4), 383-402.

Church, A.H. (1993). Estimating the effect of incentives on mail survey response rates: A meta-analysis. Public Opinion Quarterly, 57(1): 62-80.

Czajkowski, M., Hanley, N., and LaRiviere, J. (2014). The effect of experience on preferences: Theory and empirics for environmental goods. American Journal of Agricultural Economics, 97(1): 333-351.

Davenport, M.A. and Anderson, D. H. (2005). Getting from sense of place to place based management: An interpretive investigation of place meanings and perceptions on landscape change. Society & Natural Resources, 18(7), 625–641.

Dillman, D. A., Smyth, J. D., and Christian, L. M. (2014). Internet, mail, and mixed-mode surveys: The tailored design method. Hoboken, NJ: Wiley.

Dülmer, H. (2007). Experimental plans in factorial surveys: Random or quota design? Sociological Methods & Research, 35(3): 382-409.

Edwards, P.E.T., Parsons, G.R. and Myers, K.H. (2011). The economic value of viewing migratory shorebirds on the Delaware Bay: An application of the single site travel cost model using on-site data. Human Dimensions of Wildlife, 16(6):435-444.

ERG. (2016). Hurricane Sandy and the value of trade-offs in coastal restoration and protection. Prepared for NOAA Office for Coastal Management.

Flanagin, A.J. and Metzger, M.J. (2000). Perceptions of Internet Information Credibility. Journalism and Mass Communication Quarterly, 77(3): 515-540.

Fujii, S. and Gärling, T. (2003). Application of attitude theory for improved predictive accuracy of stated preference methods in travel demand analysis. Transportation Research Part A: Policy and Practice, 37(4), 389-402.

Gajic, A., Cameron, D., and Hurley, J. (2012). The cost-effectiveness of cash versus lottery incentives for a web-based, stated-preference community survey. European Journal of Health Economics, 13(6): 789-799.

Gilbert, A., Glass, R., and More, T. (1992). Valuation of Eastern Wilderness: Extramarket Measures of Public Support. In Payne, C., Bowker, J.M., and Reed, P.C. (Eds.), The Economic Value of Wilderness: Proceedings of the Conference, Jackson, WY, U.S. Forest Service, General Technical Report SE-78 (pp.57-70a).

Greene, W. H. (2018). Econometric analysis. New York, NY: Pearson.

Groves, R.M., Singer, E., and Corning, A. (2000). Leverage-Saliency Theory of Survey Participation: Description and an Illustration. Public Opinion Quarterly, 64(3): 299-308.

Haefele, M., Randall A.K., and Holmes, T. (1992). Estimating the Total Value of Forest Quality in High-Elevation Spruce-Fir Forests. In Payne, C., Bowker, J.M., and Reed, P.C. (Eds.), The Economic Value of Wilderness: Proceedings of the Conference, Jackson, WY, U.S. Forest Service, General Technical Report SE-78 (pp.91-96).

Hanemann, W.M. (1984). Welfare evaluations in contingent valuation experiments with discrete responses. American Journal of Agricultural Economics, 66(3), 332-41.

Hanley, N., Kriström, B., and Shogren, J.F. (2009). Coherent Arbitrariness: On Value Uncertainty for Environmental Goods. Land Economics, 85(1): 41–50.

Hole, A. (2015). "DCREATE: Stata module to create efficient designs for discrete choice experiments," Statistical Software Components S458059, Boston College Department of Economics, revised 25 Aug 2017.

Horrigan, J. (2017). “How People Approach Facts and Information.” Pew Research Center.

Jasso, G. (2006). Factorial survey methods for studying beliefs and judgements. Sociological Methods and Research, 34(3): 334-423.

Johannesson, M., Blomquist, G., Blumenschein, K., Johansson, P., Liljas, B., and O’Connor, R. (1999). Calibrating hypothetical willingness to pay responses. Journal of Risk and Uncertainty, 18(1), 21-32.

Johnson, T.J. and Kaye, B.K. (1998). Cruising is believing?: Comparing internet and traditional sources on media credibility measures. Journalism and Mass Communication Quarterly, 75(2): 325-340.

Johnston, R.J., Boyle, K.J., Adamowicz, W.L., Bennett, J., Brouwer, R., Cameron, T.A., Hanemann, W.M., Hanley, N., Ryan, M., Scarpa, R., Tourangeau, R., and Vossler, C.A. (2017). Contemporary Guidance for Stated Preference Studies. Journal of the Association of Environmental and Resource Economists, 4(2): 319-405.

Johnston, R.J., Feurt, C. and Holland, B. (2015). Ecosystem Services and Riparian Land Management in the Merriland, Branch Brook and Little River Watershed: Quantifying Values and Tradeoffs. George Perkins Marsh Institute, Clark University, Worcester, MA and the Wells National Estuarine Research Reserve, Wells, ME.

Johnston, R.J., Grigalunas, T.A., Opaluch, J.J., Mazzotta, M. and Diamantedes, J. (2002a). Valuing Estuarine Resource Services Using Economic and Ecological Models: The Peconic Estuary System Study. Coastal Management, 30(1): 47-65.

Johnston, R.J., Magnusson, G., Mazzotta, M., and Opaluch, J.J. (2002b). Combining Economic and Ecological Indicators to Prioritize Salt Marsh Restoration Actions. American Journal of Agricultural Economics, 84(5), 1362-1370.

Johnston, R.J., Makriyannis, C., and Whelchel, A.W. (2018). Using Ecosystem Service Values to Evaluate Tradeoffs in Coastal Hazard Adaptation. Coastal Management.

Johnston, R.J., Swallow, S.K., Allen, C.W., and Smith, L.A. (2002c). Designing multidimensional environmental programs: Assessing tradeoffs and substitution in watershed management plans. Water Resources Research, 38(7), 1-12.

Johnston, R.J., Swallow, S.K., Tyrrell, T.J., and Bauer, D.M. (2003). Rural amenity values and length of residency. American Journal of Agricultural Economics, 85(4), 1000-1015.

Johnston, R.J., Weaver, T.F., Smith, L.A., and Swallow, S.K. (1995). Contingent valuation focus groups: insights from ethnographic interview techniques. Agricultural and Resource Economics Review, 24(1), 56-69.

Kruger, L.E., and Jakes, P.J. (2003). The importance of place: Advances in science and application. Forest Science, 49(6): 819–821.

Kyle, G.T., Absher, J.D., and Graefe, A.R. (2003). The moderating role of place attachment on the relationship between attitudes toward fees and spending preferences. Leisure Sciences, 25(1):33–50.

Lavrakas, P.J. (2008). Encyclopedia of survey research methods. Thousand Oaks, CA: Sage Publications, Inc.

Layton, D.F. (2000). Random coefficient models for stated preference surveys. Journal of Environmental Economics and Management, 40(1): 21-36.

Lesser, V.M., Dillman, D.A., Carlson, J., Lorenz, F., Mason, R., and Willits, F. (2001). Quantifying the influence of incentives on mail survey response rates and nonresponse bias. Presented at the Annual Meeting of the American Statistical Association, Atlanta, GA.

Lockwood, M., Loomis, J., and DeLacy, T. (1993). A Contingent Valuation Survey and Benefit Cost Analysis of Forest Preservation in East Gippsland, Australia. Journal of Environmental Management, 38(3): 233-243.

Loomis, J. and Ekstrand, E. (1998). Alternative approaches for incorporating respondent uncertainty when estimating willingness to pay: the case of the Mexican spotted owl. Ecological Economics, 27(1): 29-41.

Louviere, J. J., Hensher, D. A., and Swait, J. D. (2000). Stated choice methods: Analysis and application. Cambridge, UK: Cambridge University Press.

Lundhede, T., Olsen, S., Jacobsen, J., and Thorsen, B. (2009). Handling respondent uncertainty in Choice Experiments: Evaluating recoding approaches against explicit modelling of uncertainty. Journal of Choice Modelling, 2(2): 118-147.

Mason, S., Olander, L., and Warnell, K. (2018). Ecosystem Services Conceptual Model Application: NOAA and NERRS Salt Marsh Habitat Restoration. Conceptual Model Series, Working paper.

McConnell, K.E. (1990). Models for referendum data: The structure of discrete choice models for contingent valuation. Journal of Environmental Economics and Management, 18(1), 19-34.

McFadden, D., and Train, K. (2000). Mixed multinomial logit models for discrete responses. Journal of Applied Econometrics, 15(5): 447-470.

Mitchell, R.C., and Carson, R.T. (1989). Using surveys to value public goods: The contingent valuation method. Washington, D.C.: Resources for the Future.

Moore, R. and Graefe, A. (1994). Attachment to recreation settings: The case of rail-trail users. Leisure Sciences, 16(1):17-31.

Moore, C., Guignet, D., Maguire, K., Dockins, C., and Simon, N. (2015). A stated preference study of the Chesapeake Bay and watershed lakes. NCEE Working Paper Series. Working Paper #15-06.

Myers, K.H., Parsons, G.R., and Edwards, P.E.T. (2010). Measuring the recreational use value of migratory shorebirds on the Delaware Bay. Marine Resource Economics, 25(3):247-264.

National Science Foundation. (2012). Chapter 7. Science and Technology: Public Attitudes and Understanding. In Science and Engineering Indicators 2012.

Nelson, P. (1970). Information and Consumer Behavior. Journal of Political Economy, 78 (2): 311–29.

———. (1974). Advertising as Information. Journal of Political Economy, 82(4): 729–54.

Nguyen, T.C. and Robinson, J. (2015). Analysing motives behind willingness to pay for improving early warning services for tropical cyclones in Vietnam. Meteorological Applications, 22: 187-197.

NHCRHC STAP (2014).

Nock, S. L., & Rossi, P. H. (1978). Ascription versus achievement in the attribution of family social status. American Journal of Sociology, 84(3): 565-590.

OCM, NHCP, and ERF. (2016). How people benefit from New Hampshire’s Great Bay Estuary.

Olsen, S., Lundhede, T. H., Jacobsen, J. B., and Thorsen, B. (2011). Tough and Easy Choices: Testing the Influence of Utility Difference on Stated Certainty-in-Choice in Choice Experiments. Environmental and Resource Economics, 49(4):491–510.

Opaluch, J.J., Swallow, S.K., Weaver, T., Wessells, C., and Wichelns, D. (1993). Evaluating impacts from noxious facilities: Including public preferences in current siting mechanisms. Journal of Environmental Economics and Management, 24(1): 41-59.

Orme, B. (1998). Sample size issues for conjoint analysis studies. Sequim: Sawtooth Software Technical Paper.

Poe, G.L., Welsh, M.P., and Champ, P.A. (1997). Measuring the difference in mean willingness to pay when dichotomous choice contingent valuation responses are not independent. Land Economics, 73(2): 255-267.

Raymond, C.M., Brown, G., and Weber, D. (2010). The Measurement of Place Attachment: Personal, Community, and Environmental Connections. Journal of Environmental Psychology, 30(4): 422-434.

Rossi, P. H. (1979). Vignette analysis: Uncovering the normative structure of complex judgments. In R. K. Merton, J. S. Coleman & P. H. Rossi (Eds.), Qualitative and quantitative social research. Papers in honor of Paul F. Lazarsfeld (pp. 176-186). New York: Free Press.

Rossi, P. H., & Anderson, A. B. (1982). The factorial survey approach: An introduction. In P. H. Rossi & S. L. Nock (Eds.), Measuring social judgments: The factorial survey approach. Beverly Hills: Sage Publications.

Rossi, P. H., Sampson, W. A., Bose, C. E., Jasso, G., & Passel, J. (1974). Measuring household social standing. Social Science Research, 3(3): 169-190.

Samnaliev, M., Stevens, T. H., and More, T. (2006). A comparison of alternative certainty calibration techniques in contingent valuation. Ecological Economics, 57(3): 507-519.

Sauer, C., Auspurg, K., Hinz, T., & Liebig, S. (2011). The application of factorial survey in general population surveys: The effects of respondent age and education on response times and response consistency. Survey Research Methods, 5(3): 89-102.

Singer, E. and Ye, C. (2013). The Use and Effects of Incentives in Surveys. The Annals of the American Academy of Political and Social Science, 645: 112-141.

Smith, J.W., Davenport, M.A., Anderson, D.H., and Leahy, J.E. (2011). Place meanings and desired management outcomes. Landscape and Urban Planning, 101(4): 359-370.

Smith, J.W., Anderson, D.H., Davenport, M.A., and Leahy, J.E. (2013). Community benefits from managed resource areas: An analysis of construct validity. Journal of Leisure Research, 45(2): 192-213.

Steiner, P. M., & Atzmüller, C. (2006). Experimental vignette designs in factorial surveys. KZfSS Cologne Journal of Sociology and Social Psychology, 58(1), 117-146.

Train, K. (1998). Recreation Demand Models with Taste Differences Over People. Land Economics, 74(2), 230-239.

US Census Bureau. (2010). Final report of mail back participation rate by county for 2010 Decennial Census, U.S. Census Bureau.

US Census Bureau/American FactFinder. (2015a). "DP04: Selected Housing Characteristics." 2010-2015 American Community Survey. US Census Bureau's American Community Survey Office.

US Census Bureau/American FactFinder. (2015b). "DP05: ACS Demographics and Housing Estimates." 2010-2015 American Community Survey. US Census Bureau's American Community Survey Office.

US Census Bureau. (2016). “S2802: Types of Internet Subscriptions by Selected Characteristics.” American Community Survey 1-Year Estimates. US Census Bureau's American Community Survey Office.

US Census Bureau. (2018). Response Outreach Area Mapper (ROAM). Available online: https://www.census.gov/roam

Vogt, C. A. and Williams, D. R. (1999). Support for wilderness recreation fees: The influence of fee purpose and day versus overnight use. Journal of Park and Recreation Administration, 17(3): 85–99.

Whitehead, J.C. (2006). A practitioner’s primer on the contingent valuation method. In: Handbook on Contingent Valuation (pp 137-162). Northampton MA: Edward Elgar.

Whitehead, J.C., Blomquist, G.C., Hoban, T.J., and Clifford, W.B. (1995). Assessing the Validity and Reliability of Contingent Values: A Comparison of On-Site Users, Off-Site Users, and Non-users. Journal of Environmental Economics and Management, 29 (2): 238–51.

Williams, D., Patterson, M., Roggenbuck, J. and Watson, A. (1992). Beyond the commodity metaphor: Examining emotional and symbolic attachment to place. Leisure Sciences, 14(1):29-46.

| Author | Adrienne Thomas |

| File Created | 2021-07-07 |