30 Day_CMS-10694_Revised Supp Statement A_ CLEAN v2

Testing of Web Survey Design and Administration for CMS Experience of Care Surveys (CMS-10694)

OMB: 0938-1370

Generic Supporting Statement A

Testing of Inclusion of the Web-mode Options in CMS Experience of Care Surveys

(CMS-10694; OMB 0938-New)

Contact Information: Elizabeth H. Goldstein, Ph.D.

Director, Division of Consumer Assessment and Plan Performance

Center for Medicare

Centers for Medicare & Medicaid Services

7500 Security Boulevard

Baltimore, MD 21244

410-786-6665

Table of Contents

Background

A. Justification

A1. Circumstances Making the Collection of Information Necessary

A2. Purpose and Use of Information Collection

A3. Use of Improved Information Technology and Burden Reduction

A4. Efforts to Identify Duplication and Use of Similar Information

A5. Impact on Small Businesses and Other Small Entities

A6. Consequences of Collecting the Information Less Frequently

A7. Special Circumstances Relating to Guidelines of 5 CFR 1320.5

A8. Comments in Response to the Federal Register Notice and Efforts to Consult Outside Agencies

A9. Explanation of Any Payment or Gift to Respondents

A10. Assurances of Confidentiality Provided to Respondents

A11. Justification for Sensitive Questions

A12. Estimates of Burden (Time and Costs)

A14. Costs to the Federal Government

A15. Explanation for Program Changes or Adjustments

A16. Plans for Tabulation and Publication and Project Time Schedule

A17. Reason(s) Display of OMB Expiration Date is Inappropriate

A18. Exceptions to Certification for Paperwork Reduction Act Submissions

BACKGROUND

Data from CMS Experience of Care Surveys are publicly reported, and many survey results are linked to payment to health and drug plans and to providers and facilities in the effort to improve the quality of care. As such, these survey data come under great scrutiny by the public and the regulated community. The design, testing, and implementation of these surveys are held to the strictest statistical survey methodologies and standards. This generic clearance request is to support methodological research designed to improve the quality and reduce the burden of the suite of CMS Experience of Care Surveys. It encompasses an array of research activities to support decisions about whether and how to add web administration protocols to a series of surveys conducted by the Centers for Medicare & Medicaid Services (CMS).

The National Quality Strategy developed by the U.S. Department of Health and Human Services (HHS) under the Affordable Care Act established three aims focusing on better care, better health, and lower costs of care. These aims are supported by six priorities: making care safer by reducing harm caused by the delivery of care; ensuring that each person and family are engaged as partners in their care; promoting effective communication and coordination of care; promoting the most effective prevention and treatment practices for the leading causes of mortality, starting with cardiovascular disease; working with communities to promote wide use of best practices to enable healthy living; and making quality care more affordable for individuals, families, employers, and governments by developing and spreading new health care delivery models.

CMS surveys, including CAHPS, measure various aspects of patient experience with care. Results can be used by providers, health and drug plans, and facilities as a tool for quality improvement, and they empower patients to make informed decisions about their care.

JUSTIFICATION

A1. Circumstances Making the Collection of Information Necessary

The regulated community has expressed interest in using web-based approaches to collect data in order to reduce the burden of data collection and to facilitate earlier access to the resulting data. Adding a web mode of data collection in addition to phone administration and self-administered mail forms might have the added benefit of increasing response rates and reducing non-response bias at the younger end of the age spectrum. Determining whether and, if so, how to offer web-based response options requires rigorous testing to understand the potential impact on the quality of the data, including its impact on time series. Activities under this generic are designed to: a) gauge respondent comprehension of questions and explore the impact of alternative formats, b) understand the potential impact of adding alternative modes of survey administration on response rates and nonresponse bias, and c) better understand technology barriers to adding web-response options.

This request seeks burden hours to allow CMS and its contractors to conduct cognitive in-depth interviews, focus groups, pilot tests, and usability studies to support a variety of methodological studies around exploring web alternatives for collection of survey data for programs such as the:

Emergency Department Experience of Care (EDPEC),

Fee-for-Service (FFS) Consumer Assessment of Healthcare Providers and Systems (CAHPS),

Hospital CAHPS (HCAHPS),

Medicare Advantage and Prescription Drug (MA & PDP) CAHPS,

Home Health (HH) CAHPS,

Hospice CAHPS,

In-Center Hemodialysis (ICH) CAHPS,

Health Outcomes Survey (HOS), and

Medicare Advantage and Part D Plan Disenrollment Reasons surveys.

Significantly, each of the surveys under consideration requires assessment of a unique setting or organization (e.g., hospitals, emergency rooms, or health plans).

The research activities under this generic umbrella will target each program separately although knowledge gained in one area may well be applicable to others. A generic is a useful tool for PRA compliance in the context of improving CMS Experience of Care Surveys because it allows multiple data collections for similar purposes, using similar methods. This effort will move CMS towards more innovative approaches for data collection with the hope of reducing burden of data collection while improving response rates.

Expansion of existing data collection modes to include a web component requires careful consideration and methodical testing to ensure resulting population estimates are reliable. In addition to the extensively-documented disparities in access to and usage of the internet (and hence inclusion of web-modes), patient and/or beneficiary surveys like the ones administered by CMS bring with them an additional layer of challenges that require attention prior to proceeding with web data collection efforts. Such challenges include required minimum-levels of computer literacy and internet access on the part of respondents, the need for mobile-optimized survey instrumentation, and the ability of providers, plans, or facilities to accurately capture email addresses.

Data collection modes must be inclusive of all segments of the population that use a given healthcare service. Whereas including web options may increase response from some populations, more traditional paper and phone administered options will continue to be important for other subpopulations. For instance, it is well-documented that internet usage is lower among those who are older, less educated, poorer, Hispanic, or Black1 and within certain geographic regions of the United States2, the very segments of the population that account for some of the highest usage rates of CMS services. However, as younger people who are more used to the Internet age into the Medicare program and technological dispersion increases, the percentage of the population that can complete web surveys will increase. At the same time, the number of adults who are older, less educated, poorer, or from racial/ethnic minorities and who prefer to use the internet via smartphone applications or publicly available computers will increase.

In fact, broader CMS-wide efforts are underway to “meet the growing expectations and needs of tech savvy Medicare beneficiaries” in light of the Agency’s recognition that technology plays an “increasing role […] in the lives of people with Medicare.”3 In 2017, the CMS Medicare Current Beneficiary Survey showed 75% of beneficiaries ages 65 to 74 and 48.8% of beneficiaries ages 75 and older reported they use the Internet to get information. Exploring and evaluating the use of web mode among the populations served and surveyed by CMS fits squarely within this larger push to integrate technology into its service provision.

Among survey takers who are older, less educated, poorer, or from racial/ethnic minority backgrounds, being responsive to this Agency-wide effort requires, as a first step, an assessment of whether these groups’ levels of internet access and degree of web literacy are conducive to completing surveys. More broadly, for all demographic groups who complete the suite of health care surveys, identifying factors that facilitate optimization of web mode options for use on a smartphone is critical to ensure no undue burden is placed on respondents, such as from data usage charges, technical difficulties, or poor readability. In short, the testing and research program proposed herein seeks to ensure that surveys remain state-of-the-science and -technology and that they are being administered in the “formats that our beneficiaries prefer.”4

The results from prior mode experiments indicate that any future inclusion of web into a mixed mode protocol would warrant exploration both of patterns of survey response and patient characteristics of individuals completing by web versus traditional modes.

CMS requests generic clearance for a mixture of qualitative and quantitative research activities (including formative and developmental research studies as well as methodological tests) to determine if mixed modes that include the web:

Reduce survey administration or participant completion burden;

Improve response rates;

Increase representativeness of the sample by increasing the responses from younger patients;

Reduce data collection timeframes;

Ensure the security of data collected via web-based surveys;

Leverage the increasing role of communication technology in health care; and

Support the CMS “Digital Seniors” initiative to ensure patients receive documents and information in a preferred format.

This clearance seeks approval for multiple types of potential research activities: 1) cognitive one-on-one interviewing, 2) focus groups, 3) usability studies and interactive consumer testing of web prototypes, 4) pilot testing, 5) respondent debriefing, and 6) other methodological experiments. CMS will submit individual collections under this generic clearance to OMB, and will provide OMB with a copy of questionnaires, protocols, and analytic research plans in advance of any testing activity.

Individual submissions will provide a mini supporting statement that includes the following:

Description of the target survey: who administers the survey, modes of administration currently used, respondent population for that survey, and how the survey results are used.

Description of survey response to date:

The response rates, by mode, for each of the last ten years (or for as many years as available).

Nonresponse bias analysis - Responder (vs. nonresponder) characteristics compared to the underlying population of people served at the given type of healthcare facility.

Assessment of the questions on the survey – when was the last time they were tested? Is there anything that could be done to improve the question formatting or wording to optimize current modes of administration before testing web-modes? Are there questions that should be revised, deleted, or added? (We wouldn’t want to web mode test questions that do not perform well (have low variability in terms of response history) in the paper and phone modes.)

Goals of the specific research covered in the submitted IC, how it builds on prior research (including prior ICs), and how it will fit into subsequent research plans and/or implementation plans.

Justification for method, sample selection protocol, sample size, and administration location.

Incentives to be used.

Procedures used to ensure that the data collection and analysis would be performed in compliance with OMB, Privacy Act, and Protection of Human Subjects requirements.

A2. Purpose and Use of Information Collection

The purpose and use of collecting this information fall into these categories:

Research on human-computer interfaces/usability testing/cognitive interviews,

Pilot tests to implement research findings in the context of CMS surveys,

Explore operational issues and determine required/standardized components for vendors, and

Weighting and adjustments.

Research on human-computer interfaces/usability testing/cognitive interviews:

One purpose of this research is to conduct focus groups, usability tests, cognitive interviews, and respondent debriefings to obtain information about the processes people use to answer survey questions; in particular, we would aim to identify any potential problems in the way respondents of different types (older, lower education, or rural populations, for example) are answering and/or interpreting questions using the web mode.

Several existing studies have already demonstrated that differences in the formats and/or type of survey questions can affect web-mode responses. Cognitive interviews can help to identify potential misinterpretations based on such formatting differences.

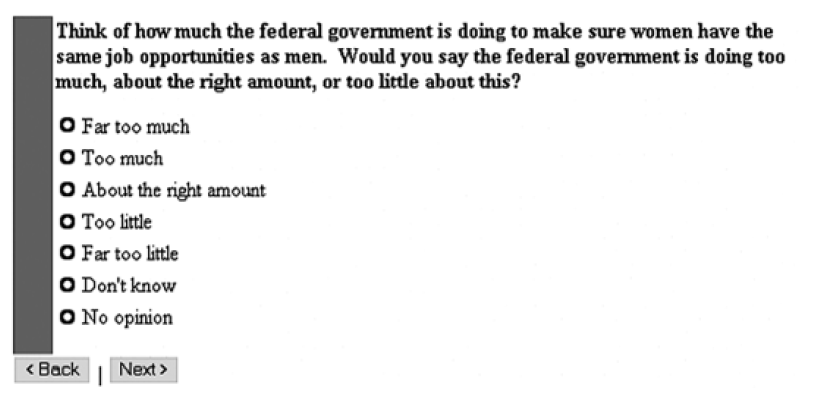

Christian5 found that the order of the survey responses in web surveys can affect responses. They compared results when the most positive answer choice was presented first with results when the most negative answer choice was presented first. These researchers found that respondents were more likely to choose the most negative response when it was presented first than to choose the most positive response when that was presented first. They also tested a linear layout (all response options presented vertically in a single column, beginning with the positive endpoint and ending with the negative endpoint) vs. a nonlinear layout (response options in the same order, but presented in 2 or 3 columns) of the response scale. Respondents in the nonlinear version group provided more negative ratings compared with respondents in the linear version group. Finally, the authors tested the placement of the “don’t know” option, comparing versions where “don’t know” directly followed the substantive responses to a version where “don’t know” was separated from the substantive responses by a space. They found that respondents were no more likely to select “don’t know” when there was a visual separation than when there was not.

Tourangeau6 reported that when response options in web surveys were not ordered in a way respondents expected (e.g., from highly likely to highly unlikely), survey completion times increased. They also found that respondents make inferences about the meaning of survey items based on visual cues such as the spacing of response options, their order, or their grouping. They report that the visual midpoint of a response scale is often used as a benchmark, so that when non-substantive answers (such as “don’t know”) were added at the end of a list of responses, the visual midpoint of the response scale shifted, and responses shifted as well (see Figure below).

“Illogical Order of Response Options” as Tested in Tourangeau, 2004

Bernard7 compared legibility, reading speed and perception of font legibility for online formats among older adults. Although this work was not specific to surveys, it is relevant because they focused on online reading. Among participants (average age = 70), 14-point fonts were more legible, promoted faster reading, and were generally preferred by study participants to 12-point fonts. Comparing serif to sans-serif font styles, serif fonts at the 14-point font size supported faster reading. However, participants generally preferred sans-serif fonts.

In consulting with other Federal agencies, CMS has drawn on the insights and experiences of the National Center for Health Statistics (NCHS) and their experience with general research on the survey response process, questionnaire design, and pretesting methodology. NCHS held a Question Evaluation Methods Workshop (2009) to examine various question evaluation methods as well as to discuss the impact of question design and evaluation on survey data quality. Broad consensus determined that measurement error in the Federal statistical enterprise requires renewed consideration. Federal statistical agencies have a fundamental obligation to produce valid and reliable data, and more attention needs to be placed on question evaluation and documentation. Furthermore, it was established that validation of measures is a particularly complex, methodological problem that requires a mixed-method approach. While quantitative methods are essential for understanding the magnitude and prevalence of error, they remain dependent on the interpretive power of cognitive interviewing. Unlike any other question evaluation method, cognitive interviewing can portray the interpretive processes that ultimately produce survey data. As it is practiced as a qualitative methodology, cognitive interviewing reveals these processes as well as the type of information that is transported through statistics. Consequently, cognitive interviewing is an integral method for ensuring the validity of statistical data, and the documented findings from these studies represent tangible evidence of how the question performs.

Data collection procedures for cognitive interviewing differ from usability testing. While survey interviewers strictly adhere to scripted questionnaires, cognitive interviewers use survey questions as starting points to begin a more detailed discussion of questions themselves: how respondents interpret instructions and key concepts when items are presented in differing formats and displays, their ability to recall the requested information, the appropriateness of response categories, and the reasons why a particular response is selected. Efforts to minimize task difficulty and maximize respondent motivation are likely to pay off by minimizing satisficing and maximizing the accuracy of self-reports8. Because the interviews generate narrative responses rather than statistics, results are analyzed using qualitative methods. This type of in-depth analysis reveals problems in the formats of the surveys and issues with particular survey questions and, as a result, can help to improve the overall quality of surveys.

Cognitive interviews offer detailed depictions of meanings and processes used by respondents to answer questions—processes that ultimately produce the survey data. As such, the method offers insights that can transform the understanding of question validity and response error.

This data collection aims to conduct research on computer-user interface designs for computer- or web-based instruments, often referred to as “usability testing.” This research examines how survey questions, instructions, and supplemental information are presented on electronic or web-based instruments (e.g., smartphones, tablets or desktops/laptops) and investigates how their presentation affects the ability of users to effectively use and interact with these instruments. A plethora of generic information exists in the literature on a number of key design decisions: how to position the survey question on a computer or mobile phone screen; how to display instructions; the maximum amount of information that can be effectively presented on one screen;9,10 how supplemental information such as “help screens” should be accessed;11,12 whether to use different colors for different types of information presented on the screen;13,14 positioning of “advance” and “back” buttons;15,16 use of progress bars;17,18 presenting questions in traditional question format vs. in a grid/matrix format;19,20 and so on. Research has shown that these decisions can have a significant effect on the time required to complete survey questions, the accuracy of question-reading, the accuracy of response selection, and the full exploitation of resources available to help the user complete his or her task. What is missing is an understanding of whether these established best practices effectively meet the needs of population groups targeted by CMS surveys who represent more non-traditional web users, such as older, lower income, and/or less educated adults.

Usability testing has many similarities to questionnaire-based cognitive research, as it focuses on the ability of individuals to understand and process information in order to accurately complete survey data collection. It is also somewhat different, in that it focuses more heavily on matters of formatting and presentation of information than traditional cognitive testing does. Usability testing efforts (including eye tracking studies) could observe respondents’ real-time interactions with survey instruments, quantitatively documenting eye movements that give clues to the cognitive processing involved in interacting with a web-administered instrument while gathering qualitative data on their user experience. Results from such testing could inform, for instance, the most effective way of displaying information about a survey given limited screen real estate; the best placement of navigational cues such as survey section headers; and whether or not to group similar questions in a matrix format and/or on a single page to facilitate answering, all of which serve to reduce respondent burden and are likely to increase survey completion rates.

Pilot Testing

A second major purpose of this data collection is to pilot test the web-based administration protocols. The pilot tests will provide a wealth of information on the feasibility of implementing web-based surveys. We plan to propose research in a variety of different areas as listed below:

Pilots that will help determine the feasibility of implementing mixed mode surveys that include web options in settings that serve different segments of the population;

Pilots that focus on challenges in implementing mixed mode surveys that include web options using multiple approved survey vendors;

Mode experiments to examine whether the mode of data collection systematically affects survey results. This includes built-in split-ballot or A/B experiments (e.g., two different versions of particular questions or modes of administration). Respondents will be randomly assigned to one of several modes (e.g., web or telephone) to assess the impact of mode on how someone responds to the survey and on data quality.

Additionally, the studies will include analyses of response bias and outcome data such as: response rates and response distributions to key items, and paradata (e.g., device type used to complete, respondent movement within a mobile-optimized survey, response time, number of times respondent logged in to survey, time of day survey was completed).

Pilots and mode experiments to assess the impact of adding a web-based mode to existing, ongoing surveys and creating mode adjustments for web modes in this context.
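As an illustration only, the random assignment used in a split-ballot mode experiment, and the per-arm response rates analyzed afterward, could be sketched as follows. The function names, the mode labels, and the round-robin allocation are assumptions made for this sketch; they are not a CMS or vendor specification, and an actual test would follow the sampling protocol in the individual collection request.

```python
import random
from collections import Counter


def assign_modes(sample_ids, modes, seed=0):
    """Randomly assign each sampled person to exactly one mode arm.

    Hypothetical helper: IDs are shuffled with a fixed seed (so the
    assignment is reproducible) and dealt round-robin, which keeps arm
    sizes within one case of each other.
    """
    rng = random.Random(seed)
    ids = list(sample_ids)
    rng.shuffle(ids)
    return {pid: modes[i % len(modes)] for i, pid in enumerate(ids)}


def response_rate_by_arm(assignment, completed_ids):
    """Completed surveys divided by sampled cases, for each arm."""
    sampled = Counter(assignment.values())
    completed = Counter(
        assignment[pid] for pid in completed_ids if pid in assignment
    )
    return {arm: completed[arm] / sampled[arm] for arm in sampled}


# Example: 10 sampled cases split across three illustrative arms.
assignment = assign_modes(range(10), ["web", "mail", "phone"], seed=1)
rates = response_rate_by_arm(assignment, completed_ids=[0, 1, 2, 3])
```

Because assignment is random rather than self-selected, differences in response rates or response distributions between arms can be attributed to mode rather than to respondent preference.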

Exploring Operational Issues

Given the structure of many CMS surveys, another critical question is how to operationalize the introduction of a web mode of administration for these surveys to allow their simultaneous conduct by multiple survey vendors. For example, we need to determine which, if any, web design features should be mandated (such as the number of questions per screen) and whether there are areas where there can be flexibility (such as any colors added to the web screens). Given that the web mode of administration would be new for most CMS surveys, we also need to determine if vendors have operational difficulties meeting the requirements we set forth regarding the timing of sending emails with a link to a survey and the introduction of follow-up mode(s) for non-respondents to the web mode. Vendors will need to be able to communicate using multiple follow-up modes and be able to create a single case-level outcome code. Additional topics concern the ability of vendors to:

Collect email information from facilities/plans/entities;

Generate personalized email invitations to a web-mode option using personalized links and/or user-friendly PINs;

Track undeliverable emails; and

Explore strategies to avoid vendor communications being flagged as spam by common email providers (e.g., Yahoo or Gmail).

These challenges will be explored in this package across a variety of settings and across different segments of the population. For instance, feasibility tests may test the uptake of web-mode options among older adults, while usability testing among both older and younger demographic groups may inform the development of mobile-optimized survey platforms. Cognitive interviews may inform CMS understanding of respondent attitudes toward web completion and whether text contact is considered to be intrusive. The overarching CMS vision for these research efforts is to ensure that we can continue to collect quality data using more innovative, efficient, and analytically powerful modes of data collection.

Weighting and Adjustment

In order to achieve the goal of fair comparisons among the facilities that participate in a survey, it is necessary to adjust for factors that are not directly related to each facility’s performance but do affect how patients answer survey items. To ensure that publicly-reported survey scores allow fair and accurate comparisons of individual facilities, which is critical when survey results are publicly reported and used as the basis for performance payments, CMS conducts mode experiments to examine whether mode of survey administration, the mix of patients in participating facilities, or survey non-response systematically affect survey results.

If necessary, statistical adjustments for these factors can be developed. First, for each specific survey, if patient responses differ systematically by mode of survey administration, it is necessary to adjust for survey mode. Second, certain patient characteristics that are not under the control of the facility, such as age and education, may be related to the patient’s survey responses. For example, several studies have found that younger and more educated patients provide less positive evaluations of health care. If such systematic differences occur in a specific survey, it is necessary to adjust for such respondent characteristics before comparing facilities’ survey results. Third, there may be systematic differences between patients who are sampled and respond to the survey and patients who are sampled and do not respond to a survey. Adjustments for these systematic differences must also be made.

As an example, to date, the HCAHPS Survey has undergone four mode experiments, the latest in 2016. In one HCAHPS mode experiment, patients randomized to the telephone and active interactive voice response (IVR) modes provided more positive evaluations than patients randomized to mail and mixed (mail with telephone follow-up) modes, with some effects equivalent to more than 30 percentile points in hospital rankings21. HCAHPS adjustments for survey mode are larger than adjustments for patient-mix and thus HCAHPS Survey results are adjusted for mode of survey administration. HCAHPS Survey results are adjusted for the following patient characteristics: education, age, self-rated health status, self-rated mental health, language spoken at home, and service line (i.e., medical, surgical, maternity) by gender.
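To make the idea of a mode adjustment concrete, the sketch below estimates each mode's effect as the difference in mean score from a reference mode in a randomized experiment, then removes that effect from a reported score. This is a deliberately simplified illustration: the function names, the choice of mail as the reference mode, and the sample scores are hypothetical, and the sketch omits the patient-mix covariates and regression modeling that an actual adjustment would involve.

```python
from statistics import mean


def mode_effects(experiment, reference="mail"):
    """Estimate each mode's effect relative to a reference mode.

    `experiment` maps a mode name to the scores observed in that
    randomized arm. Randomization is what allows the difference in
    means to be read as a mode effect rather than a patient effect.
    """
    ref_mean = mean(experiment[reference])
    return {mode: mean(scores) - ref_mean for mode, scores in experiment.items()}


def adjust_score(raw_score, mode, effects):
    """Express a score as if it had been collected in the reference mode."""
    return raw_score - effects[mode]


# Hypothetical experiment data, for illustration only.
experiment = {"mail": [70, 80, 75], "phone": [80, 85, 90]}
effects = mode_effects(experiment)            # phone runs 10 points above mail
adjusted = adjust_score(88, "phone", effects)  # 88 by phone -> 78 on the mail scale
```

Adding a web arm to such an experiment would yield a corresponding web effect, which is the quantity a web mode adjustment would need to estimate before web responses could be pooled with other modes.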

Again, future inclusion of web into a mixed mode protocol warrants careful exploration both of patterns of survey response and patient characteristics of individuals completing by web versus traditional modes.

A3. Use of Improved Information Technology and Burden Reduction

The purpose of this package is to test more innovative methods of survey administration that use the web in order to lower costs, increase response rates, and reduce respondent burden.

Respondent burden may be reduced through aids and cues that can be provided via web (such as rollover or hover definitions of unfamiliar terminology), automatic handling of question logic and skip patterns, reminders and prompts related to missed questions, and the introduction of graphics or video when appropriate. Respondent convenience may be enhanced because the survey is optimized for a range of devices (mobile phone, laptop, tablet, or PC) and may be completed in a variety of settings (at home, while waiting in line, at work, etc.) and at the respondents’ own preferred time and pace. As needed, respondents can stop the survey and resume it at a later time.

Survey administration by web may provide economic efficiencies for survey vendors. While there is a relatively high fixed cost to build and test a web platform, long-term recurring time and costs of survey administration by web have the potential to be lower relative to traditional survey administrative costs. There would be no expense for Computer Assisted Telephone Interview (CATI) staff; no expense for mail clerks, data entry staff, printing, or postage as compared to mail surveys; and minimal per-person administration time and cost. Estimates to date vary widely; one estimate posits a $2 per survey cost for web versus $10 per survey cost for mail22.

The exact cost differential would vary depending on invitation method used (email or mailed invitation letter), number of reminders, vendor overhead costs, and existing vendor infrastructure. Additionally, a survey administered by web is more environmentally friendly than one administered by mail.
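The trade-off between a high fixed platform cost and a lower per-survey cost can be expressed as a break-even volume. The sketch below uses the $2 (web) and $10 (mail) per-survey figures from the estimate cited above as defaults; the function name and the $40,000 fixed cost in the example are hypothetical placeholders, not estimates from this package.

```python
import math


def break_even_surveys(fixed_web_cost, web_cost_per_survey=2.0,
                       mail_cost_per_survey=10.0):
    """Number of completed surveys at which web recoups its fixed cost.

    Defaults reflect the single per-survey estimate cited in the text;
    actual costs vary by invitation method, reminders, and vendor.
    """
    saving = mail_cost_per_survey - web_cost_per_survey
    if saving <= 0:
        raise ValueError("web must cost less per survey than mail to break even")
    return math.ceil(fixed_web_cost / saving)


# Hypothetical fixed cost of $40,000 to build and test a web platform.
volume = break_even_surveys(40_000)  # 40,000 / (10 - 2) = 5,000 surveys
```

Below that volume, the fixed cost dominates and mail remains cheaper; above it, each additional web completion widens the saving.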

A4. Efforts to Identify Duplication and Use of Similar Information

Mixed mode surveys that include web options and other internet-based modes of data collection are being tested and evaluated by a number of Federal agencies [e.g., the American Community Survey (ACS), the National Health and Nutrition Examination Survey (NHANES), and the National Health Interview Survey (NHIS)]. Among all the Federal efforts underway, we did not identify any that collect information regarding patient experiences of care. Further, as mentioned above, the surveys here under consideration, unlike other Federal surveys, are not only publicly reported but many are tied to pay-for-performance remuneration to health care providers and organizations.

Also, unlike other surveys carried out by other Federal agencies, the surveys under consideration are implemented not by CMS directly, but by private vendors. This requires a greater level of up-front direction and harmonization to ensure the data collected remain of highest quality and free from vendor-based bias.

For these reasons, it is essential that the testing efforts around web data collection described be carried out within the specific parameters of CMS data collection efforts. The survey questions and populations targeted for each survey differ. For example, the Hospital CAHPS Survey is for patients discharged after an overnight stay at the hospital and includes patients who are 18 and above, while the average respondent to the Home Health CAHPS Survey is 78 years old. By contrast, the Hospice CAHPS Survey targets caregivers of deceased patients. Failure to carry out this testing for the specific survey and setting may lead to one of two outcomes: web mode being introduced without necessary tailoring, or web being forgone as a data collection mode altogether. Either outcome risks jeopardizing data quality, increasing respondent burden, and introducing inefficiencies to the data collection process compared to current practices. Currently, CMS is testing a web mode of data collection for the OAS CAHPS Survey, testing web only, web with mail follow-up of non-respondents, and web with telephone follow-up of non-respondents. Findings from this survey will help inform future testing efforts. When the OAS CAHPS Survey web test was going through the OMB Paperwork Reduction Act clearance process, OMB expressed interest in CMS doing a generic package for future web tests in other settings. It is our intent to ensure that a web mode of data collection is fully tested prior to implementation in each setting.

The research program described here will add to the extant knowledge base rather than duplicate existing efforts. In addition, every effort will be made to collaborate with staff from other agencies, where relevant, appropriate, and possible, to proactively combat potential duplication with any future related research efforts.

A5. Impact on Small Businesses and Other Small Entities

There will be no impact on small businesses or other small entities.

A6. Consequences of Collecting the Information Less Frequently

The frequency of each response will be set out under each generic information collection request. However, as indicated below under A12, at this time we anticipate one response per person per test.

A7. Special Circumstances Relating to Guidelines of 5 CFR 1320.5

There are no special circumstances.

A8. Comments in Response to the Federal Register Notice and Efforts to Consult Outside Agencies

The 60-day Federal Register notice for this collection was published in the Federal Register on January 31, 2019 (84 FR 731).

Five comments were received during the 60-day public comment period. Most expressed support for our efforts to test the web mode of survey administration. An attachment to this package provides details about the comments and our responses.

The 30-day Federal Register notice for this collection was published in the Federal Register on April 16, 2019 (84 FR).

No comments were received.

A9. Explanation of Any Payment or Gift to Respondents

For any cognitive interviews, focus groups, or usability testing, participants may receive incentives to take part. Payments would be provided for several reasons:

Typically, respondents are recruited for specific characteristics that are related to the subject matter of the survey (e.g., questions may be relevant only to people with certain health conditions). The more specific the subject matter, the more difficult it is to recruit eligible respondents. Incentives help to attract a greater number of potential respondents.

Cognitive interviews require an unusual level of mental effort, as respondents are asked to explain their mental processes as they hear the question, discuss its meaning and any ambiguities, and describe why they answered the questions the way they did.

Participants are usually asked to travel to the laboratory testing site, which involves transportation and parking expenses. (Many respondents incur additional expenses due to leaving their jobs during business hours, making arrangements for child care, etc.).

Respondents for testing activities conducted in the laboratory (i.e., cognitive interviews, usability tests, and focus groups) under this clearance may receive a small incentive. This practice has proven necessary and effective in recruiting subjects to participate in this type of small-scale research, and is also employed by other Federal cognitive laboratories. We anticipate that the incentive for participation in cognitive interviews, usability testing, and focus groups would be $20-$100. The amount will be tailored to the task and level of respondent burden. For instance, when an individual is asked to complete straightforward tasks, such as completing a short survey without having to travel to a facility, a $20 incentive should be sufficient. However, if the participant must travel to a facility, most Federal statistical agencies offer $40 for a one-hour appointment. Federal statistical agencies offer $75 for focus groups of the general public where respondents are all expected to be in a specific location at a specific time. Vendors and health care operators who volunteer for focus groups might be offered more.

Respondents for methods that are generally administered as part of pilot test activities will not receive payment unless there are extenuating circumstances that warrant it.

A10. Assurances of Confidentiality Provided to Respondents

All respondents who participate in research under this clearance will be informed that the information they provide is confidential and that their participation is voluntary. All participants in cognitive research will be required to sign a written notification concerning the voluntary and confidential nature of their participation. This documentation will be package-specific and will be provided to OMB for review/approval with the submission of each subsequent generic information collection request.

Individuals contacted as part of this data collection will be assured of the confidentiality of their replies under 42 U.S.C. 1306, 20 CFR 401 and 422, 5 U.S.C. 552 (Freedom of Information Act), 5 U.S.C. 552a (Privacy Act of 1974), and OMB Circular A-130.

Any data published will exclude information that might lead to the identification of specific individuals (e.g., ID number, claim numbers, and location codes). CMS will take precautionary measures to minimize the risks of unauthorized access to the records and the potential harm to the individual privacy or other personal or property rights of the individual.

All survey staff directly involved in data collection and/or analysis activities are required to sign confidentiality agreements.

A11. Justification for Sensitive Questions

The focus of the testing is to receive feedback on the patient experiences across a wide spectrum of health care providers, plans, and facilities. The questions included in these surveys are not typically considered sensitive areas. However, there is no requirement to answer any question.

A12. Estimates of Burden (Time and Cost)

Table 1 is based on the maximum number of data collections expected on an annual basis. The total estimated respondent burden is calculated below.

Table 1. Estimated Annual Reporting Burden, by Anticipated Data Collection Methods

| Method | Number of Respondents | Frequency of Response | Time per Response (Hours) | Total Hours |
| --- | --- | --- | --- | --- |
| Cognitive Interviews/Usability Testing Sessions/Respondent Debriefing | 150 | 1 | 2.00 | 300 |
| Focus Group Interviews | 50 | 1 | 2.00 | 100 |
| Pilot Testing | 75,000 | 1 | 0.22 | 16,500 |
| Other Experiments | 50 | 1 | 2.00 | 100 |
| TOTAL | 75,250 | 1 | Varies | 17,000 |

Over the course of OMB’s 3-year approval period, we estimate a burden of 225,750 respondents, 225,750 responses, and 51,000 hours.
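The Table 1 totals and the 3-year figures can be reproduced with a short calculation. The following is a minimal Python sketch for checking the arithmetic only; it is not part of the CMS methodology. The names `METHODS`, `APPROVAL_YEARS`, and `burden_hours` are illustrative assumptions, and the input figures are copied from Table 1.

```python
# Illustrative check of the Table 1 burden arithmetic.
# All names are hypothetical; the figures are copied from Table 1.
from decimal import Decimal

# method -> (respondents, responses per respondent, hours per response)
METHODS = {
    "Cognitive Interviews/Usability Testing Sessions/Respondent Debriefing":
        (150, 1, Decimal("2.00")),
    "Focus Group Interviews": (50, 1, Decimal("2.00")),
    "Pilot Testing": (75_000, 1, Decimal("0.22")),
    "Other Experiments": (50, 1, Decimal("2.00")),
}

APPROVAL_YEARS = 3  # length of the OMB approval period stated in the text


def burden_hours(respondents, frequency, hours_per_response):
    """Annual burden hours for one data collection method."""
    return respondents * frequency * hours_per_response


annual_respondents = sum(r for r, _, _ in METHODS.values())
annual_responses = sum(r * f for r, f, _ in METHODS.values())
annual_hours = sum(burden_hours(*row) for row in METHODS.values())

print("Annual respondents:", annual_respondents)
print("Annual hours:", annual_hours)
print("3-year respondents:", annual_respondents * APPROVAL_YEARS)
print("3-year responses:", annual_responses * APPROVAL_YEARS)
print("3-year hours:", annual_hours * APPROVAL_YEARS)
```

Decimal arithmetic is used here so that the 0.22-hour pilot test figure multiplies out exactly rather than picking up binary floating-point rounding.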

The estimated annualized costs are outlined in Table 2. The estimated annualized costs to respondents are based on Bureau of Labor Statistics (BLS) data from October 2018 (“Average hourly and weekly earnings of all employees on private nonfarm payrolls by industry sector, seasonally adjusted,” U.S. Department of Labor, Bureau of Labor Statistics, https://www.bls.gov/news.release/empsit.t19.htm, accessed November 21, 2018). The mean hourly wage for all occupations is $27.30. The employee hourly wage estimates are then adjusted by a factor of 100 percent to account for fringe benefit costs. This is a rough adjustment, both because fringe benefits and overhead costs vary significantly from employer to employer, and because methods of estimating these costs vary widely from study to study. Nonetheless, there is no practical alternative, and we believe that doubling the hourly wage to estimate total cost is a reasonably accurate estimation method.

Table 2. Estimated Annual Costs

| Method | Wages | Fringe Benefits | Total Hours | Total Costs |
| --- | --- | --- | --- | --- |
| Cognitive Interviews/Usability Testing Sessions/Respondent Debriefing | $27.30 | $27.30 | 300 | $16,380 |
| Focus Group Interviews | $27.30 | $27.30 | 100 | $5,460 |
| Pilot Testing | $27.30 | $27.30 | 16,500 | $900,900 |
| Other Experiments | $27.30 | $27.30 | 100 | $5,460 |
| TOTAL | | | 17,000 | $928,200 |
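The cost figures in Table 2 follow from multiplying each method's total hours by the hourly wage after the 100 percent fringe benefit adjustment. The following is a minimal Python sketch of that calculation, offered only as a check on the arithmetic; the names `MEAN_HOURLY_WAGE`, `FRINGE_FACTOR`, and `HOURS_BY_METHOD` are illustrative assumptions, and the inputs are copied from the text and Table 2.

```python
# Illustrative check of the Table 2 cost arithmetic.
# All names are hypothetical; the figures are copied from the text and Table 2.
from decimal import Decimal

MEAN_HOURLY_WAGE = Decimal("27.30")  # BLS mean hourly wage cited in the text
FRINGE_FACTOR = Decimal("1.00")      # 100 percent adjustment for fringe benefits

HOURS_BY_METHOD = {
    "Cognitive Interviews/Usability Testing Sessions/Respondent Debriefing": 300,
    "Focus Group Interviews": 100,
    "Pilot Testing": 16_500,
    "Other Experiments": 100,
}

# Wage plus fringe benefits, i.e. double the hourly wage.
loaded_rate = MEAN_HOURLY_WAGE * (1 + FRINGE_FACTOR)

costs = {method: hours * loaded_rate for method, hours in HOURS_BY_METHOD.items()}
total_hours = sum(HOURS_BY_METHOD.values())
total_cost = sum(costs.values())

for method, cost in costs.items():
    print(f"{method}: ${cost:,.2f}")
print(f"TOTAL: {total_hours:,} hours, ${total_cost:,.2f}")
```

Keeping the fringe adjustment as a separate factor makes it straightforward to see how the totals would change under a different assumption about fringe benefit costs.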

A13. Capital Cost

There are no capital costs associated with this collection.

A14. Costs to the Federal Government

At this time, we cannot anticipate the actual number of participants, length of interview, and/or mode of data collection for the surveys to be conducted under this clearance. Thus, it is impossible to estimate in advance the cost to the Federal Government.

A15. Explanation for Program Changes or Adjustments

This is a new collection, so there are no changes or adjustments.

A16. Plans for Tabulation and Publication and Project Time Schedule

This clearance request is for testing of innovative methods for data collection. The majority of laboratory testing (cognitive interviews, usability testing, and focus groups) will be analyzed qualitatively. The survey methodologists serve as interviewers and use detailed notes and transcriptions from the in-depth cognitive interviews to conduct analyses. Final reports will be written that document how the questionnaire performed using alternative methods of data collection. For pilot test activities, qualitative and quantitative analysis will be performed on samples of observational data from respondents to determine where additional problems occur and how response patterns differ by modes of data collection. Analyses of web paradata, or data about the process by which electronic survey data were collected, will be conducted. Web paradata includes, for example, the device used to complete the survey and which access method was used to complete the survey. Because CMS is using state-of-the-science data collection methods, methodological papers will be written that may include descriptions of response problems, and quantitative analysis of frequency counts of several classes of problems that are uncovered through the cognitive interview and observation techniques.

Publication of Results: CMS may confidentially share provider or plan-level estimates with provider or plan administrators for quality improvement purposes. However, facility-level data from this survey will not be made publicly available to Medicare beneficiaries or the general public.

A17. Reason(s) Display of OMB Expiration Date is Inappropriate

No exemption is requested. CMS will display the OMB expiration date and control number.

A18. Exceptions to Certification for Paperwork Reduction Act Submissions

There are no exceptions to this certification statement.

1 http://www.pewresearch.org/2015/09/22/coverage-error-in-internet-surveys/ Accessed 28-Nov 2018

2 http://www.pewhispanic.org/2011/02/09/iii-home-internet-use/ Accessed 28-Nov 2018

4 Ibid.

5 Christian LM, Parsons NL, Dillman DA. Designing Scalar Questions for Web Surveys. Sociol Method Res 2009;37:393-425.

6 Tourangeau R. Spacing, Position, and Order: Interpretive Heuristics for Visual Features of Survey Questions. Public Opinion Quarterly 2004;68:368-93.

7 Bernard M, Liao C, Mills M. Determining the best online font for older adults. Usability News, 2001.

8 JA Krosnick, Question and Questionnaire Design - Stanford University (2009),

https://web.stanford.edu/dept/communication/faculty/.../2009_handbook_krosnick.pd...

9 Peytchev A, Hill CA. Experiments in Mobile Web Survey Design. Social Science Computer Review 2009;28:319-35

10 Sarraf S, Tukibayeva M. Survey Page Length and Progress Indicators: What Are Their Relationships to Item Nonresponse? New Directions for Institutional Research 2014;2014:83-97.

11 Tourangeau R, Maitland A, Rivero G, Sun H, Williams D, Yan T. Web Surveys by Smartphone and Tablets Effects on Survey Responses. Public Opinion Quarterly 2017;81:896-929.

12 Conrad FG, Couper MP, Tourangeau R, Peytchev A. The Use and Non-Use of Clarification Features in Web Surveys. Journal of Official Statistics 2006;22:245–69.

13 Liu M, Kuriakose N, Cohen J, Cho S. Impact of web survey invitation design on survey participation, respondents, and survey responses. Social Science Computer Review 2016;34:631-44.

14 Mahon-Haft TA, Dillman DA. Does visual appeal matter? effects of web survey aesthetics on survey quality. Survey Research Methods 2010;4:45-59.

15 Baker RP, Couper MP. The impact of screen size, background color, and navigation button placement on response in web surveys. General Online Research Conference. Leipzig, Germany; 2007.

16 Couper MP, Baker R, Mechling J. Placement and Design of Navigation Buttons in Web Surveys. Survey Practice 2011;4.

17 Sarraf S, Tukibayeva M. Survey Page Length and Progress Indicators: What Are Their Relationships to Item Nonresponse? New Directions for Institutional Research 2014;2014:83-97.

18 Zong Z. How to draft mobile friendly web surveys. AAPOR; 2018; Denver, CO.

19 Couper MP, Tourangeau R, Conrad FG, Chan Z. The Design of Grids in Web Surveys. Social Science Computer Review 2013;31:322-45.

20 Tourangeau R, Maitland A, Rivero G, Sun H, Williams D, Yan T. Web Surveys by Smartphone and Tablets Effects on Survey Responses. Public Opinion Quarterly 2017;81:896-929.

21 The Effects of Survey Mode, Patient Mix, and Nonresponse on CAHPS Hospital Survey Scores. M.N. Elliott, A.M. Zaslavsky, E. Goldstein, W. Lehrman, K. Hambarsoomian, M.K. Beckett and L. Giordano. Health Services Research, 44: 501-518. 2009.

22 Bergeson, Steven C., Janiece Gray, Lynn A. Ehrmantraut, Tracy Laibson, and Ron D Hays. 2013. “Comparing Web-based with Mail Survey Administration of the Consumer Assessment of Healthcare Providers and Systems (CAHPS®) Clinician and Group Survey.” Primary Health Care: Open Access, 3, 1000132.
