SUPPORTING STATEMENT PART B (FINAL after NASS comments)-8-8-17

SUPPORTING STATEMENT PART B (FINAL after NASS comments)-8-8-17.docx

Assessment of the Barriers that Constrain the Adequacy of Supplemental Nutrition Assistance Program (SNAP) Allotments

OMB: 0584-0631

SUPPORTING STATEMENT PART B FOR

OMB Control Number 0584-NEW

Assessment of the Barriers That Constrain the Adequacy of SNAP Allotments

Contract Officer Representative: Rosemarie Downer, PhD

Office of Policy Support

USDA, Food and Nutrition Service

3101 Park Center Drive

Alexandria, VA 22302

February 9, 2017

Table of Contents

Chapter Page

B Collections of Information Employing Statistical Methods 1

B.1. Describe (including a numerical estimate) the Potential Respondent Universe and any Sampling or Other Respondent Selection Method to be Used. 1

B.1.1. Respondent Universe 1

B.1.2. Sampling Methods 1

B.1.3. Response Rates and Sample Size 4

B.2. Describe the Procedures for the Collection of Information 6

B.2.1. Statistical Methodology for Stratification and Sample Selection 6

B.2.2. Estimation Procedures 7

B.2.3. Degree of Precision Needed for the Purpose Described in the Justification 9

B.2.4. Unusual Problems Requiring Specialized Sampling Procedures 12

B.2.5. Any use of Periodic (less frequent than annual) Data Collection Cycles to Reduce Burden 12

B.3. Describe Methods to Maximize Response Rates and to Deal with Issues of Non-Response. 12

B.4. Describe any Test of Procedures or Methods to be Undertaken. 14

B.5. Provide the Name and Telephone Number of Individuals Consulted on Statistical Aspects of the Design and the Name of the Agency, Unit, Contractor(s), Grantee(s), or Other Person(s) Who Will Actually Collect and/or Analyze the Information for the Agency. 14

Tables

B-1 Sample size 5

B-2 Expected 95-percent confidence bounds for selected sample sizes and prevalences 10

B-3 Minimum detectable difference* between subgroups for selected sample sizes and prevalences (P) 11

B-4 Power for 5-percent minimum detectable difference for comparison between unequal-sized subgroups, n = 4,800 12

Figure

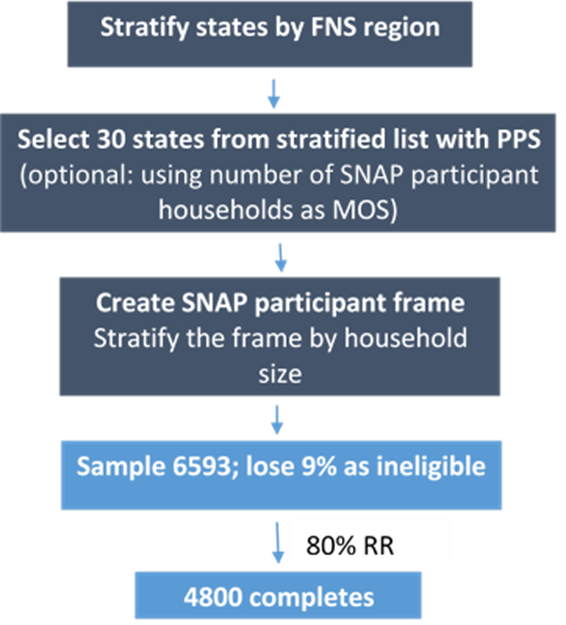

1 Overview of sampling approach 3

PART B. COLLECTIONS OF INFORMATION EMPLOYING STATISTICAL METHODS

B.1. Describe (including a numerical estimate) the Potential Respondent Universe and any Sampling or Other Respondent Selection Method to be Used.

Data on the number of entities (e.g., establishments, State and local government units, households, or persons) in the universe covered by the collection and in the corresponding sample are to be provided in tabular form for the universe as a whole and for each of the strata in the proposed sample. Indicate expected response rates for the collection as a whole. If the collection had been conducted previously, include the actual response rate achieved during the last collection.

B.1.1. Respondent Universe

The universe is defined as individuals and households participating in the SNAP program (that is, receiving SNAP benefits), who have been in the program for at least the previous six months (at time of sampling). We will select a sampling frame from this universe that stratifies participants according to State of participation and household size.

B.1.2. Sampling Methods

This study will implement a two-phase stratified sample design to select a nationally representative probability sample of SNAP participants. In the first phase, we will select a sample of States as primary sampling units (PSUs), and in the second phase, we will select a sample of SNAP participant households from the sample States. Figure 1 provides an overview of the sampling approach. We recommend this approach because the sampling frame data must be obtained from each State which is a resource-intensive process. By limiting the number of States, we reduce the effort needed to build the sampling frame. The consequence of clustering is that we will need a larger sample to achieve the same level of precision but we believe this is a cost-efficient trade-off. Once the States are selected, we will stratify the SNAP participant frame by household size and sort by presence of children and time on SNAP, which will function as implicit strata. Out of 7,283 contacted, we will aim to achieve 4,800 completed surveys.

Select a State Sample. For efficiency we will select a sample of States, which will be a stratified systematic sample of 26 States selected with probabilities proportional to size (PPS), where the number of SNAP participant households of states will be used as the measure of size (MOS). We will stratify the States by FNS region to improve the representativeness of the state sample, and allocate the number of sample states to FNS regions proportionate to state size measures. If a sampled State cannot participate in the study, we will draw a replacement State, thus the total number of sample States will remain as 26. The replacement sample States will be selected from the state frame by using the Keyfitz procedure1, which is a method commonly used for updating the PSU sample. With this procedure, the selection probability for the States will be assigned in such a way that, for the initially selected States, the conditional probability of being retained will equal to unity, while for the States not in the initial sample, the probability of selection will be conditioned on the probability of not being selected into the initial State sample. For data analysis, a State-level weight will be assigned to all sample States, including the replacement sample States, based on their overall probability of being included in the sample.

Figure 1 Overview of sampling approach

Once the State sample is selected, we will speak with each State to review the administrative case record data being requested and the timeframe for the study. We will prepare data use agreements as required. We will limit our data request to variables that are typically maintained in the States’ SNAP enrollment files. This makes it relatively straightforward for States to respond and will increase State’s participation. The data elements collected will include household size, household composition (children/no children, and the number), date benefit started, amount of monthly benefit and contact information. While we initially intended to request the marital status of the head of household in our proposal, we quickly found out that many States do not collect this information. Therefore, marital status will not be among the variables requested.

In-depth Interviews with SNAP Recipients

We will conduct 120 in-person in-depth interviews with English- and Spanish-speaking SNAP recipients to develop a further understanding of the barriers that constrain the adequacy of SNAP allotments in accessing a healthy diet. Working with the Food and Nutrition Service, Westat will identify a purposive sample of 8 to 12 locations to recruit participants for the in-depth interviews. Locations with clusters of survey respondents will be prioritized. Two locations with a large base of Spanish-speaking respondents will also be chosen. A balance of rural and urban locations will also be sought, as well as geographic diversity (locations from multiple geographic regions will be selected). Finally, locations with varying levels of household food security will be selected. Potential in-depth interviewees will be contacted by phone and offered $75 to participate in an interview at their home which will focus on understanding how they meet the needs of their household for nutritious food as SNAP participants. Respondents will be informed that the interview will last for approximately 90 minutes. Agreement to be interviewed will be obtained on the phone to be followed up with written consent in person. We will initially recruit interviewees from among those who have completed surveys. Additional interviewees from the geographic locations selected for the in-depth interviews will be recruited via advertisements in online sources such as Craig’s list. Potential interviewees who respond to advertisements (and who have not completed a survey) will be screened briefly by phone to determine their eligibility to participate in the study, including their geographic location (zip code). The level of food security of potential interviewees will be screened using an appropriate screener. For each scheduled location, we will recruit up to 15 participants who meet the criteria. Appendices J1, J2, K1, K2, P1, P2 present the procedures for recruiting and screening participants and conducting the in-depth interviews.

B.1.3. Response Rates and Sample Size

We will target 4,800 completed questionnaires and assume a response rate of 80 percent. We also expect an eligibility rate of 91 percent as no sampling frame is ever completely up to date. Based on experience in the recent Farmers Market Client Survey (OMB Approval # 0584-0564), we expect 9 percent of the initial sample will come back as ineligible. Therefore, we plan to sample 6,593 (= 4,800/.80 *.91) SNAP participant households.

Table B-1. Sample size

|

TOTAL |

Sample draw |

6,593 |

Attrition (9%) |

593 |

Sample |

6,000 |

Completes (80%) |

4,800 |

It is important to emphasize that the SNAP participants are considered to be a hard to reach population for studies such as this one. Frequently, the data from State agencies have incomplete, missing, or incorrect information on mailing address and telephone numbers. Considering all the information on difficulties of reaching this population, to ensure 4,800 complete interviews, we also plan to select a 50 percent reserve sample (3,297) as part of the sampling process, a standard practice to permit supplementation of the sample in an expedited fashion, if necessary. The reserve sample will be randomly split into 10 equal-sized replicates, and released by replicate if it appears that we will not achieve an 80% response rate with the original release.

B.2. Describe the Procedures for the Collection of Information including:

Statistical methodology for stratification and sample selection,

Estimation procedure,

Degree of accuracy needed for the purpose described in the justification,

Unusual problems requiring specialized sampling procedures, and

Any use of periodic (less frequent than annual) data collection cycles to reduce burden.

B.2.1. Statistical Methodology for Stratification and Sample Selection

Using the data obtained from States, we will develop a sampling frame for selecting the SNAP participants. The frame will be a household-level list of SNAP participants who have been in the program for at least the previous 6 months. We will first stratify the frame by State and create secondary strata by household size categories (1-3 persons vs. 4 or more persons) in each State stratum. Within the explicit strata defined by State and household size, additional implicit stratification2 can be achieved through sorting the list. Such stratification can help reduce sample variation associated with variables of analytic interest or expected to be correlated with survey outcome variables. Sort variables will include household characteristics on the frame, specifically household composition (children/no children) and the time of participation (based on date benefit started).

B.2.2. Estimation Procedures

Sample Weights

The first step will be to calculate the base weight, which is defined to be the reciprocal of the probability of selecting a SNAP household for the survey. Under the stratified two-stage sample design, the base weights of the sample households will include two components - the State-level weight component, and the household-level weight component. At the State level, a State base weight will be calculated that will reflect the selection probability of States, and the State base weight will be adjusted for State non-participation. Then, the household-level base weight will be developed based on State weight, reflecting each SNAP participant household’s probability of selection within a PSU (State).

The next step will be to construct and apply weight adjustments to compensate for differential rates of nonresponse. Dealing with issues of survey nonresponse is a standard part of the weighting effort associated with the estimation and analysis of the survey data. In developing sample weights, weights are adjusted to reduce the potential for bias associated with nonresponse. This generally involves identifying data available for both respondents and nonrespondents obtained from the sampling frame or other sources from which nonresponse adjustment cells can be created. For this study, demographic and geographic characteristics available in the sampling frames and other data sources such as SNAP administrative files could potentially be used for this purpose. An evaluation of these variables will be undertaken to identify those most effective in characterizing the propensity to respond. Approaches for identifying variables to be incorporated into models for nonresponse adjustment include logistic regression and the software package Chi-square Automatic Interaction Detector (CHAID). CHAID is an effective categorical search algorithm for identifying cells that are homogeneous with respect to response propensity and has been used extensively at Westat for this purpose. Nonresponses adjustments will be made within cells defined by characteristics found to be correlated with response propensity and are known for all sampled households. Item nonresponse will be handled through imputation, examining the characteristics of nonresponders and responders on particular items and imputing likely responses. Sensitivity analyses will be performed for all weighting and imputations.

Sampling Error Estimation

When a survey is conducted using a complex sample design, the design must be taken explicitly into account to produce unbiased estimates and standard errors for these estimates. This is accomplished by dividing the complete sample into a number of subsamples known as replicates so that each replicate sample, when properly weighted, will provide appropriate estimates of population characteristics of interest. In general, replicate samples are formed to mirror the original sampling of primary sampling units. In this study, replicate weights using the jackknife methodology will be developed as part of the weighting process to calculate sampling errors of survey estimates and to conduct statistical significance tests of survey findings. A number of popular statistical software for the analysis of complex survey data, including SUDAAN, STATA, SAS (using special survey analysis procedures), R, and WesVar, can be used with replicate weights to take the sample design into account when calculating point estimates, correlation, and regression coefficients and their associated standard errors. A series of jackknife replicate weights will be created and attached to each data record for variance estimation purposes. These replicate weights will then be imported into statistical software to calculate appropriate standard errors for survey-based estimates.

B.2.3. Degree of Precision Needed for the Purpose Described in the Justification

Margin of Error

To determine the sample size, we performed analyses with the goals that the sample size should be adequate (1) to meet the precision requirements of ±5 percent margin of error with a 95 percent level of confidence around a point estimate (proportion) for the full sample, and (2) to satisfy the needs for subgroup analysis with reasonable precision for point estimates and power for detecting difference between groups. As there are many subgroup comparisons of interest and overlap between them (e.g., every head of a household is in a gender, race, age, and education subgroup) it becomes inefficient to treat every subgroup as its own explicit strata as that would result in an unnecessarily large sample. Instead our strategy is to size the sample so that it produces point estimates for subgroups with reasonable precision (+/-8 %) and detect modest differences (6 percentage points) between subgroup prevalence levels.

In sample size estimation, design effect is also a factor to be incorporated into the power and expected precision, if the sample design is different from a simple random sample. The design effect is computed as the ratio of the variance of an estimate obtained from a specified sample design to the variance of the estimate obtained from a simple random sample of the same size. With this sample design, we expect there will be some clustering effect due to States being used as a sampling unit (PSU) in a two-stage design. In addition, there is also an expected design effect resulting from variation in the nonresponse adjustments in the data weighting process. Based on our experience with national sample surveys, we expect the design effect due to State-level clustering and weight variation to be roughly 1.2 for most of the estimates.

Table 2-1 provides estimates of the levels of precision to be expected for different prevalence estimates under the proposed design with a design effect of 1.2, for subgroup sample sizes ranging from 200 to 2,500. As Table B-2 shows even for subgroup samples as small as 200 to 300 households, we would have precision levels under +/- 8 percent margin of error for all prevalence estimates.

Table B-2. Expected 95-percent confidence bounds for selected sample sizes and prevalences

Subgroup sample size* |

P = 20% |

P = 30% |

P = 40% |

P = 50% |

200 |

6.07% |

6.96% |

7.44% |

7.59% |

300 |

4.96% |

5.68% |

6.07% |

6.20% |

400 |

4.29% |

4.92% |

5.26% |

5.37% |

500 |

3.84% |

4.40% |

4.70% |

4.80% |

600 |

3.51% |

4.02% |

4.29% |

4.38% |

700 |

3.25% |

3.72% |

3.98% |

4.06% |

800 |

3.04% |

3.48% |

3.72% |

3.80% |

900 |

2.86% |

3.28% |

3.51% |

3.58% |

1000 |

2.72% |

3.11% |

3.33% |

3.39% |

1200 |

2.48% |

2.84% |

3.04% |

3.10% |

1500 |

2.22% |

2.54% |

2.72% |

2.77% |

2000 |

1.92% |

2.20% |

2.35% |

2.40% |

2500 |

1.72% |

1.97% |

2.10% |

2.15% |

*Assuming design effects (DEFF) = 1.2.

Minimum Detectable Difference

Table B-3 provides estimates of the minimum detectable difference (MDD) between subgroups under the proposed design for subgroup sample sizes ranging from 200 to 2,500. The detectable differences are for a one-sided test with significance level of 0.05 and power of 0.80. For example, comparing samples of 500 respondents per group (e.g., 500 households with female household head vs. 500 households with male household head), the minimum detectable difference between the groups will range from 7.3 percent to 8.6 percent depending on the underlying prevalence being estimated.

Table B-3. Minimum detectable difference* between subgroups for selected sample sizes and prevalences (P)

Subgroup sample size* |

P = 20% |

P = 30% |

P = 40% |

P = 50% |

400 |

8.20% |

9.20% |

9.60% |

9.60% |

500 |

7.30% |

8.20% |

8.50% |

8.60% |

600 |

6.60% |

7.40% |

7.80% |

7.80% |

700 |

6.10% |

6.90% |

7.20% |

7.30% |

800 |

5.70% |

6.40% |

6.70% |

6.80% |

900 |

5.40% |

6.00% |

6.40% |

6.40% |

1000 |

5.10% |

5.70% |

6.00% |

6.10% |

1200 |

4.60% |

5.20% |

5.50% |

5.50% |

1500 |

4.10% |

4.60% |

4.90% |

5.00% |

2000 |

3.60% |

4.00% |

4.30% |

4.30% |

2500 |

3.20% |

3.60% |

3.80% |

3.80% |

* Assuming a one-sided test with a significance level of 0.05, power of 0.80, and DEFF = 1.2.

Based on our precision and MDD analyses, we believe a sample size of 4,800 respondents will exceed our target precision of +/-5 percent at the national level and will also exceed this level for most of the subgroup comparisons. To see the resulting power for subgroup comparison when the subsample sizes are unequal given a total sample size of 4,800, we also performed power analysis for subgroup comparison using selected household characteristics that are important for analysis. As an illustration, Table B-4 shows the results of power analysis with unequal subgroup sample sizes for gender of household head and household size categories with the underlying prevalence ranging from 20 percent to 80 percent. The results indicate that, for example, a two-group test with a 0.050 one-sided significance level will have 91 percent power to detect the difference between households with female household head’s proportion of 0.500 and households with male household head’s proportion of 0.550 for a total of 4,800 survey respondents. Similarly, in our example of comparing household of different sizes using the same test and assumptions on prevalences, we would have a power of 84 percent to detect the difference between households with 3 or fewer people and those with at least 4 people.

Table B-4. Power for 5-percent minimum detectable difference for comparison between unequal-sized subgroups, n = 4,800

Group 1 proportion |

20% |

30% |

40% |

50% |

60% |

70% |

80% |

Power (%) for detecting 5-percent difference between Subgroups |

|||||||

Gender of household head (66% female vs. 34% male)* |

97 |

94 |

91 |

91 |

92 |

95 |

98 |

Household size (77% with 1~3 persons vs. 23% with 4+ persons)* |

93 |

88 |

85 |

84 |

86 |

90 |

96 |

*Distribution of households obtained from USDA, 2013, Measuring the Effect of Supplemental Nutrition Assistance Program (SNAP) Participation on Food Security. Table III.2, p.19.

B.2.4. Unusual Problems Requiring Specialized Sampling Procedures

No specialized sampling procedures are involved.

B.2.5. Any use of Periodic (less frequent than annual) Data Collection Cycles to Reduce Burden

The study design requires a one-time data collection from respondents. All data collection activities will occur within a 4-month period.

B.3. Describe Methods to Maximize Response Rates and to Deal with Issues of Non-Response.

The accuracy and reliability of information collected must be shown to be adequate for intended uses. For collections based on sampling, a special justification must be provided for any collection that will not yield “reliable” data that can be generalized to the universe studied.

By explaining the importance and potential usefulness of the study findings in the introductory letters from FNS, and by implementing a series of follow-up reminders with a final attempt to complete the survey by telephone, we expect to achieve an overall survey response rate of 80%. Specific procedures to maximize response rates include:

A cover letter from USDA/FNS (Appendix A).

A prepaid incentive included with introductory letter and survey (Appendix B).

A promissory incentive discussed in introductory letter (Appendix A).

Two Interactive Voice Response (IVR) calls to respondents who have not completed the survey after two weeks of the first and second survey mailing (Appendices C and E).

A second mail survey sent to non-respondents via Federal Express to underscore the importance of the survey (Appendix D)

Two data collection modes (mail or telephone) for participants’ convenience

Telephone follow-up interview for non-responder (Appendices F.1-F.2)).

Make up to 9 unsuccessful call attempts to a number without reaching someone before considering whether to treat the case as “unable to contact.”

Implement refusal conversion efforts for first-time refusals and use interviewers who are skilled at refusal conversion and will not unduly pressure the respondent (Appendix G).

Provide a toll-free number for respondents to call to verify the study’s legitimacy or to ask other questions about the study.

Implement standardized training for telephone data collectors. The interviewer training will focus on basic skills of telephone interviewing, use of CATI platforms for interviews,and refusal avoidance and conversion.

Use interviewers who have experience interviewing SNAP participants

B.4. Describe any Test of Procedures or Methods to be Undertaken.

Testing is encouraged as an effective means of refining collections of information to minimize burden and improve utility. Tests must be approved if they call for answers to identical questions from 10 or more respondents. A proposed test or set of tests may be submitted for approval separately or in combination with the main collection of information.

The survey instrument has been cognitively tested with 8 SNAP participants for question flow, understandability, ease of completion, and length of administration. Based on the findings, the survey was revised to address concerns regarding the wording of questions or instructions that proved difficult for participants to comprehend. Each cognitive interview took approximately 60 minutes and the participants were given $75 as a token of appreciation.

B.5. Provide the Name and Telephone Number of Individuals Consulted on Statistical Aspects of the Design and the Name of the Agency, Unit, Contractor(s), Grantee(s), or Other Person(s) Who Will Actually Collect and/or Analyze the Information for the Agency.

Name |

Affiliation |

Telephone Number |

|

Maeve Gearing |

Project Director, Westat |

301-212-2168 |

MaeveGearing@westat.com |

Crystal MacAllum |

Senior Study Director, Westat |

301-251-4232 |

CrystalMacAllum@westat.com |

Jocelyn Marrow |

Senior Study Director, Westat |

240-314-5887 |

|

Hongsheng Hao |

Senior Statistician, Westat |

301-738-3540 |

|

Doug Kilburg |

Mathematical Statistician, NASS |

202-720-9189 |

Douglas.Kilburg@nass.usda.gov |

1 Keyfitz, N. (1951), “Sampling with Probabilities Proportional to Size,” Journal of the American Statistical Association, 46, 105-109.

2In contrast with explicit stratification, implicit stratification is a method of achieving the benefits of stratification often used in conjunction with systematic sampling. The sampling frame is sorted with respect to one or more stratification variables but is not explicitly separated into distinct strata.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Sujata Dixit-Joshi |

| File Modified | 0000-00-00 |

| File Created | 2021-01-22 |

© 2026 OMB.report | Privacy Policy