The National Physician Survey of Precision Medicine in Cancer Treatment (NCI)

OMB: 0925-0739

Supporting Statement B for

The National Physician Survey of Precision Medicine in Cancer Treatment (NCI)

Date: May 12, 2016

Janet S. de Moor, PhD, MPH

Health Assessment Research Branch, Healthcare Delivery Research Program

Division of Cancer Control and Population Sciences

National Cancer Institute/National Institutes of Health

9609 Medical Center Drive, Room 3E438

Rockville, MD 20850

Telephone: 240-276-6806

Fax: 240-276-7909

Email: demoorjs@mail.nih.gov

Table of contents

B. COLLECTIONS OF INFORMATION EMPLOYING STATISTICAL METHODS

B.1 Respondent Universe and Sampling Methods

B.2 Procedures for the Collection of Information

B.3 Methods to Maximize Response Rates and Deal with Nonresponse

B.4 Test of Procedures or Methods to be Undertaken

B.5 Individuals Consulted on Statistical Aspects and Individuals Collecting and/or Analyzing Data

Attachments

Attachment A Questionnaire

Attachment B Telephone Screener

Attachment C Survey Cover Letters and Emails

Attachment D Telephone Reminder Script

Attachment E Nonresponse Follow-Back Survey

Attachment F Consultants

Attachment G References

Attachment H OHSRP Exemption

Attachment I Privacy Act Memo

Attachment J Screen Shots from Web Survey

Attachment K Privacy Impact Assessment

Attachment L Thank you letter for a contingent incentive

B. Collection of Information Employing Statistical Methods

B.1 Respondent Universe and Sampling Methods

We propose a nationally representative sample that will yield approximately 1,200 completed surveys with medical oncologists who have treated cancer patients in the past 12 months. An analytic sample size of 1,200 was determined to be the minimum sample required to meet the following analytic goals:

provide a nationally representative sample of medical oncologists, consisting of the following subspecialties: hematologists, hematologists/oncologists, and oncologists

allow for selection of a stratified random sample by subspecialty, metropolitan (metro) area, geographic region, sex, and age

provide an analytic sample size sufficient to detect a 10-15 percentage point difference in proportions of physicians by practice setting (e.g., academic versus non-academic, metro versus non-metro) with 90% power at the 0.05 level of significance in a two-tailed test

meet the Government’s criteria for precision (margin of error not exceeding ± 5% around point estimates)

assume a 50-60% response rate as well as an ineligible rate of 15-17% (McLeod, Klabunde, & Willis, 2013)

This sample will be recruited from the American Medical Association (AMA) Masterfile. As shown in Table B.1-1, the AMA Masterfile identified 13,067 oncologists in the target population (as of March 2015). This count excludes physicians over age 75 and physicians who are deceased, retired, in residency, or not seeing patients.

Table B.1-1. AMA Population Counts by Primary Specialty

| Primary Specialty | AMA Population Count (age < 76) |
| --- | --- |
| Hematology | 1,610 |
| Hematology/Oncology | 7,014 |
| Oncology | 4,443 |
| Total | 13,067 |

The primary analytic objective is to make a national estimate for a binary analytic variable, for example, whether or not oncologists use a specific genomic test. The secondary research objective is to make two-group comparisons, for example, to determine whether there is a statistically significant difference between medical oncologists in large metropolitan areas and those in small metropolitan areas.

For the national estimates, proportional allocation is most efficient. That is, the analytic sample size is proportional to the sampling strata population sizes. To compare differences between two groups (oncologists from large metro areas versus small metro areas), equal allocation is most efficient. That is, the analytic sample size is equal in all the sampling strata. However, to allow for both national estimates and group comparisons, a composite allocation is recommended because it allows for both of these research objectives.

Table B.1-2 shows the composite allocation, which is the mean of the proportional and equal allocations. Remaining stratification variables that will not be used for group comparisons such as region, age, and sex can be selected proportional to size.

Table B.1-2. Composite Allocation by Subspecialty and Metropolitan Area

| Subspecialty | Very Large | Large | Medium | Small | Total Respondents |
| --- | --- | --- | --- | --- | --- |
| Hematology | 109 | 61 | 56 | 56 | 282 |
| Hematology/Oncology | 272 | 91 | 72 | 71 | 506 |
| Oncology | 205 | 78 | 66 | 65 | 414 |
| Total Respondents | 586 | 230 | 194 | 192 | 1,202 |
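The composite allocation rule described above (the mean of the proportional and equal allocations) can be sketched as follows. This is a minimal illustration, not the procedure used to build Table B.1-2: that table is allocated across the twelve subspecialty-by-metro strata, whose population counts are not given in this statement. The sketch applies the rule to the three subspecialty counts from Table B.1-1 only, so its output is not expected to match the table. The function name `composite_allocation` is hypothetical.

```python
def composite_allocation(stratum_counts, n_total):
    """Average the proportional and equal allocations for each stratum."""
    population = sum(stratum_counts.values())
    equal_share = n_total / len(stratum_counts)  # equal allocation
    allocation = {}
    for name, count in stratum_counts.items():
        proportional = n_total * count / population  # proportional allocation
        allocation[name] = (proportional + equal_share) / 2
    return allocation


# Subspecialty population counts from Table B.1-1 (illustration only).
specialty_counts = {
    "Hematology": 1610,
    "Hematology/Oncology": 7014,
    "Oncology": 4443,
}

allocation = composite_allocation(specialty_counts, 1200)
for name, size in allocation.items():
    print(f"{name}: {size:.1f}")
```

Because both component allocations sum to the target sample size, their mean does as well; small strata are pulled up toward the equal share and large strata are pulled down toward it.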

Based on the composite allocation we calculated the unequal weighting effect, which affects the margin of error, and thus the precision of our survey estimates. After calculating the unequal weighting effect, we calculated the margin of error for national estimates and the power calculations for two-group comparisons.

One of the study’s research objectives is to meet the Government’s criteria for precision, which is a margin of error not exceeding ± 5% around point estimates. The use of the composite allocation affects the margin of error by creating an unequal weighting effect (uwe), that is, an increase in variance caused by having sample cases with different probabilities of selection.

The uwe is

$$\mathrm{uwe} = 1 + \frac{1}{n}\sum_{i=1}^{n}\frac{(w_i - \bar{w})^2}{\bar{w}^2}$$

where $n$ is the sample size, $w_i$ is the weight for the $i$-th medical oncologist sampled, and $\bar{w}$ is the mean of the weights. Based on the composite sample size allocation, the uwe is 1.27.
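As a rough illustration of how an unequal weighting effect is computed, the sketch below uses Kish's form, one plus the relative variance of the weights, written in the algebraically equivalent way n·Σw²/(Σw)². That this is the exact formula behind the 1.27 figure is an assumption. The two strata and their population and sample counts are hypothetical, chosen only to make the arithmetic easy to follow, and the function names are illustrative.

```python
def unequal_weighting_effect(weights):
    """Kish's uwe: n * sum(w^2) / (sum(w))^2, i.e. 1 + relative variance."""
    n = len(weights)
    total = sum(weights)
    return n * sum(w * w for w in weights) / (total * total)


def stratified_weights(population, sample):
    """Give every sampled unit in stratum h the base weight N_h / n_h."""
    weights = []
    for stratum, n_h in sample.items():
        weights.extend([population[stratum] / n_h] * n_h)
    return weights


# Hypothetical strata: equal sample sizes drawn from unequal populations.
population = {"stratum_a": 1000, "stratum_b": 200}
sample = {"stratum_a": 100, "stratum_b": 100}

weights = stratified_weights(population, sample)
uwe = unequal_weighting_effect(weights)
print(round(uwe, 3))
```

When every unit carries the same weight the function returns exactly 1, so any value above 1 measures the variance penalty from departing from proportional allocation.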

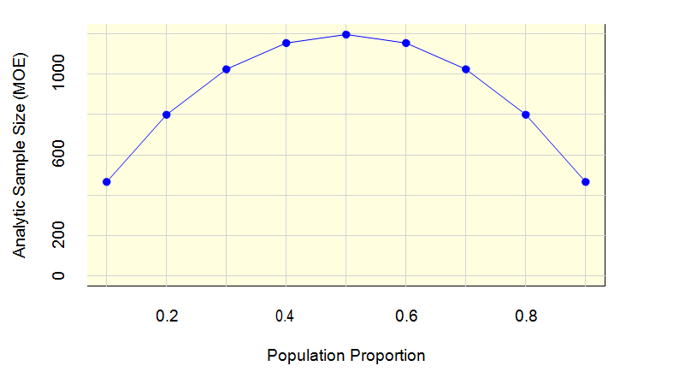

Figure 1 shows that with a uwe of 1.27, an analytic sample size of 1,200 is more than sufficient to meet the margin of error requirement. In this figure, the horizontal axis is the population proportion (e.g., proportion of respondents who have used a genomic test), and the vertical axis is the analytic sample size. When the proportion is 0.5, a sample size of 1,200 will achieve a margin of error of 2.66 percentage points, which is well below the 5 percentage point criterion.

Figure 1: Analytic Sample Size (MOE) by Population Proportion
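The relationship between the uwe, the sample size, and the margin of error can be sketched with the usual normal approximation, in which the simple-random-sampling variance is inflated by the uwe. The statement does not spell out the formula or confidence level behind Figure 1, so the 95% level and the absence of a finite population correction here are assumptions, and the result need not equal the figure quoted in the text. The function name is illustrative.

```python
from statistics import NormalDist


def margin_of_error(p, n, uwe=1.0, confidence=0.95):
    """Half-width of a normal-approximation interval for a proportion,
    with the variance inflated by the unequal weighting effect."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z * (uwe * p * (1 - p) / n) ** 0.5


# Worst case for a proportion is p = 0.5.
moe = margin_of_error(p=0.5, n=1200, uwe=1.27)
print(f"{100 * moe:.2f} percentage points")
```

Under these assumptions the margin of error at a proportion of 0.5 stays below the 5 percentage point criterion, and it shrinks as the proportion moves away from 0.5.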

The power calculations for the two-group comparisons fall into two categories. The first category represents power calculations for variables available on the sampling frame, which will have known sample sizes, e.g., specialty group and metropolitan size. The second category represents power calculations for variables that are not on the sampling frame and thus have an unknown sample size, e.g., academic practice.

Based on the composite allocation, Table B.1-3 shows the power to detect differences between two groups for three separate percentage point differences, 5, 10, and 15. The examples chosen demonstrate the highest and lowest power for detecting differences. For example, an overall sample size of 1,200 is sufficient to detect a 15 percentage point difference between the two largest metropolitan areas with 0.97 power (row 3). The power to detect a difference between the two smallest metropolitan areas (row 4) is 0.85. The power to detect a difference between any two other metro areas will fall somewhere between 0.85 and 0.97.

Table B.1-3. Power Calculations for Comparisons between Subspecialty and/or Metropolitan Area Groups

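| Row | Group comparison | Sample size 1 | Sample size 2 | Power, 5-point difference | Power, 10-point difference | Power, 15-point difference |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | Hematology/Oncology vs. Oncology | 506 | 414 | 0.33 | 0.86 | 1.00 |
| 2 | Oncology vs. Hematology | 414 | 282 | 0.25 | 0.74 | 0.98 |
| 3 | Very Large vs. Large metro | 586 | 230 | 0.25 | 0.73 | 0.97 |
| 4 | Medium vs. Small metro | 194 | 192 | 0.17 | 0.51 | 0.85 |
| 5 | Hematology/Oncology, Very Large vs. Oncology, Very Large | 272 | 205 | 0.19 | 0.58 | 0.91 |
| 6 | Hematology, Medium vs. Hematology, Small | 56 | 56 | 0.08 | 0.18 | 0.36 |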
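The power figures above can be approximated with a two-tailed z-test for the difference between two independent proportions. The sketch below is one such approximation, not necessarily the method used to produce the table: it assumes a baseline proportion of 0.5 in the first group, an unpooled standard error, and no adjustment for the unequal weighting effect. The function name is illustrative.

```python
from statistics import NormalDist


def power_two_proportions(n1, n2, diff, p1=0.5, alpha=0.05):
    """Approximate power of a two-tailed z-test when p2 = p1 + diff."""
    p2 = p1 + diff
    se = (p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2) ** 0.5
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    z = diff / se
    # Probability of rejecting in either tail under the alternative.
    return NormalDist().cdf(z - z_crit) + NormalDist().cdf(-z - z_crit)


# Sample sizes 506 and 414, for 5-, 10- and 15-point differences.
powers = [power_two_proportions(506, 414, d) for d in (0.05, 0.10, 0.15)]
print([round(p, 2) for p in powers])
```

With sample sizes of 506 and 414 this gives roughly 0.33, 0.86, and 1.00, in line with the first row of the table; other rows may differ slightly in the second decimal place depending on the baseline proportion assumed.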

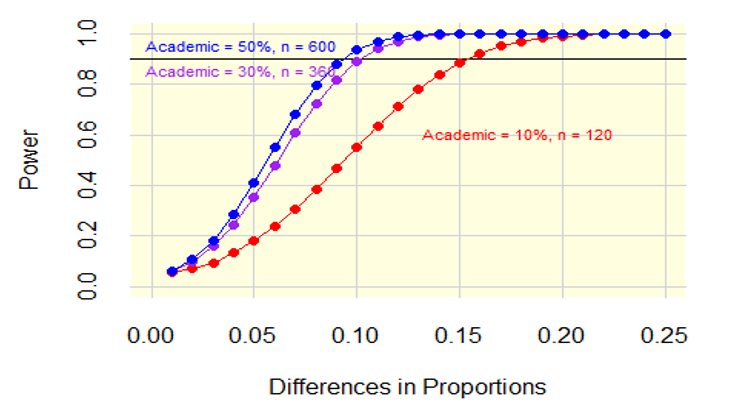

Our sampling frame does not indicate what proportion of the sample will be from an academic setting versus a non-academic setting. Therefore, to determine the power of the test for a difference in this two-group comparison, we assumed the percentage of the analytic sample of 1,200 that will fall into the academic practice setting. This percentage was set to 10, 30, or 50 percent.

Figure 2 shows the power by the difference in proportions, with the three lines representing academic shares of 10, 30, and 50 percent for the analytic sample of 1,200. The horizontal axis, the difference in proportions, ranges from 0.01 to 0.25. The vertical axis is the power. To achieve 0.9 power when the percentage of academic physicians is 10 percent, the minimum detectable difference in proportions is about 0.15. When the percentage of academic physicians is 30 percent, the minimum detectable difference is about 0.10. When the percentage of academic physicians is 50 percent, the minimum detectable difference is about 0.09.

Figure 2: Power Differences in Proportions
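The minimum detectable differences read from Figure 2 can be approximated by searching for the smallest difference that reaches the target power. The sketch below assumes a baseline proportion of 0.5, an unpooled two-tailed z-test, and no weighting adjustment; these are assumptions rather than the documented method, and the function names are illustrative.

```python
from statistics import NormalDist


def power_two_proportions(n1, n2, diff, p1=0.5, alpha=0.05):
    """Approximate power of a two-tailed z-test when p2 = p1 + diff."""
    p2 = p1 + diff
    se = (p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2) ** 0.5
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    z = diff / se
    return NormalDist().cdf(z - z_crit) + NormalDist().cdf(-z - z_crit)


def min_detectable_difference(n_total, share, target=0.9):
    """Bisect for the smallest difference whose power reaches the target."""
    n1 = n_total * share      # e.g. academic physicians
    n2 = n_total - n1         # e.g. non-academic physicians
    low, high = 0.001, 0.49
    for _ in range(60):
        mid = (low + high) / 2
        if power_two_proportions(n1, n2, mid) < target:
            low = mid
        else:
            high = mid
    return high


mdd = {share: min_detectable_difference(1200, share) for share in (0.10, 0.30, 0.50)}
for share, diff in mdd.items():
    print(f"academic share {share:.0%}: {diff:.3f}")
```

Under these assumptions the search returns values close to the 0.15, 0.10, and 0.09 described in the text, and it shows why a more balanced split between the two groups lowers the detectable difference.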

Inflating the Analytic Sample

The analytic sample size must be inflated to account for telephone screening of medical oncologists, non-contact with medical oncologists, and non-cooperating medical oncologists. It is anticipated that about 15% of the sample will be deemed ineligible during telephone verification because they are deceased, retired, do not see patients, or have contact information that is not valid. We will send an invitation to participate in the survey to the medical oncologists who are eligible and have valid contact information. Medical oncologists whose survey invitations are deliverable will then either respond (cooperate) or not respond. The final set of respondents comprises the analytic data set.

Table B.1-4 shows each final disposition with its assumed rate and the corresponding inflated sample size. The selected sample size is the actual number of medical oncologists that need to be sampled to achieve the analytic sample size of 1,200. The cooperation and analytic sample sizes refer to the same set of responding medical oncologists. Assuming a minimum cooperation rate of 50%, we need to select 2,882 medical oncologists to obtain 1,200 responding medical oncologists. If a higher response rate is achieved, we will have a larger analytic sample, which will increase the power to detect smaller differences in proportions.

Table B.1-4. Sample Size by Final Disposition

| Final Disposition | Rate | Sample Size |
| --- | --- | --- |
| Sample Selection | NA | 2,882 |
| Telephone Screening | 0.85 | 2,449 |
| Eligible | 0.98 | 2,400 |
| Cooperation | 0.50 | 1,200 |
| Analytic Sample | NA | 1,200 |
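The inflation in Table B.1-4 can be reproduced by working backward from the analytic sample and dividing by each assumed rate in turn. Rounding up at every stage is an assumption made for this sketch, and the function name is illustrative.

```python
import math


def inflate_sample(analytic_n, rates_last_to_first):
    """Work backward from the analytic sample, dividing by each stage's
    assumed rate and rounding up to a whole physician."""
    sizes = [analytic_n]
    for rate in rates_last_to_first:
        sizes.append(math.ceil(sizes[-1] / rate))
    return sizes


# Rates from Table B.1-4, from the last stage back to sample selection:
# cooperation 0.50, eligible 0.98, telephone screening 0.85.
sizes = inflate_sample(1200, [0.50, 0.98, 0.85])
print(sizes)  # [1200, 2400, 2449, 2882]
```

Rerunning the function with a higher cooperation rate shows how much smaller the selected sample could be, or equivalently how much larger the analytic sample would be for the same selected sample of 2,882.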

B.2 Procedures for the Collection of Information

An estimated 1,200 surveys (see Attachment A for a copy of the instrument) will be completed for the main study, either by paper survey or by web survey (Attachment J). Participants will answer questions about their experiences with genomic testing in oncology and their perceptions of the barriers to and facilitators of genomic testing within their practice. Demographic information and practice characteristics will also be collected. The entire procedure is expected to last approximately 20 minutes. This will be a one-time (rather than annual) information collection.

To achieve approximately 1,200 completed surveys, we will draw a sample of 2,882 oncologists from the American Medical Association Masterfile. Prior to sending physicians a copy of the survey, the contractor will make telephone calls to the sampled physicians to verify that the sample member is eligible for the survey (e.g., a practicing oncologist under age 75), and that the address is correct. The script for verifying eligibility and contact information is provided in Attachment B. For any sampled physicians who have moved, the contractor will attempt to obtain new address information from the office contacted or through various tracing procedures and internet searches.

We estimate that approximately 15% of the initial sample will be ineligible or unable to be located. If needed, additional sample can be drawn and verified to ensure that the survey yields the analytic sample required for the study.

Eligible, sampled physicians will then be mailed a survey invitation. The initial survey package will be sent by U.S.P.S. first-class mail. It will include a cover letter, survey, postage-paid return envelope, and a prepaid gift of $50.

About 3 weeks after the initial mailing, a follow-up mailing will be sent to all nonresponding physicians by Priority mail. The package will include a new cover letter, replacement copy of the survey, and a postage-paid return envelope.

One and a half weeks after the follow-up mailing, we will send an email reminder to nonresponders with a link to complete the survey via the web. Within one and a half weeks of sending the email reminder, the contractor’s telephone interviewers will begin making follow-up telephone calls to nonresponders. The interviewers will provide reminders about the study, obtain new mailing addresses or email addresses, and send replacement questionnaires or emails as needed.

Two weeks after the email reminder, we will send the final follow-up mailing to nonresponders via FedEx. The package will include a new cover letter, replacement survey, and a postage-paid envelope.

One and a half weeks after the FedEx mailing, we will send a final reminder via email to all nonresponding physicians. The email will include a link to the web survey. Reminder phone calls to nonresponding physicians will continue until the end of data collection, approximately 7.5 weeks after the initial survey invitations were sent.

Copies of the survey cover letters and emails are in Attachment C. The telephone reminder script is in Attachment D.

The data collection schedule is shown in Table B.2-1.

Table B.2-1. Data Collection Schedule

| Task | Timing (after initial invitation) |
| --- | --- |
| Initial Survey Invitation | -- |
| Priority Follow-Up Mailing | + 3 weeks |
| Email reminder with link to web survey | + 4.5 weeks |
| Telephone Reminders | + 5.5-10 weeks |
| FedEx mailing (final mailing) | + 6.5 weeks |
| Final email reminder | + 8 weeks |
| End data collection | + 12 weeks |
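The mailing and email offsets in the schedule follow from the intervals given in the narrative (3 weeks, then 1.5 weeks, then 2 weeks, then 1.5 weeks). A minimal sketch of that arithmetic is shown below; the list name `contact_steps` and the function name are illustrative, and the telephone reminder window and end date are left out because the narrative gives them as ranges rather than fixed intervals.

```python
# Each step: (task, weeks after the previous step), taken from the narrative.
contact_steps = [
    ("Initial survey invitation", 0.0),
    ("Priority follow-up mailing", 3.0),
    ("Email reminder with link to web survey", 1.5),
    ("FedEx mailing (final mailing)", 2.0),
    ("Final email reminder", 1.5),
]


def cumulative_schedule(steps):
    """Turn step-to-step intervals into offsets from the initial invitation."""
    schedule = []
    elapsed = 0.0
    for task, gap in steps:
        elapsed += gap
        schedule.append((task, elapsed))
    return schedule


schedule = cumulative_schedule(contact_steps)
for task, week in schedule:
    print(f"+ {week:g} weeks: {task}")
```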

B.3 Methods to Maximize Response Rates and Deal with Nonresponse

To help ensure that the participation rate is as high as possible, NCI and the contractor will employ the following strategies:

Tailored contact materials. All contact materials and emails will be tailored to include the sample member’s name per the Tailored Design Method (Dillman, 2000).

Topic Saliency. Oncologists will be surveyed about their attitudes and experiences with genomic testing, a topic that is salient and relevant to them.

Minimal-burden protocol. The survey will be short (approximately 20 minutes), clearly written, and attractively formatted.

Use of NCI sponsorship. Physicians are more willing to participate when they know the organization sponsoring the survey (Beebe, Jenkins, Anderson & Davern, 2008).

Endorsements. The study will include endorsements from organizations such as the American Society of Clinical Oncology. Endorsements by professional organizations have been shown to be effective (Olson, Schneiderman & Armstrong, 1993).

Sequential Mixed Mode. A sequential mail and web mixed mode design has been shown to increase response compared with concurrent mixed mode designs (Medway and Fulton, 2012).

Reminder telephone calls. Several studies have found that the use of telephone “prompts” to complete a mail or Internet survey was successful (Braithwaite, Emery, Lusignan, & Sutton, 2003; Price, 2000).

In the absence of additional information, response rates are often used alone as a proxy measure for survey quality, with lower response rates indicating poorer quality. However, lower response rates are not always associated with greater nonresponse bias (Groves, 2006). Total survey error is a function of many factors, including nonsampling errors that may arise from both responders and nonresponders (Biemer and Lyberg, 2003). A nonresponse bias analysis can be used to determine the potential for nonresponse bias in the survey estimates from the main data collection.

For this project, a brief (1-page) nonresponse survey will be conducted with physicians via telephone (Attachment E). The survey will be designed to produce estimates of reasons for nonresponse. In addition to questions on reasons for nonresponse, we will include one or two key items of interest from the main survey, such as the use of genomic testing. Statistical comparisons between items that appear on both the nonresponse follow-up survey and the mail survey will be used to measure the magnitude of nonresponse bias in the main study. We will also include some information on practice characteristics (such as academic versus non-academic practice setting). These characteristics may assist in producing weighting adjustments for nonresponse.
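One simple way to compare an item that appears on both surveys is a two-proportion z-test of main-survey respondents against follow-back respondents. The sketch below is only an illustration of that kind of comparison: the statement does not say which test will be used, it ignores survey weights, and the counts are hypothetical placeholders rather than results. The function name is illustrative.

```python
from statistics import NormalDist


def compare_proportions(yes_main, n_main, yes_followback, n_followback):
    """Pooled two-proportion z-test; returns (difference, z, two-sided p)."""
    p_main = yes_main / n_main
    p_follow = yes_followback / n_followback
    pooled = (yes_main + yes_followback) / (n_main + n_followback)
    se = (pooled * (1 - pooled) * (1 / n_main + 1 / n_followback)) ** 0.5
    z = (p_main - p_follow) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_main - p_follow, z, p_value


# Hypothetical counts: 600 of 1,200 main-survey respondents and 40 of 100
# follow-back respondents report using a genomic test.
difference, z, p_value = compare_proportions(600, 1200, 40, 100)
print(f"difference={difference:.2f}, z={z:.2f}, p={p_value:.3f}")
```

The estimated difference gives a direct sense of how far the main-survey estimate might sit from the nonrespondents' value, while the test indicates whether that gap is distinguishable from sampling noise given the small follow-back sample.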

B.4 Test of Procedures or Methods to be Undertaken

The survey instrument was cognitively tested with 9 participants (completed June 2015). PRA OMB Clearance was not needed for this small pilot. In response to the feedback received during testing, some survey questions were dropped, and many survey questions were revised or improved.

Prior to launching the main study, we will conduct a pilot test with a sample of 350 oncologists. The purpose of the pilot is to verify our assumptions about the response rate and to test the survey instruments as well as the recruitment protocol.

B.5 Individuals Consulted on Statistical Aspects and Individuals Collecting and/or Analyzing Data

Statistical experts both within and outside of NCI contributed to this project. RTI’s senior statistician, Darryl Creel, PhD, is the lead statistician responsible for developing the sampling plan and reviewing the analytic goals. Benmei Liu, PhD, Mathematical Statistician in the Surveillance Research Program of the Division of Cancer Control and Population Sciences at the National Cancer Institute, provided statistical consultation on both the sampling plan and the analytic goals for this project.
