Memo to OMB re: NCVP Field Test of Questionnaire


Generic Clearance for Cognitive, Pilot and Field Studies for Bureau of Justice Statistics Data Collection Activities


OMB: 1121-0339

U.S. Department of Justice

Office of Justice Programs

Bureau of Justice Statistics

Washington, D.C. 20531

MEMORANDUM

To: Shelly Wilkie Martinez

Office of Statistical and Science Policy

Office of Management and Budget

Through: Lynn Murray

Clearance Officer

Justice Management Division

William J. Sabol

From: Lynn Langton, Jessica Stroop

Date:

Re: BJS Request for OMB Clearance to conduct a field test of the questionnaire and data collection procedures for the National Survey of Victim Service Providers (NSVSP) under the OMB generic clearance agreement (OMB Number 1121-0325).

The Bureau of Justice Statistics (BJS), in consultation with RAND, NORC and the National Center for Victims of Crime (NCVC) under cooperative agreement (Award 2012-VF-GX-K025), is requesting clearance for a field test of the questionnaires and data collection procedures that aim to capture data about the organizational attributes, staffing and services provided by Victim Service Providers (VSPs) and entities that have victim services programs. A VSP is any organization which provides services or assistance to victims of crime. This field test is part of BJS’s National Survey of Victim Service Providers (NSVSP), a program that BJS is developing to capture, on a routine basis, information about how victim serving providers respond to criminal victimizations. The results from the field test will be used to inform the design for a full-scale census collection planned for early 2016.

The National Survey of Victim Service Providers (NSVSP), jointly funded by the Office for Victims of Crime (OVC) and BJS, is a major component of a broader effort by BJS to understand the criminal justice system’s and its complementary agencies’ response to victims of crime. Through the NSVSP, BJS will survey VSPs to obtain information about the organization, operations, funding, staffing, and services provided by providers that serve victims of crime. Other prospective components of this BJS effort to understand the response to criminal victimization include redesigning the National Crime Victimization Survey to include more information on use and non-use of victim services by victims of crime; periodic establishment surveys of subsets of victim service providers on special topics related to victim services; and in conjunction with NCVS subnational estimates and National Incident-Based Reporting System (NIBRS) data, assessment of the services delivered in relation to the incidence of victimization at the local area. These efforts aim to fill important gaps in knowledge about which victims receive services, about the capacity of VSPs to provide services, and about the need to expand or modify how services are delivered. For OVC, this type of information is critical to developing an empirically-based approach to delivering victim services, one that is consistent with OVC’s Vision 21 effort to transform the victim services field.1 Under Title 42, United States Code, Section 3732, BJS is directed to collect and analyze statistical information concerning the operation of the criminal justice system at the federal, state and local levels, and the NSVSP fits within that mission.

The goals of the NSVSP are to develop an understanding of the broad range of organizations that provide victim services as their primary function or through specific programs or personnel, including how they are structured, the types of services they offer, and the types of crime victims they serve. While there are many directories in place, and many lists of organizations serving specific types of victims, they are not all inclusive and many are not routinely updated.

The next phase in the development of the NSVSP, which is the subject of this memo, is to field test the data collection instrumentation and methodology with a targeted sample of VSPs. Because the NSVSP is a new data collection that has not been previously undertaken, the pilot test is a trial run of the survey administration process intended to provide a better understanding of the challenges and costs associated with obtaining cooperation from VSPs.

For this clearance, under the BJS Generic Clearance, we plan to conduct a mixed-mode field test of the instruments and data collection procedures with a sample of approximately 725 of the 21,000 VSPs on the existing roster, and to conduct a pre-contact experiment to measure the effect on cooperation and data collection effort overall and by federally funded and non-federally funded providers. This field test is designed to address issues related to the accuracy of contact information on the current VSP roster and to the administration of the survey, such as mode, response rates, number of follow-up contacts needed, and successful approaches for gaining respondent cooperation. The field test will use three variations of the survey instrument, intended for different categories of VSPs. The three broad categories of VSPs are primary providers (e.g., domestic violence shelters, rape crisis centers, homicide survivor groups, etc.), secondary providers (e.g., prosecutor-based providers, hospital-based providers, campus providers, etc.), and incidental providers (e.g., homeless shelters that provide services to victims but do not have specific programs or staff dedicated to working with crime victims). Within these categories of VSPs exist various types of providers, including prosecutors’ offices and other criminal justice “system-based” VSPs (like police, special advocates, etc.), “community-based” shelters, domestic violence or sexual assault programs, mental and physical health-related programs, tribal organizations or tribal-focused services, and a sixth “other” group.

The instruments that will be field tested will collect basic contact information for the selected sample of VSPs as well as information about services provided, staffing, and funding. The instruments to be administered will generate currently unknown information on the landscape of the victim service field as a whole, in addition to the information necessary to stratify the sample for subsequent, more in depth data collection efforts. This approach is similar to that which has been successfully employed for years with the BJS Census of State and Local Law Enforcement Agencies and the Law Enforcement Management and Administrative Statistics collection (OMB number 1121-0240).

Description and Purpose of Overall Project

Currently, because no substantive baseline measures exist for victim services provided or the organizations that provide them, there is no way to measure progress in terms of the number and range of victims served by these organizations or the effectiveness of services provided. VSPs also lack any systematic way to benchmark their work against that of their peers. The little information available about how current victim service funding is being used limits the ability of the field to work more effectively in providing assistance to crime victims, to seek future funding, or to identify underserved populations. The goal of the NSVSP is to help establish metrics that will allow for baseline measures about the current services provided or the organizations that provide them so that possible future avenues for data collection and research can include ways to measure progress in terms of the number and range of victims served by these providers or the effectiveness of services provided.

Field Test Design and NSVSP instrument

The objective is now to seek approval to move into the second phase of the data collection process, a formal field test of the survey data collection approach with a group of 725 randomly selected VSPs from the existing frame of 21,000. The VSP frame has been compiled from deduplicated rosters of victim service providers from federal and state funding entities and victim service associations, as well as through web-scraping efforts. The first step will be to divide the NSVSP roster into federally funded and non-federally funded VSPs. Due to differences in size between the two groups, the split will not be even (expected completed surveys: n=340 nonfederal VSPs and 240 federal VSPs). Next, the project team will stratify the sample based on categories of VSPs (e.g., criminal justice-based VSP), using keywords from information included in the roster to create six groups of different VSPs. These six groups are (1) prosecutors’ offices and other criminal justice system-based VSPs (like police, special advocates, etc.), (2) community-based shelters, (3) domestic violence or sexual assault programs, (4) mental and physical health-related programs, (5) tribal organizations or tribal-focused services, and (6) an “other” group including VSPs for which the type could not be identified.

Given the paucity of data on the willingness and capacity of VSPs to complete surveys and the best modalities for researchers to facilitate their completion of surveys, the field test will explore whether problems emerge in implementing the survey using a multi-mode approach. The field test will address several key methodological issues:

Whether having the name of a point of contact within the organization has an impact on cooperation rates or the resources required to obtain cooperation;

The challenges associated with identifying suitable points of contact within victim service entities;

Differences in response rates and the number of nonresponse follow up attempts needed for different types of VSPs, particularly those that receive federal funding and submit quarterly reports to the federal government and those that have not received federal funding in the past several years;

The strengths and weaknesses of each of the survey modalities (e.g. web, phone and mail) in terms of response rates, cost, time-to-survey completion, and the completeness of information provided by respondents;

Whether the respondents in each of the survey modalities have similar experiences completing the survey;

The time and costs associated with prompts, reminders and other efforts necessary to obtain a high response rate across different types of VSPs, particularly federal versus nonfederal;

Aspects of the data collection methodology that cause excessive burden or create challenges for the respondents;

The accuracy of the roster in terms of the proportion of sampled addresses that are out of scope, incorrect, or duplicative; and

The utility of the telephone modality and related protocols as a follow-up tool and approach to increase response rates.

These issues will be addressed through the four cell design of the field test and by systematically tracking the various steps of the multi-modal nonresponse follow-up protocol. Depending on the results of the field test, a separate OMB clearance for a full scale administration of the NSVSP will be requested in 2016.

Survey Questionnaire to be used in Field Test

RAND and BJS developed the NSVSP by examining existing surveys on victim services including OVC and OVW reporting forms, and by holding discussions with a variety of stakeholders in the victim services field. Questions were modeled based on these documents and conversations in order to reduce the potential burden on respondents. However, the NSVSP also covers significantly different aspects of VSPs than what administrative records show, and it is also designed to reach a wider range of VSPs so the survey contains more items aimed at collecting more detailed information than these other sources.

Discussions with stakeholders have been instrumental to the crafting of the survey. Early and continuing discussions were held with OVC and OVW to better understand the needs of the field, the current state of reporting, and where the most important gaps in data could be found. The team also turned to two panels of experts and stakeholders. First, a Project Input Committee (PIC), made up of representatives of various VSPs across the country, was assembled as part of the NSVSP research effort in order to provide project team members with a real world perspective on the operations, services, client bases, and management information systems of a wide variety of VSPs. Second, two meetings were held with an Expert Panel (EP), made up of 14 VSPs and researchers considered experts in their field. During these meetings, the EP provided feedback on developing drafts of the NSVSP survey instrument. The EP meetings were instrumental in crafting the content and structure of the survey in such a way that it would be accessible and useful to policy-makers and the victim services field.

This phase of the data collection effort included an OMB-approved cognitive test (OMB number 1121-0339) of the survey items. The cognitive testing, conducted in 2014-15, assessed the completeness of the information collected (were respondents able to furnish the requested information), the uniformity of understanding of the survey from one respondent to the next (e.g., did each respondent define a domestic violence victim in the same way), and the respondents’ ability to provide case-level data in standard formats. The cognitive testing aided the team in refining the questionnaire to reduce respondent burden and to improve readability and the understanding of terms. The information collected from the cognitive testing of the survey has been incorporated into the instrument to be used in this pilot test.

Most feedback from cognitive interview participants resulted in minor edits to question wording or tweaks to particular response items. However, six major changes helped refine the NSVSP instrument to be used in the field test. These changes ultimately reduced the overall completion time from 50 minutes to 30 minutes or less. The first two changes focused on (1) the question on the types of services provided and (2) the questions regarding the types of crime experienced by victims receiving services. For (1), the list of response options was shortened so that the condensed services list still encompasses the bulk of services provided but is easier for providers to complete. For (2), respondents initially were unsure whether to report all crime types experienced by victims or only the presenting crime types for which victims initially sought services. While both pieces of information are potentially important, one of the main purposes of the full survey is to obtain a better picture of the victim services field, and since VSPs vary widely, a principal distinguishing feature is their victimization focus. To be able to compare answers across groups of providers with differing victim focuses, we edited the victim type question to ask about crime types for which victims sought services.

The remaining changes focused on the organizational and administrative components of the VSPs and resulted in changes to the way the instrument asked questions on (3) staffing, (4) funding and (5) record keeping. These were all simplified so that respondents do not need to spend time accessing their internal records to complete the survey, and response options now include a place to indicate where the information provided is an estimate. Lastly, cognitive interview participants reported some difficulty answering questions according to the reference period of the prior 12 months, as different organizations operate on different schedules. To avoid potentially high levels of missing item responses due to confusion or difficulty with the time period requirement, the last major change was (6) the addition of a question to clarify whether the organization operates and reports data on a calendar year or a fiscal year, with a follow-up question that asks for the start date of the fiscal year if that response is selected. A detailed discussion of the changes made to the survey instrument as a result of the cognitive testing is included as Attachment 6.

The resulting NSVSP instrument has been designed to provide unique insights into VSPs and into the field as a whole. This survey will give a much more complete view of the services being offered to victims, the gaps in services, and the demand for services by demographics of victims than what is currently being collected. It will also provide information on the resources available to VSPs and where more resources are needed to improve services.

The survey instruments will gather information related to these topics:

Screening questions

Provider/Agency Type

Services provided within the past calendar or fiscal year

Number of basic hotline calls in the past calendar or fiscal year

Number of victims who received services in the past calendar or fiscal year

Types of crime victims served in the past calendar or fiscal year

Number of paid staff

Funding by source in the past calendar or fiscal year

Use of case management systems

(See Attachment 1 – Primary, Attachment 4 – Secondary, and Attachment 5 – Incidental.)

Sampling plan

The universe of VSPs is diverse and contains entities such as police departments, YWCA chapters, tribal coalitions, child protective service agencies, family counseling centers, mental health service providers, district attorneys’ offices, and domestic violence shelters. Within the field, some providers’ principal function is to serve crime victims (i.e., primary VSPs). For other providers, providing assistance or services to victims of crime is one of many functions; some of these providers have programs or staff that are designated to serve crime victims (i.e., secondary VSPs), and still others serve victims as part of their regular services but have no designated programs or staff (i.e., incidental VSPs).

Another key distinction among VSPs is whether they receive federal funding and are attuned to submitting quarterly reports to the Department of Justice. Anecdotal evidence suggests that federally funded VSPs will be an easier group to collect data from and it is a group that can be identified readily and is more likely to have accurate contact information. Knowing about the differences in effort needed to collect data from the federally funded group versus the non-federal group could have important implications for the roll out of the full project. The non-federally funded group is larger and could prove to be a more difficult group to obtain cooperation from if impediments like the absence of contact information and little perceived value and/or interest in federal funds are present.

Table 1 below displays how the 725 VSPs are to be randomly assigned to one of the four study conditions: (1) VSPs receiving a pre-contact prior to being asked to complete a survey that are recipients of federal grants, (2) VSPs receiving a pre-contact that do not receive federal grants, (3) VSPs not receiving a pre-contact that are recipients of federal grants, and (4) VSPs not receiving a pre-contact that do not receive federal grants.

Table 1: 725 cases randomly assigned to four conditions (580 completes expected)

| | Pre-contact | No Pre-contact |
| --- | --- | --- |
| Federal | 150 (120 completes) | 150 (120 completes) |
| Non-Federal | 213 (170 completes) | 212 (170 completes) |
| Total | 363 (290 completes) | 362 (290 completes) |
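The assignment summarized in Table 1 can be sketched in a few lines of Python. This is an illustrative sketch only, not the project's actual procedure: the identifiers, the seed, and the function names are hypothetical, and the 80% completion rate is an assumption inferred from the 725-case sample and the 580 expected completes.

```python
import random

# Cell sizes as stated in Table 1 (funding group, pre-contact condition).
CELL_SIZES = {
    ("Federal", "Pre-contact"): 150,
    ("Federal", "No pre-contact"): 150,
    ("Non-Federal", "Pre-contact"): 213,
    ("Non-Federal", "No pre-contact"): 212,
}

# Assumption: 580 / 725 implies an expected completion rate of 80%.
ASSUMED_COMPLETION_RATE = 0.80


def assign_conditions(vsp_ids, funding, seed=2015):
    """Randomly split one funding group's sampled VSPs across the two conditions."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(vsp_ids)
    rng.shuffle(shuffled)
    n_pre = CELL_SIZES[(funding, "Pre-contact")]
    return {"Pre-contact": shuffled[:n_pre], "No pre-contact": shuffled[n_pre:]}


def expected_completes(cell_sizes, rate):
    """Expected completed surveys per cell, rounded to whole cases."""
    return {cell: round(n * rate) for cell, n in cell_sizes.items()}


# Hypothetical identifiers standing in for sampled roster entries.
federal = assign_conditions([f"FED-{i:03d}" for i in range(300)], "Federal")
nonfederal = assign_conditions([f"NON-{i:03d}" for i in range(425)], "Non-Federal")
completes = expected_completes(CELL_SIZES, ASSUMED_COMPLETION_RATE)

print(sum(CELL_SIZES.values()), sum(completes.values()))
```

Randomizing separately within each funding group keeps the pre-contact and no pre-contact arms balanced on federal funding status, which is the comparison the experiment is built around.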

Because a substantial portion of the assembled roster of VSPs is missing one or more fields of contact information (about 21% are missing contact name, 22% are missing phone info, and 35% are missing email), there is a risk that the instrument will not reach the most appropriate respondent, or will not be received at all. The next step will be to randomly assign an equal portion of nonfederal and federal VSPs to a condition in which the VSP either does or does not receive a pre-survey contact to identify a point of contact and confirm other contact information on the roster. To ensure that the NSVSP survey goes to the person most able to provide the information, the organization (e.g., the police department or victims unit of a prosecutor’s office) will be contacted prior to receiving the invitation to complete the survey. The goal of the pre-contact is to determine the person most appropriate to receive the survey invitation. Also, it is anticipated that a number of VSPs will have new locations, addresses, or other identifying information that will have to be validated or updated. Even for cases where it is strongly suspected that the correct contact information is recorded in the roster, it is likely that at least one call will need to be made to reach the potential respondent. However, engaging in this process for the entire victim service population could prove to be extremely costly. The experimental design will measure the effects of these pre-contacts and allow for an assessment of whether the extra cost is justified by a significantly higher response rate, more accurate and complete information, and higher overall compliance in this survey effort. Additionally, providers that do receive the pre-contact call will be asked which survey modality they would prefer. The proportion of providers that favor paper surveys over web-based administration will also be documented, which will allow for personalized follow-up contact efforts. For example, when reminder postcards are sent to nonresponding VSPs, those preferring web-based administration will be directed to the web address, and those that indicated a preference for the paper survey can be re-mailed a paper copy.

NORC will then use the roster to develop a stratified random sample within each of the four cells, to ensure variation in the types of VSPs included in the pilot. NORC will stratify the sample based on the six categories of VSPs outlined above (e.g., criminal justice-based VSP), using keywords from information included in the roster.

From each of the six strata, NORC will select a random sample of participants based on estimates of the overall percentage that each stratum represents in the total frame (total n = 725, with 580 expected completed surveys).
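A proportional allocation of this kind can be sketched as follows. The stratum counts below are hypothetical placeholders that sum to the 21,000-case frame (the memo does not report the actual stratum sizes), and the largest-remainder rounding rule is an assumption chosen so that the allocations sum exactly to 725.

```python
def proportional_allocation(frame_counts, total_sample):
    """Allocate a sample across strata in proportion to each stratum's frame share.

    Largest-remainder rounding: take the floor of each exact share, then give
    the leftover cases to the strata with the largest fractional parts.
    """
    frame_total = sum(frame_counts.values())
    exact = {s: total_sample * n / frame_total for s, n in frame_counts.items()}
    alloc = {s: int(x) for s, x in exact.items()}
    leftover = total_sample - sum(alloc.values())
    by_remainder = sorted(exact, key=lambda s: exact[s] - alloc[s], reverse=True)
    for stratum in by_remainder[:leftover]:
        alloc[stratum] += 1
    return alloc


# Hypothetical frame counts for the six strata; the real counts are not given.
HYPOTHETICAL_FRAME = {
    "criminal justice system-based": 6300,
    "community-based shelters": 2100,
    "domestic violence or sexual assault": 5250,
    "mental and physical health": 3150,
    "tribal": 1050,
    "other": 3150,
}

allocation = proportional_allocation(HYPOTHETICAL_FRAME, 725)
for stratum, n in allocation.items():
    print(f"{stratum}: {n}")
```

In practice the same routine would be applied within each of the four experimental cells, using that cell's sample size in place of 725.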

NORC will use these data to conduct exploratory analyses on differences in attempting to survey different types of VSPs. Throughout the field test, these different types of VSPs will be tracked to assess variations related to the objectives of the pilot study. These objectives include (1) determining the costs and efforts associated with achieving a high response rate (i.e., identifying the preferred modality) and (2) determining what type of information VSPs can provide about the provision of services to their victims.

Field Test Design and Statistical Power

A potential threat to this project is the viability of locating the VSPs. To address this issue, an experiment will be implemented in which 725 VSPs are randomly assigned to the four study conditions (see Table 1 above). The main feature of the field test is a trial run of the planned multi-mode, multi-contact approach using a representative sample of VSP cases. This approach will start with the least expensive contacting mode to complete the maximum number of interviews at minimal cost, then transition to more expensive contacts and modes to improve completion rates.

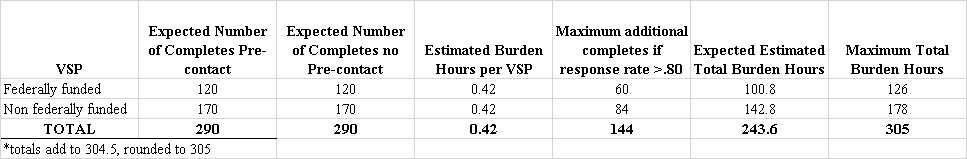

For the main analyses, with a sample size of 580 completed surveys for the pilot test, there will be sufficient power to detect overall small effect size differences of 15% between the pre-contact and no pre-contact groups (n= 290 vs. 290 and power is > .95) and sufficient power to detect small effect size differences of 15% between the federal and nonfederal VSPs (n= 240 vs. 340 and power is > .92).

Due to the assumption that non-federal contact information may have more inaccuracies and may require more effort to obtain cooperation, another priority for the pilot test is to understand the value of pre-contact for non-federal VSPs. The non-federal sample is expected to yield 170 completed surveys for pre-contact cases and 170 completed surveys for no pre-contact cases (total= 340) to yield a higher power level (power=.80) for this key comparison. There will also be reasonable power (>.65) for the federal VSP sample (n= 240), but the impact of pre-contact is less of a priority among this group. The 580 case sample scenario provides a balance between good statistical power and the overall cost of the pilot.
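The power figures above can be approximately reproduced with a standard two-sided, two-proportion power calculation. The sketch below makes assumptions the memo does not state: a baseline rate of 50% versus 65% (a 15 percentage point difference), a significance level of 0.05, and the arcsine (Cohen's h) normal approximation. The function name is illustrative.

```python
from math import asin, sqrt
from statistics import NormalDist


def power_two_proportions(p1, p2, n1, n2, alpha=0.05):
    """Approximate power of a two-sided test comparing two proportions.

    Uses Cohen's effect size h (arcsine transformation) and the normal
    approximation; an illustrative method, not necessarily the one used
    by the project team.
    """
    h = abs(2 * asin(sqrt(p1)) - 2 * asin(sqrt(p2)))
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    ncp = h * sqrt(n1 * n2 / (n1 + n2))  # noncentrality under the alternative
    return NormalDist().cdf(ncp - z_crit) + NormalDist().cdf(-ncp - z_crit)


# Assumed rates: 50% vs. 65%. The memo specifies only the 15% difference.
comparisons = {
    "pre-contact vs. no pre-contact (290 vs. 290)": (290, 290),
    "federal vs. nonfederal (240 vs. 340)": (240, 340),
    "pre-contact effect, nonfederal only (170 vs. 170)": (170, 170),
    "pre-contact effect, federal only (120 vs. 120)": (120, 120),
}
results = {
    label: power_two_proportions(0.50, 0.65, n1, n2)
    for label, (n1, n2) in comparisons.items()
}
for label, power in results.items():
    print(f"{label}: power = {power:.2f}")
```

Under these assumptions the four comparisons come out consistent with the stated thresholds (above .95, above .92, about .80, and above .65). A different baseline rate would shift the results somewhat, since a 15-point difference is easiest to detect near the extremes and hardest near 50%.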

Description of Survey Administration Procedures

The design utilizes a multi-modal approach to administering the NSVSP pilot test modeled after the Dillman approach for nonresponse follow up and adapted for this study.2 The multi-mode approach capitalizes on the strengths of individual modes while neutralizing their weaknesses with other approaches, making the reporting task easier for respondents by offering them alternatives (which has been associated with higher response rates).

Dillman recommends a tailored, hierarchical approach to data collection that begins with the least expensive contacting strategy and mode to complete the maximum number of interviews at minimal cost and transitions to more expensive contacts and modes to improve completion rates. NORC will initially provide sample members with access to a web survey and follow up with paper-and-pencil and telephone options for those who do not respond or are unable to respond via the web. As seen in Attachment 2, the process will begin with multiple mailings of letters (a pre-notification letter letting the VSP know about the upcoming survey, followed by a formal invitation letter in hard copy and by email where possible) and will then follow Dillman’s recommended approach for postcards, additional letter prompts (both hard copy and electronic), telephone prompts, an express mailing, and a ‘final chance’ contact by telephone (or mail, email, or fax if no phone number is available). The field testing process is expected to take about 3 months to complete.

Attachment 2 depicts the process to be used in the field test. A distinction is made in Attachment 2 between the methods to be used to contact the VSP and the modality to complete the survey. For contacting the VSP and prompting respondents to complete a survey, there are strategies involving a hard copy mail contact attempt (where either a letter or a post card is sent by regular US Mail or a letter sent by FedEx express mail), an email contact, a fax contact, or a phone contact.

Three main modalities are available for the respondents to complete a survey: a web survey, a phone survey, and a paper-and-pencil hard copy survey (most of these are expected to be mailed to the team, but a faxed version of the hard copy will be accepted). For this project, web is the strongly preferred method due to its lower costs and its friendlier presentation of the survey (e.g., it eases skip patterns for the respondents). The web link will be highlighted in all the contacts. The hard copy version of the survey is only provided to VSPs that request it (but once a VSP requests a hard copy, all subsequent mailings to that VSP will include a hard copy, except the postcard mailings and phone prompts). If a VSP expresses a preference to complete the survey by phone, that mode will be used until a completed survey is obtained or until the VSP requests the use of a different modality.
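The contact sequence and the final-contact fallback described above can be expressed as a small lookup, sketched below. The step labels paraphrase the memo; the dictionary keys, the function names, and the order in which mail, email, and fax are tried when no phone number exists are assumptions made for illustration, and Attachment 2 remains the authoritative protocol.

```python
# Ordered nonresponse follow-up steps, paraphrased from the description above.
CONTACT_SEQUENCE = [
    "pre-notification letter",
    "invitation letter (hard copy, plus email where available)",
    "postcard reminder",
    "letter prompt (hard copy and electronic)",
    "telephone prompt",
    "express mailing",
    "final-chance contact",
]


def next_contact(steps_done, completed):
    """Return the next contact step, or None once the case is finished."""
    if completed or steps_done >= len(CONTACT_SEQUENCE):
        return None
    return CONTACT_SEQUENCE[steps_done]


def final_chance_channel(contact):
    """Pick the channel for the final-chance contact.

    Telephone is used when a number is on file; otherwise fall back to
    mail, email, or fax (fallback order is an assumption).
    """
    if contact.get("phone"):
        return "telephone"
    for channel in ("mail", "email", "fax"):
        if contact.get(channel):
            return channel
    return None


# Hypothetical roster entry with no phone number on file.
example_contact = {"phone": "", "mail": "", "email": "director@example.org"}
print(next_contact(0, completed=False))
print(final_chance_channel(example_contact))
```

Tracking `steps_done` per case also gives the count of follow-up contacts needed to obtain a completed survey, which is one of the measures the field test is designed to capture.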

Key steps in the field testing process

Use the project-developed population list of VSPs to develop a stratified random sample for the pilot study. The sample will be stratified based on the following categories of VSPs: programs based in a prosecutor’s office, other criminal justice system-based VSPs (such as police and special advocates), community-based shelters, domestic violence or sexual assault programs, community health-based programs, tribal-focused services, and other programs not fitting into one of the other categories.

A sample of 725 cases (with an expectation of securing at least 580 completed surveys from this group) will be run through the randomized experiment (described above).

NORC will implement the multi-modal approach (see Attachment 2). For half of the sample, selected randomly, the pre-contact process will be initiated to track down the correct person to send the survey and verify the correct contact information. Once the correct pre-contact information is obtained, the multi-mode process of data collection will begin. For the other half of the sample, the pre-contact phase will not occur, and the multi-mode data collection phase will begin with the available information. For those VSPs that have new locations, efforts will be made to track them down. Even for cases where the correct contact information is already on the roster (either initially or after searching), it is likely that numerous calls may be necessary to get to the appropriate person.

NORC will work with the expert panel members and Victims of Crime Act (VOCA) administrators located in each state to encourage cooperation among non-responders. Since the intention is to use VOCA administrators and expert panel members to assist with nonresponse follow-up for the full study in Phase 3, NORC will also draw on these individuals for the pilot.

NORC will process and verify the pilot data according to BJS standards for preparing analytic files, including implementing BJS-approved data entry, verification, and edit procedures. The pilot study dataset will be shared with BJS.

Conduct data analysis and draft a report for review by BJS. The report will cover recommendations to BJS about changes to the roster, questionnaire, survey modes, prompts (number of prompts, types of prompts by phone or mail, and prompts by NORC staff compared to prompts by expert panel members), administration protocols, and outreach efforts that would potentially minimize under-coverage, enhance data quality, and maximize response.

Language

The field test survey will be administered in English.

Burden Hours for Field Test

Protection of Human Subjects

Not applicable. The NSVSP is an establishment survey and all data collected pertain to the organization.

Informed Consent, Data Confidentiality and Data Security

NORC will seek the active consent of all study participants. First, respondents will receive a letter two weeks before the pilot survey begins. The letter will address the purpose and importance of the upcoming pilot survey, the voluntary nature of the study, how they were selected, and a number to call with questions about the study. Next, respondents will receive the formal survey invitation via mail or telephone. The invitation will include the estimated length of the survey and will again note the voluntary nature of the survey, allowing the participant an opportunity to decline if the burden would be unacceptable or for any other reason.

BJS’s pledge of confidentiality is based on its governing statutes. All information obtained during this study will be treated as confidential and will only be used to analyze study results. The data are collected under federal statute (Title 42 USC, Section 3735 and 3789g) and are protected from any request by a law enforcement or any other agency, provider, or individual. All answers will be combined with responses from other study participants when writing up reports and conducting analyses. Pursuant to 42 U.S.C. Sec. 3789g, BJS, RAND and NORC will not publish any data identifiable specifically to a private person. Access to NORC’s secure computer systems is password protected and data are protected by access privileges, which are assigned by the appropriate system administrator. All systems are backed up on a regular basis and are kept in a secure storage facility. To protect the identity of the respondents, no identifying information will be kept on the pilot test data file. Identifying information includes the name of the sampled provider, address, and telephone number. Point of contact names will be collected, but no identifying information will be linked to survey responses. The contact information will be added to the roster.

With respect to NORC personnel, all members of the research team are required to sign a pledge of confidentiality. This pledge requires employees to maintain confidentiality of project data and to follow the above procedures when handling confidential information.

Data Analysis and Reporting

NORC will analyze the survey data to explore the overall cost, resources, and time required to obtain high response rates and provide BJS with a technical report describing the lessons learned about the data collection methodology. BJS will make this report publicly available. The report will be focused on technical issues rather than substantive findings and will not identify individual VSPs.

1 http://ovc.ncjrs.gov/vision21/pdfs/Vision21_Report.pdf

2 Dillman, D. A., Smyth, J. D., & Christian, L. M. (2009). Internet, Mail and Mixed-Mode Surveys: The Tailored Design Method (3rd ed.). New Jersey: Wiley.

