Memo to OMB: CCSVS rev

Final CCSVS OMB clearance pilot 032015 with OMB revisions.docx

Generic Clearance for Cognitive, Pilot and Field Studies for Bureau of Justice Statistics Data Collection Activities


OMB: 1121-0339

U.S. Department of Justice

Office of Justice Programs

Bureau of Justice Statistics

Washington, D.C. 20531

MEMORANDUM

To: Shelly Wilkie Martinez

Office of Statistical and Science Policy

Office of Management and Budget

Through: Lynn Murray

Clearance Officer

Justice Management Division

William J. Sabol

BJS Director

From: Michael Planty, Lynn Langton, and Jessica Stroop

Date:

Re: BJS Request for OMB Clearance for Pilot Testing of the Campus Climate Survey Validation Study under the BJS Generic Clearance Agreement (OMB Number 1121-0339)

The Bureau of Justice Statistics (BJS) is requesting clearance to pilot test an instrument that captures data on rape and sexual assault, as well as attitudes and perceptions of safety on campus, among undergraduate university students. This instrumentation effort is part of BJS’s Campus Climate Survey Validation Study (CCSVS), which BJS is developing through a cooperative agreement with Research Triangle Institute (RTI) (Award 2011-NV-CX-K068) with funding from the Office on Violence Against Women (OVW). The primary purpose of the CCSVS is to develop and test instrumentation and methods for conducting a survey of college and university students that measures the ‘climate’ of the school and student body. Climate is defined as a compilation of indicators related to the percentage of students experiencing rape and sexual assault victimization, perpetration of sexual violence, victim reporting and interaction with school officials and law enforcement, sexual harassment, perceptions of school policies and responsiveness, and bystander attitudes and behaviors. The survey instrument has undergone multiple rounds of cognitive testing and review under OMB Generic Clearance Agreement 1121-0339 and has been revised based on that testing. We now seek to move forward with full implementation of the pilot test, to be conducted in March, April, and May of 2015 with a sample of approximately 23,500 undergraduate students at 12 schools, to explore methodological issues related to data collection and content validity. At each school, a random, representative sample of students will be selected to produce key school-level outcomes and performance indicators for the pilot test, including estimates of rape and sexual assault, response rates, item nonresponse, inter-item reliability, and use of incentives to gain cooperation.

The CCSVS is a component of a broader BJS effort to improve estimation of the rate and prevalence of rape and sexual assault (RSA). BJS is currently involved in a number of projects aimed at producing estimates of RSA through different approaches and methodologies, including a redesign of its victimization omnibus survey and the development and piloting of two alternative collection methodologies (the Rape and Sexual Assault Pilot Test). The CCSVS pilot test will help inform BJS’s redesign of the National Crime Victimization Survey (NCVS) instrument, specifically with respect to measuring RSA among persons ages 18 to 24 and using web-based, self-administered survey modes to measure sensitive topics related to criminal victimization and offending. The CCSVS aims to fill important gaps in knowledge about the prevalence of RSA in a potentially high-risk population; the impact of survey context and behaviorally specific questions on prevalence and incidence rates; and victim help-seeking behaviors and reasons for not reporting RSA to formal and informal networks and authority figures. Additionally, the CCSVS instrument embeds multiple survey questions on RSA victimization that will enable the use of Latent Class Analysis (LCA) on the sample of students (at the aggregate level). Using LCA, we hope to identify characteristics of subgroups in the sample and explore rates of potential false positive and false negative responses, or response bias. These results will help inform BJS’s NCVS redesign by showing which subgroups may or may not accurately report RSA and by identifying areas for future research.

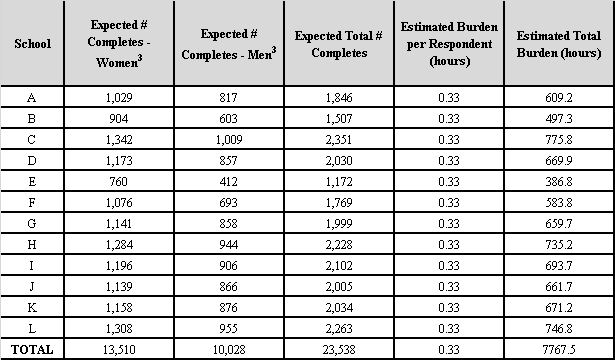

For this clearance, we plan to conduct a web-based pilot test of the full CCSVS instrument with approximately 23,500 undergraduate students at 12 universities, including an incentive experiment at four (4) of the universities to measure the effect of incentives on response rates. We will also conduct a greeting experiment to determine whether more or less personalized greetings in recruitment materials are associated with higher response rates. Together, these tasks will require up to 7,768 burden hours (23,538 students x 0.33 hours each = 7,768 hours). The 20-minute (0.33-hour) estimate per respondent is based on a series of independent time tests of the web-based instrument conducted by RTI, which indicated that 20 minutes is likely to be the average completion time.
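As a quick arithmetic check of the burden figure, the Python snippet below reproduces the total from the per-respondent estimate stated above. For comparison only, it also shows the total that an unrounded 20/60 hour per respondent would give. The function name is illustrative.

```python
def burden_hours(n_respondents: int, hours_per_respondent: float) -> int:
    """Total respondent burden, rounded to the nearest whole hour."""
    return round(n_respondents * hours_per_respondent)

# Figures stated in the memo: 23,538 students at 0.33 hours (about 20 minutes) each.
print(burden_hours(23_538, 0.33))      # 7768
# The same count with an unrounded 20/60 hour per respondent.
print(burden_hours(23_538, 20 / 60))   # 7846
```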

Description and Purpose of Overall Project

In January 2014, the White House Task Force to Protect Students from Sexual Assault was established with the goals of developing recommendations for how colleges and universities could better respond to reports of sexual assault and of increasing the transparency of enforcement efforts. As a statistical agency within the Department of Justice and the agency responsible for the NCVS and other projects that seek to reliably measure rape and sexual assault, BJS agreed to develop and test a survey and methods that can be shared with schools and used to conduct reliable and valid climate surveys.

The White House Task Force has developed a "core" set of items for a climate survey, designed to capture key aspects of the problem of sexual assault for campuses using the best available and promising instruments from the research literature (https://www.notalone.gov/assets/ovw-climate-survey.pdf). The key conceptual areas covered by the instrument were: 1) general climate of the university, 2) perceptions of leadership, policies and reporting, 3) the prevalence of sexual violence, 4) the context around the incidents of sexual violence, 5) bystander confidence and readiness to help, 6) perceptions of sexual assault, 7) rape myth acceptance, and 8) prevalence of interpersonal violence.

The instrument was revised and then cognitively tested through both in-person interviews and crowdsourcing, which helped further refine it for use in the CCSVS pilot. The cognitive testing process helped validate the utility and reliability of items reflecting the conceptual areas outlined in the Task Force report, although the questions themselves differ from those originally developed by the White House Task Force.

The current version of the instrument to be used in the pilot will allow us to produce key university-level estimates for RSA. Unlike the Task Force instrument, the current version will not capture attempted incidents, and rather than focusing on the ‘most serious’ incident, the CCSVS allows respondents to report detailed information on up to three incidents, which will capture approximately 98% of all incidents experienced by victims (based on analysis of data from the 2007 NIJ-sponsored Campus Sexual Assault (CSA) Study; Krebs et al., 2007). Other items capture the number of perpetrators, the month in which the incident occurred, and the victim’s help-seeking and reporting behavior and experiences.

The pilot will also assess methodological issues for consideration in conducting a campus climate assessment, including administration and sampling considerations, considerations related to the sensitive nature of the topic, the challenges of obtaining cooperation from a student population, the effect(s) of providing different incentives to survey respondents, and the potential impact of measurement error on survey estimates. To address the latter and estimate potential false positive and false negative bias, the instrument embeds multiple survey questions on RSA victimization that will enable the use of Latent Class Analysis (LCA) on the sample of students (at the aggregate level).

Through the CCSVS cognitive interview process, we have refined both the instrument and the methodology for the pilot study by learning more about:

What respondents understand the term ‘unwanted sexual contact’ to mean

How to collect information about the specific tactics used to achieve unwanted sexual contact

How to better capture data about intimate partner violence (IPV) experienced by students

How to better capture data about sexual harassment and sexual assault experienced by students

Methodological considerations regarding the use of incentives and how personalized to make recruitment materials and messages

Design and CCSVS instrument

RTI and BJS began developing the CCSVS instrument by examining items in the White House’s ‘toolkit’. These items reflected best practices from the academic literature on measuring RSA as well as climate perceptions of safety, harassment, interpersonal violence, and bystander behaviors among university students. Many of these items were quickly identified as too complicated, burdensome, or unclear, such that they had the potential to lower response rates and yield invalid data. The instrument was therefore revised to focus on the elements of paramount interest, crafted using best practices from the research literature. To the extent possible, we modeled questions on prior studies to reduce burden on respondents and increase validity, particularly for respondents who may have experienced victimization. The CCSVS is also designed, however, to measure students’ perceptions and attitudes related to RSA and safety on campus, which will be based on data from all respondents.

For these reasons, the project team engaged in a series of discussions with a variety of stakeholders and practitioners in the field to assist with developing the instrument. Early and continuing discussions were held with OVW and other agencies and stakeholders to better understand the current state of research on RSA, learn what other collections or projects were in development, and focus efforts and eliminate duplication wherever possible. The team also relied on the advice and guidance of a variety of experts and practitioners, and drafts of the instrument were shared with experts for feedback. These conversations and meetings were instrumental in determining the structure of the survey and ensuring its utility for measuring RSA in a specific high-risk population.

To assess whether and how to include certain topics in the CCSVS instrument, the project team also sought guidance, on an as-needed basis, from individuals identified as key researchers in a range of related content areas, such as perpetration, bystander intervention, rape myths, stalking, and interpersonal violence. We also reviewed BJS’s ongoing work on RSA with Westat to ensure that the key concepts of RSA research were informed by the White House areas of interest. In addition, we ensured that the CCSVS instrument is consistent with BJS practices and can inform the NCVS redesign as it pertains to reliably measuring incidence and prevalence. However, the incidence and prevalence of rape/sexual assault and help-seeking behaviors related to college campus resources are primary concerns unique to this study. There are also key mode differences that require different approaches to measurement (e.g., random-digit dialing (RDD) and audio computer-assisted self-interviewing (ACASI), as opposed to web-based self-administration).

The resulting CCSVS instrument is designed to capture essential information about RSA incidents in detail. This information will be used to describe the scope of the issue among university students and to inform BJS efforts to improve the measurement of RSA and the NCVS. The CCSVS will gather information on the following topics:

Demographics including gender identity and sexual orientation

Student perceptions of the extent to which campus police/security, faculty/staff and administration create a secure environment

Whether undergraduate students experienced any unwanted sexual contact since they started college, in the past 12 months, and during the current academic year

Details related to victimization incidents, including location, number of offenders, and use of alcohol or drugs

Whether the victimization incident was or was not reported, to whom, and reasons for not reporting, as applicable

Whether undergraduate students perpetrated any unwanted sexual contact during the current academic year

Whether undergraduate students experienced any sexual harassment during the current academic year

Whether undergraduate students experienced any intimate partner violence during the current academic year

Student exposure to and perceptions of sexual assault reporting or prevention training

Perceived acceptance of sexual harassment, and confidence in and willingness to help through bystander intervention

Moreover, administering the pilot will allow us to contribute meaningfully to the literature on best practices for measuring RSA. The pilot will test the impact of different incentive amounts on response rates, use gender-specific survey modules, and examine the effects of personalization versus perceived anonymity in the greeting materials accompanying the instrument. These substantive research areas can be built into the existing task without adding burden to respondents.

Pilot Test Procedures

In this memo, BJS is seeking generic clearance specifically to cover the pilot testing activities involved in administering the CCSVS in 12 universities. The pilot will take place during the Spring academic semester of 2015.

Sample Selection of Universities

Fourteen (14) institutions of higher education were initially invited to participate in the pilot test. They were drawn from a national database of institutions using specific selection criteria, as described here. All institutions that enrolled at least 1,176 full-time undergraduate women (the minimum sampling frame size based on our statistical power and precision needs) were identified (n=1,242 institutions). We then stratified these institutions by school size, public vs. private status, and 2-year vs. 4-year status. Selection targets for the number of universities recruited within each stratum are shown below. The intent is not to produce a nationally representative sample of schools, but to recognize and select on key areas of institutional diversity that could inform future collections.

| School Size | Public 4-Year | Private 4-Year | 2-Year |
|---|---|---|---|
| < 5,000 | 1 | 2 | |
| 5,000-9,999 | 2 | 1 | 1 |
| 10,000-19,999 | 2 | 1 | 1 |
| 20,000+ | 2 | 1 | |

The schools within each stratum were then randomly ordered, and selection followed that random order, although some subjective factors, such as geographic region, were also considered to ensure some variability along this dimension. Each selected university was approached for participation in the pilot test using a combination of methods, including an outreach letter from BJS, e-mails, and telephone calls. When a university declined, the next university on the list for that stratum was approached. A total of 24 schools were ultimately invited to participate in the pilot test, and 12 schools agreed to participate.
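The eligibility filter, stratification, and ordered-replacement recruitment described above can be sketched in Python. This is a minimal illustration under stated assumptions: the field names (`enrollment`, `public`, `two_year`, `ft_undergrad_women`) are hypothetical, the memo does not say which enrollment count defines "school size," `agrees` is a hypothetical callback standing in for the outcome of outreach, and the geographic-region judgment the memo mentions is not modeled.

```python
import random

MIN_FT_UNDERGRAD_WOMEN = 1_176  # minimum frame size stated in the memo

def size_class(enrollment: int) -> str:
    """Map an enrollment count onto the size bands in the selection-target table."""
    if enrollment < 5_000:
        return "<5,000"
    if enrollment < 10_000:
        return "5,000-9,999"
    if enrollment < 20_000:
        return "10,000-19,999"
    return "20,000+"

def stratum(school: dict) -> tuple[str, str]:
    """Stratum = (size band, level/control); 2-year schools are not split by control."""
    if school["two_year"]:
        level = "2-Year"
    else:
        level = "Public 4-Year" if school["public"] else "Private 4-Year"
    return size_class(school["enrollment"]), level

def recruit(schools, targets, agrees, seed=2015):
    """Keep eligible schools, randomly order each stratum, and approach schools in that
    order, moving to the next school after each refusal, until the stratum's target is
    met or its list runs out. Returns (recruited schools by stratum, all schools invited)."""
    frame: dict[tuple[str, str], list[str]] = {}
    for s in schools:
        if s["ft_undergrad_women"] >= MIN_FT_UNDERGRAD_WOMEN:
            frame.setdefault(stratum(s), []).append(s["name"])

    rng = random.Random(seed)  # fixed seed makes the random order reproducible
    recruited: dict[tuple[str, str], list[str]] = {}
    invited: list[str] = []
    for key, target in targets.items():
        order = sorted(frame.get(key, []))
        rng.shuffle(order)
        recruited[key] = []
        for name in order:
            if len(recruited[key]) >= target:
                break
            invited.append(name)  # in practice: outreach letter, e-mails, and calls
            if agrees(name):
                recruited[key].append(name)
    return recruited, invited
```

Sorting each stratum before shuffling keeps the order independent of how the input list happened to be arranged, so the same seed always yields the same approach sequence.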

Student Selection

Participating institutions are asked to upload a roster of all degree-seeking, full- and part-time undergraduate students who are at least 18 years of age. The rosters will be encrypted and uploaded onto an FTP site maintained by RTI, which encrypts the file during transmission. The rosters contain the following information:

Unique Student ID#

First name

Last name

Sex/gender

Birth date (or current age in years)

Race/ethnicity

Year of study (i.e., 1st year undergraduate, 2nd year undergraduate, 3rd year undergraduate, 4th year undergraduate, or 5th or more year undergraduate)

Degree-seeking status

Part-time/full-time status

Email address(es)

Campus/local mailing address

In addition, optional data elements may also be included:

Cell phone number

Transfer status (yes/no)

Major

Highest SAT score

Highest ACT score

GPA

Educational Testing Service (ETS) code or CEEB code

Whether living on or off campus

(If on campus) dorm

Whether studying abroad

The roster data will be used by RTI to recruit students for the study, send follow-up reminders, and conduct a nonresponse bias analysis to determine whether the survey data need to be weighted because certain types of students were more or less likely to participate. Nonresponse bias analyses will be conducted at the school level and will consist of two components: (1) a comparison of respondents to the total population, and (2) if victimization rates vary based on when during the data collection period a student responds, an assessment of whether the characteristics of early responders differ from those of late responders. For the first analysis, RTI will compute Cohen’s effect size (sometimes referred to as Cohen’s d) for each student characteristic provided on the roster. An effect size measures the strength of association for a phenomenon, in this case the association between the distribution of respondents and that of the population. Cohen indicated that an effect size is “small” if it is around 0.2, “medium” if it is around 0.5, and “large” if it is around 0.8. A “medium” or “large” effect size is an indication of potential bias.

For the second analysis, RTI will assess the distribution of respondents reporting being a victim over time. If the proportion of respondents who report a victimization changes over time (e.g., victims tend to complete the survey early in the data collection period and nonvictims late), then the victimization rate for a school may depend on the length of the data collection period. If this is the case for a particular school, we will use the roster characteristics to see whether a particular type of student is more likely to respond early rather than late, and whether being that type of student is correlated with reporting being a victim. If such a correlation exists and students with the identified characteristics make up a disproportionate share of the respondent sample, then there is potential for bias in the victimization estimates.

In both cases, weight adjustment can be used to correct for the bias. For example, if we determine that a particular characteristic is correlated with both victimization and being an early or late responder, we can include it in the weighting model to account for any disproportionate response patterns at that school. If the weighted and unweighted estimates then differ, that indicates the adjustment has corrected for at least some of the bias associated with that characteristic.
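The two school-level checks and the weighting step described above can be sketched in Python. This is a minimal sketch under stated assumptions, not RTI's procedure: it scales the standardized difference by the population standard deviation (one common convention; the memo does not specify the denominator), applies Cohen's benchmarks as hard cutoffs, splits early and late responders at a hypothetical `cutoff_day`, and uses simple post-stratification on a single characteristic in place of whatever weighting model is actually fitted.

```python
import math
import statistics

def cohens_d(respondent_values, population_values):
    """Standardized difference between the respondent mean and the roster (population)
    mean of one characteristic, scaled by the population standard deviation.
    For a yes/no characteristic coded 0/1, the means are proportions."""
    sd = statistics.pstdev(population_values)
    if sd == 0:
        return 0.0
    diff = statistics.fmean(respondent_values) - statistics.fmean(population_values)
    return diff / sd

def effect_label(d):
    """Cohen's benchmarks quoted in the memo, applied as cutoffs (an assumption)."""
    d = abs(d)
    if d >= 0.8:
        return "large"
    if d >= 0.5:
        return "medium"
    if d >= 0.2:
        return "small"
    return "negligible"

def early_late_victimization(responses, cutoff_day):
    """responses: (day_completed, reported_victimization) pairs.
    Returns the early rate, the late rate, and a pooled two-proportion z statistic."""
    early = [bool(v) for day, v in responses if day <= cutoff_day]
    late = [bool(v) for day, v in responses if day > cutoff_day]
    n1, n2 = len(early), len(late)
    p1, p2 = sum(early) / n1, sum(late) / n2
    pooled = (sum(early) + sum(late)) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se if se > 0 else 0.0
    return p1, p2, z

def poststratification_weights(population_counts, respondent_counts):
    """Weight per cell = population count / respondent count, so weighted respondents
    reproduce the roster distribution of the chosen characteristic."""
    return {cell: population_counts[cell] / respondent_counts[cell]
            for cell in population_counts if respondent_counts.get(cell)}
```

For example, if half the roster is a given type of student (coded 1) but three quarters of respondents are, `cohens_d` returns 0.5, which `effect_label` classifies as "medium."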

In addition, for students who take the survey, their survey data may be combined with some of the roster data to facilitate analyses that explore characteristics associated with sexual assault (e.g., whether transfer students are more likely to experience sexual assault). By using administrative data for this purpose, we can avoid asking survey respondents for this information.

Data Transfer Agreements will be executed between each participating university and RTI. These agreements outline procedures for the secure transmission and storage of the data, the specific use of the data by RTI, and steps for disposing of the roster data at the end of the study.

Once the roster is received from an institution, RTI will randomly select the number of undergraduate students needed to produce valid estimates of sexual assault prevalence (for females) and campus climate estimates (for males). Samples will be selected from two independent target populations: male undergraduate students and female undergraduate students. Both frames will be stratified by year of study and race/ethnicity to ensure adequate sample representation across all years of study and racial and ethnic groups. (Note that some students, such as those taking only online classes or those not seeking a degree, may be excluded from the sampling frame.) The number of male and female respondents needed to achieve the targeted level of precision will be calculated for each participating school.
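Selecting independent, stratified samples from the female and male frames can be sketched in Python as follows. The memo does not state how sample is allocated across year-by-race strata, so this sketch assumes proportional allocation with largest-remainder rounding. The roster field names (`sex`, `year`, `race`, `degree_seeking`, `online_only`) are hypothetical.

```python
import random
from collections import defaultdict

def stratified_sample(roster, n_by_sex, seed=0):
    """Draw independent samples from the female and male frames, stratified by year of
    study and race/ethnicity, using proportional allocation (an assumption)."""
    rng = random.Random(seed)
    sample = []
    for sex, n_target in n_by_sex.items():
        # Sex-specific frame, excluding students outside the target population.
        frame = [s for s in roster
                 if s["sex"] == sex and s["degree_seeking"] and not s["online_only"]]
        if not frame:
            continue
        strata = defaultdict(list)
        for s in frame:
            strata[(s["year"], s["race"])].append(s)

        n = min(n_target, len(frame))
        # Proportional allocation; largest-remainder rounding keeps the total equal to n.
        quotas = {k: n * len(v) / len(frame) for k, v in strata.items()}
        alloc = {k: int(q) for k, q in quotas.items()}
        shortfall = max(n - sum(alloc.values()), 0)
        by_remainder = sorted(quotas, key=lambda k: quotas[k] - alloc[k], reverse=True)
        for k in by_remainder[:shortfall]:
            alloc[k] += 1

        # Simple random sample without replacement within each stratum.
        for k, members in strata.items():
            sample.extend(rng.sample(members, min(alloc[k], len(members))))
    return sample
```

Proportional allocation is only one option. A design that oversamples small racial or ethnic groups would replace the `quotas` line with a different allocation rule.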

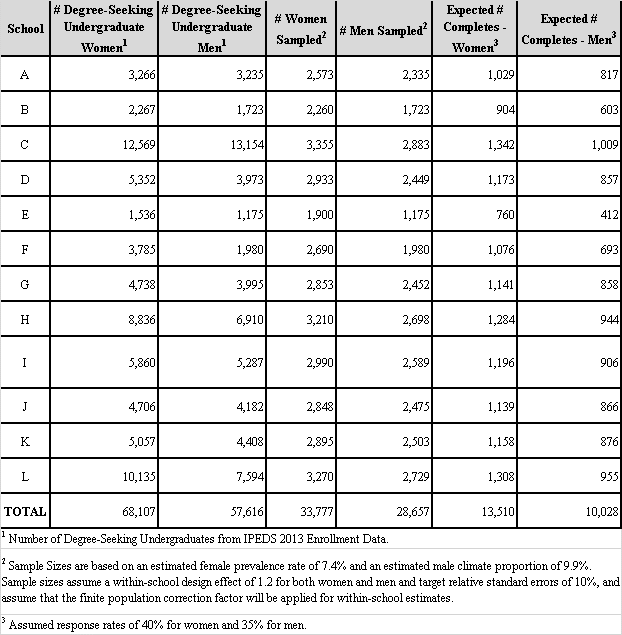

The specific number of students selected will vary with the size of the institution but will be based on the number of completed surveys needed from women and men to produce reliable estimates of sexual assault prevalence and campus climate attitudinal measures for a given institution. For example, the table below presents sample size information for the 12 participating schools, assuming a relative standard error (RSE) of 10% and a sexual assault prevalence rate of 7.4% among undergraduate women, with campus climate described using data collected from both men and women.

Table 1
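Under simple random sampling, the relative standard error of an estimated prevalence p from n completed surveys is RSE = sqrt(p(1 - p)/n) / p, so the number of completes needed for a target RSE is n = (1 - p) / (p x RSE^2). The Python sketch below applies that textbook formula to the 10% RSE and 7.4% prevalence stated above. It assumes no design effect and no finite population correction, and the response rate used to convert completes into students selected is purely hypothetical, so the result is not expected to reproduce the table.

```python
import math

def required_completes(prevalence: float, target_rse: float) -> int:
    """Completed surveys needed so that sqrt(p(1-p)/n) / p <= target_rse,
    assuming simple random sampling with no design effect or finite population correction."""
    return math.ceil((1 - prevalence) / (prevalence * target_rse ** 2))

def students_to_select(completes: int, expected_response_rate: float) -> int:
    """Inflate the completes needed by an assumed response rate."""
    return math.ceil(completes / expected_response_rate)

n_women = required_completes(0.074, 0.10)
print(n_women)                             # 1252
print(students_to_select(n_women, 0.50))   # hypothetical 50% response rate: 2504
```

A stratified design or unequal weights would typically add a design effect multiplier to `required_completes`, and a small roster would call for a finite population correction.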

Participant Recruitment Procedures

Prior to fielding the survey, the president or chancellor of each participating university will be asked to e-mail all undergraduate students a message describing the study and encouraging their support. A letter of support from each school will also be posted on the survey website for interested students.

Sampled students will be assigned a Case Identification Number and a Survey Access Code (both of which will be added to the sampling frame dataset). Sampled students will be recruited via an introductory e-mail sent to the e-mail address provided on the roster. The recruitment messages are shown in Attachment A. The recruitment e-mail will provide the student with their Survey Access Code and include a hyperlink to the study website. The e-mail will also cover the following points:

The student has been randomly selected to participate in a 20-minute, web-based survey.

The study is being conducted by RTI, an independent, non-profit research organization, with the cooperation of their university.

Participation is completely voluntary.

Students who complete the survey will receive an electronic gift card worth $10, $25, or $40, depending on the incentive condition to which they have been assigned.

Answers to survey questions will be kept completely confidential. We will not link their identity to their survey answers.
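One way to generate the Case Identification Numbers and Survey Access Codes described above is sketched below in Python, using the standard `secrets` module so that codes are unpredictable and carry no roster information. The `C000001` case-number format, the 8-character code length, and the reduced alphabet are hypothetical choices, not RTI's specification.

```python
import secrets
import string

# Drop look-alike characters (0/O, 1/I/L) so codes are easy to retype; an assumption.
ALPHABET = "".join(c for c in string.ascii_uppercase + string.digits if c not in "0O1IL")

def assign_ids_and_codes(student_ids, code_length=8):
    """Give each sampled student a sequential Case Identification Number and a random,
    unique Survey Access Code. Neither value is derived from the student's roster data."""
    used = set()
    assignments = {}
    for n, student_id in enumerate(student_ids, start=1):
        code = "".join(secrets.choice(ALPHABET) for _ in range(code_length))
        while code in used:  # redraw on the (rare) collision
            code = "".join(secrets.choice(ALPHABET) for _ in range(code_length))
        used.add(code)
        assignments[student_id] = (f"C{n:06d}", code)
    return assignments
```

Because both values are generated independently of names, e-mail addresses, and student ID numbers, neither reveals a student's identity without access to the sampling frame file.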

Nonrespondents (i.e., students whose Survey Access Code has not been used to access the survey) will be sent follow-up e-mails (see Attachment B). We will follow up via e-mail and text message on a weekly basis for approximately 6 weeks. Nonresponse follow-up materials will include the Survey Access Code and a hyperlink to the survey website and will repeat the core points made in the recruitment e-mail, along with stronger language encouraging participation. When only a few weeks of data collection remain, nonrespondents will be told how much time they have left to complete the survey.
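Identifying nonrespondents amounts to a set difference between the Survey Access Codes assigned at sampling and the codes already used on the survey site. The Python sketch below shows that step, a weekly follow-up schedule of about six reminders, and a count of weeks remaining for the final reminders. The example codes and the start date are hypothetical.

```python
from datetime import date, timedelta

def nonrespondents(assigned_codes, used_codes):
    """Access codes that were assigned but never used to open the survey."""
    return sorted(set(assigned_codes) - set(used_codes))

def reminder_dates(invitation_date, weeks=6):
    """One follow-up per week after the initial invitation."""
    return [invitation_date + timedelta(weeks=k) for k in range(1, weeks + 1)]

def weeks_remaining(today, close_date):
    """Whole weeks left before data collection closes, for the final-weeks reminders."""
    return max((close_date - today).days // 7, 0)

print(nonrespondents(["K7QX", "M2RT", "P9WZ"], ["M2RT"]))  # ['K7QX', 'P9WZ']
print(reminder_dates(date(2015, 3, 2))[-1])                 # 2015-04-13
```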

Incentive Experiment Procedures

There will be 12 schools participating in the pilot test. At 8 schools, each student will receive a $25 incentive for completing the survey. At the four largest schools, we will conduct a tiered incentive experiment: at two schools, half of the students will receive $10 and half will receive $25; at the other two schools, half will receive $25 and half will receive $40. Because the perceived value of the payment amounts may differ across schools, the experiment requires that incentive amounts vary within some of the schools. We propose conducting the experiment at the four largest schools to minimize the likelihood that sampled students will discover, by talking with other sampled students, that incentive amounts vary.
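The incentive design can be expressed as a random within-school assignment, sketched below in Python. The school and student identifiers are hypothetical, and the memo does not say how an odd number of sampled students is split. This sketch gives the extra student to the higher amount.

```python
import random

def assign_incentives(students_by_school, experiment_arms, default_amount=25, seed=2015):
    """Return {student_id: dollar amount}.

    experiment_arms maps each experimental school to its (lower, higher) pair, e.g.
    (10, 25) or (25, 40); every other school gets default_amount for all students.
    Within an experimental school, a random half receives each amount."""
    rng = random.Random(seed)
    amounts = {}
    for school, students in students_by_school.items():
        if school not in experiment_arms:
            amounts.update({s: default_amount for s in students})
            continue
        lower, higher = experiment_arms[school]
        shuffled = list(students)
        rng.shuffle(shuffled)
        half = len(shuffled) // 2  # with an odd count, the higher arm gets one extra student
        amounts.update({s: lower for s in shuffled[:half]})
        amounts.update({s: higher for s in shuffled[half:]})
    return amounts
```

Randomizing within each experimental school, rather than assigning whole schools to one amount, keeps school-level differences in the perceived value of the payment from being confounded with the incentive effect.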

Description of Survey Administration Procedures

Once respondents log on to take the survey and indicate their consent, they will be taken to the first survey question. The survey, shown in Attachment E, will take approximately 20 minutes, on average, to complete. The survey covers the following sections:

Basic demographics

School connectedness, general climate, and perceptions about campus police, faculty, and administrators

Sexual harassment victimization and sexual coercion

Sexual assault victimization gate questions

Incident-specific follow-up (contextual details about the incident, reporting/nonreporting experiences, perceived impact of the incident on academic performance)

Additional sexual assault victimization gate questions (for the latent class analysis)

Intimate partner violence

Sexual harassment perpetration

Sexual assault perpetration

Perceptions about school climate for sexual harassment/assault

Participation in sexual assault prevention efforts

Perceptions of university procedures regarding sexual assault reports

Awareness of university procedures and resources for sexual assault

Perceived tolerance for sexual harassment and sexual assault among the campus community

Individual tolerance for sexual harassment and sexual assault

Bystander behaviors

Additional demographics

The survey will be programmed in Voxco Acuity4 Survey. It will be designed to be easily administered on mobile devices as well as tablets and computers. Because of the voluntary nature of the survey, students will not be forced to enter a response to each question prior to moving forward in the survey. In addition, students can stop and restart the survey whenever they wish.

After completing the last survey question, respondents will have the opportunity to view a list of national and local student support services and will receive instructions on how to claim their electronic gift card.

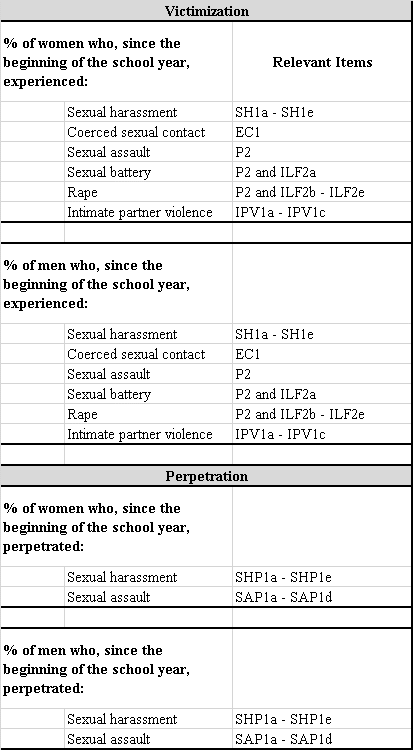

The table below presents the key estimates that can be produced from data collected with the survey instrument, along with the survey items used to compute them. We anticipate, however, having limited statistical power to produce estimates of male victimization and of male or female perpetration. In addition to prevalence estimates, we will be able to produce incidence rates for sexual assault, sexual battery, and rape victimization.

Table 2
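The difference between the prevalence estimates and the incidence rates mentioned above can be illustrated with a short Python sketch. It assumes that each respondent has a count of incidents for the reference period and an optional survey weight. The data are hypothetical, and the memo does not specify how incident counts or weights are derived from the survey items.

```python
def _weights(n, weights):
    """Use the supplied survey weights, or weight every respondent equally."""
    return list(weights) if weights is not None else [1.0] * n

def prevalence(incident_counts, weights=None):
    """Weighted share of respondents with at least one incident in the reference period."""
    w = _weights(len(incident_counts), weights)
    return sum(wi for wi, c in zip(w, incident_counts) if c > 0) / sum(w)

def incidence_rate(incident_counts, weights=None, per=1000):
    """Weighted number of incidents per `per` respondents; repeat incidents all count."""
    w = _weights(len(incident_counts), weights)
    return per * sum(wi * c for wi, c in zip(w, incident_counts)) / sum(w)

counts = [0, 0, 1, 3, 0]          # hypothetical incident counts for five respondents
print(prevalence(counts))          # 0.4
print(incidence_rate(counts))      # 800.0
```

Prevalence counts each victim once, while the incidence rate counts every incident, so a respondent with repeat victimization raises the incidence rate more than the prevalence estimate.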

Burden Hours for Pilot Testing

The estimated burden for accessing the web-based survey module and completing the survey is about 20 minutes. The burden associated with these activities is presented in the following table.

Table 3

Protection of Human Subjects

There is some risk of emotional distress for respondents given the sensitive and personal nature of the questions. However, appropriate safeguards are in place, and the study has been reviewed and approved by RTI’s Institutional Review Board (IRB). Before agreeing to participate, students will be informed about the nature of the survey and told that some questions might upset them. In addition, after they finish the survey, they will be able to view a list of national, local, and university resources, such as the National Domestic Violence Hotline and the Rape, Abuse & Incest National Network (RAINN).

Informed Consent, Data Confidentiality and Data Security

Informed Consent

All students who are recruited to participate will be informed—on the survey home page and the consent screen—about the nature of the survey questions prior to deciding whether or not to proceed with the survey. The consent screen will indicate that respondents can skip any questions they want, or discontinue their participation at any time. It will also advise them to take the survey in a private setting. The final page of the survey will provide local and national hotline/helpline telephone numbers that they can call should they need assistance. Many of these resources are staffed 24 hours a day, 7 days a week, and the trained staff who respond can put people who are in distress in touch with local service providers and emergency responders. For the universities selected for the pilot test, lists specific to the geographic locations have been developed.

Data Confidentiality and Security

Data security procedures designed to reduce the risk of a breach of confidentiality are discussed below. In addition, staff members who have access to the sampling frame files and/or survey data will be required to sign a pledge of confidentiality.

Rosters submitted by universities will be transmitted securely (i.e., by encrypting the files and uploading them to an FTP site maintained by RTI that uses Secure Sockets Layer, or SSL, encryption).

The roster files will be used during the study period to select students for participation, follow up with nonrespondents, and conduct a nonresponse bias analysis (and weighting adjustments). As noted in the informed consent bullets on the survey homepage, for students who take the survey, some of the sampling frame data elements (e.g., GPA, transfer status) may be merged with the survey data to facilitate analyses of characteristics associated with sexual assault. At the conclusion of the study, the sampling frame data will be destroyed (except for any data that have been merged onto the survey data file).

The Web survey will be hosted on the Acuity4Survey platform, which is run by a company called Voxco (http://www.acuity4survey.com/). Data will be housed in Voxco’s cloud infrastructure, which has passed an SSAE-16 (SAS-70) data security audit. RTI ITS has reviewed Voxco’s data security approaches and has approved RTI staff to use the infrastructure on projects. ITS also maintains a Voxco administrative login so that it can audit project data security procedures. Only RTI project team members will have access to survey response data, and only a small number of RTI staff members will be able to download survey response data onto a project share drive. This tight access control is a further step to mitigate risk.

No personally identifying information (PII) about students will be included in the survey response file exported from Voxco. The Survey Access Code and the Case Identification Number (which is automatically generated for sampling and is the primary number used to identify an individual in the sampling frame file) will appear in both the sampling frame file and the survey data file, and the Case Identification Number is the only link between the two files. Neither the Survey Access Code nor the Case Identification Number is PII.

It is necessary to be able to use the Case Identification Number to link the sampling frame data with the survey data for the following reasons: 1) to ensure that access to the survey is restricted to those who have been selected (i.e., valid Survey Access Codes from the sampling frame file will be preloaded into the survey website so that they can be validated automatically when students enter them) and that each student completes the survey only once; 2) to allow students to complete the survey in more than one sitting if needed (i.e., they can stop and restart the survey, which would not be possible without a Survey Access Code); 3) to allow RTI to identify nonrespondents (i.e., students in the sampling frame whose Survey Access Codes have not yet been entered on the survey website) for follow-up; and 4) to allow us, once data collection is complete, to combine some sampling frame data elements (e.g., GPA, transfer status) with the survey data to facilitate analyses of characteristics associated with campus climate and sexual assault.
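The first two reasons above, restricting access to preloaded codes with a single completion per code and allowing students to stop and resume, can be sketched as a small state machine in Python. This is a hypothetical illustration of the logic only. The class and method names are invented, and the sketch does not describe how the Voxco Acuity4Survey platform implements access control.

```python
class SurveyAccess:
    """Hypothetical sketch of Survey Access Code handling on the survey site."""

    def __init__(self, preloaded_codes):
        # Only codes preloaded from the sampling frame file are accepted.
        self.status = {code: "not_started" for code in preloaded_codes}
        self.saved = {code: {} for code in preloaded_codes}

    def start_or_resume(self, code):
        """Validate a code and return any answers saved in an earlier sitting."""
        state = self.status.get(code)
        if state is None:
            raise PermissionError("not a valid Survey Access Code")
        if state == "complete":
            raise PermissionError("this code has already been used to complete the survey")
        self.status[code] = "in_progress"
        return dict(self.saved[code])

    def save_answer(self, code, item, value):
        """Store one answer; items may be left blank because responses are not forced."""
        if self.status.get(code) != "in_progress":
            raise PermissionError("no open session for this code")
        self.saved[code][item] = value

    def complete(self, code):
        """Mark the survey finished so the code cannot be reused."""
        if self.status.get(code) != "in_progress":
            raise PermissionError("no open session for this code")
        self.status[code] = "complete"
```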

Throughout data collection, a member of the project staff will export the data from the survey and save them directly onto the project shared drive. At this point, a limited number of sampling frame data elements may be combined with the survey data (using the Case Identification Number), but no identifiers from the sampling frame file will ever be merged with the survey data file. Only RTI project staff directly involved in programming, sampling, recruitment/nonresponse follow-up, or analysis will have access to the survey data or sampling frame files, and these staff will sign a confidentiality agreement. At the end of the study, the survey data file will be archived for storage at RTI for five years, and all sampling frame files that contain PII will be deleted. A de-identified data file will be prepared and sent to BJS in the software package of its choosing (e.g., SPSS, SAS, or Stata).
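The merge step described above can be sketched in Python as an allow-list join on the Case Identification Number. Only the named non-identifying roster fields are attached, so identifiers cannot reach the survey file by accident. The field names (`case_id`, `gpa`, `transfer_status`) are hypothetical labels for the memo's examples.

```python
# Roster fields allowed onto the survey file; GPA and transfer status are the memo's
# examples. Any other roster field (names, e-mail, addresses, phone, birth date) is left out.
MERGEABLE_FIELDS = frozenset({"gpa", "transfer_status"})

def merge_frame_elements(survey_rows, frame_rows, allowed=MERGEABLE_FIELDS):
    """Attach allow-listed sampling frame fields to survey rows by Case Identification Number."""
    frame_by_case = {row["case_id"]: row for row in frame_rows}
    merged = []
    for row in survey_rows:
        frame_row = frame_by_case.get(row["case_id"], {})
        extras = {k: v for k, v in frame_row.items() if k in allowed}
        merged.append({**row, **extras})
    return merged
```

An allow-list is used rather than a list of fields to drop, so a new identifying column added to a roster later is excluded by default.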

BJS’s pledge of confidentiality is based on its governing statutes. All information obtained during this study will be treated as confidential and will be used only to analyze study results. The data are collected under federal statute (Title 42 USC, Sections 3735 and 3789g) and are protected from any request by a law enforcement agency or any other agency, organization, or individual. All answers will be combined with responses from other study participants when writing reports and conducting analyses. Pursuant to 42 U.S.C. Sec. 3789g, neither BJS nor RTI will publish any data identifiable specifically to a private person.

Reporting

Upon completion of all pilot testing, BJS and RTI will publicly release a comprehensive report that will not identify the participating schools. The report will provide detailed information on the testing methodology, basic characteristics of the respondents, aggregate and university-level estimates of the rate and prevalence of RSA, and recommendations for further research. Participating universities will not receive any student-level data; instead, they may receive aggregated, university-specific reports if they so choose.

References:

White House Task Force to Protect Students from Sexual Assault (U.S.). (2014). Not alone: The first report of the White House Task Force to Protect Students From Sexual Assault. http://purl.fdlp.gov/GPO/gpo48344.

Krebs, C. P., Lindquist, C. H., Warner, T. D., Fisher, B. S., & Martin, S. L. (2007, October). The Campus Sexual Assault (CSA) Study: Final report. National Institute of Justice, U.S. Department of Justice.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | September 15, 2005 |

| Author | Jessica Stroop, BJS |

| File Modified | 0000-00-00 |

| File Created | 2021-01-25 |
