2020_OMB_packet_Part_B_(08-13-13)

2013 Census Test

OMB: 0607-0975

SUPPORTING STATEMENT

U.S. Department of Commerce

U.S. Census Bureau

2013 Census Test

OMB Control Number 0607-<XXXX>

Part B – Collections of Information Employing Statistical Methods

Question 1. Universe and Respondent Selection

General

The Census Bureau will conduct the 2013 Census Test on a sample of 2,000 housing units randomly assigned to four experimental treatments:

(Treatment 1) use of administrative records to reduce workload and a fixed contact strategy;

(Treatment 2) no use of administrative records to reduce workload and a fixed contact strategy;

(Treatment 3) use of administrative records to reduce workload and an adaptive contact strategy;

(Treatment 4) no use of administrative records to reduce workload (records used only to prioritize cases) and an adaptive contact strategy.

Figure 1. 2013 Census Test Treatment Design

| | Administrative Record Group | No Use of Administrative Records Group |
|---|---|---|
| Fixed Contact Group | TREATMENT 1 (N=500) | TREATMENT 2 (N=500) |
| Adaptive Design Contact Group | TREATMENT 3 (N=500) | TREATMENT 4 (N=500) |

A self-response component (e.g., a paper or email questionnaire) will not be available for this test because of the need to mount this research expeditiously and to conserve resources. A self-response strategy to reduce the nonresponse followup (NRFU) workload will be tested in subsequent decennial census tests. Instead, a NRFU data collection environment will be simulated in this study using information from the 2010 census. Specifically, the sample will consist of housing units that did not mail back a self-response form during the 2010 decennial census, identified from the 2010 census NRFU universe.

Target Universe

For this exploratory research, the target population will be selected from a universe of all block groups in the Philadelphia Metropolitan Statistical Area. Philadelphia was chosen due to current Census interviewer availability at the time of the test and the operational and cost advantages provided by working with this local Census regional office. The research is not concerned with external validity of the findings: It will not produce population estimates at any level of geography. Rather, the test will examine operational issues and, secondarily, cost and data quality differences among treatment groups. Due to a focus on internal validity, it is appropriate to select a convenient metropolitan area.

Sample Selection

Block groups with more than five percent of the population in group quarters will be removed from the universe because this research is focused on individual housing units. Block groups in which more than 10 percent of households have no one over age 14 who speaks English ‘very well’ or better will also be removed. This small study will have fewer than 20 interviewers, and cases will not be reassigned between adaptive and fixed contact strategies, which limits the availability of interviewers to conduct interviews in non-English languages.

From the remaining block groups, an iterative process will be followed. One block group will be randomly selected. A similarity score between this block group and all other eligible block groups will be calculated in order to produce “pairs” of block groups, one assigned to the adaptive contact treatment and the other to the fixed contact strategy. The similarity score is based on a weighted combination of the absolute differences between block groups for the following ten block group variables found in the Planning Database that relate to the likelihood of contacting the household:

Percent vacant

Average number of persons per household

Percent owner occupied

Percent single unit

Percent multi-unit (ten or more)

Percent NRFU 2010 cases

Percent black

Percent Hispanic

Percent under age 18

Percent over age 64

The block group with the highest similarity score will be paired with the randomly sampled block group. If the similarity score is above a certain threshold, this pair will be included in the sample and removed from the remaining block group universe. All 2010 NRFU cases from each block group will be included in the sample.

This strategy will be repeated until the sample contains more than 2,000 housing units. MAFIDs that are (1) in the American Community Survey (ACS) for 2012 and 2013 or (2) currently in a Census survey will then be removed.
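The iterative pairing procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the Census Bureau's implementation: the statement does not give the variable weights, the threshold, or the exact form of the score, so the sketch assumes similarity is the negative of the weighted absolute difference (higher means more alike) and that a seed block group with no acceptable match is simply dropped. All field names and demo values are hypothetical.

```python
import random

def similarity(a, b, weights):
    """Assumed score: negative weighted sum of absolute differences."""
    return -sum(w * abs(a["vars"][k] - b["vars"][k]) for k, w in weights.items())

def pair_block_groups(block_groups, weights, threshold, target_units, rng):
    """Pair block groups until the sample exceeds target_units housing units."""
    pool = list(block_groups)
    pairs, n_units = [], 0
    while n_units <= target_units and len(pool) >= 2:
        # Randomly select one block group as the seed.
        seed = pool.pop(rng.randrange(len(pool)))
        # Find the most similar remaining block group.
        best = max(pool, key=lambda bg: similarity(seed, bg, weights))
        if similarity(seed, best, weights) > threshold:
            pool.remove(best)
            pairs.append((seed, best))
            # All 2010 NRFU cases in both block groups enter the sample.
            n_units += seed["nrfu_cases"] + best["nrfu_cases"]
        # Assumption: an unmatched seed is not returned to the pool.
    return pairs, n_units

# Hypothetical demo data with two of the ten Planning Database variables.
demo = [
    {"id": i, "nrfu_cases": 40, "vars": {"pct_vacant": v, "pct_owner": o}}
    for i, (v, o) in enumerate([(5, 60), (6, 61), (30, 20), (31, 22)])
]
demo_weights = {"pct_vacant": 1.0, "pct_owner": 1.0}
pairs, n_units = pair_block_groups(
    demo, demo_weights, threshold=-5.0, target_units=100, rng=random.Random(0)
)
print([(a["id"], b["id"]) for a, b in pairs], n_units)
```

In the demo, the two low-vacancy block groups pair with each other and the two high-vacancy block groups pair with each other, whichever block group is drawn first.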

Treatment Assignment

For each pair of block groups, one will be assigned to the fixed contact strategy and the other to the adaptive contact strategy treatment in a way that balances sample size between the two groups. Within each sampled block group, housing units will be randomly assigned to:

Treatment 1 or 2 (if in a fixed contact strategy block group) or

Treatment 3 or 4 (if in an adaptive contact strategy block group).
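A short sketch of this two-stage assignment follows. The statement says only that block groups are assigned "in a way that balances sample size" and that housing units are "randomly assigned"; the greedy balancing rule and the even split within each block group used here are assumptions, and the data layout is hypothetical.

```python
import random

def assign_treatments(pairs, rng):
    """Return a dict mapping each housing unit ID to a treatment (1 to 4)."""
    assignment = {}
    n_fixed = n_adaptive = 0
    for bg_a, bg_b in pairs:
        # Assumed balancing rule: the larger block group of the pair goes to
        # whichever contact strategy currently has fewer housing units.
        big, small = sorted((bg_a, bg_b), key=lambda bg: len(bg["units"]), reverse=True)
        if n_fixed <= n_adaptive:
            fixed, adaptive = big, small
        else:
            fixed, adaptive = small, big
        n_fixed += len(fixed["units"])
        n_adaptive += len(adaptive["units"])
        # Within each block group, shuffle units and split them between the
        # administrative-records arm and the no-administrative-records arm.
        for bg, arms in ((fixed, (1, 2)), (adaptive, (3, 4))):
            units = list(bg["units"])
            rng.shuffle(units)
            half = len(units) // 2
            for i, unit in enumerate(units):
                assignment[unit] = arms[0] if i < half else arms[1]
    return assignment

# Hypothetical pairs of block groups, each listing its housing unit IDs.
demo_pairs = [
    ({"units": ["a1", "a2", "a3", "a4"]}, {"units": ["b1", "b2"]}),
    ({"units": ["c1", "c2"]}, {"units": ["d1", "d2", "d3", "d4"]}),
]
assignment = assign_treatments(demo_pairs, random.Random(0))
print(sorted(assignment.items()))
```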

Test Goals

The primary goal of the test is to pilot operational procedures using administrative records and different contact strategies for conducting NRFU data collection. The research is conducted in a simulated NRFU data collection environment.

Operational efficiency will be measured across the entire test. The following components of operational efficiency will be tested, namely the ability:

to remove cases using administrative records in order to reduce the NRFU workload,

to assign/reassign cases when workload has been reduced using administrative records,

to staff effectively a field operation after cases are removed by CATI,

of interviewers to follow instructions provided in case management,

of interviewers to perform daily manual syncs between their laptops and a central server,

of a model and set of business rules to run and produce information on a daily basis, and

of a centralized paradata repository to receive paradata daily from several systems and transmit data daily to an operations control system.

Secondary goals concern the cost and data quality differences between treatments. One analysis will compare operational efficiency, cost, and data quality between treatments that use and do not use administrative records to reduce the NRFU workload. Another analysis will compare operational efficiency, cost, and data quality between treatments that use an adaptive contact strategy versus a fixed contact strategy.

Cost will be operationalized in four ways:

overall cost, which includes the costs of CAPI and CATI interviewers,

average cost per case,

average cost per contact attempt, and

average case completion per contact attempt
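As an illustration, the four cost measures listed above could be computed for one treatment group as shown below. The function name, argument names, and example figures are hypothetical; the statement does not define how the underlying cost totals are compiled.

```python
def cost_measures(capi_cost, cati_cost, n_cases, n_attempts, n_completed):
    """Compute the four cost measures for one treatment group."""
    overall = capi_cost + cati_cost  # overall cost: CAPI plus CATI interviewers
    return {
        "overall_cost": overall,
        "cost_per_case": overall / n_cases,
        "cost_per_contact_attempt": overall / n_attempts,
        "completions_per_contact_attempt": n_completed / n_attempts,
    }

# Made-up figures for illustration only.
example = cost_measures(
    capi_cost=9000.0, cati_cost=1000.0, n_cases=500, n_attempts=2000, n_completed=450
)
print(example)
```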

Data quality will be operationalized in four ways:

item nonresponse rates,

percent proxy,

percent partial completes, and

final response rates

See Attachment F for the analysis plan.

Sample Size

The primary goal of the 2013 Census Test is to examine operations and systems between two groups of treatments. We do not seek to produce population estimates but to observe different methods intended to reduce NRFU workload and manage NRFU contacts. We do not have the resources to conduct a large-scale field test. We do not aim to examine differences between contact methods with great precision but to note larger disparities.

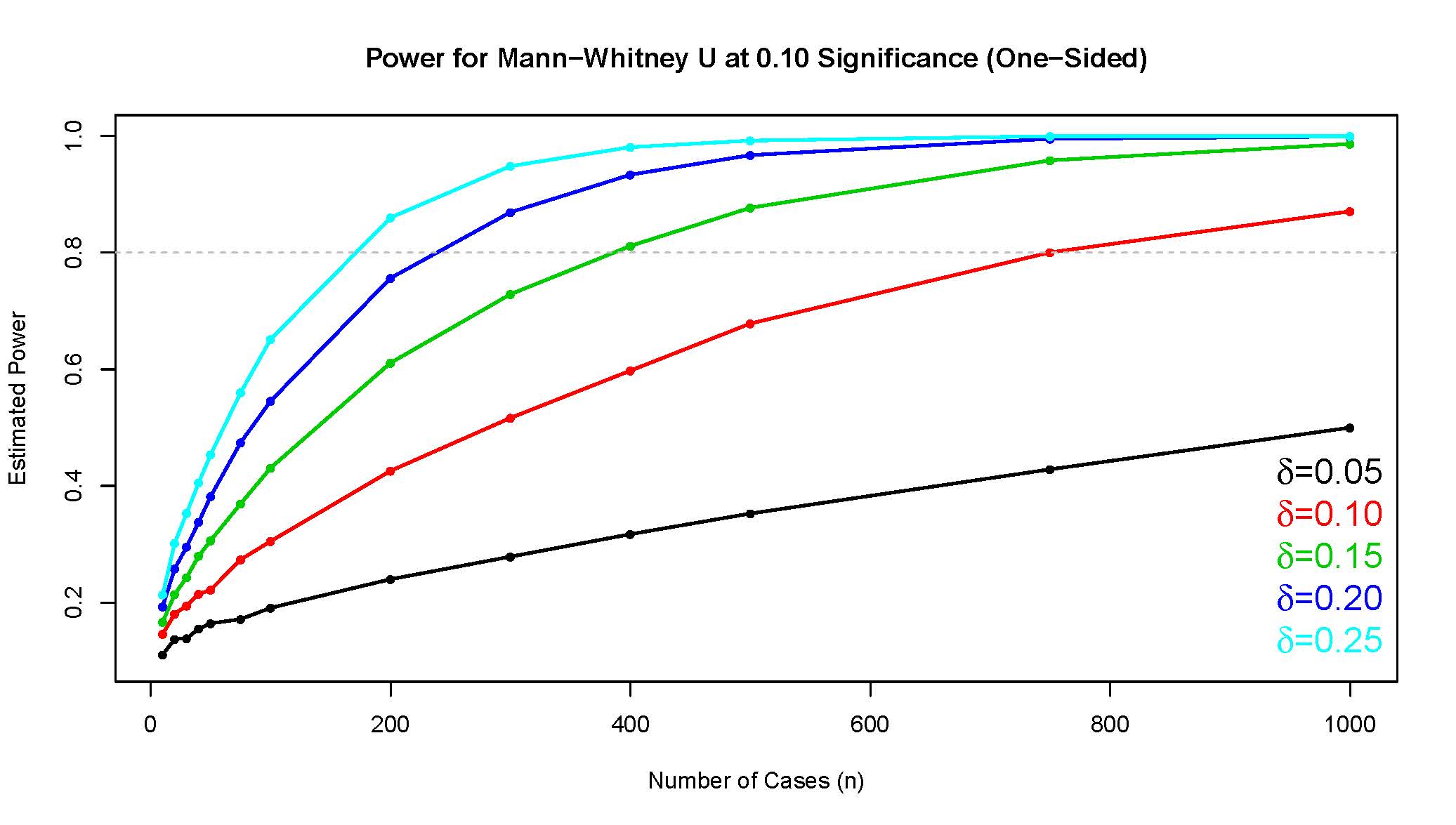

With this primary goal in mind, a secondary objective of the test is to examine cost and data quality differences between each group of treatments. To calculate a sample size, we used interviewer production rates as a benchmark. We obtained a sample of production rates from NRFU 2010 crew leader districts in Philadelphia and Baltimore, two likely sites for the test. The distribution of production rates was skewed, with a mean around one case per hour. Since the rates were obtained from actual Census NRFU experience, we used these values to represent the fixed contact strategy treatment in a simulation. First, we sampled with replacement to simulate the distribution of hours in the fixed contact strategy group. Second, we sampled with replacement from the fixed contact strategy group, subtracting a delta to represent the hypothesized improvement in production rates in an adaptive design condition and adding a small random error term to reflect uncertainty in what may be observed in the adaptive design treatment. The error term was normally distributed with a mean of zero and a standard deviation of 0.08, representing approximately 5 minutes per case. Third, taking the non-normal distribution of rates into account, we ran a one-sided Mann-Whitney U test at the 0.10 significance level. The Mann-Whitney U test makes no parametric assumptions about the distributions of the two groups. We repeated these three steps in simulation 10,000 times to calculate the proportion of times that the p-value was less than 0.10.

We describe these values in Figure 2. As can be seen, the simulation suggests that a sample of approximately 1,000 cases in each of the two groups (e.g., adaptive design v. fixed contact strategy) is sufficient to detect a 0.1 difference in production rates at the 0.1 significance level (one-tailed test). This analysis offers one view of the sample size needed to discern small differences in production rates across treatments with reasonable confidence, but it does not take into account the contribution of between-interviewer variance in production rates within treatments. Considering the effect of between-interviewer variance would necessitate a far larger sample, which is neither affordable nor warranted at this stage in the development of the new procedures under consideration. Further, a larger sample would make it more difficult to execute novel procedures and monitor results. Therefore, we determined that 2,000 cases (1,000 for each of the two major groups) would be sampled for this research, recognizing that this sample size might not provide sufficient power to conduct significance tests on all differences of interest.

Figure 2. Estimated Power by Number of Cases
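The three-step simulation described in this section can be sketched as follows. This is an illustrative reconstruction, not the code used to produce Figure 2. The actual 2010 production rates are not reproduced here, so a synthetic skewed sample with a mean near one stands in for them. The sketch also assumes the adaptive group is resampled from the same pool of rates, uses a normal approximation (with tie correction, without continuity correction) for the Mann-Whitney U test, and runs far fewer than 10,000 replications to keep it quick. All function names are hypothetical.

```python
import math
import random

def mann_whitney_p_less(x, y):
    """One-sided p-value for H1: values in x tend to be smaller than in y."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    combined = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    rank_sum_x, tie_term, i = 0.0, 0.0, 0
    while i < n:
        j = i
        while j < n and combined[j][0] == combined[i][0]:
            j += 1
        t = j - i                     # size of this group of tied values
        avg_rank = (i + 1 + j) / 2.0  # average of ranks i+1 .. j
        rank_sum_x += avg_rank * sum(1 for k in range(i, j) if combined[k][1] == 0)
        tie_term += t ** 3 - t
        i = j
    u_x = rank_sum_x - n1 * (n1 + 1) / 2.0
    var_u = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        return 1.0                    # all values tied: no evidence either way
    z = (u_x - n1 * n2 / 2.0) / math.sqrt(var_u)
    return 0.5 * math.erfc(-z / math.sqrt(2))  # standard normal CDF at z

def estimated_power(rates, n_per_group, delta, sd=0.08, alpha=0.10, reps=200, seed=1):
    """Share of replications in which the one-sided test rejects at alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        # Step 1: resample the fixed contact strategy group.
        fixed = rng.choices(rates, k=n_per_group)
        # Step 2: resample again, subtract delta, and add normal error.
        adaptive = [r - delta + rng.gauss(0.0, sd)
                    for r in rng.choices(rates, k=n_per_group)]
        # Step 3: one-sided Mann-Whitney U test.
        if mann_whitney_p_less(adaptive, fixed) < alpha:
            hits += 1
    return hits / reps

# Synthetic stand-in for the observed rates (lognormal with mean of about 1).
demo_rng = random.Random(0)
rates = [demo_rng.lognormvariate(-0.125, 0.5) for _ in range(300)]
for n_cases in (100, 250, 500):
    print(n_cases, estimated_power(rates, n_cases, delta=0.1))
```

Because the synthetic rates are only a stand-in, the printed values will not match Figure 2; the sketch is meant to show the mechanics of the resampling and testing steps.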

Response Rate

Since this is a simulated Census NRFU test, response rates that include proxy interviews will likely be over 90 percent. Based on 2010 NRFU efforts, approximately 75 percent of occupied housing units may respond via a household interview, and a proxy respondent may enumerate 25 percent. A small fraction of occupied housing units, typically around 1 percent, may not be enumerated. We expect slightly lower household and proxy response rates than were achieved in the 2010 Census because a public information campaign does not accompany the test, and the test does not span an entire geographic area. These rates will be adequate for our test because the study is exploratory and focuses on the effectiveness of contact strategies and administrative records for enumerating populations. Furthermore, this test will not create population estimates.

Data from this research will be included in research reports, and research results may be prepared for presentation at professional meetings or in publications in professional journals. All reports and presentations will explain (1) that the data were produced for strategic decision-making, not official estimates, and (2) the methodology and limitations of the test.

Question 2. Procedures for Collecting Information

Administrative Records Treatments to Reduce NRFU Workload

Two treatments (Treatments 1 and 3 in Figure 1) will employ administrative records to remove from the NRFU workload occupied housing units whose records are deemed suitable to enumerate them. Removal will occur after these housing units are mailed a prenotice asking for participation in the study. The suitability of records for enumerating these housing units is determined through the Census Bureau’s research on matching administrative records information to 2010 Census NRFU housing units.

The Census Bureau will mail all housing units a prenotice letter two weeks before the start of data collection, alerting residents about the upcoming study. In Treatments 1 and 3, the Census Bureau will remove housing units from data collection whose prenotice letters are not returned with “undeliverable as addressed” United States Postal Service information and which have administrative record evidence of occupancy. These housing units will be classified as “occupied” in these treatments.

In Treatments 1 and 3, the Census Bureau also will remove housing units from data collection whose prenotice letters are returned with “undeliverable as addressed” United States Postal Service information and which have no other record evidence of occupancy. These housing units will be classified as “vacant” in these treatments.

The Census Bureau will not employ administrative records to reduce workload in Treatments 2 and 4. Instead, administrative records will be employed to prioritize cases for contact in the adaptive design condition (Treatment 4). Administrative records will not be employed for any purpose in Treatment 2.
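The removal rules in this section amount to a small decision function, sketched below. The function and argument names are hypothetical, and the sketch covers only workload removal; it does not model the use of records to prioritize cases in Treatment 4.

```python
def workload_status(treatment, uaa_returned, has_occupancy_evidence):
    """Classify one housing unit as 'occupied', 'vacant', or 'fieldwork'.

    treatment: treatment number, 1 to 4.
    uaa_returned: True if the prenotice letter came back "undeliverable as addressed".
    has_occupancy_evidence: True if administrative records indicate occupancy.
    """
    if treatment in (1, 3):
        if not uaa_returned and has_occupancy_evidence:
            return "occupied"  # removed from data collection
        if uaa_returned and not has_occupancy_evidence:
            return "vacant"    # removed from data collection
    # Treatments 2 and 4, and all other cases in Treatments 1 and 3,
    # remain in the NRFU workload.
    return "fieldwork"

print(workload_status(1, uaa_returned=False, has_occupancy_evidence=True))
print(workload_status(3, uaa_returned=True, has_occupancy_evidence=False))
print(workload_status(4, uaa_returned=False, has_occupancy_evidence=True))
```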

Telephone and In-Person Contacts

Prior to data collection, the Census Bureau will attempt to match all sampled housing units to telephone numbers using purchased vendor files. These numbers will be flagged as landline or cell. The Census Bureau will check all landline telephone numbers, following established confidentiality procedures, to make sure that they are working. Checking for working landline telephone numbers will make calling more efficient (i.e., less costly).

In the fixed contact strategy treatments (1 and 2), the Census Bureau will instruct computer-assisted personal interviewing (CAPI) interviewers to make two telephone calls to sample units before performing personal visits. Interviewers will attempt up to three in-person contacts for sampled housing units not reached by telephone. Interviewers will attempt to contact sample units without telephone numbers via personal visits. If an interviewer cannot complete an interview after telephone calls and three in-person contact attempts, they will be instructed to obtain a proxy interview.

In the adaptive contact strategy treatments (3 and 4), telephone numbers for sampled cases will be sent first to a centralized computer-assisted telephone interviewing (CATI) facility where interviewers will attempt interviews for two weeks. At the end of this period, CATI nonrespondents will be transferred to CAPI interviewers who will attempt personal visits. (Housing units without telephone numbers will be sent immediately to CAPI interviewers during these two weeks.) CAPI interviewers in the adaptive contact strategy treatments will be informed on a daily basis which cases are a priority for contact and when to perform proxy interviews, as determined by response likelihood models.

Data collection will last six weeks, beginning in October 2013 and ending in November 2013.

Question 3. Methods to Maximize Response

An advance or “prenotice” letter will be sent to all sampled households in order to maximize response and increase the respondent’s understanding of the legitimacy of the survey. (See Attachment B.) This letter will state that cooperation with the study is required by law.

We will attempt either to interview or to enumerate through administrative records all sampled households. In final efforts, housing units that refuse interviews or cannot be contacted will be enumerated using a proxy respondent if possible.

For respondents concerned about the validity of the survey, the Census Bureau will provide the OMB clearance number, so that concerned respondents may verify its legitimacy via the Census Bureau website. Respondents who need help will be able to receive it via Telephone Questionnaire Assistance (TQA), whose number will be supplied on the prenotice letter. Respondents who are unwilling or unable to respond by personal visit can provide their information to a telephone agent by calling the TQA number.

Question 4. Tests of Procedures or Methods

The 2013 Census Test relies on the ACS case management systems and the ACS production instrument. Use of the ACS systems was critical because they support both CATI and CAPI modes and because the systems in development for the 2014 Census Test would not be completed in time for the 2013 Census Test. Furthermore, this approach aligns with Census Bureau efforts to research 2020 methods cost-effectively through reuse of existing systems. Using a modified ACS system and questionnaire makes the research possible in the proposed time frame.

The test uses a modified version of the 2013 production ACS instrument that removes all household-level questions except tenure and status of temporarily occupied units and removes all detailed person-level questions except relationship, sex, age/date of birth, Hispanic origin, and race. (See Attachment C.) Other changes include:

updating survey specific items such as survey name, description of purpose, survey length;

adding a proxy interview option for CAPI interviews; and

removing the group quarters path.

The 2013 Census Test instrument has not undergone separate pre-testing because all survey questions are from the 2013 production ACS instrument. The 2013 ACS instrument has undergone separate pre-testing, cognitive testing, and expert review as outlined in ACS OMB packages.

No field-testing or experimentation has been done on the 2013 Census Test methodologies, as the 2013 Census Test is itself a field test of strategies to decrease NRFU workload and a test of contact strategies to increase the efficiency of NRFU.

Question 5. Contacts for Statistical Aspects and Data Collection

For questions on statistical methods or the data collection described above, please contact Peter V. Miller of the Center for Survey Measurement and the Center for Adaptive Design at the Census Bureau (Phone: (301) 763 9593 or email peter.miller@census.gov).

Attachments to the Supporting Statement

Attachment A. Public Comment

Attachment B. Prenotice Mailing Letter

Attachment C. Instrument Specification / Frequently Asked Questions

Attachment D. Field Representative Privacy and Confidentiality Letter

Attachment E. Instrument Flashcards

Attachment F. Analysis Plan

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Gina K Walejko |

| File Created | 2021-01-28 |