CBS OMB Part B 01_19_12


Pilot Study of Community-Based Surveillance of Supports for Healthy Eating/Active Living (HE/AL)

OMB: 0920-0934

OMB SUPPORTING STATEMENT:

PART B

COLLECTIONS OF INFORMATION EMPLOYING STATISTICAL METHODS

Pilot Study of Community-Based Surveillance of Supports for Healthy Eating/Active Living (HE/AL)

Submitted by:

Division of Nutrition, Physical Activity, and Obesity

National Center for Chronic Disease Prevention and Health Promotion

Centers for Disease Control and Prevention

Department of Health and Human Services

Technical Monitor: Deborah Galuska, MPH, PhD

Associate Director of Science

Division of Nutrition, Physical Activity, and Obesity

National Center for Chronic Disease Prevention and Health Promotion

4770 Buford Highway, NE

MS-K24

Atlanta, GA 30341

January 19, 2012

Table of Contents

B.1 Respondent Universe and Sampling Methods

B.2 Procedures for Collection of Information

B.3 Methods to Maximize Response Rates and Address Non-Response

B.4 Tests of Procedures and Methods to be Used

B.5 Consultation on Statistical Aspects of the Study Design

Exhibits

Exhibit B.2.a. Sample Allocation Strategy for Pilot Study States

Exhibit B.2.b. Summary of Assigned Respondent Recruitment Conditions in Implementing the Pilot Study

B.1 Respondent Universe and Sampling Methods

The respondent universe for this data collection consists of all municipalities within each of the two pilot states. The sampling strategy for the community-based surveillance system pilot study is designed to produce state-level estimates of the proportion of municipalities that have specific local-government policies supporting physical activity and healthful dietary behaviors in the community. Municipal governments, as defined by the U.S. Census Bureau, are the primary sampling unit (PSU) for the data collection.1 The sampling frame is constructed from the most recent U.S. Census of Governments (COG) (2007), which provides a listing of municipalities for each state. COG data on the name, type (e.g., city, town, village), and total population of each municipality or township are used to construct the sampling frame. All municipalities and townships in the pilot study states are included in the sampling frame regardless of population size. The frame does not apply a population-based cutoff because there is no clear evidence to support excluding very small municipalities. One expected outcome of the methodological component of the pilot study is the ability to determine a cutoff point for future sampling frames.

In order to eliminate the potential for geographic overlap between municipalities and towns/townships in the sample frame, editing of the COG data is conducted. This editing procedure first identifies whether any geographic areas of the pilot study states are under the jurisdictional authority of both a municipal and a township government. In instances where this occurs, the COG data are edited to exclude the township. This approach prevents double-counting of populations that are under the jurisdiction of both forms of government.

The sample frame is then ordered on the basis of the total population in each municipality. The frame is then divided into five strata, with each stratum encompassing approximately 20% of the state’s population that lives in municipalities. In this manner, the top stratum (S1) includes the largest municipalities that together contain 20% of the state’s municipal population (in the aggregate). Similarly, the bottom stratum (S5) contains the state’s smallest municipalities that (in aggregate) contain 20% of the state’s municipal population. In every state, the top stratum is designated as a “certainty stratum.” Municipalities in this stratum will be selected with certainty into the sample, in order to capture the policy supports that impact the largest population centers in each state. The basic rationale for the stratification is that sampling rates (RS) should be lower in the strata with the smaller municipalities. That is to say, RS {S2} < RS {S1}, RS {S3} < RS {S2}, and so forth.
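As an illustration only, the stratification step can be sketched in Python. The function name `assign_strata`, the boundary rule (a municipality is placed in the stratum determined by the cumulative population share of all larger municipalities), and the example populations are assumptions made for this sketch; the study documentation does not specify how a municipality that straddles a 20% boundary is handled.

```python
from typing import List


def assign_strata(populations: List[int], n_strata: int = 5) -> List[int]:
    """Return a stratum number (1 = largest municipalities) for each municipality.

    Municipalities are ranked from largest to smallest. Each one is placed in
    stratum k when the cumulative population of all larger municipalities
    falls in the k-th equal share of the total (assumed boundary rule).
    """
    total = sum(populations)
    # Indices ordered from the most populous municipality to the least.
    order = sorted(range(len(populations)), key=lambda i: populations[i], reverse=True)
    strata = [0] * len(populations)
    cumulative = 0
    for i in order:
        # Integer arithmetic avoids floating-point surprises at boundaries.
        stratum = cumulative * n_strata // total + 1
        strata[i] = min(stratum, n_strata)
        cumulative += populations[i]
    return strata


# Hypothetical state with 21 municipalities and a municipal population of 1,000.
example = [200, 100, 100, 80, 70, 50, 50, 50, 40, 40, 30,
           30, 30, 20, 20, 20, 20, 20, 10, 10, 10]
strata = assign_strata(example)
print(strata)
```

In this hypothetical frame the single largest municipality forms S1 on its own, while the ten smallest municipalities fall in S5, which shows why the number of municipalities per stratum grows as municipality size shrinks.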

Determining the Sample Size. The total sample size (n) for each pilot study state is determined by the total number of municipalities in that state (N). In a state with fewer than 200 municipalities, all municipalities are included in the sample. In a state with 200 or more municipalities, a sample of 200 municipalities will be selected. Larger municipalities will be oversampled with the use of disproportionate allocation. To balance the consequences of unequal probabilities of selection and the unequal weights that lead to variance inflation (i.e., lower precision), the sampling design will assign larger sampling rates to the large-community strata.

Sample sizes were developed to yield 95% confidence intervals within ±5 percentage points for all study estimates. These precision levels are premised on design effects (DEFF) of 2.0 or less. The DEFF measure reflects the impact on sampling variance induced by deviations from simple random sampling. The DEFF is defined as the variance under the actual design divided by the variance that would be achieved by a simple random sample of the same size, and may vary by type of estimate. For this study design, the DEFF reflects the unequal weighting effects brought about by disproportionate sampling (i.e., assigning higher probabilities of selection to specific strata when compared with lower probabilities of selection in other strata). The use of a DEFF estimate of 2.0 or less is a conservative assumption and is expected to be met for this stratified sampling design.
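The unequal weighting effect described above is often approximated with Kish's formula, DEFF ≈ n Σw² / (Σw)². The source does not state which approximation the study statisticians used, so the following Python sketch is an assumption offered for illustration; the function name `kish_deff` and the example weights are hypothetical.

```python
from typing import Sequence


def kish_deff(weights: Sequence[float]) -> float:
    """Approximate design effect due to unequal weighting (Kish's formula)."""
    n = len(weights)
    if n == 0:
        raise ValueError("at least one weight is required")
    sum_w = sum(weights)
    sum_w_sq = sum(w * w for w in weights)
    return n * sum_w_sq / (sum_w ** 2)


# Equal weights give no variance inflation; unequal weights inflate it.
equal = kish_deff([2.0, 2.0, 2.0])
unequal = kish_deff([1.0, 1.0, 3.0, 3.0])
print(equal, unequal)
```

A value at or below 2.0 for the realized weights would be consistent with the planning assumption stated in the text.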

The sample sizes also take into account the finite population correction (FPC), an adjustment that matters most when the sample exceeds 10% of the population from which it is drawn (i.e., when the sampling rate exceeds 1/10). The FPC, computed as 1 − n/N, where n is the sample size and N is the population size (the number of municipalities in the state), reflects the reduction in variance that follows from larger sampling rates (n/N). Finally, the sample sizes have been adjusted to account for expected response rates.
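Taken together, the DEFF and the FPC imply an approximate half-width for a 95% confidence interval around an estimated proportion. The expression below is a standard textbook approximation offered as a sketch; the symbol p (the estimated proportion) is introduced here for illustration, n is taken to be the number of municipalities contributing data, and the study's exact computation may differ.

$$
\text{half-width} \approx 1.96 \sqrt{\mathrm{DEFF} \cdot \frac{p(1-p)}{n} \left(1 - \frac{n}{N}\right)}
$$

The half-width narrows as the sampling rate n/N rises and widens in proportion to the square root of the DEFF.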

B.2 Procedures for Collection of Information

B.2.a. Statistical Methodology for Stratification and Sample Selection

The pilot community-based surveillance system described in this data collection request is meant to inform the potential future development of a national surveillance system to collect representative data from all states. Therefore, it is important that the data collection methodologies used in the pilot study be reasonable for application in the conditions that would likely exist for a national study. To answer methodological questions about the level of study recruitment and non-response follow-up required, the pilot study will implement a split-sample approach that assigns one of two recruitment conditions to sampled municipalities in each of the five strata in the sample design: a low-intensity recruitment condition and a moderate-intensity recruitment condition. The two conditions are differentiated by the non-response follow-up techniques described below.

To arrive at the split sample proposed for the study, the following procedures will be used. For each of the two states selected for the pilot study, two random subsamples will be drawn using the same design as the original larger sample. Each subsample, or half-sample, is a valid representative sample of the state’s municipalities. Each subsample will first be assigned to one of the two recruitment conditions; the two subsamples will then be combined to provide estimates with the same precision that is anticipated for the overall sample design.
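One way to realize such a split is to divide the sampled municipalities within each stratum at random into two halves, so that each half-sample preserves the stratified design. The Python sketch below is illustrative only: the function name `assign_conditions`, the fixed random seed, and the rule for placing the odd unit when a stratum has an odd number of sampled municipalities are assumptions, not procedures taken from the study protocol.

```python
import random
from typing import Dict, List


def assign_conditions(sample_by_stratum: Dict[int, List[str]], seed: int = 0) -> Dict[str, int]:
    """Map each sampled municipality to Study Condition 1 or 2, stratum by stratum."""
    rng = random.Random(seed)  # fixed seed so the assignment can be reproduced
    assignment: Dict[str, int] = {}
    extra_goes_to = 1  # condition that receives the odd unit; alternates across strata
    for stratum in sorted(sample_by_stratum):
        units = list(sample_by_stratum[stratum])
        rng.shuffle(units)
        n_first = len(units) // 2
        if len(units) % 2 == 1:
            if extra_goes_to == 1:
                n_first += 1
            extra_goes_to = 2 if extra_goes_to == 1 else 1
        for name in units[:n_first]:
            assignment[name] = 1  # low-intensity recruitment
        for name in units[n_first:]:
            assignment[name] = 2  # moderate-intensity recruitment
    return assignment


# Hypothetical sample: municipality labels grouped by stratum number.
sample = {1: ["A", "B", "C"], 2: ["D", "E", "F", "G"], 3: ["H"]}
result = assign_conditions(sample, seed=1)
print(result)
```

Alternating the odd unit between conditions keeps the two half-samples within one municipality of each other in total size.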

Sample Allocation. The sample allocation strategy was developed for use with a national sample. The design first assigns each state to one of four groups based on the number of municipalities in the state, and then allocates the sample across the state's five strata to determine which municipalities will be part of the sample. For the purposes of the pilot study, each of the two selected pilot states will be assigned to one of the four groups in the same way. It is assumed that one state will have fewer than 200 municipalities, while the other state will fall into one of the other groups. After a state has been assigned to a group, the sample allocation approach for that group is applied to the state's strata.

Group 1 comprises “census” states, that is, states with fewer than 200 municipalities, in which all municipalities will be included in the surveillance system. The allocation in the other groups follows the guiding principle that the sampling rate should decline as one moves from strata with larger municipalities to strata with smaller ones. Group 2 comprises states with 200 to 300 municipalities. In these states, the strategy selects with certainty the municipalities in all strata but the last, stratum #5, which is assigned the balance of the n = 200 sample municipalities. Group 3 comprises states with 301 to 1,000 municipalities. In these states, the strategy selects with certainty the municipalities in stratum #1, applies sampling rates of 2/3, 1/3, and 1/4 in strata #2, #3, and #4, respectively, and allocates the balance of the n = 200 to stratum #5. Group 4 comprises states with more than 1,000 municipalities. In these states, the strategy selects with certainty the municipalities in stratum #1, applies sampling rates of 1/3, 1/5, and 1/8 in strata #2, #3, and #4, respectively, and allocates the balance of the n = 200 to stratum #5. In each case, fractional sample sizes produced by the sampling rates in strata #2 through #4 are rounded up. When selecting the sample, simple random sampling will be used within each of the non-certainty strata, so that each municipality within a given stratum has an equal probability of being selected. The selection of units is non-overlapping between strata. Exhibit B.2.a presents a summary of the sample allocation strategy to be used for the study.

Exhibit B.2.a Sample Allocation Strategy for Pilot Study States

| Assigned Group | Number of Municipalities | S1 | S2 | S3 | S4 | S5 |
| --- | --- | --- | --- | --- | --- | --- |
| Group 1 | Fewer than 200 | Take all | Take all | Take all | Take all | Take all |
| Group 2 | 200 to 300 | Take all | Take all | Take all | Take all | Balance of n=200 |
| Group 3 | 301 to 1,000 | Take all | 2/3 rate | 1/3 rate | 1/4 rate | Balance of n=200 |
| Group 4 | More than 1,000 | Take all | 1/3 rate | 1/5 rate | 1/8 rate | Balance of n=200 |
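The allocation rules in the exhibit can be expressed as a short Python sketch. The function names `assign_group` and `allocate_sample` are hypothetical, and clamping the stratum 5 balance so that it is never negative and never exceeds the number of municipalities in that stratum is an assumption; the source does not say how such edge cases are resolved.

```python
import math
from fractions import Fraction
from typing import List

# Sampling rates for strata S1 through S4, by assigned group.
GROUP_RATES = {
    2: [Fraction(1), Fraction(1), Fraction(1), Fraction(1)],
    3: [Fraction(1), Fraction(2, 3), Fraction(1, 3), Fraction(1, 4)],
    4: [Fraction(1), Fraction(1, 3), Fraction(1, 5), Fraction(1, 8)],
}


def assign_group(n_municipalities: int) -> int:
    """Return the group (1 to 4) for a state with the given number of municipalities."""
    if n_municipalities < 200:
        return 1
    if n_municipalities <= 300:
        return 2
    if n_municipalities <= 1000:
        return 3
    return 4


def allocate_sample(stratum_sizes: List[int], target: int = 200) -> List[int]:
    """Return the number of municipalities to sample from strata S1 through S5."""
    if len(stratum_sizes) != 5:
        raise ValueError("expected five stratum sizes (S1 through S5)")
    group = assign_group(sum(stratum_sizes))
    if group == 1:
        return list(stratum_sizes)  # census state: take all municipalities
    first_four = [
        math.ceil(rate * size)  # fractional sample sizes are rounded up
        for rate, size in zip(GROUP_RATES[group], stratum_sizes[:4])
    ]
    # Assumption: the S5 balance is clamped to the range [0, size of S5].
    balance = max(0, min(target - sum(first_four), stratum_sizes[4]))
    return first_four + [balance]


# Hypothetical Group 3 state with 600 municipalities.
allocation = allocate_sample([10, 30, 60, 120, 380])
print(allocation)
```

Exact fractions are used for the rates so that rounding up is not affected by floating-point error.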

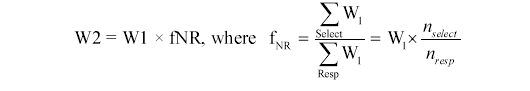

Computing the Sample Weights. Sampling weights will be determined as the reciprocal of the sampling rates in each stratum that were computed in the allocation step. These weights will be adjusted to account for non-response after the conclusion of the data collection. The weight will be adjusted for non-response by multiplying the sampling weight by the inverse of the proportion of sample municipalities that respond to the data collection. The final adjusted weight can be then expressed in terms of the sampling weight W1:

Final adjusted survey weights for each community will be assigned so that unbiased state-level estimates can be computed.
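The weighting steps can be illustrated with a minimal Python sketch. It assumes that the non-response adjustment is computed separately within each stratum, which the source does not specify; the function name `final_weight` and the example counts are hypothetical.

```python
def final_weight(stratum_size: int, n_sampled: int, n_responded: int) -> float:
    """Non-response-adjusted weight for a responding municipality in one stratum.

    The sampling weight is the reciprocal of the sampling rate, and it is
    multiplied by the inverse of the proportion of sampled municipalities
    that responded (assumed here to be computed within the stratum).
    """
    if n_sampled <= 0 or n_responded <= 0:
        raise ValueError("sampled and responding counts must be positive")
    if n_responded > n_sampled or n_sampled > stratum_size:
        raise ValueError("counts must satisfy n_responded <= n_sampled <= stratum_size")
    sampling_weight = stratum_size / n_sampled      # reciprocal of the sampling rate
    response_proportion = n_responded / n_sampled   # share of the sample that responded
    return sampling_weight / response_proportion


# Hypothetical stratum: 120 municipalities, 30 sampled, 18 responded.
w = final_weight(120, 30, 18)
print(round(w, 3))
```

Under this assumption the adjusted weights of the respondents in a stratum sum to the number of municipalities in that stratum, which is the property that supports state-level estimation.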

B.2.b Data Collection Methods

This pilot study is designed to assess the methodologies most practical for a potential full-scale national surveillance system with local governments as the key respondents. The targeted survey respondent is the city or town manager/planner or a person with similar responsibilities for the sampled municipality. After the sampling is completed, the sample will be validated to confirm the name and address of the city/town manager/planner, who will then receive the study recruitment invitational packet (Appendices D1-D5) on behalf of the sampled municipality. All municipalities will receive a hard-copy correspondence that includes an invitation letter from the Centers for Disease Control and Prevention (CDC) describing the purposes of the study, instructions on how to access the web-based questionnaire, an informed consent document, and additional study background materials.

The pilot study assesses the methodological issues associated with respondent recruitment and non-response follow-up through the use of a split-sample design. Each condition represents a different intensity of recruitment and follow-up that will be employed during the survey fielding period. The pilot study will use a low recruitment condition and a moderate recruitment condition to determine the appropriate combination of non-response follow-up techniques to achieve an acceptable response rate. The low recruitment condition (Study Condition 1) uses a series of e-mail reminders as the main strategy for non-response follow-up, while the moderate condition (Study Condition 2) uses both a series of e-mail reminders and a series of telephone follow-up reminders over the 10-week fielding period to encourage respondents to complete the survey and submit their data. For both study conditions, e-mail reminders will be sent on average every two weeks. For Study Condition 2, a minimum of three telephone reminders will be made to encourage a response. If at any time over the course of the data collection a respondent indicates a refusal, e-mail and telephone follow-up will cease. Exhibit B.2.b summarizes the distinctions between the recruitment conditions being tested in this pilot study.

Exhibit B.2.b Summary of Assigned Respondent Recruitment Conditions in Implementing the Pilot Study

| Data Collection Activity | Study Condition 1 | Study Condition 2 |
| --- | --- | --- |
| Sample Validation | Each sample community will be contacted in order to identify name and contact information for a key informant. | Same as Condition 1 |
| Invitation | Each sampled community will receive, via Federal Express, an invitational packet containing an invitational letter from CDC, letters of support from state agencies or organizations, a project fact sheet, an informed consent statement, and instructions on how to access the questionnaire. Receipt of FedEx packets will be tracked online at www.fedex.com. Project staff will place calls to municipalities where packets could not be delivered to obtain updated mailing information, and packets will be re-sent. | Same as Condition 1 |
| Survey Administration | Each sampled community can participate by (a) completing the online questionnaire or (b) by downloading and returning an MS Word version of the questionnaire directly to project headquarters. | Same as Condition 1 |
| Follow-up | Non-responsive municipalities will receive automated e-mail reminders at regular, predetermined intervals. Undeliverable e-mails, or “bounce backs” will be tracked and followed up on to obtain updated information. | Non-responsive municipalities will receive automated e-mail reminders at regular intervals. Undeliverable e-mails, or “bounce backs” will be tracked and followed up on to obtain updated information. Non-responsive municipalities will receive follow-up phone calls to previously identified informants to remind them to complete the survey. |
| Technical Assistance | All sampled municipalities will be provided with a toll-free telephone number they can call to speak to project staff and obtain technical assistance. This toll-free number will be included in the mailed invitation letter, as well as posted on the questionnaire. | Same as Condition 1 |

Data Collection Instrument. The HE/AL Community-Based Surveillance Questionnaire is a self-administered, web-based instrument (Appendix C) that consists of 42 items divided into 4 sections. The first section, Community-wide Planning Efforts, asks about the planning documents that municipalities may have in place to support HE/AL. The next section asks respondents to indicate what policies they have in place to support aspects of the built environment that encourage physical activity. The third section of the survey asks about policies in place to support access to healthy food and beverages, as well as breastfeeding. The final section of the questionnaire asks process questions to ascertain the barriers and challenges respondents may have encountered in completing the survey. Additionally, as a part of the methodological component of the pilot study, each survey item allows respondents to indicate that they are unable to provide a response because the question is not understood or because the data are too difficult to obtain.

Data Collection Procedures. To initiate the data collection, each sampled municipality, regardless of the assigned condition, will be contacted to validate the sample and to confirm the name and contact information for a key informant—usually the city manager or planner—to whom all correspondence will be directed. The city manager or an equivalent individual is designated as the primary respondent for the survey as he/she typically has the broadest knowledge of a municipality’s policies. The sampled municipalities, regardless of the condition to which they are assigned, will then receive via Federal Express an invitational packet containing the following items:

An invitational letter from CDC (Appendix D1) that explains the study and includes instructions on how to access and complete the questionnaire (Appendix D5).

A project fact sheet in Q&A format (Appendix D2), which includes answers to questions regarding methods through which municipalities can participate, burden expectations, and timeline for participation.

An informed consent document (Appendix D3) explaining their rights as participants.

The invitational packet serves to recruit the sampled community to participate in the study and will provide them with the needed information to access the web-based survey system. Receipt of FedEx packets will be tracked online at www.FedEx.com. Project staff will place calls to municipalities where packets could not be delivered to obtain updated mailing information, and packets will be re-sent.

Respondents will primarily complete the self-administered survey through the web-based data collection system. Sampled municipalities will be assigned a unique identifier, or token, which will provide the key informant with security-enabled access to this web-based data collection system where they can complete and submit the questionnaire. Alternatively, if the respondent in either study condition chooses to do so, the questionnaire can also be completed in a paper format and mailed back to the study headquarters. Respondents who wish to use a paper survey can choose to print the survey from the web-based data collection system, complete the questionnaire, and return it to project headquarters using instructions that will be attached to the invitation letter. It is anticipated that few municipalities will choose this option, but the pilot study includes this mode of administration because there is little prior knowledge of which mode of administration participants prefer.

During the fielding period, non-respondents in the moderate condition will receive a minimum of three reminder telephone calls to encourage a survey response and help resolve any questions that may exist. Municipalities in the low condition will not receive these non-response follow-up telephone calls. Both the low and moderate condition subsamples will receive reminder e-mails approximately every two weeks over the remainder of the data collection fielding period until a survey response is submitted. Key informants who have completed the survey will submit it via the web-based system or by mailing it to the contractor’s headquarters. The data collected from paper questionnaires will be entered directly into the web-based data collection system by the contractor’s trained field staff.

Field staff for the study are experienced survey field managers who, over the course of a 1-day training, will be trained on the web-based system and the survey questionnaire and will receive refresher training in refusal-conversion techniques. Field staff will be required to comply with the data security protocols established for the study.

B.2.c. Unusual Problems Requiring Specialized Sampling Procedures

No specialized sampling procedures are involved.

B.2.d. Any Use of Periodic (less frequent than annual) Data Collection Cycles to Reduce Burden

This is a one-time collection of information.

B.3 Methods to Maximize Response Rates and Address Non-Response

B.3.a Expected Response Rates

This study anticipates differences in participation rates between the study conditions. A key methodological objective of this pilot study is to determine the non-response follow-up necessary to achieve an adequate survey response for this sample population. Web-based surveys that have targeted this population of respondents generally have a response rate of 30-40%; however, these studies made only 1-3 contacts with respondents over the fielding period.2 This data collection effort aims to attain a higher overall response rate of 55-65% through more intensive study recruitment strategies and non-response follow-up techniques. The sample validation operation that will occur before the study invitational packet is sent will ensure that the sample is updated, thus minimizing the likelihood of non-response due to outdated information. Regardless of the study condition to which a sampled municipality is assigned, e-mail reminders will be issued approximately every two weeks during the fielding period. The study anticipates that with these measures, a response rate of at least 50% is likely for the low condition, while a response rate of 65% is likely for the moderate condition, where additional telephone follow-up will take place. The assumption of a 65% response rate for the moderate condition is based upon a comparison of methods used in similar methodological studies, such as the 2010 NYPANS and the most recent cycle of SHPPS, where response rates were closer to 75%. The response rate by sampled stratum and respondent characteristics will be analyzed as a part of the pilot study to understand the impact of the different recruitment conditions on the overall response rate and on the number of attempts needed to obtain a completed survey from a respondent.

B.3.b Methods for Maximizing Responses and Handling Non-Response

Prior to sending the study invitation materials, sample validation will occur to confirm the contact name, work address, work e-mail, and work telephone number for the city managers being targeted as key informants for the sampled municipalities. This process will ensure that incorrect or outdated information is replaced with current data, thereby minimizing instances of non-response due to incorrect contact information. During the pilot study, survey initiation and completion rates will be tracked weekly via case management features of the web-based data collection system in order to support efforts to maximize responses and handle non-response. To maximize the response rate for respondents in either condition, study participants will receive a detailed invitation letter that emphasizes the importance of the study and how the information will help CDC to better understand the feasibility of a national surveillance system. As a part of the invitation, participating municipalities are being offered a report that presents their status compared with other municipalities in their state.

A dedicated toll-free telephone line and e-mail address will be provided to handle requests for technical assistance or inquiries about the survey from participants in both study conditions. Both the toll-free line and the e-mail account will be monitored daily by project staff who will provide assistance as needed. To encourage responses, participants in both conditions will receive an e-mail reminder within 7–10 days of receipt of the initial mailing, followed by additional e-mail reminders issued, on average, every 10 days to 2 weeks for the remainder of the data collection fielding period. Once data have been submitted by a municipality, e-mail reminders to that entity will cease.

Methods to address non-response begin with the procedures for tracking and delivering survey reminders. For respondents in both conditions, all undeliverable e-mails, or “bounce backs,” will be tracked and followed up to obtain updated information. Project staff will first try independently to identify another viable e-mail address to which the reminder can be sent and then, if needed, will make a follow-up phone call to confirm the new e-mail address. For survey participants in the moderate condition (Study Condition 2), additional follow-up for non-response will consist of a minimum of three follow-up phone calls that will be made on average every two weeks to remind municipalities to complete the survey. Project staff will actively work to convert refusals in a sensitive manner that respects the voluntary nature of the study. These refusal conversion techniques will remind participants of the importance of their response and will troubleshoot any barriers to responding to the survey, but they will not unduly pressure the respondents. When telephone follow-up calls are made and the respondent is not available, the data collection staff will leave a voicemail message to let the respondent know the call was for this specific research study.

B.4 Tests of Procedures and Methods to be Used

From September 2011 to October 2011, the contractor conducted a pretest to assess the clarity and comprehension of questionnaire items. This pretest was conducted within OMB guidelines with nine randomly selected city managers and city planners, the key informants for the survey. The pretest sample was diversified according to the size of the municipality using the sampling strategy. In an effort to approximate the circumstances under which respondents will participate, pretests took place by telephone while respondents were at an internet-connected computer. Cognitive interviews were conducted to determine how respondents interpreted items; to evaluate the adequacy of response options, definitions, and other descriptions provided within the questionnaires; and to assess the appropriateness of specific terms or phrases. Empirical estimates of respondent burden were also obtained through the administration of the questionnaire in its entirety. As a result of the pretests, respondent burden was reduced and the potential utility of survey results was enhanced through the elimination or clarification of questions and response options.

B.5 Consultation on Statistical Aspects of the Study Design

Statistical aspects of the study have been reviewed by:

Ronaldo Iachan, PhD

Senior Sampling Statistician

ICF International

11785 Beltsville Drive, Suite 300

Beltsville, MD 20705

(301) 572-0538

Within the agency, the following individuals will be responsible for receiving and approving contract deliverables and will have primary responsibility for the data collection and analysis:

Deborah Galuska, MPH, PhD, Technical Monitor

Associate Director of Science

Division of Nutrition, Physical Activity, and Obesity

National Center for Chronic Disease Prevention and Health Promotion

Centers for Disease Control and Prevention

4770 Buford Highway, NE, MS/K-24

Atlanta, GA 30341-3717

(770) 488-6017

The representatives of the contractor responsible for conducting the planned data collection are:

Erika Gordon, PhD

Project Director

ICF International

11785 Beltsville Drive, Suite 300

Beltsville, MD, 20705

(301) 572-0881

egordon@icfi.com

Alice M. Roberts, MS

Technical Specialist

ICF International

11785 Beltsville Drive, Suite 300

Beltsville, MD 20705

(301) 572-0290

Ken Goodman, MA

Technical Specialist and Content Expert

ICF International

3 Corporate Square NE, Suite 370

Atlanta, GA 30329

(404) 321-3211, Ext. 2203

1 As defined by U.S. Census Bureau statistics on governments, the term “municipal governments” refers to political subdivisions within which a municipal corporation has been established to provide general local government for a specific population concentration in a defined area; it includes all active governmental units officially designated as cities, boroughs (except in Alaska), towns (except in the six New England States, and in Minnesota, New York, and Wisconsin), and villages.

2 Marla Hollander, Sarah Levin Martin, and Tammy Vehige. The Surveys Are In! The Role of Local Government in Supporting Active Community Design. J Public Health Management Practice, 2008, 14(3), 228-237.

| File Type | application/msword |

| File Title | OMB SUPPORTING STATEMENT: |

| Author | Erika Gordon;alice.m.roberts |

| Last Modified By | Macaluso, Renita (CDC/ONDIEH/NCCDPHP) |

| File Modified | 2012-01-19 |

| File Created | 2012-01-04 |
