3145-0209 Part A


Implementation Evaluation of the ADVANCE Program

OMB: 3145-0209

REQUEST FOR CLEARANCE OF DATA COLLECTION FOR THE

Implementation Evaluation of the ADVANCE Program

Conducted by the Urban Institute

Funded by the National Science Foundation

Request Submitted by:

Directorate for Education and Human Resources

National Science Foundation

4201 Wilson Boulevard

Arlington, VA 22230

Current OMB Control Number (expiration date): 3145-0209 (10/2012)

Date of Amended Request: 5 October 2010

I. Section A

Introduction

A.1. Circumstances Requiring the Collection of Data

A.2. Purposes and Uses of the Data

A.3. Use of Information Technology To Reduce Burden

A.4. Efforts To Identify Duplication

A.5. Small Business

A.6. Consequences of Not Collecting the Information

A.7. Special Circumstances Justifying Inconsistencies with Guidelines in 5 CFR 1320.6

A.8. Consultation Outside the Agency

A.9. Payments or Gifts to Respondents

A.10. Assurance of Confidentiality

A.11. Questions of a Sensitive Nature

A.12. Estimates of Response Burden

A.13. Estimate of Total Capital and Startup Costs/Operation and Maintenance Costs to Respondents or Record Keepers

A.14. Estimates of Costs to the Federal Government

A.15. Changes in Burden

A.16. Plans for Publication, Analysis, and Schedule

A.17. Approval to Not Display Expiration Date

A.18. Exceptions to Item 19 of OMB Form 83-I

II. Section B

Introduction

B.1. Respondent Universe and Sampling Methods

B.2. Information Collection Procedures/Limitations of the Study

B.3. Methods to Maximize Response Rate and Deal with Nonresponse

B.4. Tests of Procedures or Methods

B.5. Names and Telephone Numbers of Individuals Consulted

III. Appendix

Telephone Interview Protocol

Case Study Protocols

Confidentiality Pledge


Section A

Introduction to the Supporting Statement

Program Name: ADVANCE

The National Science Foundation (NSF) requests that the Office of Management and Budget (OMB) approve, under the Paperwork Reduction Act of 1995, clearance for NSF to conduct data collection for the evaluation of the ADVANCE Program funded by the Directorate for Education and Human Resources (EHR) at NSF.

The NSF funds research and education in science and engineering through grants, contracts, and cooperative agreements to more than 2,000 colleges, universities, and other research and/or education institutions in all parts of the United States. The Foundation accounts for about 20 percent of Federal support to academic institutions for basic research. The mission of EHR is to achieve excellence in U.S. science, technology, engineering and mathematics (STEM) education at all levels and in all settings (both formal and informal) in order to support the development of a diverse and well-prepared STEM workforce. The ADVANCE Program is one of the efforts designed and supported by NSF to achieve this goal.

Program Overview: ADVANCE

The ADVANCE Program was established by NSF in 2001 to address the underrepresentation and inadequate advancement of women on STEM faculties at postsecondary institutions. Although women faculty in STEM have made progress towards achieving parity with their male counterparts, stubborn gaps exist at all junctures along the academic pipeline, from entry into a tenure-track faculty position to attainment of tenure, promotion to senior academic ranks, and assignment to positions of leadership in academe.

The ADVANCE Program is designed to allow institutions to identify specific structural and policy barriers to women’s representation and progress inherent in their own institutions and, most importantly, to devise and pursue remedial strategies. Through funding provided to successful applicants for up to five years, the ADVANCE Program has encouraged and supported the development of interventions to remedy the underrepresentation of women in academe, with the explicit goal of institutionalizing and disseminating successful practices in the hope that reforms will outlive the funding phase and spread to other institutions.

The ADVANCE Program has three main components: Institutional Transformation, Leadership, and PAID projects.¹ Each of these components represents a distinct approach to addressing women’s underrepresentation and lack of advancement in STEM academic careers:

Institutional Transformation Awards: Begun in 2001, these awards encourage changes in institutional and departmental systems and infrastructure to increase the representation of women STEM faculty and their advancement to senior ranks and leadership positions.

Leadership Awards: Leadership awards were established in 2001 to build on the contributions of organizations and individuals who have been leaders in increasing participation and advancement of women in academic science and engineering careers and to help them sustain, broaden and initiate new activities.

PAID (Partnerships for Adaptation, Implementation and Dissemination): Established in 2006, this funding incorporates the Leadership awards and supports analysis, adaptation, dissemination and use of existing innovative materials and practices known to be effective in increasing the representation and participation of women in science and engineering careers.

Evaluation Overview: the Study Design

Program components. The main focus of this implementation study will be on the core component of ADVANCE—the Institutional Transformation (IT) awards. We will also conduct a descriptive analysis of PAID and Leadership awards in terms of key project characteristics.

Cohorts. As Table 1 shows, every cohort of IT awards for which reasonable outcomes data might be expected (that is, for which sufficient time has elapsed since the award) will be included in the evaluation. Therefore, all grantees in cohorts 1 and 2 (2001 and 2002) will be included, and current grantees (2006) will be excluded. In addition, all existing cohorts of Leadership and PAID awards as of the start date of this evaluation will be included, because the analysis of these two program components will be descriptive.

ADVANCE Component 1: Institutional Transformation (IT)

As a process evaluation, the main objectives of the study are to (1) document how grantees have structured their strategies and activities to meet the ADVANCE IT goals and identify major models that emerge; (2) identify the major theories of change on which these strategies seem to be based; and (3) describe how grantees have implemented institutional change processes. The study will also examine whether and how approaches chosen by grantees differ by institutional type, characteristics, and context.

The determination of projects’ success in achieving most of the ADVANCE intermediate and long-term outcomes is beyond the scope of this evaluation and will be addressed in the summative evaluation to be conducted in the future. We will, nevertheless, attempt to document the degree to which projects have been able to institutionalize and disseminate aspects of institutional transformation achieved by the project. The process evaluation will also situate the projects’ approaches and strategies within the institutional change literature.

Conceptual Framework

Figure 1 is a conceptualization of the process by which grantees achieve project goals. The shaded area shows the focus of the process evaluation, which, as described above, is to document strategies of institutional transformation within the context of the institutional change literature and the context of the institutions themselves.

Study Questions

The following questions guide the process evaluation of the IT component:

What are the main components/activities pursued by the grantee institutions to achieve the project goals?

What identifiable models emerge from the constellation of activities chosen by the individual grantees to implement their projects? Do these vary by institutional characteristics?

Which major theories of institutional change can be identified in the approaches that undergird the main models?

How have the individual grantees chosen to implement the three processes of institutional change (identified in the conceptual model)? Which implementation strategies have been successful? Does the way the grantee institutions implement processes of institutional change vary by institutional characteristics or context? By IT model?

Figure 1. Conceptual Framework: NSF’s Institutional Transformation Program

[Diagram. Shaded box labeled "Institutional Transformation Process Evaluation Focus." Boxes in the diagram:]

ADVANCE Activities: leadership development; networking; policy/practice reform; work-life support; culture/climate reform; data collection and monitoring; problem identification; assessment of progress

Strategies for Achieving Institutional Transformation

Processes of Institutional Change (Eckel, Green and Hill, 2001): defining and creating context for change; choosing and developing change strategies; monitoring and measuring progress

Theories of Institutional Change: Transtheoretical Model; Dual Agenda; Schein/Lewin Change Theory; theories of cultural change (Bergquist, Tierney)

Outcomes of Institutional Transformation:

Intermediate Outcomes: increase in women hired, promoted, and tenured; increase in women in leadership positions; changes in policies and practices for tenure, recruitment, hiring, promotion, and resource allocation; parity in allocation of resources; decreased isolation of women; improved climate for women; establishment of facilities related to work-life support (lactation center, day care); system for reporting gender data for monitoring and decision making

Longer-Term Outcomes: increase in number of female faculty members; increase in number of senior female faculty members; increase in number of tenured female faculty members; institutionalization of gender-friendly policies; dissemination

Shaded box = focus of the implementation evaluation of ADVANCE.

Data Collection

The evaluation of IT entails two primary modes of data collection: telephone interviews and case studies.

We conducted telephone interviews with grantees at the nineteen institutions funded through the first two cohorts (2001 and 2002) to gather implementation data as well as information on changes in policies and practices and on the institutionalization and dissemination of ADVANCE approaches. Main informants included the project director as well as additional key personnel identified by the project director. Through the telephone interviews (and document review), we identified different models of implementation of the ADVANCE Program.

Case studies (to be conducted in Fall 2010 and for which we request clearance) will involve site visits and in-depth interviews with faculty and administrators as well as focus groups with faculty. Data gathered during the site visits will be used to write case studies, which will yield greater understanding of how interventions were implemented within diverse settings, what barriers and facilitating factors were encountered, how barriers were addressed and how facilitating factors were leveraged. Through the case studies of institutions in each of the models, we will seek to:

explain the processes that drove the development of the models and may explain the variations,

examine the role of theories of institutional transformation in implementing the given model of change,

identify differences within model type (especially in terms of process) that can be attributed to differing characteristics such as size and Carnegie classification,

document the development, dissemination, and adoption of innovations pioneered by ADVANCE sites,

identify factors that encouraged or inhibited the success of projects, and

determine in greater depth factors most responsible for the successful institutionalization of key ADVANCE components.

ADVANCE Component 2: Leadership Awards

The evaluation of the Leadership component of ADVANCE will be descriptive and will rely on a review of documents to provide a summary analysis of the characteristics of Leadership awards made. All available dimensions of Leadership awards will be analyzed and reported to provide NSF with a descriptive landscape of awards to date.

The population of Leadership awards funded in 2001, 2003, and 2005 (30 awards) will be included. After the document review is finalized, recipients of Leadership awards at institutions selected for site visits will be interviewed during the visits to assess the role these awards may have played in advancing gender equity at the selected IT awardee institutions. Three Leadership awards have been made at institutions that also had an IT award in the cohorts included in this evaluation.

ADVANCE Component 3: PAID Awards

The last component of ADVANCE to be studied, Partnerships for Adaptation, Implementation, and Dissemination (PAID), was recently launched. Therefore, the evaluation will review available documents to provide a summary analysis of the main characteristics of PAID awards, similar to that conducted for Leadership awards.

The population of awards funded thus far (15 awards to 19 institutions in 2006) will be included (i.e., no sampling will be carried out). Lastly, if institutions selected for case study site visits (as part of the IT component of the evaluation) are also recipients of PAID awards, questions regarding these awards will be incorporated into interviews and focus groups. Six PAID awards have been made at institutions that also had an IT award in the cohorts included in this evaluation.

A.1. Circumstances Requiring the Collection of Data

The ADVANCE Program was initiated in 2001. Since then, NSF has invested over $130 million to support ADVANCE projects at more than one hundred institutions of higher education and STEM-related not-for-profit organizations in forty-one states, the District of Columbia, and Puerto Rico. A process evaluation of the ADVANCE Program has not previously been conducted, so the results of an in-depth analysis of program implementation data can be obtained only through the data collection activities proposed here. Data collected in this evaluation will enable program administrators to better assess their funding approach and to make informed decisions regarding award strategies and program development. Lastly, the evaluation data will enable NSF to meet its annual Government Performance and Results Act (GPRA) reporting responsibilities.

A.2. Purposes and Uses of the Data

The purpose of this proposed data collection is to support the implementation evaluation of the ADVANCE Program.

A.3. Use of Information Technology and Burden Reduction

The use of automated information technology is limited in this study. Document review, however, will be facilitated through direct download of project documents from the NSF project database. A member of the research team from the Urban Institute has successfully completed training to obtain clearance to access the NSF project database. In an effort to minimize burden on participants, the evaluation will rely upon information that can be obtained through extant data sources. Only data not available through other sources will be collected.

Telephone Interviews. Drawing on information available from other sources, we designed a telephone interview protocol to obtain needed information on the implementation of ADVANCE at each grantee site that is generally not available elsewhere. In some instances, information from other sources will be inserted into the interview protocol so that the interviewee can verify and/or update it. In others, we may purposefully ask a question as a validity check on extant data. Responses and general feedback obtained during field tests guided the wording of questions, the addition of probes, and the exclusion of questions deemed unnecessary. See the Appendix for the telephone interview protocol, and Section B.4 for details regarding the field test of this instrument.

Case Study Site Interviews and Focus Groups. After conducting the review of project documents as well as the telephone interviews with all former grantees, we analyzed the data to select six sites for in-depth study and to develop protocols to be used during the site visits. In case study sites, we will interview project staff and university administrators, and conduct focus groups with faculty. The draft interview and focus group protocols to be used during case studies are included in the attached appendix.

A.4. Efforts to Identify Duplication

The implementation evaluation of the ADVANCE Program does not duplicate other NSF efforts. The data being collected for this evaluation have not been and are not being collected by NSF or by other institutions.

A.5. Impact on Small Businesses or Other Small Entities

No small businesses will be involved in this study.

A.6. Consequences of Not Collecting the Information

If the information is not collected, NSF will be less prepared to meet its accountability requirements and to make future program decisions. Findings drawn from the data collected and analyzed in this evaluation will enable NSF to assess its success in broadening participation in the STEM workforce.

A.7. Special Circumstances Relating to the Guidelines in 5 CFR 1320.6

The data collection will comply with 5 CFR 1320.6.

A.8. Consultation Outside the Agency

The original notice for this information collection request was published in the Federal Register, Vol. 74, No. 4, Monday, May 18, 2009. No comments were received, and clearance was granted by OMB from October 2009 through October 2012 (#3145-0209). Per the Notice of Action dated October 7, 2009, NSF is now seeking approval for the case studies and the focus groups. The Federal Register notice was published at 75 FR 47645 on August 6, 2010, and no comments were received.

The evaluation design was developed in consultation with NSF staff from the Division of Human Resource Development (HRD), which is the division within the Directorate for Education and Human Resources (EHR) that funds the ADVANCE Program, and with an advisory board composed of experts in higher education, organizational change, and gender equity.

The advisory board will meet with evaluators annually to review evaluation activities and provide advice on plans for activities during the following year. Board members will also provide feedback on an as-needed basis. The first advisory board meeting was held in March 2009. Advisory board members provided input into the design of the evaluation and, later in 2009, into the telephone interview protocol included in this application. The next advisory board meeting (contingent on OMB approval of the case study component of this collection) is currently being scheduled to obtain feedback on existing analysis, case study selection, and next steps.

A.9. Payments or Gifts to Respondents

No payment or gifts will be provided to respondents.

A.10. Assurance of Confidentiality

Participants in this evaluation will be assured that the information they provide will not be released in any form that identifies them as individuals. Evaluation findings on the projects will be reported in aggregate form in the final report. The evaluation contractor, The Urban Institute, has extensive experience collecting information and maintaining the confidentiality, security, and integrity of data.

In compliance with Urban Institute policy, the evaluators will obtain IRB clearance prior to collecting any data and will adhere to the following confidentiality and data protection procedures in conducting this evaluation:

Confidentiality pledge: Evaluation team members will be instructed to treat all data collected as confidential. In addition, all staff members participating in work related to the ADVANCE evaluation will sign a confidentiality pledge (found in the Appendix). Any new or additional staff involved with this project in the future will be asked to sign a confidentiality pledge which will be retained in our files.

Interviewees will be provided with the following statement of confidentiality: “The information that you provide will be kept confidential, and will not be disclosed to anyone but the researchers conducting the study.” Respondents will also be told that participation in the study is voluntary and that there will be no consequences to non-participation.

Restricted Access: Access to the database used to store answers, as well as any electronic files that may contain confidential information, will be strictly limited to authorized project members only.

Storage: Paper files (such as handwritten notes and interview transcripts) and tapes will be kept in a locked file. Access to these documents will be restricted to the Principal Investigator and the primary researchers in charge of the data collection.

Disposal: All paper documents and tapes will be shredded as soon as the need for the hard copies no longer exists.

Backups: All basic computer files will be duplicated on backup disks to allow for file restoration in the event of unrecoverable loss of the original data. These backup files will be stored under secure conditions (locked file cabinet, as identified in the Urban Institute IRB security plan for the evaluation) and access will be restricted to authorized project personnel.

Reporting: All data will be reported in aggregate form and will not contain any identifying information on the interviewee.

Identifiers: The database created to store interview transcripts will contain no identifiers (no names, addresses or phone numbers) as they are not needed for analyses.

Linkage file: A linkage file containing identifying information will be stored under secure conditions in case it is needed for subsequent follow up or verification. This linkage file will only be available to the evaluation Co-Principal Investigators.
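The separation between the identifier-free transcript database and the restricted linkage file described above can be illustrated with a minimal Python sketch. It assumes records arrive as dictionaries; the field names (`name`, `address`, `phone`, `role`, `transcript`) and the function `split_identifiers` are hypothetical and are not taken from the Urban Institute IRB security plan. The study ID is random rather than derived from the respondent's identity, so it cannot be reversed without the linkage file.

```python
import secrets

# Hypothetical identifying fields; the actual fields are defined by the IRB security plan.
IDENTIFYING_FIELDS = ("name", "address", "phone")


def split_identifiers(records):
    """Split interview records into identifier-free records and a linkage map.

    Returns (deidentified, linkage):
      - deidentified: records with identifying fields removed and a random study_id added
      - linkage: study_id -> identifying fields, intended for separate, restricted storage
    """
    deidentified, linkage = [], {}
    for record in records:
        # Random ID, not derived from the respondent's identity.
        study_id = secrets.token_hex(8)
        while study_id in linkage:  # guard against an (unlikely) collision
            study_id = secrets.token_hex(8)
        linkage[study_id] = {k: record[k] for k in IDENTIFYING_FIELDS if k in record}
        clean = {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}
        clean["study_id"] = study_id
        deidentified.append(clean)
    return deidentified, linkage


# Illustrative use with a made-up record.
records = [{"name": "Respondent A", "phone": "555-0100",
            "role": "project director", "transcript": "(transcript text)"}]
deid, link = split_identifiers(records)
```

Under this design only `deid` would go into the analysis database, while `link` would be kept under the restricted conditions described for the linkage file.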

A.11. Justification for Sensitive Questions

Data collection instruments will not include any sensitive questions (see the protocols included in the Appendix).

A.12. Estimate of Hour Burden Including Annualized Hourly Costs

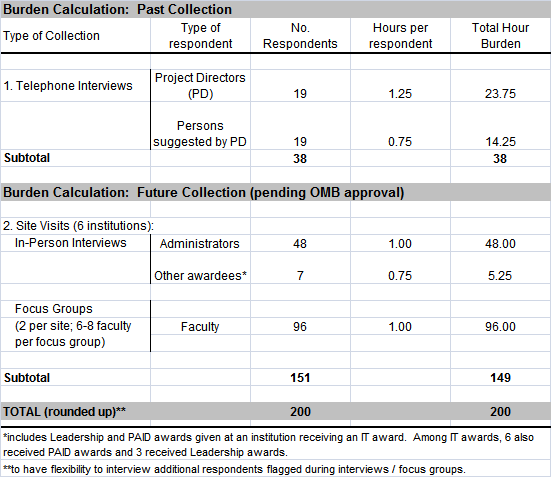

The hour burden estimates are based on time estimates derived from the field tests already completed for the telephone interview. Estimates for the site visit interviews are based on the average response time for similar instruments used in other studies.

The hour burden estimated in the request for clearance for this collection (telephone interviews) is 38 hours. The hour burden estimated for the additional collections (interviews and focus groups conducted during site visits) is 149 hours.

A.13. Estimate of Other Total Annual Cost Burden to Respondents or Record-keepers

There are no annualized capital/startup or ongoing operation and maintenance costs involved in collecting the information. Other than the opportunity costs represented by the time to complete the interviews and focus groups, there are no direct monetary costs to study participants.

The opportunity costs associated with this data collection can be quantified by taking the average hourly wage of faculty included in the focus groups, project directors interviewed by telephone, and administrators interviewed during site visits, as follows.

Total overall cost to the respondents is estimated to be $9,904, divided into $2,364 for the present request (telephone interviews) and $7,540 for the future request (interviews and focus groups conducted during site visits). See the table below.

For the telephone interviews with project directors and the in-person administrator interviews, total cost is derived by multiplying the time burden (i.e., 1.25 hours per project director, 0.75 hours for each additional interviewee suggested by the project director, and 1 hour per administrator) by the average hourly wage of a full professor at a public university, adjusted upward as noted in the table below. For the faculty focus groups, total cost is derived by multiplying the time burden (1 hour) by the average hourly wage of an associate professor, also adjusted upward. Note that, for estimation purposes, we use the associate professor salary as an average because faculty of all ranks (assistant, associate, and full professors) will be included in the focus groups. The table below shows the estimated total cost to respondents, by type of respondent, and provides details regarding sources of data and adjustments.
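The cost arithmetic in A.12 and A.13 (time burden multiplied by an adjusted hourly wage, then summed across requests) can be checked with a short Python sketch. It uses only the hour and dollar totals stated in the text. The per-respondent wages appear only in the burden table and are not reproduced, so `respondent_cost` is a generic, hypothetical helper and not the table's exact method. The per-hour figures it prints are derived blended averages, not wages stated in this request.

```python
def respondent_cost(hours_per_respondent: float, respondents: int, hourly_wage: float) -> float:
    """Opportunity cost = time burden x number of respondents x adjusted hourly wage."""
    return hours_per_respondent * respondents * hourly_wage


# Totals stated in A.12 (hours) and A.13 (dollars), by request.
hours = {"telephone interviews": 38, "site visits": 149}
costs = {"telephone interviews": 2364, "site visits": 7540}

total_hours = sum(hours.values())
total_cost = sum(costs.values())

print(f"Total burden: {total_hours} hours")  # prints "Total burden: 187 hours"
print(f"Total cost: ${total_cost:,}")        # prints "Total cost: $9,904"

# Derived blended cost per burden hour for each request (not a stated wage).
for request in hours:
    # telephone interviews: $62.21; site visits: $50.60
    print(f"{request}: ${costs[request] / hours[request]:.2f} per hour")
```

The lower blended rate for the site visit request is consistent with the text, which prices the faculty focus groups at an associate professor wage rather than a full professor wage.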

A.14. Annualized Cost to the Federal Government

The estimated cost to the Federal Government for the entire data collection (and all related activities) included in this request for approval is $314,695. This cost estimate includes: instrument development and field-testing; staff training; site recruitment; site visit travel and per diem; data collection and transcription; analysis; report writing; and expenses related to advisory board meetings.

A.15. Explanation for Program Changes or Adjustments

This is a new collection of information.

A.16. Plans for Publication, Analysis, and Schedule

Timeline for data collection and analysis. The Evaluation of the ADVANCE Program is a three-year evaluation covering October 17, 2008 – September 30, 2011. Collection of information for this evaluation begins with a thorough literature review, a review of ADVANCE project documents, and identification of preliminary models. It is followed by telephone interviews with project heads (September–October 2009) and case study site visits involving interviews and focus groups (November 2010). (The case study dates have been adjusted to await OMB clearance.) If OMB clearance is received in time to schedule all six site visits this fall, data collection will be completed by December 2010, analysis will take place in early 2011, and the final report will be submitted in June 2011.

Publications. The Urban Institute is conducting this third-party evaluation of the implementation of the ADVANCE Program on behalf of NSF, but is contractually prohibited from publishing results unless permitted to do so by NSF. After the final report is submitted, NSF will determine whether the products merit publication. Often it is only after seeing the quality of the information delivered by the study that NSF decides the format (raw or analytical) and manner (in the NSF-numbered product Online Document System (ODS) or simply a page on the NSF Web site) in which to publish. NSF plans to publish at least one analytical report in the ODS for the ADVANCE Program within two years of the study's conclusion, which is estimated to be 9/30/2011; that is, the report will be available on the NSF Web site within two years of the conclusion of the evaluation. NSF classifies formal publications as reports, not statistical reports. NSF may choose to allow the Urban Institute to publish the report on its website or through policy or academic publications, provided the Urban Institute obtains proper approvals and adheres to NSF requirements.

A.17. Approval to Not Display Expiration Date

Not applicable.

A.18. Exceptions to Item 19 of OMB Form 83-I

No exceptions apply.

1 Note that Fellowship awards given in 2001 and 2002 are excluded from the evaluation.
