Violence Paperwork Reduction Act Submission extension part A 7 6 09 (2)

An Impact Evaluation of a School-Based Violence Prevention Program

OMB: 1850-0814

Paperwork Reduction Act Submission

Supporting Statement

An Impact Evaluation of a School-Based Violence Prevention Program

PART A - Justification

[Request to Extend Data Collection Period for One Year]

Prepared for: U.S. Department of Education

Prepared by: RTI International

February 5, 2009

Table of Contents

1. Circumstances Making the Collection of Information Necessary

Conceptual Framework and Logic Model for the Intervention

2. Purpose and Use of the Information Collection

3. Use of Automated, Electronic, Mechanical, or Other Technological Collection Techniques

4. Efforts to Identify and Avoid Duplication

5. Impact on Small Businesses or Small Entities

6. Consequences of Not Conducting the Data Collection or Collecting It Less Frequently

7. Special Circumstances

8. Federal Register Announcement and Consultation

9. Explanation of Any Payment or Gift to Respondents

10. Assurance of Confidentiality

11. Justification for Sensitive Questions

12. Estimates of Hour Burden

13. Estimate for the Total Annual Cost Burden to Respondents or Recordkeepers

14. Estimates of Annualized Costs to Federal Government

15. Reasons for Program Change

16. Plans for Tabulation and Publication and Project Time Schedule

Impact Analyses for the School-Based Violence Prevention Program

17. Request for Approval to Not Display OMB Approval Expiration Date

18. Exceptions to Certification for Paperwork Reduction Act Submissions

Appendix A: Student Survey

Appendix B: Teacher Survey

Appendix C: Violence Prevention Coordinator Interview

Appendix D: Violence Prevention Staff Interview Guide

Appendix E: School Management Team Interview Guide

Appendix F: RiPP Implementation Records

Appendix G: Classroom Observations

Appendix H: School Policy Violations and Disciplinary Actions

Appendix I: Individual Student Records

Appendix J: List of Experts Consulted

Appendix K: Teacher Survey Assent Form

Appendix L: Parental Consent Form

List of Exhibits

Exhibit 1. Research Question

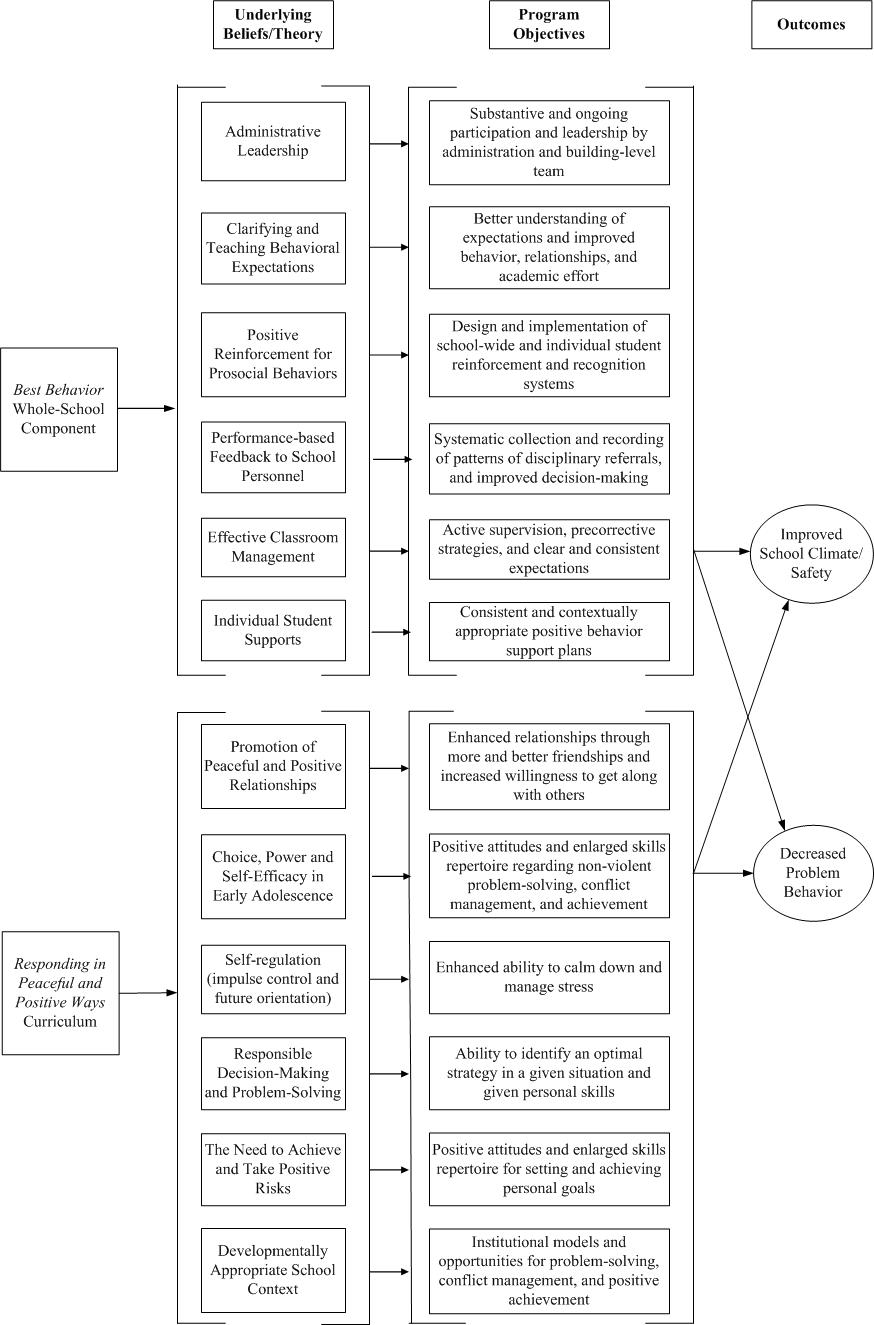

Exhibit 2. Logic Model for Intervention Combining RiPP and Best Behavior

Exhibit 3. Data Collection Activities

Exhibit 4. Burden in Hours to Respondents

Exhibit 5. Effect of Violence Prevention Intervention on Violent Behaviors

Exhibit 6. Effect of Violence Prevention Intervention on Student Infractions (Binary Outcomes)

Exhibit 7. Proposed Data Collection Points

Exhibit 8. Project Schedule

PAPERWORK REDUCTION ACT SUBMISSION

SUPPORTING STATEMENT

Agency: Department of Education

Title: An Impact Evaluation of a School-Based Violence Prevention Program

Student Survey

Teacher Survey

Violence Prevention Coordinator Interview

Violence Prevention Staff Interview Guide

School Management Team Interview Guide

RiPP Implementation Records

Classroom Observation Form

School Policy Violations and Disciplinary Actions

Individual Student Records

Form: N/A

A. JUSTIFICATION

Abstract

In an ongoing effort to reduce the levels of violence and bullying in our nation’s middle schools, the U.S. Department of Education is sponsoring a national study of a violence prevention intervention. The purpose of the study is to implement and test an intervention that combines a classroom-based curriculum with a whole-school approach. The study will implement and evaluate the program using a rigorous randomized control trial design.

In 1986, Congress enacted the Drug-Free Schools and Communities Act (DFSCA) as Subtitle B of Title IV of the Anti-Drug Abuse Act of 1986, which quickly became the largest federal effort to prevent alcohol and other drug use among the nation’s school-aged youth. As safety in our schools became a more pressing concern—and after the President and the nation’s governors adopted the National Education Goals for the year 2000, including the goal for safe, drug-free, and disciplined schools—Congress reauthorized the DFSCA as Title IV, Safe and Drug-Free Schools and Communities Act of 1994 (SDFSCA). A major change brought about by this reauthorization was the inclusion of violence prevention as a supported activity under the Act. State grants from SDFSCA provide funding to approximately 98% of all school districts to implement programs that target youth alcohol and other drug use and school safety.

The No Child Left Behind Act of 2001 (NCLB) reauthorized the Elementary and Secondary Education Act of 1965. Section 4121 of the NCLB authorizes the Secretary to carry out programs to prevent the illegal use of drugs and violence among, and promote safety and discipline for, students, including the development, demonstration, scientifically based evaluation, and dissemination of innovative and high quality drug and violence prevention programs and activities, based on State and local needs.

RTI International (RTI), under contract with the National Center for Education Evaluation in the Institute of Education Sciences at the U.S. Department of Education (ED), is conducting a five-year study, An Impact Evaluation of a School-Based Violence Prevention Program. Subcontractors Pacific Institute for Research and Evaluation (PIRE) and Tanglewood Research, Inc. are collaborating with RTI in this effort. This study will provide rigorous and systematic information about the effectiveness of a hybrid model of middle school violence prevention that combines a curriculum-based approach with a whole-school approach, a model that is hypothesized to be effective but has not been rigorously tested.

1. Circumstances Making the Collection of Information Necessary

This is a request to extend the current data collection period for this research study by one year to 5/31/10. Nothing in the justification statement itself has been revised, including the burden estimates.

Despite its overall decline in the past decade, school crime continues to be a major concern for many schools and their students. Violent school crime is a particular concern for middle schools because middle-school-age children are more likely to be victims of school violence than their high-school-age counterparts (Indicators of School Crime and Safety, 2003). However, the violence prevention programs available for middle schools lack rigorous evaluation. The Department has been advised by a group of experts in school violence to evaluate a violence prevention program for middle schools that combines two approaches: (1) a curriculum-based model to facilitate students’ social competency, problem solving, and self-control skills, and (2) a whole-school model that targets school practices and policies, usually through classroom management or teaching strategies, or through systemic reorganization and modification of school management, disciplinary policies, and enforcement procedures. Through an open competition and advice from a panel of experts in the field of violence prevention, the program Responding in Peaceful and Positive Ways (RiPP) was chosen as the curriculum-based component of the intervention and the Best Behavior program was chosen as the whole-school component of the intervention.

The study will be conducted in 40 middle schools that include grades 6 through 8, of which half will be randomly assigned to receive the violence prevention program being tested and half to serve as a control school (with no intervention). Data will be collected at each middle school over three years of program delivery, during the 2006-2007, 2007-2008, and 2008-2009 school years.
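
As an illustration only, the equal split of 40 schools into treatment and control conditions described above could be sketched as follows. This is a minimal sketch of simple random assignment, not the study's actual assignment procedure: the school identifiers, the seed, and the function name `assign_schools` are hypothetical, and any blocking or matching the study may use is not shown.

```python
import random


def assign_schools(school_ids, seed=2006):
    """Randomly split schools into equal-sized treatment and control groups.

    Hypothetical helper for illustration; the seed is arbitrary and is fixed
    only so that the assignment can be reproduced and audited.
    """
    if len(school_ids) % 2 != 0:
        raise ValueError("an even number of schools is needed for an equal split")
    rng = random.Random(seed)
    shuffled = list(school_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "treatment": sorted(shuffled[:half]),
        "control": sorted(shuffled[half:]),
    }


# Hypothetical identifiers standing in for the 40 study schools.
schools = [f"school_{i:02d}" for i in range(1, 41)]
groups = assign_schools(schools)
```

Assigning at the school level, rather than the student level, matches the design in the text, where each school either receives the whole intervention or serves as a control.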

Research Questions

Exhibit 1. Research Question

Student Outcomes and Impacts

Intervention Implementation

Overall Approach

The study has three major components: program selection, implementation, and evaluation.

Program Selection

RTI and its subcontractors assisted the Department in selecting programs to test in this study by soliciting proposals from the developers of school-based violence prevention programs and convening a group of experts to review these proposals. RTI held an open competition which was announced through a study website, email distribution lists at the U.S. Department of Education, relevant conferences, and direct contact with all known program developers of school violence prevention programs.

After a careful review of the proposals by the panel, a decision was made to award the subcontract for the violence prevention curriculum to the developers of Responding in Peaceful and Positive Ways (RiPP). Selection of this program was recommended based on a number of factors, including: the program is developmentally appropriate, is designed for middle school students, and is implemented sequentially by grade level; the program has the best preliminary evidence of effectiveness of any violence prevention program; the majority of teaching and training materials are fully developed; and the program is ready to scale up. The Best Behavior program was selected as the whole-school component because it is a research-based program that has been paired successfully with curriculum-based programs similar to RiPP, and incorporates school-wide activities at the school and classroom levels.

Program Implementation

An implementation team formed by Tanglewood Research staff and a cadre of locally based site monitors will be responsible for overseeing full and faithful implementation of RiPP and Best Behavior. Implementation activities include staff and/or teacher training, early program troubleshooting and continued program monitoring, and ongoing technical assistance. Under contract with RTI, the RiPP and Best Behavior program developers will assist the implementation team with these activities. The implementation team will also hire and train coaches/monitors who will visit each of the treatment schools at least one day a week during the school year for an entire day in order to meet with staff who are responsible for program delivery and to observe the program in action throughout the intervention.

Program Evaluation

An evaluation team formed by RTI and PIRE staff will perform all activities needed to rigorously evaluate the intervention. A process evaluation component will assess fidelity of implementation, level of student attendance, training of implementers, implementation of interventions other than the test intervention, and other issues relevant to effective implementation. An outcome evaluation will assess intervention and control schools’ changes in disruptive, aggressive, and violent behaviors, other in-school outcomes, behaviors such as delinquency, and mediating and moderating variables related to the program’s logic model. The evaluation will assess outcome changes in intervention schools compared to control schools, the extent to which changes in outcomes are linked to implementation of the intervention, and whether the effects of the program vary by student risk characteristics. The evaluation will also examine the costs of the program.

The study will be conducted in a set of middle schools that include grades 6 through 8. Schools will be randomly assigned either to receive the combined violence prevention intervention or to serve as a control school (with no intervention beyond what is usually implemented). Students and teachers will be surveyed at each middle school over three years of program delivery. Data on students’ violations of school policies will also be collected, as will data on these violations for schools as a whole. In addition, evaluation team members will conduct interviews with school staff and observe implementation of RiPP in classrooms, and RiPP teachers will provide further data on implementation.

Intervention

The intervention combines two approaches: (1) Responding in Peaceful and Positive Ways (RiPP), a curriculum-based model to facilitate students’ social competency, problem solving, and self-control skills, and (2) Best Behavior, a whole-school model that targets school practices and policies through classroom management or teaching strategies, or through systemic reorganization and modification of school management, disciplinary policies, and enforcement procedures. (See logic model in Exhibit 2).

Exhibit 2. Logic Model for Intervention Combining RiPP and Best Behavior

The combined intervention will be implemented in approximately 20 middle schools for three consecutive school years (i.e., in the 2006-2007, 2007-2008, and 2008-2009 school years). The whole-school component will begin during spring 2006, prior to the 2006-07 school year.

Conceptual Framework and Logic Model for the Intervention

The goal of the violence prevention program is to improve the safety and climate of the school and to decrease violent and aggressive acts by students (Exhibit 2). These outcomes will be measured in the study. The program is predicted to affect these behaviors through a number of intermediate (or mediating) factors. The violence prevention program is a hybrid of a curriculum (RiPP) and a whole-school intervention (Best Behavior).

RiPP is a universal social-cognitive violence prevention program focused primarily on situational and relationship violence. The program is designed to increase social competence and thereby reduce violent behavior and other problem behaviors and improve school climate and safety. The RiPP curriculum for this study consists of 16 fifty-minute lessons delivered over the course of a school year. Grades 6, 7, and 8 each receive 16 lessons designed specifically for that grade level.

The Best Behavior whole-school component aims to improve school and classroom discipline and is designed to complement and reinforce the RiPP concepts taught in the classroom. This component will be implemented by a school management team made up of teachers and administrators. The team will meet weekly for the first two months of the program and monthly thereafter. The whole-school component will involve intervention strategies at the school and classroom levels, including:

review and refinement of school discipline policies;

systematic collection of discipline referrals and review of referral patterns to guide decision making and planning;

instruction on classroom organization and management techniques;

use of positive reinforcement and recognition for prosocial behavior in classrooms and school-wide; and

clarification and teaching of behavioral expectations for students.

In addition, teams will be taught highly effective procedures for defusing angry and aggressive behavior in the classroom. For the purposes of this study, Best Behavior will be adapted to reinforce and coordinate with the RiPP curriculum.

2. Purpose and Use of the Information Collection

The purpose of the evaluation is to determine the overall effectiveness of a violence prevention intervention that combines two approaches: (1) a curriculum-based model to facilitate students’ social competency, problem solving, and self-control skills (Responding in Peaceful and Positive Ways – RiPP), and (2) a whole-school model that targets school practices and policies through classroom management and teaching strategies, and through systemic reorganization and modification of school management, disciplinary policies, and enforcement procedures (Best Behavior). The evaluation will provide important and useful information by helping to determine if the intervention decreases problem behaviors and improves school climate and safety.

Reducing school violence and bullying and improving school and classroom climate are important to the Department of Education—particularly in middle schools, which often experience more problems of this nature than elementary or high schools. This study will provide valuable evaluative information regarding the RiPP and Best Behavior programs, which are very promising violence prevention programs designed for middle schools. This study will be of great utility to the Department in its efforts to decrease school violence and improve school and classroom climate. We will present our findings to the Department via reports and briefings. We will also provide the Department a data file of all data collected in the study, along with supporting documentation.

RTI and its subcontractors will faithfully implement a school-based violence prevention program and conduct an independent and rigorous evaluation using a randomized control trial (RCT) design. The evaluation will assess outcome changes in intervention schools compared with control schools, the extent to which changes in outcomes are linked to implementation of the intervention, and whether the effects of the program vary by various profiles of student risk. To evaluate the effectiveness of the intervention, the evaluation will collect data from middle schools in both treatment and control conditions. Because the data collected at each school will be combined with, and compared against, those collected from other schools, it is critical that data collection procedures and elements be uniform across all the schools. RTI has designed a complementary set of data collection tools to capture information on key components of the intervention, outcomes the intervention is intended to influence, and potential mediators through which such influence is expected to occur. Exhibit 3 summarizes the surveys, survey format, data collection schedule, and respondents. In the section below, we describe the key topic areas to be addressed by each of the data collection instruments.

Exhibit 3. Data Collection Activities

| Instrument | Source | Condition | Year 1: Fall 06 | Year 1: Spring 07 | Year 2: Fall 07 | Year 2: Spring 08 | Year 3: Fall 08 | Year 3: Spring to Fall 09 |
|---|---|---|---|---|---|---|---|---|
| Student Self-report Surveys | Students | Treatment and Control Schools | | | | | | |
| Teacher Surveys | Teachers | Treatment and Control Schools | | | | | | |
| Violence Prevention Coordinator Interview Guide | Violence Prevention Coordinator | Treatment and Control Schools | | | | | | |
| Violence Prevention Staff Interview Guide | Violence Prevention Staff | Treatment Schools | | | | | | |
| School Management Team Interview Guide | School Management Team Members | Treatment Schools | | | | | | |
| RiPP Implementation Records | RiPP Teachers | Treatment Schools | | | | | | |
| Classroom Observations | Evaluation Staff | Treatment Schools | | | | | | |
| School Policy Violations and Disciplinary Actions | Principals | Treatment and Control Schools | | | | | | |
| Individual Student Records | Principals | Treatment and Control Schools | | | | | | |

The Student Survey (Attachment A) will be conducted in both treatment and control schools. The student survey will be administered to all 6th graders in the fall and spring of the 2006-2007 school year. Respondents who remain in study schools will complete the survey again in spring 2008 as 7th graders. The survey will be administered to all 8th graders in the spring of 2009. Questions will ask about students’ social competency skills; aggressive or disruptive conduct; attitudes toward violence; victimization by violence or bullying; and perceptions of safety at school and related avoidance behaviors. The survey will be self-administered in a classroom setting. It will be available in both English and Spanish and will take approximately 45 minutes to complete.

The Teacher Survey (Attachment B) will be completed by teachers in both intervention schools and comparison schools. Questions will ask about teachers’ perceptions of the level of disruptive behaviors in class, perceptions of school climate, experience with victimization, and feelings of safety in school. It will take approximately 30 minutes to complete. The survey will be administered to a random sample of 24 teachers (stratified by grade) at each of the middle schools participating in the study. Teachers will complete the survey in spring of 2007, 2008, and 2009, with a new random sample of teachers selected each year.
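
For illustration, a grade-stratified draw of 24 teachers per school might look like the sketch below. The text specifies only that the sample is stratified by grade; the equal allocation of 8 teachers per grade, the roster format, the seed, and the function name `sample_teachers` are assumptions made for this example and are not part of the study protocol.

```python
import random


def sample_teachers(teachers_by_grade, total=24, seed=2007):
    """Draw an equal-sized simple random sample of teachers within each grade.

    Hypothetical helper: equal allocation across grades is an assumption,
    not something the source specifies.
    """
    grades = sorted(teachers_by_grade)
    per_grade, remainder = divmod(total, len(grades))
    if remainder:
        raise ValueError("total must divide evenly across grades")
    rng = random.Random(seed)
    sample = {}
    for grade in grades:
        roster = teachers_by_grade[grade]
        if len(roster) < per_grade:
            raise ValueError(f"grade {grade} has fewer than {per_grade} teachers")
        # Sampling without replacement within the grade stratum.
        sample[grade] = rng.sample(roster, per_grade)
    return sample


# Hypothetical roster: 15 teachers in each of grades 6, 7, and 8.
roster = {g: [f"g{g}_teacher_{i}" for i in range(1, 16)] for g in (6, 7, 8)}
picked = sample_teachers(roster)
```

Because a new sample is selected each spring, a different seed (or none) would be used for each survey year in a sketch like this one.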

The Violence Prevention Coordinator Interview (Attachment C) will provide information on school-wide policies and programs for violence prevention. The interview will ask about the school’s security, monitoring, and discipline policies and procedures; staff professional development for violence prevention; information regarding all of the school’s violence prevention efforts; and, in intervention schools only, the coordinator’s impressions of the teachers’ response to RiPP and how well RiPP and Best Behavior work together. Each interview will take approximately 45 minutes in control schools and 60 minutes in treatment schools. Data will be collected once each school year during the 2006-2007, 2007-2008, and 2008-2009 school years. We anticipate conducting the interviews around February so that respondents will be able to describe activities that have been conducted or are ongoing. We will revisit this schedule if necessary.

The Violence Prevention Staff Interview (Attachment D) will be conducted to obtain information on implementation of RiPP. Interviews will be conducted with teachers and other violence prevention staff implementing RiPP in treatment schools. The semi-structured interview will ask about RiPP implementation experiences, challenges, and impressions; the time taken by teachers to prepare, deliver, and follow up on the intervention; fidelity and adaptation; training and technical assistance; and how RiPP and Best Behavior fit together. Each interview will take approximately 50 minutes. Data will be collected once each school year during the 2006-2007, 2007-2008, and 2008-2009 school years. We anticipate conducting these interviews as part of the same site visit during which the Violence Prevention Coordinator interview will be conducted.

The School Management Team (SMT) Interview (Attachment E) will provide information on the implementation of the Best Behavior whole-school approach to violence prevention. The interview will ask about the SMT member’s background and violence prevention roles; learn how staff in each school have implemented Best Behavior; estimate the time taken by each member in SMT activities; assess fidelity of implementation; gather staff impressions on training and technical assistance received; and gather staff impressions on how well Best Behavior and RiPP have fit together and with other programs. Each interview will take approximately 50 minutes. Data will be collected once each school year during the 2006-2007, 2007-2008, and 2008-2009 school years. We anticipate conducting these interviews as part of the same site visit during which the Violence Prevention Coordinator interview will be conducted.

RiPP Implementation Records (Attachment F) will provide information on implementation of the RiPP curriculum and will be collected in treatment schools only. We will provide RiPP teachers standard reporting forms to record implementation information including program attendance, number of participants, and sessions covered or topics addressed. The data recording will take approximately 240 minutes over the course of the school year. Reports will be collected monthly during implementation in each treatment school, in the 2006-2007, 2007-2008, and 2008-2009 school years.

Classroom Observations (Attachment G) will provide information on tangible features of the violence prevention program such as adherence to program design, consistent delivery, and level of student participation. Evaluation staff members will be trained in the use of a standardized observation form and protocol to ensure consistency of observations across classrooms and schools. During annual site visits held each spring during program implementation, evaluation staff members will conduct observations in treatment school classrooms in which RiPP is implemented. Observations will be designed to minimize disruption to classroom implementation of RiPP.

School Policy Violations and Disciplinary Actions (Attachment H) will provide information on important outcomes at the school level and will be gathered every 8 weeks in treatment and control schools. We will provide materials with consistent operational definitions and data collection procedures for all study schools. Many schools will gather these types of data for other purposes and will be able to provide them with little effort. Where more effort is required, we will work with each school to identify mutually agreeable procedures for collecting the data. Possibilities include using our trained field data collection staff or reimbursing the school for staff time. Information gathered will include suspensions, expulsions, violent or delinquent infractions, and related disciplinary actions or referrals for counseling. The time required for gathering the data may vary among schools; we estimate that on average it will take approximately 95 minutes per school year. Data collection will be ongoing for each school year to ensure schools’ compliance with the data collection protocol. Data collection for the first year will begin in the fall of 2006 and continue until the spring of 2007; for the second year, it will begin in fall of 2007 and end in spring of 2008; and for the third year, it will begin in fall of 2008 and end in fall of 2009.

Individual Student Records (Attachment I) will provide information on behavioral outcomes for individual students in treatment and control schools. We will work with each school to identify mutually agreeable procedures for collecting the data. We will ask schools to provide electronic files if possible, in which case RTI will extract the pertinent data. (Like all project staff, these staff members will be required to sign a non-disclosure agreement promising to keep confidential all project data.) If it is necessary to manually abstract information from student records, our first preference will be to use our trained field data collection staff. Some schools may not allow that, so as an alternative we will reimburse the school for staff time devoted to abstracting student records data. Information gathered will include attendance, policy violations and consequences, and disciplinary actions (e.g., detention, suspension, expulsion). We estimate that abstraction will take on average 15 minutes per student. Data will be collected in the spring to fall of 2007, 2008, and 2009, following the completion of the school year.

3. Use of Automated, Electronic, Mechanical, or Other Technological Collection Techniques

We will collect only the minimum information necessary for the study. We have designed data collection procedures to minimize respondent burden and use reporting formats that are best-suited for the type of information to be gathered. The student surveys and the teacher surveys will be self-administered paper and pencil surveys using almost entirely yes/no, Likert scale, or checklist responses to expedite data collection. Responses to the student and teacher surveys will be recorded on forms designed to be optically scanned. For collection of school-wide crime and disciplinary data, student records data, and implementation records data, we will use existing data, in electronic format where possible. Where manual collection or abstraction is required we will use standard forms. We will use interviews with teachers and other violence prevention staff in order to obtain a complete and accurate view of violence prevention activities and policies. These interviews will include structured questions to ease reporting and maximize comparability of responses.

4. Efforts to Identify and Avoid Duplication

The Department of Education will submit 60-day and 30-day Federal Register notices intended to solicit public comment on the proposed information collection.

RTI has reviewed other evaluations of school violence prevention programs and believes the proposed information collection is unique in several regards. This data collection is expressly designed to measure important aspects of the implementation of the unique intervention that combines a whole-school approach with a student-focused curriculum, and to measure elements of the intended outcomes of decreased problem behavior and improved school climate/safety. No previous studies to our knowledge have assessed the implementation and impact of this type of combined intervention, which is thought to be a most promising approach to preventing violence in middle schools.

Moreover, this data collection is unique in its plans for collecting complementary, non-redundant information from the participants and stakeholders in this combined intervention. No available studies provide information from all sources necessary to rigorously evaluate this intervention, including student and teacher surveys, interviews with school staff, school records on violence-related infractions and disciplinary actions, records of participation in intervention activities, and classroom observations of intervention implementation. Data from these sources, which are specific to the intervention and its intended outcomes, are critical to the study and must be gathered in a uniform and consistent manner.

Extant, ongoing national surveys represent a potential for duplicate data collection. RTI explored the possibility of relying on data from surveys such as the Youth Risk Behavior Survey and Monitoring the Future and identified the following insurmountable problems: (1) these surveys are not conducted in all sites, (2) site-representative data are not available from many of the sites where these surveys are conducted, and (3) the data collected by these surveys do not include measures that would allow a comprehensive assessment of the impact of RiPP and Best Behavior.

5. Impact on Small Businesses or Small Entities

The respondents for the study are students, teachers, and other school staff. No small businesses or small entities will be involved in this data collection.

6. Consequences of Not Conducting the Data Collection or Collecting It Less Frequently

Without this data collection, the Department of Education cannot assess the impact of this unique intervention combining a whole-school approach with a student-focused curriculum to reduce violent and other delinquent behaviors among 6th, 7th and 8th grade students. In particular, it is critical to conduct data collection at specific times in schools participating in the study in order to measure changes in student behavior and school climate/safety and assess whether those changes are related to the intervention. Existing data from other schools collected at other times will not provide sufficient information to establish the impact of the intervention. The data collection described herein and analysis of these data will provide information critical to the development of effective school violence prevention programs, which will potentially advance the important field of school violence prevention. In addition, it is necessary to collect the data as scheduled to accurately assess the cumulative effects of the violence prevention program with each successive year of implementation; thus, data will be collected over the three consecutive years that the program is implemented.

7. Special Circumstances

There are no special circumstances involved with this data collection.

8. Federal Register Announcement and Consultation

A 60-day notice was published in the Federal Register on December 5, 2005, with an end date of February 3, 2006, to provide the opportunity for public comment. No substantive comments were received. In addition, as part of the evaluation design process, a panel of nationally known experts reviewed and commented on the overall evaluation strategy and some members provided input on measurement instruments. (See Attachment J for names of the experts who contributed.) The design and planning of the data collection effort were completed in collaboration with representatives from the Department of Education. These representatives were particularly interested and knowledgeable in school-based violence prevention programs and made helpful contributions.

9. Explanation of Any Payment or Gift to Respondents

We plan to follow the Guidelines for Incentives for NCEE Evaluation Studies prepared by the Department of Education and dated March 22, 2005. This memo outlines the circumstances in which respondent incentives are appropriate in NCEE studies and the maximum amounts permitted. This study meets the criteria for the use of respondent incentives as outlined in these Guidelines.

Teacher survey incentives. We will give each teacher an incentive each time that he or she completes the teacher survey. This incentive will be $30 in accordance with the amount stated in the Guidelines for a survey of medium burden. This incentive will be instrumental in obtaining completed teacher surveys for this study because teachers are the target of numerous requests to complete surveys on a wide variety of topics from state and district offices, independent researchers, and the Department of Education (PPSS and NCES). Further, the teachers’ school days are already quite busy, potentially requiring them to complete surveys outside school time. In some localities, collective bargaining agreements also do not allow teachers to complete surveys during school time.

Incentives for consent. In addition, we will provide a $25 incentive to classroom teachers or the school survey coordinator to encourage high rates of return for the parental consent forms needed for the student survey. Our preferred method of distributing consent forms is to include the forms in a packet parents receive at the school’s open house during enrollment at the beginning of the school year. Alternatively, homeroom teachers may distribute consent forms to their students or the school may wish to have the forms mailed directly to the students’ home. We will work closely with each school to ensure that parents who do not return the consent form are sent reminder notices with replacement forms. This process will be repeated, as needed, to achieve the target active parental consent rate. We will provide an incentive ($25 gift card) for each classroom in which at least 90% of the student survey parental consent forms are returned, whether or not the parent allows the student to participate in the student survey. The incentive will be provided either to the classroom teacher or to the school survey coordinator, based on who is responsible for monitoring the consent form returns, for the added burden this process will place. The purpose of the classroom incentive is to encourage the teacher or the survey coordinator to monitor consent returns carefully and follow up with students for whom a form has not been returned, encouraging students to return a signed form. We have used similar approaches successfully in previous studies. For example, in the cross-site evaluation of the Safe Schools/Healthy Students Initiative we told teachers and staff that we would provide each school an additional $25 for each classroom in which a minimum of 70% of active parental consent forms were completed by parents and returned to the school. In schools where we used this approach we achieved return rates of parental consent forms of 78%, on average. 
We will make this incentive opportunity available for each Grade 6 classroom participating in the survey in the 2006/2007 school year, and in the 8th grade classrooms participating in the 2008/2009 school year.

Control schools incentive. In accordance with the Guidelines, we will provide a stipend of $1500 to each control group school to partially offset costs related to participating in the study. Control group schools will receive this stipend each year that they participate in the study and will be offered the opportunity to use their stipends to purchase the violence prevention program and training at a discounted rate, following the third year of the study. Treatment schools will not receive this stipend because they are receiving the intervention.

The burden for control schools will be fairly high in this study and there will be no inherent benefit to the control schools resulting from their participation. Control schools will not receive the intervention under study for three years and will be asked to not implement any violence prevention programs similar to the intervention under study for three years. For control schools in this evaluation, there will be a student survey in the fall and spring, a teacher survey in the spring and interviews with multiple staff for multiple days during the year. In addition, control schools will provide records about school policy violations committed by students, and distribute and collect parental consent forms. The data collection will be spread throughout the school year and will occur over three consecutive years. This is a lengthy and intense fielding period with no direct benefits to the control schools, so it is important to use the incentives outlined in the Guidelines to maintain a commitment from these schools so that they will continue participation throughout the three years and provide us with all the data needed for the study.

Control schools that participate in the study will not necessarily receive Department grants from the Office of Safe and Drug Free Schools; thus, they will not be obliged to participate in a study of violence prevention for the Department. Partially off-setting the costs of participation of control schools will be important in order to maintain their continued participation and cooperation. Because random assignment takes place at the school level, not the student level, the loss of a single control school will result in the loss of a significant portion of the study sample. It is imperative to keep all control schools in the study or the result of the whole study could be vitiated. Also, this study is a random assignment impact study to which substantial Department of Education resources have been committed. Without high respondent completion rates for both the program and control groups, the investment of Department funds will not produce valid findings.

10. Assurance of Confidentiality

All data collection activities will comply with the Privacy Act of 1974, P.L. 93-579, 5 USC 552a; the “Buckley Amendment,” Family Educational Rights and Privacy Act of 1974, 20 USC 1232g; the Freedom of Information Act, 5 USC 552; and related regulations, including but not limited to: 41 CFR Part 1-1, 45 CFR Part 5b, and 40 FR 44502 (September 26, 1975); and, as appropriate, the Federal common rule or Department final regulations on protection of human research subjects. RTI International conducts all research involving human subjects in accordance with federal regulations (45 CFR 46 and 21 CFR 50 and 56). It is the responsibility of RTI's Office of Research Protection and Ethics (ORPE), as well as the research staff, to ensure that these regulations are followed. One of the functions of ORPE is to oversee RTI’s three Institutional Review Boards (IRBs), which review all research involving human subjects that is conducted by RTI researchers. RTI holds a Federalwide Assurance (FWA) from the Department of Health and Human Services' Office for Human Research Protections (OHRP). RTI assumes full responsibility for performing all research involving human subjects (regardless of funding agency) in accordance with that Assurance, including compliance with all federal, state, and local laws as they may relate to the research. RTI's IRB procedures are updated as needed and are available for review by any federal agency for whom RTI does human subjects research.

All data collection activities will be conducted in full compliance with Department of Education regulations to maintain the confidentiality of data obtained on private persons and to protect the rights and welfare of human research subjects as contained in Department of Education regulations. Research participants (students, teachers, and school staff) will sign written consent (or assent) forms (Attachments K and L). The consent materials will inform respondents about the nature of the information that will be requested and confidentiality protection, and they will be assured that information will be reported only in aggregate statistical form. The consent materials will also inform respondents that the data will be used only for research purposes by researchers who have signed a confidentiality agreement.

In addition to the consent forms, each self-administered instrument will include a reminder of the protection of confidentiality. Where data are collected through in-person interviews or group surveys, interviewers or survey administrators will remind respondents of the confidentiality protections provided, as well as their right to refuse to answer questions to which they object. During the group administration of the student survey report, desks will be arranged in classroom style to ensure that children cannot see the responses provided by classmates. All data collectors and interviewers will be knowledgeable about confidentiality procedures and will be prepared to describe them in full detail, if necessary, or to answer any related questions raised by respondents.

RTI has a long history of protecting confidentiality and privacy of records, and considers such practice a critical aspect of the scientific and legal integrity of any data collection. The integrity RTI brings to protecting data confidentiality and privacy will extend to every aspect of data collection and data handling for this study. RTI plans to use its ongoing, long-standing techniques that have proven effective in the past. Every interviewer will be required to sign a pledge to protect the confidentiality of respondent data. The pledge indicates that any violation or unauthorized disclosure may result in legal action or other sanctions by the national evaluator.

In addition, the following safeguards are routinely employed by RTI to carry out confidentiality assurances:

Access to sample selection data is limited to those who have direct responsibility for providing the sample. At the conclusion of the research, these data are destroyed.

Identifying information is maintained on separate forms, which are linked to the interviews only by a sample identification number. These forms are separated from the interviews as soon as possible.

Access to the file linking sample identification numbers with the respondents’ identification and contact information is limited to a small number of individuals who have a need to know this information.

Access to the hard copy documents is strictly limited. Documents are stored in locked files and cabinets. Discarded material is shredded.

Computer data files are protected with passwords and access is limited to specific users.

11. Justification for Sensitive Questions

Only the student and teacher surveys contain questions that could be deemed sensitive; the data collection instruments for other participants do not contain sensitive questions. Some of the questions on the Student Survey could be deemed sensitive, most notably those related to violent behavior or victimization. Voluntary student self-reports are needed because administrative records do not contain the information needed to assess the impact of this intervention on these sensitive behaviors. Specifically, administrative records (either school or police records) will miss many incidents, particularly less serious but potentially damaging violent incidents (e.g., bullying). Also, accurate records of incidents of behaviors outside school cannot be obtained from administrative records.

Many of the questions that could be considered sensitive are drawn from or are consistent with widely used national surveys administered to similar populations in school settings. Questions addressing violent behavior and victimization are crucial to assessing the impact of the intervention.

Students and teachers will be advised during the informed consent process of the voluntary nature of participation and their right to refuse to answer any question. They will also be assured that the responses are completely confidential. Moreover, the development and conduct of the evaluation, including the administration of the student survey, are overseen by one of RTI’s three Institutional Review Boards, each of which has HHS Multiple Project Assurance.

12. Estimates of Hour Burden

Exhibit 4 provides our estimate of time burden. Data collection among the sixth graders will take place in the 2006-2007 school year. Students from the 6th grade will be followed into the 7th grade, with follow-up data collection occurring in the spring of 2008. A refreshed census of 8th graders will be surveyed in the 2008-2009 school year.

The estimated burdens for Individual Student Records and School Policy Violations and Disciplinary Actions are upper-bound estimates because they assume that school staff will abstract information from records, whereas evaluation staff will actually do the abstraction wherever possible.

13. Estimate for the Total Annual Cost Burden to Respondents or Recordkeepers

There are no direct costs to individual participants other than their time to participate in the study. We have assumed that there are no financial costs to student respondents because the surveys will be completed during regular school hours; however, we recognize that participants will each miss approximately 45 minutes from their classes. To the extent possible we will administer the surveys during students’ study halls or other elective classes in order not to interfere with learning activities in core academic courses.

14. Estimates of Annualized Costs to Federal Government

The Department of Education conducted an open competition to select an organization to conduct this study. RTI was awarded a 5-year contract that is being funded incrementally. The estimated cost of the Federal government contract is $10,291,690, with an average annual cost of approximately $2,000,000.

Exhibit 4. Burden in Hours to Respondents

| | Grade 6 | Grade 7 | Grade 8 | Number of Respondents | Responses/ Respondent | Avg. Burden Hours/ Response | Total Burden Hours |
|---|---|---|---|---|---|---|---|
| Student Survey | | | | | | | |
| Fall 2006 | 9,720 | | | 9,720 | 1 | 0.75 | 7,290 |
| Spring 2007 | 9,720 | | | 9,720 | 1 | 0.75 | 7,290 |
| Fall 2007 | | | | 0 | 0 | 0 | 0 |
| Spring 2008 | | 7,760 | | 7,760 | 1 | 0.75 | 5,820 |
| Spring 2009 | | | 9,720 | 9,720 | 1 | 0.75 | 7,290 |
| Teacher Survey | | | | | | | |
| Spring 2007 | | | | 960 | 1 | 0.50 | 480 |
| Spring 2008 | | | | 960 | 1 | 0.50 | 480 |
| Spring 2009 | | | | 960 | 1 | 0.50 | 480 |
| Violence Prevention | | | | | | | |
| Spring 2006 | | | | 40 | 1 | 0.875 | 35 |
| Spring 2007 | | | | 40 | 1 | 0.875 | 35 |
| Spring 2008 | | | | 40 | 1 | 0.875 | 35 |
| Violence Prevention Staff Interview | | | | | | | |
| Spring 2006 | | | | 120 | 1 | 0.833 | 100 |
| Spring 2007 | | | | 120 | 1 | 0.833 | 100 |
| Spring 2008 | | | | 120 | 1 | 0.833 | 100 |
| School Management Team Interview | | | | | | | |
| Spring 2006 | | | | 120 | 1 | 0.833 | 100 |
| Spring 2007 | | | | 120 | 1 | 0.833 | 100 |
| Spring 2008 | | | | 120 | 1 | 0.833 | 100 |
| RiPP Implementation Records | | | | | | | |
| Fall 2007 | | | | 240 | 1 | 4 | 960 |
| Fall 2008 | | | | 240 | 1 | 4 | 960 |
| Fall 2009 | | | | 240 | 1 | 4 | 960 |
| School Policy Violations and Disciplinary Actions | | | | | | | |
| 2006-2007 school year | | | | 40 | 1 | 1.5 | 60 |
| 2007-2008 school year | | | | 40 | 1 | 1.5 | 60 |
| 2008-2009 school year | | | | 40 | 1 | 1.5 | 60 |
| Individual Student Records | | | | | | | |
| Spring 2007 | | | | 40 | 1 | 69.5 | 2,780 |
| Spring 2008 | | | | 40 | 1 | 139 | 5,560 |
| Spring 2009 | | | | 40 | 1 | 139 | 5,560 |
| TOTALS | | | | 41,600 | | | 46,795 |

15. Reasons for Program Change

The program change of 15,599 average annual hours of burden is because this is a new collection.
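As a cross-check on the burden arithmetic, the following minimal sketch recomputes the Exhibit 4 totals from the per-period rows. The row values are transcribed from Exhibit 4. Two points are assumptions rather than statements from this submission: the 0.833-hour entries are treated as exactly 5/6 hour, and the 15,599 annual figure is taken to be the three-year total divided by three and rounded up.

```python
import math

# (instrument, period, respondents, avg. burden hours per response)
# Transcribed from Exhibit 4; every row has one response per respondent.
# ASSUMPTION: 5/6 hour is the exact value behind the rounded 0.833 entries.
EXHIBIT_4 = [
    ("Student Survey", "Fall 2006", 9720, 0.75),
    ("Student Survey", "Spring 2007", 9720, 0.75),
    ("Student Survey", "Fall 2007", 0, 0.0),
    ("Student Survey", "Spring 2008", 7760, 0.75),
    ("Student Survey", "Spring 2009", 9720, 0.75),
    ("Teacher Survey", "Spring 2007", 960, 0.50),
    ("Teacher Survey", "Spring 2008", 960, 0.50),
    ("Teacher Survey", "Spring 2009", 960, 0.50),
    ("Violence Prevention", "Spring 2006", 40, 0.875),
    ("Violence Prevention", "Spring 2007", 40, 0.875),
    ("Violence Prevention", "Spring 2008", 40, 0.875),
    ("Violence Prevention Staff Interview", "Spring 2006", 120, 5 / 6),
    ("Violence Prevention Staff Interview", "Spring 2007", 120, 5 / 6),
    ("Violence Prevention Staff Interview", "Spring 2008", 120, 5 / 6),
    ("School Management Team Interview", "Spring 2006", 120, 5 / 6),
    ("School Management Team Interview", "Spring 2007", 120, 5 / 6),
    ("School Management Team Interview", "Spring 2008", 120, 5 / 6),
    ("RiPP Implementation Records", "Fall 2007", 240, 4.0),
    ("RiPP Implementation Records", "Fall 2008", 240, 4.0),
    ("RiPP Implementation Records", "Fall 2009", 240, 4.0),
    ("School Policy Violations", "2006-2007", 40, 1.5),
    ("School Policy Violations", "2007-2008", 40, 1.5),
    ("School Policy Violations", "2008-2009", 40, 1.5),
    ("Individual Student Records", "Spring 2007", 40, 69.5),
    ("Individual Student Records", "Spring 2008", 40, 139.0),
    ("Individual Student Records", "Spring 2009", 40, 139.0),
]


def burden_totals(rows, years=3):
    """Return (total respondents, total burden hours, average annual hours)."""
    respondents = sum(n for _, _, n, _ in rows)
    hours = round(sum(n * h for _, _, n, h in rows))
    # ASSUMPTION: the annual figure is the total spread over `years`, rounded up.
    return respondents, hours, math.ceil(hours / years)


total_respondents, total_hours, annual_hours = burden_totals(EXHIBIT_4)
print(total_respondents, total_hours, annual_hours)
```

Under these assumptions the sketch reproduces the 41,600 respondents and 46,795 hours shown in the TOTALS row, and the 15,599 average annual hours cited above.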

16. Plans for Tabulation and Publication and Project Time Schedule

The RTI evaluation team will conduct data analyses that address the key impact and process evaluation questions. In this section we first provide sample templates of tables we will use to report data. Next we provide an overview of our plans for analysis of outcomes, mediators, and process measures. Then we describe our planned statistical models. Last, we present the project schedule and our plans for reporting and publications. Technical details on sampling and power are provided in Section B.

Tabulation Plans

Exhibit 5 shows a sample template of a table that will provide means and standard errors by treatment conditions for student outcomes of primary interest. Information is given for the spring data collection period, following intervention activities. A comparison of treatment and control means for each outcome is provided and a standardized effect size estimate is given.

Exhibit 5. Effect of Violence Prevention Intervention on Violent Behaviors

| Outcomes | Treatment Schools: N | Treatment Schools: Mean (SE) Spring | Control Schools: N | Control Schools: Mean (SE) Spring | Impact | Effect Size |
|---|---|---|---|---|---|---|
| Student reports of their own behavior | 20 | | 20 | | | |
| Student reports of others’ behaviors toward them | 20 | | 20 | | | |

* Difference is statistically significant at the .05 level

Effect size = Impact expressed in standard deviation units

Sources: Student Survey

Exhibit 6 shows a sample template of a table that will provide mean percentages and standard errors by schools. A comparison of treatment and control percentages for each outcome is provided, and a standardized effect size estimate is given.

Exhibit 6. Effect of Violence Prevention Intervention on Student Infractions (Binary Outcomes)

| Outcome | Mean Percentages: Treatment Schools (n = 20), % | Mean Percentages: Control Schools, % | Model Results: Impact | Model Results: Effect Size |
|---|---|---|---|---|
| Bullying | | | | |
| Robbery | | | | |
| Physical attack or fight | | | | |
| Threat of physical attack | | | | |
| Theft/larceny | | | | |
| Possession of firearm or explosive device | | | | |
| Possession of knife or sharp object | | | | |

* Difference is statistically significant at the .05 level

Notes: Odds ratio = odds of occurrence in the treatment group divided by odds of occurrence in the control group.

Sources: School Policy Violations and Disciplinary Actions
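The two summary measures defined in the notes to Exhibits 5 and 6 can be illustrated with a short sketch. The function names and the input values are hypothetical. The choice of standard deviation in the effect size (for example, pooled or control-group) is not specified in this submission and is left to the caller.

```python
def odds_ratio(p_treatment, p_control):
    """Odds of occurrence in the treatment group divided by odds in the control group."""
    odds_t = p_treatment / (1.0 - p_treatment)
    odds_c = p_control / (1.0 - p_control)
    return odds_t / odds_c


def standardized_effect_size(impact, outcome_sd):
    """Impact expressed in standard deviation units.

    ASSUMPTION: which standard deviation to use is the analyst's choice;
    the submission does not specify it.
    """
    return impact / outcome_sd


# Hypothetical inputs, for illustration only (not study results).
example_or = odds_ratio(p_treatment=0.10, p_control=0.20)
example_es = standardized_effect_size(impact=-0.15, outcome_sd=0.5)
print(example_or, example_es)
```

An odds ratio below 1 indicates that the infraction is less likely in treatment schools than in control schools; a negative effect size indicates a lower mean on the outcome in treatment schools.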

Impact Analyses for the School-Based Violence Prevention Program

The impact analysis is based on a group-randomized control experiment. We will evaluate program impact using multiple regression models that predict each outcome measure (e.g., aggression, victimization) as a function of condition (treatment versus control), and relevant covariates (e.g., baseline measures, demographic characteristics). The inclusion of covariates related to the outcomes but unrelated to program exposure will improve the precision of the test of the program effect by reducing unwanted variation. Because students will be nested within schools and schools nested within condition, we will estimate these effects using multilevel regression equations and software. Exhibit 7 shows the planned data collection points.

Exhibit 7. Proposed Data Collection Points [a]

| Data Collection Year | 6th grade | 7th grade | 8th grade |
|---|---|---|---|
| Year 1 | Census of 6th grade, fall & spring | | |
| Year 2 | | 7th grade stayers & ITT high-risk [b], spring | |
| Year 3 | | | Census of 8th grade & ITT high-risk [b], spring |

[a] Data will be collected at both treatment and control schools.

[b] Intent-to-treat sample of students at high risk for aggressive and violent behaviors.

Question 1: Are there decreases in violence and aggression in schools that implement the violence prevention program compared to schools that do not implement it?

These analyses address the primary issue of program impact and will include an overall impact evaluation after three years of implementation as well as an interim evaluation of the effects of the program after one year of implementation. The overall impact analysis (i.e., impact of three years of program implementation) will be based on data collected from a census of students in the eighth grade class in the spring of 2009 in treatment and control schools. An interim analysis of program impact after a single year of program implementation will be based on a census of data collected from students in the sixth grade in spring of 2007 in treatment and control schools. The difference in follow-up between the sixth graders in control and treatment schools will then be compared.

Question 2: What is the impact of the violence prevention program over time on students who are at an increased risk for violence and aggression?

Similar to the analysis of main effects, analysis of program impacts on the high-risk group will occur in implementation years one, two, and three. First, using information from the aggression measures in the 6th grade baseline survey in year one, we will identify a high-risk sample in both control and treatment schools before the program begins. Impacts will be estimated for this intent-to-treat subgroup for all three years of the data collection. Impacts from the first year will appear in the interim report and the longer term effects from years two and three will appear in the final report.

Question 3: What are the outcomes of the violence prevention program on students with varying years of program exposure?

The current study design does not permit an experimental examination of dosage and such a study would be cost prohibitive. But the current design will permit a correlational analysis of dosage using data from treatment students with varying years of exposure to the program and comparing the data to students in the control schools. This analysis will take place after the third year of program implementation when there will be varying degrees of exposure to the program among the eighth-grade students in the treatment group.

Mediation Analyses

We will conduct a series of mediation analyses (Baron and Kenny, 1986; MacKinnon, 2002; MacKinnon and Dwyer, 1993) to identify mediators of program effects. Our mediation analysis will evaluate the indirect effects of the program on student outcomes by positing two intermediate variables in the analytic causal pathway: student attitudes towards violence and aggression, and student strategies for coping with anger. By examining these intermediate factors that are hypothetically related to the outcomes of interest, our mediation analysis will begin to address important questions about how the program achieves its effects (MacKinnon and Dwyer, 1993). We will build a model that examines how participating in the intervention affects these mediators and how they in turn relate to the student outcomes. Path models, estimated with Mplus statistical software, will be used to conduct all mediation analyses. Mediated effects will be estimated as the product of two regression coefficients obtained from the path model: (1) the coefficient relating treatment group to the mediators at follow-up and (2) the coefficient relating the mediator at follow-up to the outcome at follow-up. This estimate, together with an estimate of the standard error of this effect (Sobel, 1982), provides a z-score test of each mediated effect (MacKinnon et al., 2002).
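The product-of-coefficients test described above can be sketched as follows. This is a minimal illustration using the first-order Sobel standard error; the function name and the coefficient values are hypothetical, and in the study the coefficients and their standard errors would come from the path models rather than being entered by hand.

```python
import math


def sobel_test(a, se_a, b, se_b):
    """First-order Sobel test of a mediated effect.

    a, se_a: coefficient (and standard error) relating treatment group to the mediator.
    b, se_b: coefficient (and standard error) relating the mediator to the outcome.
    Returns (mediated effect, standard error, z-score, two-sided p-value).
    """
    mediated = a * b
    se = math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    z = mediated / se
    # Two-sided p-value under the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return mediated, se, z, p


# Hypothetical coefficients, for illustration only (not study results).
mediated, se, z, p = sobel_test(a=-0.30, se_a=0.10, b=0.50, se_b=0.10)
print(mediated, se, z, p)
```

The test is applied once per mediator, so with the two mediators posited above it would be run separately for attitudes towards violence and for strategies for coping with anger.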

Process Evaluation

The purpose of the process evaluation will be twofold. First, it will be used to determine if the violence prevention program was implemented as designed and intended. Second, it will be used to describe any violence prevention activities taking place in control schools or treatment schools (other than the program being evaluated), and to determine the extent to which these activities are similar to the violence prevention program used in the treatment condition. We will determine the fidelity of the violence prevention program in treatment schools by analyzing data from interviews with violence prevention staff, implementation records, and classroom observations. We will determine the nature of any additional violence-prevention activities in the treatment schools (and determine the treatment contrast between treatment and control schools) also through these measures. In addition, we will examine treatment contrast through the responses of the violence coordinator interviews as well as several of the responses from surveys of teachers.

Statistical Models

The primary research questions will be addressed using statistical models that properly account for the complexities of the study design. Both nested cross-sectional and nested longitudinal models will be employed. To address question one, student population outcomes will be assessed with a cross-sectional model. For this class of model, the analytical unit is the school, not individuals within the school. For question two, outcomes for high-risk students will be assessed with a longitudinal experimental design with repeated measures taken on students nested in schools and schools nested within experimental conditions. For question three, a dosage analysis will compare results from students in treatment schools who receive varying years of program exposure to students at control schools. The standard errors estimated and significance tests conducted will account for the fact that schools (not students) are the units of random assignment.

Question 1: Are there decreases in violence and aggression in schools that implement the violence prevention program compared to schools that do not implement it?

The nested cross-sectional model is appropriate to assess the primary research question as well as for interim analyses that evaluate short-term effects of the program. We assume that a census will provide approximately 243 students per school in each of grades six and eight. We assume balance across experimental conditions and express the model as follows:

$$Y_{i:k:l} = \mu + C_l + G_{k:l} + e_{i:k:l} \tag{1}$$

In this model, $Y_{i:k:l}$ represents the response of the $i$th person nested in the $k$th group in the $l$th condition. The four terms on the right-hand side of the equation represent the grand mean ($\mu$); the effect of the $l$th condition ($C_l$); the realized value of the $k$th group ($G_{k:l}$); and the residual error ($e_{i:k:l}$), to which any difference between the predicted value and the observed value is allocated. If any of the random components are excluded, the model will be misspecified. The inclusion of covariates can improve the test of the intervention effect (i.e., increase the precision of the model) to the extent that the covariates explain a portion of the residual variation.
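To illustrate why the school, not the student, is the analytic unit in the nested cross-sectional model, the following sketch simulates data from the model and tests the condition effect on school means. It is a simplified, covariate-free stand-in for the multilevel regression described in this section, not the planned analysis. The 20 schools per condition and 243 students per school follow the figures in this submission; the effect size, variance components, grand mean of zero, and function names are hypothetical.

```python
import math
import random
import statistics


def simulate_school_means(n_schools_per_arm=20, n_students=243, effect=-0.3,
                          sd_school=0.2, sd_student=1.0, seed=0):
    """Simulate Y = mu + C_l + G_{k:l} + e_{i:k:l} with mu = 0; return school means by condition.

    ASSUMPTION: effect, sd_school, and sd_student are illustrative values only.
    """
    rng = random.Random(seed)
    means = {"control": [], "treatment": []}
    for condition, c_l in (("control", 0.0), ("treatment", effect)):
        for _ in range(n_schools_per_arm):
            g_kl = rng.gauss(0.0, sd_school)  # random school component
            scores = [c_l + g_kl + rng.gauss(0.0, sd_student)
                      for _ in range(n_students)]
            means[condition].append(statistics.fmean(scores))
    return means


def school_level_test(means):
    """Two-sample t statistic on school means; degrees of freedom count schools, not students."""
    t_means, c_means = means["treatment"], means["control"]
    impact = statistics.fmean(t_means) - statistics.fmean(c_means)
    n_t, n_c = len(t_means), len(c_means)
    pooled_var = ((n_t - 1) * statistics.variance(t_means)
                  + (n_c - 1) * statistics.variance(c_means)) / (n_t + n_c - 2)
    se = math.sqrt(pooled_var * (1.0 / n_t + 1.0 / n_c))
    return impact, se, impact / se, n_t + n_c - 2


means = simulate_school_means()
impact, se, t_stat, df = school_level_test(means)
print(impact, se, t_stat, df)
```

With 40 schools the test has 38 degrees of freedom, even though 9,720 simulated students contribute data. Treating students as independent observations would understate the standard error whenever the school component has nonzero variance.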

Question 2: What is the impact of the violence prevention program over time on students who are at an increased risk for violence and aggression?

Repeated measures nested cohort models will be employed to address the impact of the program over time on a high-risk sample of students. Estimation of treatment effects will be accomplished with a model that includes repeated measures on an intent-to-treat sample of students within groups and on groups within conditions. With additional levels of nesting, this model will have five components of random variation and can be expressed as:

$$Y_{ij:k:l} = \mu + C_l + T_j + TC_{jl} + \mathbf{M}_{i:k:l} + \mathbf{G}_{k:l} + \mathbf{TG}_{jk:l} + \mathbf{MT}_{ij:k:l} + \mathbf{e}_{ij:k:l} \tag{2}$$

In this model, $Y_{ij:k:l}$ represents the response of the $i$th person measured at time $j$, nested in the $k$th group of the $l$th condition. The first four terms (normal text) represent the fixed-effects portion of the model, and the last five terms (bold text) represent the random-effects portion of the model. The fixed effects include: the grand mean ($\mu$); the effect of the $l$th condition ($C_l$); the effect of the $j$th time ($T_j$); and the joint effect of the $j$th time and the $l$th condition ($TC_{jl}$). The random components include: the realized value of the $i$th person ($\mathbf{M}_{i:k:l}$); the realized value of the $k$th group ($\mathbf{G}_{k:l}$); the realized value of the $k$th group at time $j$ ($\mathbf{TG}_{jk:l}$); and the realized value of the combination of the $i$th member at time $j$ ($\mathbf{MT}_{ij:k:l}$). Any difference between this predicted value and the observed value is allocated to residual error ($\mathbf{e}_{ij:k:l}$). If any of the random components are excluded, the model will be misspecified.

The inclusion of covariates can improve the test of the intervention effect (i.e., increase the precision of the model). To the degree that the covariates represent variables that are related to the endpoint and unevenly distributed across conditions, these variables would confound the observed value of the intervention effect if ignored. In this model, the null hypothesis is no difference across the adjusted condition means over time. Any variation associated with the intervention effect will be captured in the adjusted time by condition means ($TC_{jl}$) and evaluated against the variation among the adjusted time by group means ($\mathbf{TG}_{jk:l}$). Once again, the standard errors estimated and significance tests conducted will account for the fact that schools (not students) are the units of random assignment.

Project Schedule

Data collection will begin in 2006 and continue through 2009, as noted in Exhibit 8.

Exhibit 8. Project Schedule

| Activity | 2006-2007 | 2007-2008 | 2008-2009 |
|---|---|---|---|
| Consent letters sent to parents [1] | August – September; April – May | | August – September; April – May |
| Student Survey | August – September | | |
| Teacher Surveys | April – May | April – May | April – May |
| Violence Prevention Coordinator Interview | January – April | January – April | January – April |
| Violence Prevention Staff Interviews | January – April | January – April | January – April |
| School Management Team Interviews | January – April | January – April | January – April |
| RiPP Implementation Records | September – June | September – June | September – June |
| Classroom Observation | January – April | January – April | January – April |
| School Policy Violations and Disciplinary Data | September – June | September – June | September 2008 – November 2009 |
| Individual Student Records | May 2007 – November 2007 | May 2008 – November 2008 | May 2009 – November 2009 |

Reports First: December 2009

[1] Consent letters in spring 2007 and spring 2009 will be needed only for students who are new to the study. The spring 2008 survey will include only students who participated in the fall 2006 survey; no additional consent letters will be needed.

Reporting and Publications

There will be two reports of evaluation results as shown in Exhibit 8 above. These reports will present findings from the analyses described above, including descriptive analyses of violence prevention in study schools and implementation of the violence prevention program in treatment schools; changes in outcomes and differential change in treatment and control schools; whether changes in outcomes are mediated or moderated; and the cost of implementing the violence prevention program. Special reports will be prepared as needed/requested.

17. Request for Approval to Not Display OMB Approval Expiration Date

The present submission does not request such approval. The expiration date will be displayed along with the OMB approval number.

18. Exceptions to Certification for Paperwork Reduction Act Submissions

No exceptions to the certification statement are requested or required.

REFERENCES

Bureau of Labor Statistics, U.S. Department of Labor, Occupational Outlook Handbook, 2006-07 Edition, Teachers—Preschool, Kindergarten, Elementary, Middle, and Secondary, on the Internet at http://www.bls.gov/oco/ocos069.htm (visited February 14, 2006).

Cheong, J., MacKinnon, D.P., & Khoo, S.-T. (2003). Investigation of mediational processes using parallel process latent growth curve modeling. Structural Equation Modeling, 10(2), 238-262.

Farrell, A. D., Meyer, A. L., Sullivan, T. N., & Kung, E. M. (2003). Evaluation of the Responding in Peaceful and Positive Ways (RIPP) Seventh Grade Violence Prevention Curriculum. Journal of Child and Family Studies, 12(1), 101-120.

Farrell, A. D., Valois, R. F., Meyer, A. L., & Tidwell, R. P. (2003). Impact of the RIPP violence prevention program on rural middle school students. The Journal of Primary Prevention, 24(2), 143-167.

Farrell, A. D., Meyer, A. L., & White, K. S. (2001). Evaluation of Responding in Peaceful and Positive Ways (RIPP): A School-Based Prevention Program for Reducing Violence Among Urban Adolescents. Journal of Clinical Child Psychology, 30(4), 451-463.

Farrell, A. D., Valois, R. F., & Meyer, A. L. (2002). Evaluation of the RIPP-6 Violence Prevention Program at a Rural Middle School. American Journal of Health Education, 33(3), 167-172.

Gold, M. R., Siegel, J. E., Russell, L. B., & Weinstein, M. C. (Eds.). (1996). Cost-Effectiveness in Health and Medicine. New York, NY: Oxford University Press.

Indicators of School Crime and Safety (2003). National Center for Education Statistics; Bureau of Justice Statistics, Department of Justice. “School related” is defined as occurring at, or on the way to or from school.

Janega, J. B., Murray, D. M., Varnell, S. P., Blitstein, J. L., Birnbaum, A. S., & Lytle, L. A. (2004). Assessing the most powerful analysis method for schools intervention studies with alcohol, tobacco, and other drug outcomes. Addictive Behaviors, 29(3), 595-606.

Murray, D. M. (1998). Design and Analysis of Group Randomized Trials. New York: Oxford University Press.

Polsky, D., Glick, H. A., Willke, R., & Dunmeyer, S. (1997). Confidence intervals for cost-effectiveness ratios: A comparison of four methods. Health Economics, 6(3), 243-252.

Shadish, W.R., Cook, T.D., & Campbell, D.T. (2002). Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Boston, MA: Houghton Mifflin Company.

Snedecor, G. W., & Cochran, W. G. (1989). Statistical Methods (8th ed.). Ames, IA: The Iowa State University Press.

Varnell, S., Murray, D. M., Janega, J. B., & Blitstein, J. L. (2004). Design and analysis of group-randomized trials: A review of recent practices. American Journal of Public Health, 94(3), 393-399.

Zarkin, G. A., Dunlap, L. J., & Homsi, G. (In press). The substance abuse services cost analysis program (SASCAP): A new method for estimating drug treatment services costs. Evaluation and Program Planning.

Exhibit 12: Statute Authorizing the Evaluation

SEC. 4121. FEDERAL ACTIVITIES.

(a) PROGRAM AUTHORIZED- From funds made available to carry out this subpart under section 4003(2), the Secretary, in consultation with the Secretary of Health and Human Services, the Director of the Office of National Drug Control Policy, and the Attorney General, shall carry out programs to prevent the illegal use of drugs and violence among, and promote safety and discipline for, students. The Secretary shall carry out such programs directly, or through grants, contracts, or cooperative agreements with public and private entities and individuals, or through agreements with other Federal agencies, and shall coordinate such programs with other appropriate Federal activities. Such programs may include —

(1) the development and demonstration of innovative strategies for the training of school personnel, parents, and members of the community for drug and violence prevention activities based on State and local needs;

(2) the development, demonstration, scientifically based evaluation, and dissemination of innovative and high quality drug and violence prevention programs and activities, based on State and local needs, which may include —

(A) alternative education models, either established within a school or separate and apart from an existing school, that are designed to promote drug and violence prevention, reduce disruptive behavior, reduce the need for repeat suspensions and expulsions, enable students to meet challenging State academic standards, and enable students to return to the regular classroom as soon as possible;

(B) community service and service-learning projects, designed to rebuild safe and healthy neighborhoods and increase students' sense of individual responsibility;

(C) video-based projects developed by noncommercial telecommunications entities that provide young people with models for conflict resolution and responsible decisionmaking; and

(D) child abuse education and prevention programs for elementary and secondary students;

(3) the provision of information on drug abuse education and prevention to the Secretary of Health and Human Services for dissemination;

(4) the provision of information on violence prevention and education and school safety to the Department of Justice for dissemination;

(5) technical assistance to chief executive officers, State agencies, local educational agencies, and other recipients of funding under this part to build capacity to develop and implement high-quality, effective drug and violence prevention programs consistent with the principles of effectiveness in section 4115(a);

(6) assistance to school systems that have particularly severe drug and violence problems, including hiring drug prevention and school safety coordinators, or assistance to support appropriate response efforts to crisis situations;

(7) the development of education and training programs, curricula, instructional materials, and professional training and development for preventing and reducing the incidence of crimes and conflicts motivated by hate in localities most directly affected by hate crimes;

(8) activities in communities designated as empowerment zones or enterprise communities that will connect schools to community-wide efforts to reduce drug and violence problems; and

(9) other activities in accordance with the purpose of this part, based on State and local needs.

(b) PEER REVIEW- The Secretary shall use a peer review process in reviewing applications for funds under this section.

| File Type | application/msword |

| File Title | PAPERWORK REDUCTION ACT SUBMISSION |

| Author | RTI Staff |

| Last Modified By | #Administrator |

| File Modified | 2009-07-17 |

| File Created | 2009-07-17 |
