FACES 2009 Supporting Statement A FINAL Revised 6.1.09



OMB: 0970-0151

Head Start Family and Child Experiences Survey (FACES 2009)

Supporting Statement Part A for OMB Approval

March 12, 2009

A. JUSTIFICATION

The Administration for Children and Families (ACF) of the Department of Health and Human Services (DHHS) is requesting Office of Management and Budget (OMB) clearance for the methodology and instruments to be used to extend a currently approved data collection program, the Head Start Family and Child Experiences Survey (FACES), to a new sample of Head Start programs, and the families and children who will be entering Head Start for the first time in fall 2009. The design of FACES 2009 and the procedures that are used to select the sample and conduct baseline and three waves of follow-up data collection are for the most part the same as those used in FACES 2006 (OMB number 0970-0151). This section provides supporting statements for each of the points outlined in Part A of the Office of Management and Budget (OMB) guidelines for the collection of information in FACES 2009. Two additional requests for clearance also support FACES 2009 activities: (1) a general information clearance for the purpose of gathering information that will be used to develop a sampling frame of Head Start centers in each program and to facilitate the selection of the center, class, and child samples for FACES 2009 (OMB Number 0970-0356) and (2) a general pretesting clearance for piloting activities of new procedures for identifying children and for obtaining parent consents and to pilot a few assessment instruments that are new to FACES (OMB Number 0970-0355). The current submission requests clearance for the main FACES 2009 study, including sampling and recruitment of centers and children and families, data collection instruments and procedures, data analyses, and the reporting of findings.

A.1. Circumstances Making the Collection of Information Necessary

Overview of Request

ACF has contracted with Mathematica Policy Research, Inc. (MPR) and its subcontractors, Juarez and Associates and The Educational Testing Service, to collect information on Head Start Performance Measures. FACES 2009, like the four cohorts that preceded it, will collect information from a national probability sample of Head Start children, their parents, teachers, and program officials, to ascertain what progress Head Start has made in meeting program performance goals.

There are two legislative bases for the FACES data collection: the Government Performance and Results Act of 1993 (P.L. 103-62), requiring that the Office of Head Start move expeditiously toward development and testing of Head Start Performance Measures, and the Improving Head Start for School Readiness Act of 2007 (P.L. 110-134), outlining requirements on monitoring, research, and standards for Head Start (see Appendix A). The extension of FACES will allow the Office of Head Start to continue using the system of program performance measures for accountability and program improvement goals mandated by Congress as well as to contribute information that may assist with new aspects of the Improving Head Start for School Readiness Act, such as defining what constitutes a research-based curriculum and documenting the status of limited-English-proficient children and families in Head Start.

Study Context and Rationale

Since its founding, Head Start has served as the nation’s premier federally funded early childhood intervention. Focusing on children in the years before formal schooling begins—often children from low-income families with multiple risks—Head Start has served as a natural and national laboratory for a wide range of basic, prevention, early intervention, and program evaluation research (see Love et al. 2006). In 1995, ACF brought together a wide variety of expert advisers and produced a report recommending specific performance measures in the areas of health, education, partnerships with families, and program management to be included in the Program Performance Measures system (ACYF 1995). The resulting Head Start Performance Measures provide criteria for assessing how well the Head Start program as a whole is fulfilling its primary mission of increasing the school readiness of young children from low-income families nationwide. The measures are also designed to assess how well the program is meeting the related objective of helping low-income families attain their educational, economic, and child-rearing goals.

FACES collects program performance data on successive nationally representative samples of children and families served by Head Start. FACES provides the Office of Head Start, the federal government, local programs, and the public with valid and reliable national information about the skills and abilities of Head Start children, how they compare to normative samples of American children, and their readiness for and subsequent performance in school. FACES data have also been useful in responding to additional program requirements; they have been widely disseminated within the Head Start community to assist with continuous efforts toward program improvement and have guided training and technical assistance efforts.

Successive FACES samples provide ongoing descriptive pictures of the stability and change of the population served, staff qualifications, observed classroom practices and quality, and child and family outcomes. FACES 2009, alone and in combination with earlier FACES cohorts, will provide updated information to document status and change in a number of key areas including (1) demographic characteristics of families and children enrolled in Head Start; (2) goals, strengths, and needs of participant families; (3) activities and experiences of families while their child is enrolled in Head Start; (4) Head Start programs’ approaches related to family involvement and support, and barriers to such involvement; (5) responsibilities, training, and credentials of staff; (6) quality of observed classroom practice; (7) use of curricula and assessment and provision of program support through training, mentoring, and supervision; and (8) child and family outcomes as conceptualized by the Head Start Performance Measures.

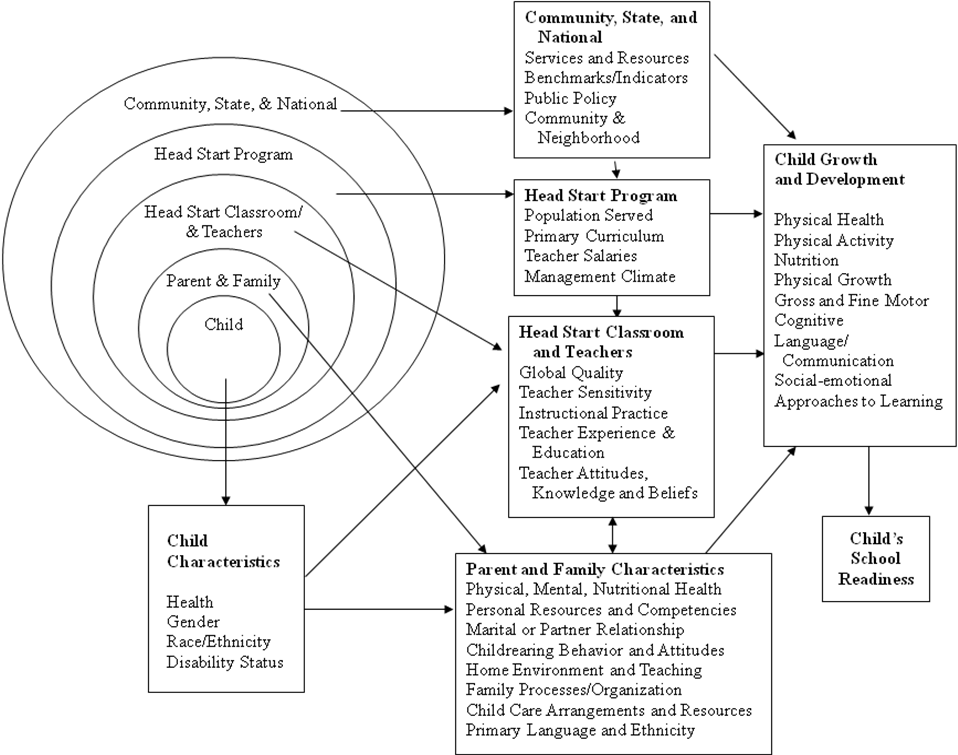

The conceptual model for FACES 2009 builds on the conceptual framework the Head Start program developed to guide reporting on program performance. The performance measures framework unifies and organizes the Program Performance Measures to display the linkages between Head Start program and classroom process measures and the outcome measures for Head Start children and families. The framework indicates that the ultimate goal of Head Start is to promote the school readiness of children as related to children’s cognitive, social-emotional, and physical development. Each level of the framework, including families, programs, and communities, supports and contributes to the successful realization of the next level. FACES is designed to measure all tiers of this framework. The conceptual model that guides the design of FACES 2009 illustrates the complex interrelationships that help to shape the developmental trajectories of children in Head Start (see Exhibit A.1). The child’s place is primary, with the focus on fostering his or her progress toward school readiness. The surrounding contexts of parent and family, Head Start, and state and national policies affect the child’s life. Further, the Head Start experience is designed to promote immediate short-term and long-term goals for children and families. Thus, measurement of these child and family outcomes both during the program year and through follow-up at the end of kindergarten allows fuller understanding of how well the program prepares children and their parents for participation in school. In addition to supplying current data on several aspects of program performance, FACES 2009 will furnish information needed to measure how the program is currently performing, as well as the extent to which the Head Start population has changed since 1997 and whether program performance has improved, remained constant, or deteriorated over time.

A.2. Purpose and Use of the Information Collection

The design of FACES 2009, including the sampling plan, instruments, procedures, and data analysis plan, draws heavily from the design of FACES 2006; few changes in approach or instruments are proposed. Like the four earlier cohorts, FACES 2009 uses a multi-stage sample design with four stages: (1) Head Start programs; (2) centers within programs; (3) classrooms within centers; and (4) children within classrooms. The FACES 2009 sample will include about 3,400 3- and 4-year-olds and their families selected from about 120 centers in 60 Head Start programs. Children eligible for sampling will be those entering Head Start for the first time in fall 2009. Data on programs, classrooms, and children and families for FACES 2009 will be collected by MPR staff under contract with ACF. Major study activities will include:

Selecting a nationally representative sample of Head Start programs and gathering information from those programs to develop a center sampling frame

Selecting a nationally representative sample of Head Start centers and recruiting them to participate in the study, and then sampling classrooms within those centers

Sampling children and recruiting families of Head Start enrollees to participate in the study

Collecting data from children and families, Head Start staff, Head Start classrooms, and kindergarten teachers

Analyzing and reporting findings
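The four-stage design described above (programs, centers within programs, classrooms within centers, children within classrooms) can be illustrated with a minimal sketch. It assumes simple random sampling at every stage and a made-up sampling frame; the actual selection procedures are those described in section B.1 and may differ (for example, in how selection probabilities are set). The function names, the toy frame, and the per-stage counts are hypothetical.

```python
import random


def build_toy_frame(n_programs=5, n_centers=4, n_classes=3, n_children=15):
    """Build a hypothetical frame: program -> center -> classroom -> child IDs."""
    return {
        f"P{p}": {
            f"P{p}-C{c}": {
                f"P{p}-C{c}-R{r}": [f"P{p}-C{c}-R{r}-K{k}" for k in range(n_children)]
                for r in range(n_classes)
            }
            for c in range(n_centers)
        }
        for p in range(n_programs)
    }


def select_multistage_sample(frame, n_programs, centers_per_program,
                             classes_per_center, children_per_class, seed=2009):
    """Draw a four-stage sample using simple random sampling at each stage.

    Simple random sampling is an assumption made for illustration only.
    """
    rng = random.Random(seed)
    sample = []
    for program in rng.sample(sorted(frame), min(n_programs, len(frame))):
        centers = frame[program]
        for center in rng.sample(sorted(centers),
                                 min(centers_per_program, len(centers))):
            classrooms = centers[center]
            for classroom in rng.sample(sorted(classrooms),
                                        min(classes_per_center, len(classrooms))):
                children = classrooms[classroom]
                for child in rng.sample(sorted(children),
                                        min(children_per_class, len(children))):
                    sample.append((program, center, classroom, child))
    return sample


frame = build_toy_frame()
# Two centers per program mirrors the 120 centers in 60 programs cited above;
# the classroom and child counts per unit are hypothetical.
sample = select_multistage_sample(frame, n_programs=3, centers_per_program=2,
                                  classes_per_center=2, children_per_class=10)
print(len(sample))  # 3 programs x 2 centers x 2 classrooms x 10 children = 120
```

Each sampled child carries the identifiers of the program, center, and classroom it was drawn from, which is the nesting later analyses rely on.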

EXHIBIT A.1

Conceptual Model for FACES 2009

Sampling procedures are described more fully in section B.1; all letters and forms used when recruiting and contacting programs, centers, teachers, and parents are included in Appendix B. Data collection procedures are described more fully in section B.3; an overview of the changes made to the FACES 2006 instruments for FACES 2009 can be found in Appendix C.

The study includes four waves of data collection—fall and spring of children’s first Head Start year, spring of the second Head Start year for children who were 3 years old at the time the sample was selected, and spring of the children’s kindergarten year (Table A.1). For the most part, data collection instruments and procedures are the same as those used in FACES 2006. Direct child assessments (to include teacher ratings) will document children’s cognition and general knowledge; language use and emerging literacy; social and emotional development; approaches to learning; and physical development. Interviews with parents will obtain data on the families Head Start serves as well as on the children’s experiences and development. Interviews with lead teachers about their educational background and instructional practices will examine classroom characteristics that relate to the quality of services. In the spring of the Head Start year we will conduct classroom observations of the quality of equipment, materials, teacher-child interactions, and instructional practices. No burden is associated with the observation, and thus it will not be discussed further in this package; see Appendix D for the components of the classroom observation. Interviews with program directors, center directors, and education coordinators will provide data to measure program characteristics that relate to service quality. For children who leave Head Start after their first year, but have not yet gone to kindergarten, parents of those “leavers” will receive a shorter, 15-minute interview in later waves.

TABLE A.1

INSTRUMENT COMPONENTS, TYPE OF ADMINISTRATION, AND PERIODICITY

| Instrument | Type of Administration | Fall 2009 | Spring 2010 | Spring 2011 | Spring 2012 |
| --- | --- | --- | --- | --- | --- |
| Parent Interview | CATI/CAPI | X | X | X | X |
| Child Assessment | CAPI | X | X | X | X |
| Head Start Teacher Interview | CAPI | X | X | X | |
| Head Start Teacher Child Rating | Web with paper | X | X | X | |
| Program Director Interview | Paper by phone | X | | | |
| Center Director Interview | Paper in-person | X | | | |
| Education Coordinator Interview | Paper in-person | X | | | |
| Head Start Classroom Observation | Paper and laptop entry | | X | X | |
| Kindergarten Teacher Questionnaire | Web with paper | | | X | X |
| Kindergarten Teacher Child Rating | Web with paper | | | X | X |

CATI = Computer-assisted telephone interviewing

CAPI = Computer-assisted personal interviewing

FACES 2009 data will be used to provide descriptions of the characteristics, experiences, and outcomes for children and families served by Head Start and to observe the relationships among family and program characteristics and outcomes. Findings from the study will help guide ACF, the Office of Head Start, national and regional training and technical assistance (T/TA) providers, and local programs in supporting policy development and program improvement at all levels. The data collected will provide information on whether programs are meeting the required Head Start Performance Measures. The main goals are to:

Describe the population served by Head Start, including demographics, strengths and needs of families over time, and information on children’s development over time.

Describe national Head Start, including variations in and patterns of services provided to families, training and credentials of staff, and quality of observed classroom practices.

Explore how change over time in child and family outcomes is related to specific aspects of the program and services received.

A.3. Use of Improved Information Technology and Burden Reduction

The proposed data collection will use a variety of information technologies to reduce burden. Parent interviews will be administered using computer-assisted telephone interviewing (CATI) and computer-assisted personal interviewing (CAPI). Child assessments will also be administered using CAPI in order to facilitate the routing and calculation of basal and ceiling rules, thereby lessening the amount of time required to administer the assessments and reducing burden on the child.

Head Start classroom teacher interviews at each wave will be administered using CAPI to reduce respondent burden by facilitating routing and skip patterns. Head Start teachers will be given the option of completing their Head Start teacher child report (TCR) forms on the web or on paper, and kindergarten teachers will be given this option for completing the TCR and teacher questionnaire.

The Sample Management System (SMS) also improves data efficiency, incorporating administrative entities such as teams, team members, and week of data collection to accommodate sample assignment and data collection management. The SMS manages the status of all data collection instruments and their relationships. For example, if a parent is interviewed and we subsequently learn that the child is ineligible, the status of the parent record must be revised. The SMS also deals with the dynamic sample member relationships including, but not limited to, children transferring classrooms, new teachers, and shifting living arrangements.

A.4. Efforts to Identify Duplication and Use of Similar Information

There is no evidence of other studies that offer comprehensive information on issues relevant to this project. Previous cohorts of FACES do not capture new program initiatives or changes to the population served by Head Start.

While many useful interview items have been identified from other studies and adopted for use in FACES, no comparable data have been collected on a nationally representative sample of Head Start children and families, particularly at program entry. No available studies combine the four sources of primary data (staff interviews, parent interviews, classroom observations, and child assessments) that will be collected in FACES 2009. Also, there is no other source for detailed child-level information that may be used to describe changes in the population served by Head Start over time. New instruments to enhance the information on children’s development (e.g., measure of executive functioning) match those planned for Head Start CARES (Classroom-based Approaches and Resources for Emotion and Social skill promotion) to promote linkage across ACF-funded studies, but FACES captures information for children attending the population of Head Start centers as opposed to Head Start CARES which is examining a randomized trial of interventions. The information from these sources and the linking of data across them make FACES a unique data set.

A.5. Impact on Small Businesses or Other Small Entities

No small businesses are impacted by the data collection in this project.

A.6. Consequences of Collecting the Information Less Frequently

FACES has been fielded at three-year intervals since 1997 as a descriptive study of the population served by Head Start and for program performance monitoring. The three-year interval provides ongoing information to examine continuity and change in Head Start programs and provides a longitudinal picture for a given cohort. The last cohort of FACES began in the fall of 2006; the proposed new cohort will begin in fall 2009, maintaining the three-year interval between cohorts for purposes of tracking change over time in the user population and in program performance. In the last three years, the national Office of Head Start has implemented a number of significant program initiatives, such as an increased focus on dual language learners and changes to the way training and technical assistance are provided to programs. In addition, because of welfare reform and high rates of immigration, rapid changes are occurring in the composition and life circumstances of the U.S. low-income population with young children. FACES 2009 will therefore capture information on Head Start program and children’s performance within the initial stage of new initiatives. A longer interval between successive cohorts of FACES would significantly impair the ability to detect and interpret changes.

A.7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

There are no special circumstances requiring deviation from these guidelines.

A.8. Comments in Response to the Federal Register Notice and Efforts to Consult Outside the Agency

Appendix E contains documentation from all Federal Register announcements, comments received, and responses to comments. The first Federal Register notice for the FACES 2009 data collection was published in the Federal Register, Volume 73 on December 4, 2008 (Reference number FR E8-28655). No public comments were received during the 60 days following that announcement.

A copy of the 60-day notice is included in Appendix E.

The second Federal Register notice for the FACES 2009 data collection was published in the Federal Register, Volume 74 on March 10, 2009 (Reference number FR E9-4926). A copy of the 30-day notice is included in Appendix E.

Many individuals and organizations, including the FACES Technical Work Group, have been contacted for advice on various aspects of the design of the study and data collection instruments. Their feedback was obtained through in-person meetings and telephone conversations. Members of the FACES Technical Work Group are listed in Table A.2.

TABLE A.2

MEMBERSHIP OF FACES TECHNICAL WORK GROUP

| Member | Affiliation |
| --- | --- |
| Brenda Jones Harden | University of Maryland, College Park |
| Thomas Schultz | Council of Chief State School Officers |
| Robert Pianta | University of Virginia |
| Gayle Cunningham | Jefferson County Committee for Economic Opportunity (JCCEO), Birmingham, AL |
| Doug Clements | University of Buffalo |
| Margaret Burchinal | University of North Carolina, Chapel Hill |
| Richard Lambert | University of North Carolina, Charlotte |
| Donna Bryant | University of North Carolina, Chapel Hill |
| Nilsa Velasquez | KIDCO Child Care Head Start, Miami, FL |
| Barbara Wasik | Johns Hopkins University |

A.9. Explanation of Any Payment or Gift to Respondents

Participation in FACES will place some burden on program staff, families, and children. We have attempted to minimize this burden through our data collection procedures and use of carefully constructed instruments and assessments. Nevertheless, we believe it is important to acknowledge the burden that participation entails at each wave of the study. To offset this burden, we have developed a nominal payment structure that is based on the one used effectively in FACES 2006 and attempts to acknowledge respondents’ efforts in a respectful way.

Head Start teachers will be asked to complete a Teacher Child Report (TCR) form for each sampled and consented FACES child in their classroom, using either a web or paper instrument. Teachers will receive $5 for each TCR they complete, and an additional $2 for each form that is completed on the web. In FACES 2006, 77 percent of Head Start teachers used the web instrument.

Study children’s kindergarten teachers will receive $25 for completing a teacher survey, and $5 for each TCR that they complete. An additional $2 will be provided for each TCR that they complete on the web. In FACES 2006, 57 percent of kindergarten teachers used the web instrument.
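The teacher payment rules above reduce to simple arithmetic, sketched below. The dollar amounts come from the text; the function and constant names are hypothetical and exist only for illustration.

```python
TCR_PAYMENT = 5                   # dollars per completed Teacher Child Report
WEB_BONUS = 2                     # additional dollars per TCR completed on the web
KINDERGARTEN_SURVEY_PAYMENT = 25  # dollars for the kindergarten teacher survey


def teacher_payment(tcrs_on_paper, tcrs_on_web, completed_survey=False):
    """Return the total payment in dollars for one teacher.

    Set completed_survey=True for a kindergarten teacher who also
    completed the teacher survey.
    """
    total = TCR_PAYMENT * (tcrs_on_paper + tcrs_on_web) + WEB_BONUS * tcrs_on_web
    if completed_survey:
        total += KINDERGARTEN_SURVEY_PAYMENT
    return total


# A Head Start teacher completing nine TCRs, all on the web.
print(teacher_payment(tcrs_on_paper=0, tcrs_on_web=9))  # 63
# A kindergarten teacher completing the survey and one TCR on the web.
print(teacher_payment(tcrs_on_paper=0, tcrs_on_web=1, completed_survey=True))  # 32
```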

Parents are asked to participate in a 45-minute interview during each data collection wave. We propose offering parents a $35 token of appreciation for participating in the parent interview at each wave. In addition, the children will participate in a 45-minute child assessment during each wave of data collection. We propose to offer participating families a children’s book worth approximately $10 each time their child participates.

The FACES onsite coordinators (OSC) are critical to the success of the FACES study. In the fall of 2009, the OSCs will support the study by providing classroom rosters, assisting in the consent process, and scheduling the data collection activities. We propose to provide the OSCs $500 for their assistance in this wave. In subsequent waves, spring 2010 and 2011, when the OSCs will provide updated enrollment information, schedule the data collection, and assist with locating efforts as necessary, we propose to provide an additional $250 each wave.

A.10. Assurance of Privacy Provided to Respondents

Respondents will receive information about privacy protections when they consent to participate in the study; this information will be repeated in the introduction at the start of each interview. All interviewers and data collectors will be knowledgeable about privacy procedures and will be prepared to describe them in detail or to answer any related questions raised by respondents.

We have crafted carefully worded consent forms (Appendix B) that explain in simple, direct language the steps we will take to protect the privacy of the information each sample member provides. Assurances of privacy will be given to each parent as he or she is recruited for the study and before each wave of data collection. Parents will be assured that their responses will not be shared with the Head Start program staff, their child’s primary caregiver, or the program, and that their responses will be reported only as part of aggregate statistics across all participating families. We will not share any information with any Head Start staff member. Moreover, no scale scores from direct child assessments will be reported back to programs. ACF will obtain signed, informed consent from all parents prior to their participation and obtain their consent to assess their children. The FACES fact sheet makes it clear that parents may withdraw their consent at any time.

To further ensure privacy, personal identifiers that could be used to link individuals with their responses will be removed from all completed questionnaires and stored under lock and key at the research team offices. Data on laptop computers will be secured through hard drive encryption as well as operation and survey system configuration and a password. Any computer files that contain this information also will be locked and password-protected. Interview and data management procedures that ensure the security of data and privacy of information will be a major part of training. Additionally, all MPR staff will be required to sign a confidentiality statement (see Appendix F).

We are obtaining an NIH certificate of confidentiality to help ensure the privacy of study participants. We are in the process of applying for the IRB clearance needed prior to applying for the certificate.

A.11. Justification for Sensitive Questions

To achieve the study’s primary goal of describing the characteristics of the children and families served by Head Start, we will be asking some sensitive questions, including some aimed at assessing feelings of depression, use of services for emotional or mental health problems, and use of services for personal problems such as family violence or substance abuse. The questions obtain important information for understanding family needs and for describing Head Start’s involvement in these aspects of individual and family functioning, and have been used in previous cohorts of FACES.

As part of the consent process, participating parents will be informed that sensitive questions will be asked, and they will be asked to sign a consent to participate form acknowledging that their participation is voluntary. All respondents will be informed that their identity will be kept private and that they do not have to answer questions that make them uncomfortable. All respondents will also be informed that none of the responses they provide will be reported back to their child’s teacher or program.

A.12. Estimates of Annualized Burden Hours and Costs

The proposed data collection does not impose a financial burden on respondents, nor will respondents incur any expense other than the time spent participating.

The estimated annual burden for study respondents—parents, children, and program staff—is listed in Table A.3. Response times are derived from previous cohorts of FACES. The total annual burden is expected to be 6,843 hours for all of the instruments.

Estimates of Annualized Costs

To compute the total estimated annual cost, the total burden hours were multiplied by the average hourly wage for each adult participant, according to the Bureau of Labor Statistics, Current Population Survey, 2008. We also used the CPS table from the first quarter of 2009 for education (detailed occupation earnings are only available annually). The results appear in Table A.3 below. For Head Start teachers, we used the mean salary for child care providers ($9.05 per hour). For program directors, we used the mean salary for full-time employees with a degree higher than a bachelor’s degree ($33.15 per hour). For parents, we used the mean salary for full-time employees over the age of 25 who are high school graduates with no college experience ($15.03 per hour). For other Head Start staff and kindergarten teachers, we used the mean salary for full-time employees over the age of 25 with a bachelor’s degree ($25.60 per hour, as shown in Table A.3).

TABLE A.3

ESTIMATED ANNUAL RESPONSE BURDEN AND ANNUAL COST

| Instrument | Number of Respondents | Number of Responses per Respondent | Average Burden Hours per Response | Total Burden Hours | Average Hourly Wage | Total Annual Cost |
| --- | --- | --- | --- | --- | --- | --- |
| Parent Interview | 3,185 | 1.0 | 0.81 | 2,564 | $15.50 | $39,742.00 |
| Child Assessment | 3,245 | 1.0 | 0.75 | 2,434 | n/a | n/a |
| Head Start Teacher Interview | 405 | 1.0 | 0.50 | 203 | $9.05 | $1,837.15 |
| Head Start Teacher Child Report | 405 | 9.0 | 0.17 | 620 | $9.05 | $5,611.00 |
| Program Director Interview | 20 | 1.0 | 0.50 | 10 | $33.15 | $331.50 |
| Center Director Interview | 40 | 1.0 | 0.50 | 20 | $25.60 | $512.00 |
| Education Coordinator Interview | 20 | 1.0 | 0.50 | 10 | $25.60 | $256.00 |
| Kindergarten Teacher Questionnaire | 1,128 | 1.3 | 0.50 | 733 | $25.60 | $18,764.80 |
| Kindergarten Teacher Child Report | 1,128 | 1.3 | 0.17 | 249 | $25.60 | $6,374.40 |
| Estimated Total | | | | 6,843 | | $73,428.85 |
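The totals in Table A.3 can be reproduced with a short sketch. It takes the tabulated total burden hours and hourly wages as given and multiplies them row by row; the variable and function names are hypothetical. The helper `recomputed_hours` shows the product of respondents, responses per respondent, and hours per response, which can differ slightly from the tabulated hours, presumably because the per-response hours in the table are rounded.

```python
from decimal import Decimal

# Rows copied from Table A.3:
# (instrument, respondents, responses per respondent,
#  hours per response, tabulated total hours, hourly wage or None)
TABLE_A3 = [
    ("Parent Interview", 3185, "1.0", "0.81", 2564, "15.50"),
    ("Child Assessment", 3245, "1.0", "0.75", 2434, None),
    ("Head Start Teacher Interview", 405, "1.0", "0.50", 203, "9.05"),
    ("Head Start Teacher Child Report", 405, "9.0", "0.17", 620, "9.05"),
    ("Program Director Interview", 20, "1.0", "0.50", 10, "33.15"),
    ("Center Director Interview", 40, "1.0", "0.50", 20, "25.60"),
    ("Education Coordinator Interview", 20, "1.0", "0.50", 10, "25.60"),
    ("Kindergarten Teacher Questionnaire", 1128, "1.3", "0.50", 733, "25.60"),
    ("Kindergarten Teacher Child Report", 1128, "1.3", "0.17", 249, "25.60"),
]


def recomputed_hours(row):
    """Respondents x responses per respondent x hours per response."""
    _, respondents, responses, hours_each, _, _ = row
    return respondents * Decimal(responses) * Decimal(hours_each)


def row_cost(row):
    """Tabulated total hours x hourly wage; zero where no wage applies."""
    hours, wage = row[4], row[5]
    return Decimal(0) if wage is None else hours * Decimal(wage)


total_hours = sum(row[4] for row in TABLE_A3)
total_cost = sum(row_cost(row) for row in TABLE_A3)

print(total_hours)  # 6843
print(total_cost)   # 73428.85
```

`Decimal` is used instead of floating point so that the dollar totals match the table to the cent.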

A.13. Estimates of Other Total Annual Cost Burden to Respondents and Record Keepers

Not applicable.

A.14. Annualized Cost to Federal Government

The total cost for the three years of data collection is $11,071,006, or $3,690,335.33 per year. These costs include the sampling, data collection, participant tracking, data processing, and data coding.

A.15. Explanations for Program Changes or Adjustments

The changes made to the FACES 2009 data collection reflect an increased interest in studying dual language learners. Additional items added to the parent interview ask about children’s exposure to multiple languages, and the child assessment focuses on language development in both English and Spanish for those who are exposed to both languages. A summary of the proposed changes is included in Appendix C.

FACES 2009 will differ from FACES 2006 in two other ways. First, children who leave Head Start will be assessed and their parents interviewed after leaving the program. In FACES 2006, children who left the program were not included in the follow-up data collections. Second, in order to increase the efficiency of the sample accrual and selection procedures, field enrollment specialists will visit each sampled center three weeks prior to the scheduled date of the fall 2009 data collection. These staff members will work with the OSC and MPR statisticians to select the sample of classrooms and the child sample, and obtain parental consent for the children’s participation in the study.

A.16. Plans for Tabulation and Publication and Project Time Schedule

Analysis Plan

Analytic strategies will be aligned with the Head Start Performance Measures Framework and will be tailored to address each of the study’s research objectives. Specifically, the analyses will aim to (1) describe key characteristics of newly entering children and families served in Head Start, including demographics and children’s developmental progress; (2) describe the characteristics of Head Start programs, teachers, and classrooms serving children; and (3) explore associations between classroom, teacher, and program characteristics, and child and family well-being. Research questions related to key subgroups of interest, such as children with disabilities and dual language learners (DLLs), will also be supported.

Direct child assessments and reports from teachers, parents, and assessors will provide data on children’s skills, abilities and development at single time points, and gains and changes in this development during Head Start and by the end of kindergarten. Analyses focused on children’s families and home environments will draw on data from interviews with parents. Interviews with program staff and structured observations of Head Start classrooms will provide descriptive data about classrooms and programs, such as staff background and qualifications, curricula use and assessment practices, and classroom activities, climate, and instructional practices.

Analyses will employ a variety of methods, including cross-sectional and longitudinal approaches, descriptive statistics (means, percentages), simple tests of differences across subgroups and over time (t-tests, chi-square tests), and multivariate analysis (regression analysis, hierarchical linear modeling). In this descriptive study, many of the research questions can be answered by calculating averages and percentages of children, classrooms, or programs falling into various categories; comparisons of these averages across time or across subgroups; and changes in outcomes over time. More complex analyses of relations among program characteristics and child and family outcomes can be done through hierarchical linear modeling (HLM). Growth-curve models will allow comparison of developmental trajectories across children in different types of programs and with different family characteristics—for example, dual language learners compared to non-dual language learners.

For questions about the current characteristics of Head Start children and families (for example, family characteristics at Head Start entry or children’s knowledge and skills in the fall of their first Head Start year), we will calculate averages and percentages. For questions about the current characteristics of Head Start classrooms, teachers, or programs (for example, average quality of classrooms or current teacher education levels), we will likewise calculate average values and percentages. For all descriptive analyses, we will calculate standard errors that take into account multilevel sampling and clustering at the appropriate level (program, center, classroom, and child). We will use analysis weights that account for the complex multilevel sampling and for nonresponse at each level.
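As an illustration of the weighted descriptive estimates described above, the following is a minimal sketch in Python of a weighted mean with a standard error that accounts for clustering. It is not the study's actual procedure: the function name and inputs are hypothetical, it treats one level of clustering (for example, the program) as the primary sampling unit, and it assumes a single stratum with no finite population correction. A full analysis would rely on survey software that reflects the complete sample design.

```python
from collections import defaultdict
from math import sqrt


def weighted_mean_with_cluster_se(values, weights, clusters):
    """Return (weighted mean, linearization standard error).

    Hypothetical helper: `clusters` holds one primary sampling unit
    label per observation (assumed here to be the program).
    """
    total_weight = sum(weights)
    mean = sum(w * y for w, y in zip(weights, values)) / total_weight

    # Sum the weighted residuals within each cluster (linearized scores).
    cluster_totals = defaultdict(float)
    for y, w, c in zip(values, weights, clusters):
        cluster_totals[c] += w * (y - mean) / total_weight

    n_clusters = len(cluster_totals)
    if n_clusters < 2:
        raise ValueError("need at least two clusters to estimate variance")

    # The cluster totals sum to zero, so their squared sum (scaled by
    # n / (n - 1)) is the between-cluster variance estimate of the mean.
    variance = n_clusters / (n_clusters - 1) * sum(
        t * t for t in cluster_totals.values()
    )
    return mean, sqrt(variance)
```

Because the variance is built from cluster totals rather than individual observations, children from the same program do not count as independent pieces of information, which is the point of adjusting for clustering.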

For questions about changes in children’s outcomes over the Head Start year (two time points), we will calculate the average differences in outcomes from fall to spring of the Head Start year for all children. We will evaluate whether the fall-spring differences are statistically significant by calculating standard errors that take into account the multilevel sampling at the grantee, center, and classroom stages. Outcomes that have been normed on broad populations of preschool-age children (for example, the Woodcock-Johnson III Letter-Word Identification or the Peabody Picture Vocabulary Test, 4th Edition) will be compared with the published national norms to judge how Head Start children have progressed relative to those norms. As in all descriptive studies, particular care will be taken to ensure that the longitudinal results and comparisons are not misconstrued as impacts of the program on child and family outcomes and children’s developmental trajectories. These descriptive analyses will also support comparisons across cohorts and across key subgroups. We will use t-tests and chi-square tests to assess the statistical significance of differences across subgroups.
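The fall-spring comparison and the comparison with published norms can be sketched as follows. This is an illustrative Python example only: the function name is hypothetical, the calculation is unweighted, and it assumes scores are reported on a standard-score scale with a norming-sample mean of 100 and standard deviation of 15. The actual analyses would apply the analysis weights and design-based standard errors described above.

```python
from statistics import mean

# Assumed standard-score scale for a norm-referenced assessment.
NORM_MEAN = 100.0
NORM_SD = 15.0


def gain_summary(fall_scores, spring_scores):
    """Summarize fall-to-spring change for children assessed at both points.

    Hypothetical helper: the two lists are paired, one entry per child.
    """
    if len(fall_scores) != len(spring_scores):
        raise ValueError("fall and spring scores must be paired by child")

    gains = [s - f for f, s in zip(fall_scores, spring_scores)]
    return {
        # Average within-child change over the Head Start year.
        "mean_gain": mean(gains),
        # Distance from the norming-sample mean, in norm SD units.
        "fall_gap_sd": (mean(fall_scores) - NORM_MEAN) / NORM_SD,
        "spring_gap_sd": (mean(spring_scores) - NORM_MEAN) / NORM_SD,
    }
```

A spring gap closer to zero than the fall gap would indicate that children moved toward the norming-sample average over the year. Consistent with the caution above, such a pattern is descriptive and is not an estimate of program impact.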

Finally, questions relating characteristics of the classroom, teacher, or family background to children’s outcomes at the end of Head Start or kindergarten, or to changes during the Head Start year, will be addressed using HLM techniques that take into account the fact that children are nested within classrooms (teachers) that are nested within centers within programs. Models predicting changes in children’s outcomes over the Head Start year or through kindergarten will include appropriate controls and covariates. For example, models will include the child’s fall score on that outcome as one of the independent variables in the regression to control for initial status as a fixed effect. The hierarchical linear models will then compare the children’s trajectories and relate those to differences in classroom processes, program characteristics, or family background.
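One way to write a model of the kind described above is the following random-intercept specification. It is an illustrative sketch, not the specification adopted for the study; the notation is introduced here for exposition. Y is the spring (or kindergarten) outcome for child i in classroom j, center k, and program l; Fall is the child's fall score on the same outcome; X, C, and P are assumed vectors of child and family, classroom and teacher, and program characteristics.

```latex
\begin{aligned}
Y_{ijkl} &= \beta_0 + \beta_1\,\mathrm{Fall}_{ijkl}
          + \boldsymbol{\beta}_2^{\top}\mathbf{X}_{ijkl}
          + \boldsymbol{\beta}_3^{\top}\mathbf{C}_{jkl}
          + \boldsymbol{\beta}_4^{\top}\mathbf{P}_{l} \\
         &\quad + u_{l} + v_{kl} + r_{jkl} + e_{ijkl}, \\
u_{l} &\sim N(0,\sigma_u^2),\quad
v_{kl} \sim N(0,\sigma_v^2),\quad
r_{jkl} \sim N(0,\sigma_r^2),\quad
e_{ijkl} \sim N(0,\sigma_e^2).
\end{aligned}
```

The fixed coefficient on the fall score controls for initial status, and the program, center, and classroom random intercepts (u, v, and r) absorb the correlation among children who share the same setting.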

Another potential approach compares children’s outcomes across FACES cohorts. Such analyses rely on the same variables (outcome variables and family, teacher, and classroom characteristics) being available across cohorts. This approach is potentially powerful if we assume that children entering Head Start in each cohort (1997, 2000, 2003, 2006, and 2009) are the same in their potential to develop in Head Start. This underlying assumption can be tested by examining the distribution of fall scores for each cohort included in the analysis. Most differences in the composition of children in each cohort can be controlled for statistically, using the extensive set of family and child background characteristics collected in each wave of FACES. Changes in programs—for example, the growing levels of teacher education over the five cohorts—can then be related explicitly to changes in children’s growth in Head Start over that period.

Time Schedule and Publications

Five project reports are planned, presenting key findings as data and analyses become available from each wave of data collection. The first report, planned for publication in late 2009, will discuss the sampling plan, the measurement and data collection plan, the data analysis plan, and how the FACES study design supports Head Start’s program performance measurement. The project’s second report will include descriptions of the characteristics of entering children, their families, and programs in the fall of 2009. The third (planned for 2011) and fourth (planned for early 2012) reports will describe children’s progress during the first and second years of Head Start. A final report, planned for 2013, will summarize the findings on children’s progress through kindergarten for the FACES 2009 cohort.

A.17. Display of Expiration Date for OMB Approval

The OMB number and expiration date will be displayed at the top of the cover page or first Web page for each instrument used in the study. For CATI or CAPI instruments, we will offer to read the OMB number and expiration date at the start of the interview.

A.18. Exceptions to Certification for Paperwork Reduction Act Submissions

No exceptions are necessary for this data collection.

References

Administration on Children, Youth, and Families. Charting Our Progress: Development of the Head Start Program Performance Measures. Washington, DC: U.S. Department of Health and Human Services, 1995.

Administration on Children, Youth, and Families. Head Start FACES: Longitudinal Findings on Program Performance, Third Progress Report. Washington, DC: U.S. Department of Health and Human Services, 2001.

Love, John M., Louisa B. Tarullo, Helen Raikes, and Rachel Chazan-Cohen. “Head Start: What Do We Know About Its Effectiveness? What Do We Need to Know?” In Blackwell Handbook of Early Childhood Development, edited by Kathleen McCartney and Deborah Phillips. Malden, MA: Blackwell Publishing, 2006.

3 See “Head Start FACES: Longitudinal Findings on Program Performance, Third Progress Report” (ACYF 2001) for a list of the 24 Program Performance Measures. These “measures” are not specific instruments, but rather domains, objectives, and indicators for measuring performance.

| File Type | application/msword |

| Author | lmalone |

| Last Modified By | mwoolverton |

| File Modified | 2009-06-01 |

| File Created | 2009-06-01 |
